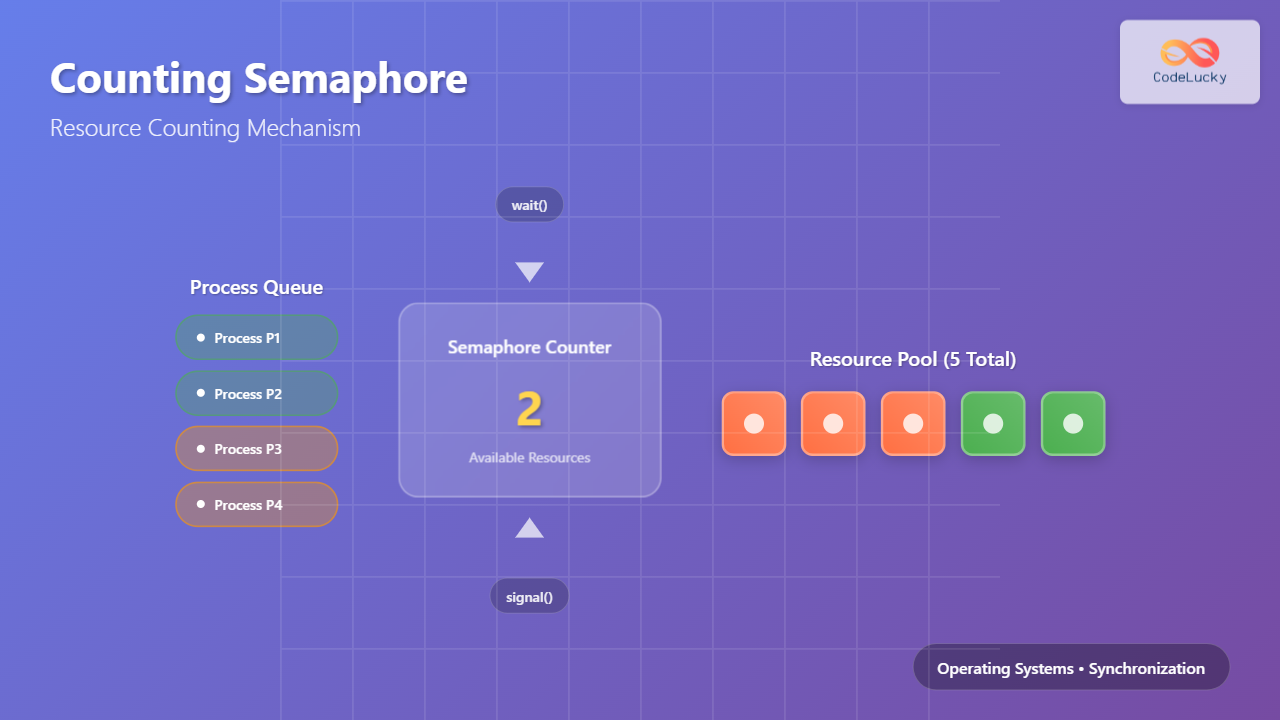

Counting semaphores are fundamental synchronization primitives in operating systems that manage access to a finite number of identical resources. Unlike binary semaphores that allow only one process at a time, counting semaphores can permit multiple processes to access shared resources simultaneously, making them essential for resource management in concurrent programming.

What is a Counting Semaphore?

A counting semaphore is a synchronization mechanism that maintains an integer value representing the number of available resources. This value can range from zero to a predefined maximum, allowing multiple processes to acquire resources concurrently until the limit is reached. When no resources are available, processes must wait until another process releases a resource.

Key Components and Operations

Semaphore Structure

A counting semaphore consists of two main components:

- Counter Value: An integer representing available resources

- Process Queue: A list of processes waiting for resources

Primary Operations

Wait Operation (P or Down)

wait(semaphore S) {

S.value--;

if (S.value < 0) {

// Add calling process to S.queue

block();

}

}

Signal Operation (V or Up)

signal(semaphore S) {

S.value++;

if (S.value <= 0) {

// Remove a process from S.queue

wakeup(process);

}

}

Implementation Example

Let’s examine a practical implementation of a counting semaphore in C:

#include

#include

#include

#include

#define MAX_RESOURCES 3

#define NUM_PROCESSES 5

sem_t resource_semaphore;

void* process_function(void* arg) {

int process_id = *(int*)arg;

printf("Process %d requesting resource...\n", process_id);

// Wait for resource (decrement semaphore)

sem_wait(&resource_semaphore);

printf("Process %d acquired resource\n", process_id);

// Simulate resource usage

sleep(2);

printf("Process %d releasing resource\n", process_id);

// Release resource (increment semaphore)

sem_post(&resource_semaphore);

return NULL;

}

int main() {

pthread_t processes[NUM_PROCESSES];

int process_ids[NUM_PROCESSES];

// Initialize counting semaphore with 3 resources

sem_init(&resource_semaphore, 0, MAX_RESOURCES);

// Create processes

for (int i = 0; i < NUM_PROCESSES; i++) {

process_ids[i] = i + 1;

pthread_create(&processes[i], NULL, process_function, &process_ids[i]);

}

// Wait for all processes to complete

for (int i = 0; i < NUM_PROCESSES; i++) {

pthread_join(processes[i], NULL);

}

sem_destroy(&resource_semaphore);

return 0;

}

Expected Output:

Process 1 requesting resource...

Process 2 requesting resource...

Process 3 requesting resource...

Process 4 requesting resource...

Process 5 requesting resource...

Process 1 acquired resource

Process 2 acquired resource

Process 3 acquired resource

Process 1 releasing resource

Process 4 acquired resource

Process 2 releasing resource

Process 5 acquired resource

Process 3 releasing resource

Process 4 releasing resource

Process 5 releasing resource

Resource Allocation Visualization

Common Use Cases

Database Connection Pool

import threading

import time

import random

class DatabaseConnectionPool:

def __init__(self, max_connections):

self.semaphore = threading.Semaphore(max_connections)

self.connections = []

def get_connection(self):

self.semaphore.acquire() # Wait for available connection

print(f"Thread {threading.current_thread().name} acquired connection")

return "Database Connection"

def release_connection(self, connection):

print(f"Thread {threading.current_thread().name} released connection")

self.semaphore.release() # Signal connection availability

def worker(pool, worker_id):

connection = pool.get_connection()

# Simulate database operation

time.sleep(random.uniform(1, 3))

pool.release_connection(connection)

# Create connection pool with 2 connections

pool = DatabaseConnectionPool(2)

# Create 5 worker threads

threads = []

for i in range(5):

t = threading.Thread(target=worker, args=(pool, i), name=f"Worker-{i}")

threads.append(t)

t.start()

for t in threads:

t.join()

Print Spooler Management

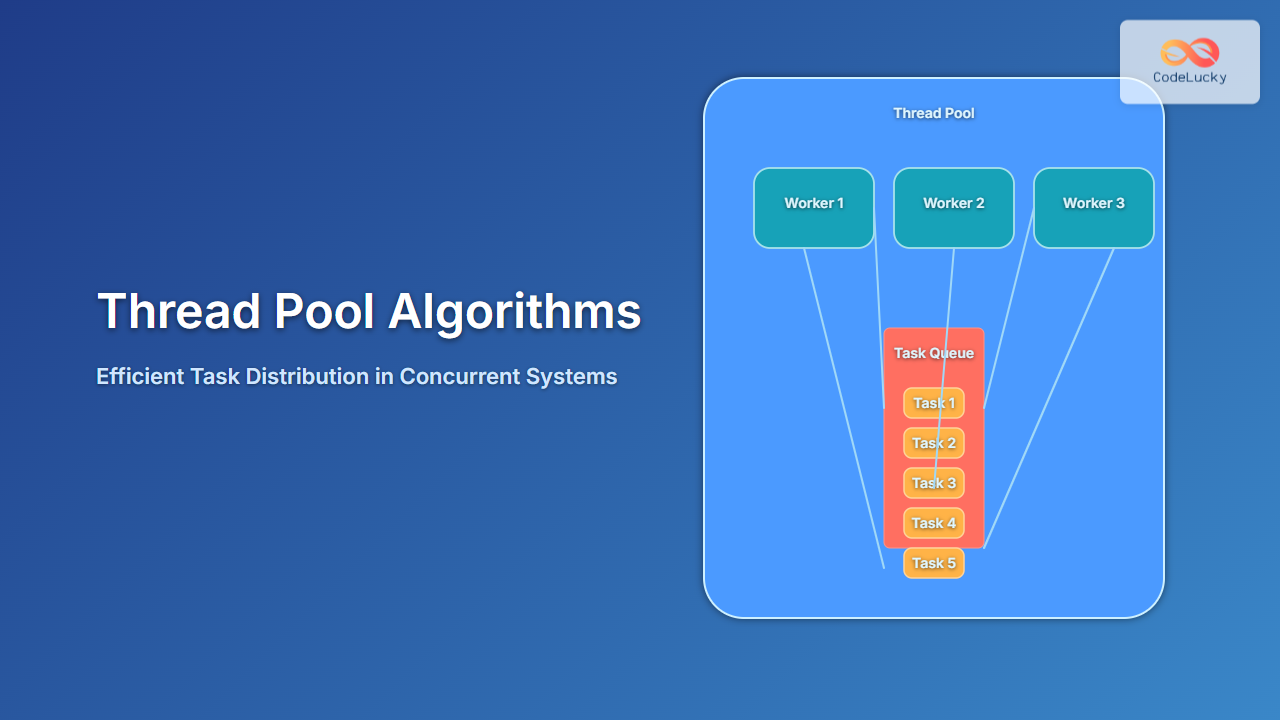

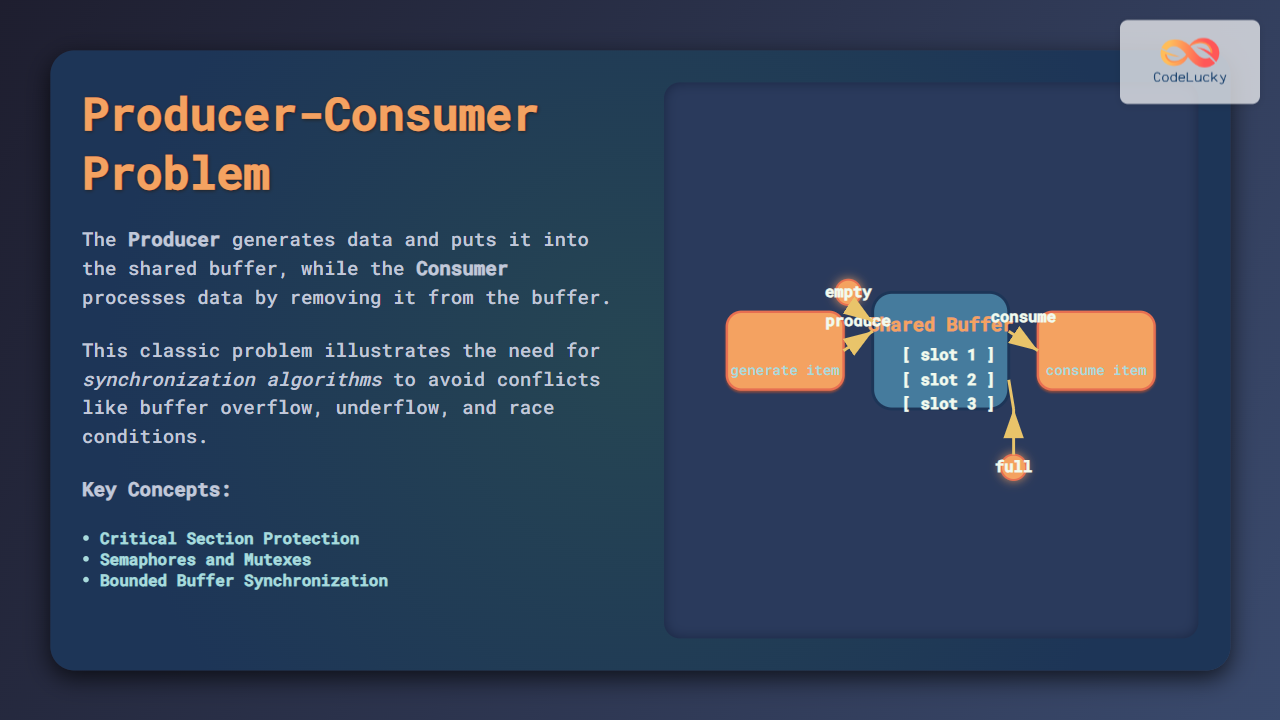

Producer-Consumer with Buffer Limit

#include

#include

#include

#include

#define BUFFER_SIZE 5

int buffer[BUFFER_SIZE];

int in = 0, out = 0;

sem_t empty_slots; // Count of empty buffer slots

sem_t full_slots; // Count of filled buffer slots

pthread_mutex_t buffer_mutex;

void* producer(void* arg) {

int producer_id = *(int*)arg;

for (int i = 0; i < 3; i++) {

int item = producer_id * 10 + i;

// Wait for empty slot

sem_wait(∅_slots);

// Lock buffer access

pthread_mutex_lock(&buffer_mutex);

buffer[in] = item;

printf("Producer %d produced item %d at position %d\n",

producer_id, item, in);

in = (in + 1) % BUFFER_SIZE;

pthread_mutex_unlock(&buffer_mutex);

// Signal filled slot

sem_post(&full_slots);

sleep(1);

}

return NULL;

}

void* consumer(void* arg) {

int consumer_id = *(int*)arg;

for (int i = 0; i < 2; i++) {

// Wait for filled slot

sem_wait(&full_slots);

// Lock buffer access

pthread_mutex_lock(&buffer_mutex);

int item = buffer[out];

printf("Consumer %d consumed item %d from position %d\n",

consumer_id, item, out);

out = (out + 1) % BUFFER_SIZE;

pthread_mutex_unlock(&buffer_mutex);

// Signal empty slot

sem_post(∅_slots);

sleep(2);

}

return NULL;

}

int main() {

pthread_t producers[2], consumers[3];

int producer_ids[2] = {1, 2};

int consumer_ids[3] = {1, 2, 3};

// Initialize semaphores

sem_init(∅_slots, 0, BUFFER_SIZE); // All slots empty initially

sem_init(&full_slots, 0, 0); // No slots filled initially

pthread_mutex_init(&buffer_mutex, NULL);

// Create producers

for (int i = 0; i < 2; i++) {

pthread_create(&producers[i], NULL, producer, &producer_ids[i]);

}

// Create consumers

for (int i = 0; i < 3; i++) {

pthread_create(&consumers[i], NULL, consumer, &consumer_ids[i]);

}

// Wait for completion

for (int i = 0; i < 2; i++) {

pthread_join(producers[i], NULL);

}

for (int i = 0; i < 3; i++) {

pthread_join(consumers[i], NULL);

}

// Cleanup

sem_destroy(∅_slots);

sem_destroy(&full_slots);

pthread_mutex_destroy(&buffer_mutex);

return 0;

}

Counting Semaphore vs Binary Semaphore

| Aspect | Binary Semaphore | Counting Semaphore |

|---|---|---|

| Value Range | 0 or 1 | 0 to N |

| Purpose | Mutual Exclusion | Resource Counting |

| Concurrent Access | One process only | Multiple processes (up to N) |

| Use Cases | Critical sections | Resource pools, buffers |

Implementation Considerations

Atomic Operations

Semaphore operations must be atomic to prevent race conditions:

// Non-atomic implementation (WRONG)

void unsafe_wait(semaphore* s) {

s->value--; // Not atomic

if (s->value < 0) { // Race condition possible

block_process();

}

}

// Atomic implementation (CORRECT)

void safe_wait(semaphore* s) {

disable_interrupts();

s->value--;

if (s->value < 0) {

add_to_queue(current_process);

enable_interrupts();

block_process();

} else {

enable_interrupts();

}

}

Starvation Prevention

To prevent starvation, implement fair queuing mechanisms:

- FIFO Queue: First process to wait gets first access

- Priority Queue: Higher priority processes served first

- Aging: Increase priority of waiting processes over time

Best Practices and Common Pitfalls

Best Practices

- Initialize Properly: Set initial value equal to available resources

- Balanced Operations: Every wait() should have corresponding signal()

- Error Handling: Check return values of semaphore operations

- Resource Cleanup: Always destroy semaphores when done

Common Pitfalls

Deadlock Example

// DEADLOCK SCENARIO

sem_t resource1, resource2;

void process_a() {

sem_wait(&resource1); // Acquire resource1

sem_wait(&resource2); // Wait for resource2 (may deadlock)

// Critical section

sem_post(&resource2);

sem_post(&resource1);

}

void process_b() {

sem_wait(&resource2); // Acquire resource2

sem_wait(&resource1); // Wait for resource1 (may deadlock)

// Critical section

sem_post(&resource1);

sem_post(&resource2);

}

Solution: Ordered Resource Allocation

// DEADLOCK PREVENTION

void process_a() {

sem_wait(&resource1); // Always acquire resource1 first

sem_wait(&resource2); // Then resource2

// Critical section

sem_post(&resource2); // Release in reverse order

sem_post(&resource1);

}

void process_b() {

sem_wait(&resource1); // Same order as process_a

sem_wait(&resource2);

// Critical section

sem_post(&resource2);

sem_post(&resource1);

}

Performance Analysis

Time Complexity

- Wait Operation: O(1) – Constant time for counter decrement and queue operations

- Signal Operation: O(1) – Constant time for counter increment and process wakeup

Space Complexity

- Semaphore Structure: O(1) – Fixed size regardless of process count

- Process Queue: O(n) – Where n is the number of waiting processes

Advanced Applications

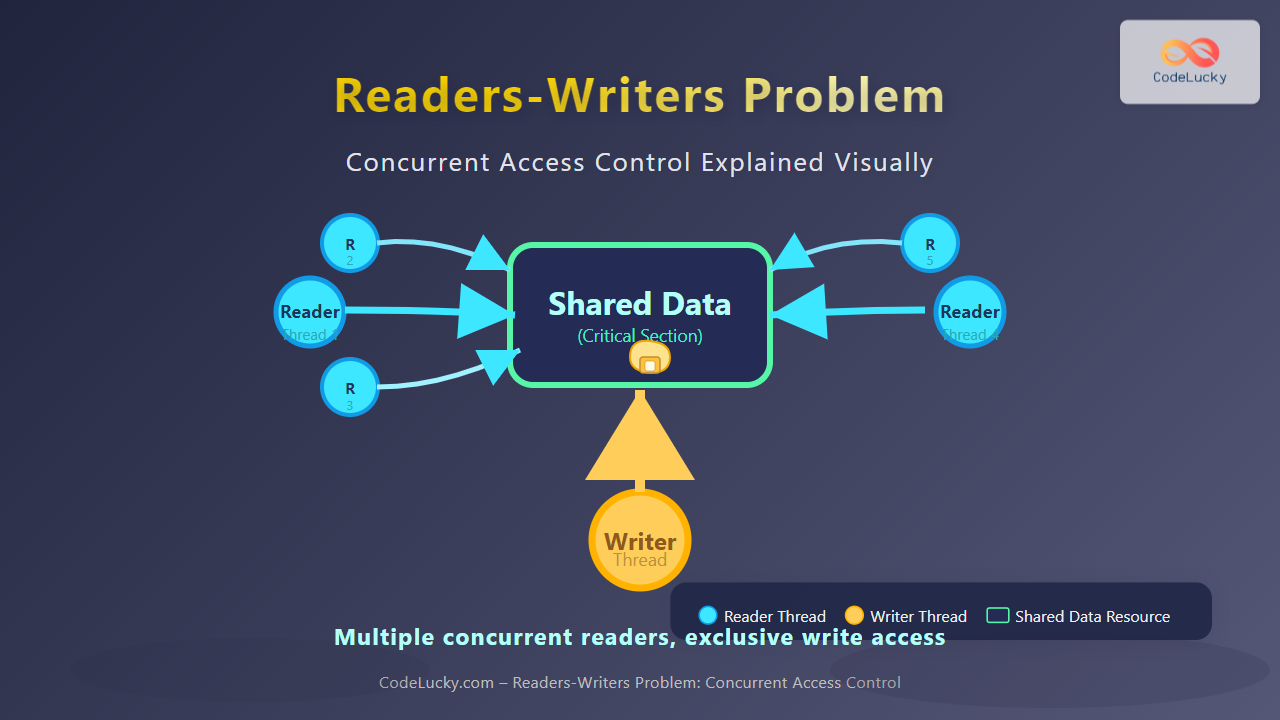

Read-Write Lock Implementation

typedef struct {

sem_t readers_sem; // Controls reader access

sem_t writers_sem; // Controls writer access

int reader_count; // Number of active readers

pthread_mutex_t count_mutex; // Protects reader_count

} rwlock_t;

void rwlock_init(rwlock_t* lock) {

sem_init(&lock->readers_sem, 0, 1);

sem_init(&lock->writers_sem, 0, 1);

lock->reader_count = 0;

pthread_mutex_init(&lock->count_mutex, NULL);

}

void read_lock(rwlock_t* lock) {

pthread_mutex_lock(&lock->count_mutex);

lock->reader_count++;

if (lock->reader_count == 1) {

sem_wait(&lock->writers_sem); // First reader blocks writers

}

pthread_mutex_unlock(&lock->count_mutex);

}

void read_unlock(rwlock_t* lock) {

pthread_mutex_lock(&lock->count_mutex);

lock->reader_count--;

if (lock->reader_count == 0) {

sem_post(&lock->writers_sem); // Last reader unblocks writers

}

pthread_mutex_unlock(&lock->count_mutex);

}

void write_lock(rwlock_t* lock) {

sem_wait(&lock->writers_sem); // Exclusive access for writers

}

void write_unlock(rwlock_t* lock) {

sem_post(&lock->writers_sem); // Release exclusive access

}

Conclusion

Counting semaphores are powerful synchronization primitives that enable efficient resource management in concurrent systems. They provide a flexible mechanism for controlling access to multiple identical resources while preventing race conditions and ensuring proper synchronization between processes.

Key takeaways include understanding the fundamental operations, implementing proper error handling, avoiding common pitfalls like deadlocks, and choosing appropriate use cases. Whether managing database connections, printer queues, or buffer pools, counting semaphores provide the foundation for robust concurrent programming in operating systems.

Mastering counting semaphores is essential for system programmers working with multi-threaded applications, operating system kernels, and distributed systems where resource contention and synchronization are critical concerns.