What are Threads in Operating Systems?

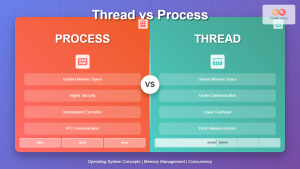

A thread is the smallest unit of execution that the operating system can schedule independently within a process. Unlike separate processes, the threads of one process share its address space and resources, which is why they are often called lightweight processes. Threads enable concurrent execution within a single program, and on multicore hardware several threads can run in parallel, which can improve throughput and responsiveness.

Key Characteristics of Threads

Shared Resources

Threads within the same process share several resources:

- Memory space: Code, data, and heap segments

- File descriptors: Open files and I/O streams

- Signal handlers: Process-wide signal handling

- Process ID: All threads share the same PID

- Working directory: Current directory context

Private Resources

Each thread maintains its own:

- Stack: Local variables and function calls

- Program counter: Current instruction pointer

- Register set: CPU register values

- Thread ID: Unique identifier within the process
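The split between shared and private resources can be observed directly. The following is a minimal sketch in Python (chosen only for brevity; `shared_log`, `worker`, and `all_started` are illustrative names, not part of any standard API). Every thread appends to the same heap-allocated list and reports the same process ID, while each thread keeps its own local variable and its own thread identifier.

```python
import os
import threading

shared_log = []                        # heap object, visible to every thread
log_lock = threading.Lock()            # protects shared_log against concurrent appends
all_started = threading.Barrier(3)     # keeps all three threads alive at the same time

def worker(n):
    local_total = sum(range(n))        # local variable: lives on this thread's own stack
    with log_lock:
        shared_log.append((os.getpid(), threading.get_ident(), local_total))
    all_started.wait()                 # thread IDs are only unique among live threads

threads = [threading.Thread(target=worker, args=(n,)) for n in (10, 100, 1000)]
for t in threads:
    t.start()
for t in threads:
    t.join()

pids = {pid for pid, _, _ in shared_log}
thread_ids = {tid for _, tid, _ in shared_log}
totals = sorted(total for _, _, total in shared_log)
print("distinct PIDs:", len(pids))
print("distinct thread IDs:", len(thread_ids))
print("per-thread totals:", totals)
```

The barrier is there because a thread identifier may be reused once a thread has exited; holding all three threads alive together makes the uniqueness visible.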

Types of Threads

User-Level Threads (ULT)

User-level threads are managed entirely by user-space thread libraries without kernel intervention. The kernel is unaware of their existence and treats the entire process as a single execution unit.

Advantages:

- Fast thread creation and switching

- No system calls required for thread operations

- Portable across different operating systems

- Custom scheduling algorithms possible

Disadvantages:

- Blocking system calls block entire process

- No true parallelism on multiprocessor systems

- Complex signal handling
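To make the idea of user-space scheduling concrete, here is a small sketch that uses Python generators as stand-ins for user-level threads. It is an illustration under simplifying assumptions, not how any particular thread library is implemented; `task` and `run_round_robin` are hypothetical names. The whole scheduler runs inside one kernel thread, so the kernel never sees the individual tasks.

```python
from collections import deque

def task(name, steps, trace):
    for i in range(steps):
        trace.append(f"{name}:{i}")
        yield                      # voluntarily hand control back to the scheduler

def run_round_robin(tasks):
    ready = deque(tasks)           # user-space ready queue
    while ready:
        current = ready.popleft()
        try:
            next(current)          # "context switch" into the task, no system call
            ready.append(current)  # still has work: back of the queue
        except StopIteration:
            pass                   # task finished; drop it

trace = []
run_round_robin([task("A", 2, trace), task("B", 3, trace)])
print(trace)  # ['A:0', 'B:0', 'A:1', 'B:1', 'B:2']
```

If any task called a blocking function such as `time.sleep` instead of yielding, every other task would stall with it, which is the first disadvantage listed above.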

Kernel-Level Threads (KLT)

Kernel-level threads are managed directly by the operating system kernel. Each thread has its own thread control block (TCB) in kernel space.

Advantages:

- True parallelism on multiprocessor systems

- A blocking system call suspends only the calling thread, not the whole process

- Better fault tolerance

- Kernel-level scheduling

Disadvantages:

- Higher overhead for thread operations

- System calls required for thread management

- Platform-dependent implementation
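The effect of kernel-managed threads on blocking calls can be checked with a short timing experiment. This sketch assumes CPython on a mainstream OS, where each `threading.Thread` is backed by a kernel thread; `blocking_io` is an illustrative name and `time.sleep` merely stands in for a blocking system call such as `read()`.

```python
import threading
import time

def blocking_io(delay):
    time.sleep(delay)   # blocks only the calling thread

start = time.perf_counter()
threads = [threading.Thread(target=blocking_io, args=(0.2,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
elapsed = time.perf_counter() - start

print(f"4 blocking calls of 0.2 s took {elapsed:.2f} s in total")
```

Because the four waits overlap, the total is expected to be close to 0.2 s rather than the 0.8 s a sequential run would need.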

Hybrid Threading Models

Some systems and language runtimes implement hybrid (M:N) models, which map many user-level threads onto a smaller pool of kernel-level threads:

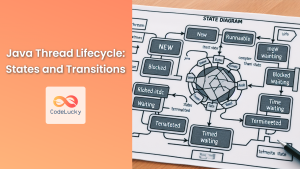

Thread Lifecycle

Threads go through various states during their execution:

Thread States Explained

- New: Thread object created but not yet started

- Ready: Thread ready to run, waiting for CPU assignment

- Running: Thread currently executing on CPU

- Blocked: Thread waiting for I/O or synchronization

- Terminated: Thread execution completed
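The states above can be partly observed from user code. The sketch below uses Python's `threading` module, which exposes only a coarse view (not started, alive, finished), so Ready, Running, and Blocked are grouped together; `describe` and `gate` are illustrative names.

```python
import threading

def describe(thread):
    """Map the little that Python exposes onto the lifecycle states."""
    if thread.ident is None:
        return "New"                    # created, start() not yet called
    if thread.is_alive():
        return "Ready/Running/Blocked"  # started; the finer state is up to the scheduler
    return "Terminated"

gate = threading.Event()
t = threading.Thread(target=gate.wait)  # the thread blocks until the event is set

observed = [describe(t)]
t.start()
observed.append(describe(t))            # alive, and in fact blocked on gate.wait()
gate.set()                              # unblock: the thread becomes ready, runs, exits
t.join()
observed.append(describe(t))

print(observed)  # ['New', 'Ready/Running/Blocked', 'Terminated']
```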

Multithreading Implementation

POSIX Threads (Pthreads) Example

    // Build with: cc -pthread example.c
    #include <pthread.h>
    #include <stdio.h>
    #include <stdlib.h>
    #include <unistd.h>  // sleep()

    // Entry point for each worker; arg points to this thread's ID.
    void* worker_thread(void* arg) {
        int thread_id = *(int*)arg;
        printf("Thread %d: Starting execution\n", thread_id);

        // Simulate work
        for (int i = 0; i < 5; i++) {
            printf("Thread %d: Working... step %d\n", thread_id, i + 1);
            sleep(1);
        }

        printf("Thread %d: Finished\n", thread_id);
        return NULL;
    }

    int main() {
        pthread_t threads[3];
        int thread_ids[3] = {1, 2, 3};  // must outlive the threads that read them

        // Create threads
        for (int i = 0; i < 3; i++) {
            if (pthread_create(&threads[i], NULL, worker_thread, &thread_ids[i]) != 0) {
                fprintf(stderr, "Error creating thread %d\n", i + 1);
                return 1;
            }
        }

        // Wait for all threads to complete
        for (int i = 0; i < 3; i++) {
            pthread_join(threads[i], NULL);
        }

        printf("All threads completed\n");
        return 0;
    }

Expected Output (the order of lines from different threads may vary between runs):

    Thread 1: Starting execution
    Thread 2: Starting execution
    Thread 3: Starting execution
    Thread 1: Working... step 1
    Thread 2: Working... step 1
    Thread 3: Working... step 1
    Thread 1: Working... step 2
    Thread 2: Working... step 2
    Thread 3: Working... step 2
    ...
    Thread 1: Finished
    Thread 2: Finished
    Thread 3: Finished
    All threads completed

Java Threading Example

    class WorkerThread extends Thread {
        private int threadId;

        public WorkerThread(int id) {
            this.threadId = id;
        }

        @Override
        public void run() {
            System.out.println("Thread " + threadId + ": Starting execution");

            for (int i = 0; i < 5; i++) {
                System.out.println("Thread " + threadId + ": Working... step " + (i + 1));
                try {
                    Thread.sleep(1000);
                } catch (InterruptedException e) {
                    System.err.println("Thread " + threadId + " interrupted");
                    return;
                }
            }

            System.out.println("Thread " + threadId + ": Finished");
        }
    }

    public class MultithreadingExample {
        public static void main(String[] args) {
            WorkerThread[] threads = new WorkerThread[3];

            // Create and start threads
            for (int i = 0; i < 3; i++) {
                threads[i] = new WorkerThread(i + 1);
                threads[i].start();
            }

            // Wait for all threads to complete
            for (WorkerThread thread : threads) {
                try {
                    thread.join();
                } catch (InterruptedException e) {
                    System.err.println("Main thread interrupted");
                }
            }

            System.out.println("All threads completed");
        }
    }

Thread Synchronization

When multiple threads access shared resources, synchronization mechanisms prevent race conditions and ensure data consistency:

Common Synchronization Primitives

- Mutex (Mutual Exclusion): Binary lock for exclusive access

- Semaphore: Counter-based synchronization primitive

- Condition Variables: Signal-based thread coordination

- Read-Write Locks: Allow concurrent reads, exclusive writes

- Spin Locks: Busy-waiting synchronization mechanism
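As an illustration of two of these primitives working together, the following Python sketch uses a counting semaphore to cap how many threads may hold a limited resource at once, and a mutex to protect the bookkeeping counters. The names `slots`, `use_resource`, and `MAX_CONCURRENT` are assumptions made for this example.

```python
import threading
import time

MAX_CONCURRENT = 2
slots = threading.Semaphore(MAX_CONCURRENT)  # counter starts at 2
state_lock = threading.Lock()                # mutex guarding the counters below
active = 0
peak = 0

def use_resource():
    global active, peak
    with slots:                  # decrement; blocks while the counter is 0
        with state_lock:
            active += 1
            peak = max(peak, active)
        time.sleep(0.05)         # simulate holding the limited resource
        with state_lock:
            active -= 1
    # leaving the outer with-block increments the counter and wakes a waiter

threads = [threading.Thread(target=use_resource) for _ in range(6)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print("peak concurrent holders:", peak)
```

Six threads compete, yet the recorded peak never exceeds the semaphore's initial count.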

Mutex Example in C

    #include <pthread.h>
    #include <stdio.h>

    int shared_counter = 0;
    pthread_mutex_t counter_mutex = PTHREAD_MUTEX_INITIALIZER;

    // Each thread adds 100000 to the shared counter, one locked increment at a time.
    void* increment_counter(void* arg) {
        for (int i = 0; i < 100000; i++) {
            pthread_mutex_lock(&counter_mutex);
            shared_counter++;
            pthread_mutex_unlock(&counter_mutex);
        }
        return NULL;
    }

    int main() {
        pthread_t threads[2];

        // Create threads
        pthread_create(&threads[0], NULL, increment_counter, NULL);
        pthread_create(&threads[1], NULL, increment_counter, NULL);

        // Wait for completion
        pthread_join(threads[0], NULL);
        pthread_join(threads[1], NULL);

        printf("Final counter value: %d\n", shared_counter);
        printf("Expected value: 200000\n");
        return 0;
    }

Thread Scheduling

Thread schedulers determine which thread runs on available CPU cores. Common scheduling algorithms include:

Scheduling Algorithms

- Round Robin: Time-sliced execution with fixed quantum

- Priority-based: Higher priority threads execute first

- Multilevel Feedback Queue: Dynamic priority adjustment

- Completely Fair Scheduler (CFS): Linux’s default scheduler from kernel 2.6.23 until 6.6, when EEVDF replaced it
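Round Robin is the easiest of these policies to trace by hand. The sketch below is a simplified simulation, assuming all threads arrive at time 0 and ignoring context-switch cost; `round_robin` and the burst times are made up for illustration.

```python
from collections import deque

def round_robin(burst_times, quantum):
    """Return {name: completion_time} for jobs that all arrive at time 0."""
    ready = deque(burst_times.items())
    clock = 0
    finished = {}
    while ready:
        name, remaining = ready.popleft()
        run = min(quantum, remaining)   # run for one quantum or until done
        clock += run
        if remaining > run:
            ready.append((name, remaining - run))  # preempted: back of the queue
        else:
            finished[name] = clock
    return finished

completion = round_robin({"T1": 5, "T2": 3, "T3": 1}, quantum=2)
print(completion)  # {'T3': 5, 'T2': 8, 'T1': 9}
```

The shortest job finishes first even though it was queued last, which shows how time slicing keeps short tasks from waiting behind long ones.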

Advantages and Disadvantages of Threading

Advantages

- Improved Performance: Concurrent execution and parallelism

- Resource Sharing: Efficient memory and resource utilization

- Responsiveness: Better user interface responsiveness

- Scalability: Better utilization of multiprocessor systems

- Communication: Fast inter-thread communication

Disadvantages

- Complexity: Increased programming complexity

- Synchronization Issues: Race conditions and deadlocks

- Debugging Difficulty: Non-deterministic behavior

- Context Switching: Overhead from thread switching

- Memory Overhead: Additional memory for thread stacks

Threading Models in Different Operating Systems

Linux Threading

Linux implements threads using the clone() system call and the Native POSIX Thread Library (NPTL). Each thread has its own task_struct in the kernel.

    # View threads of a process
    ps -eLf | grep process_name

    # Monitor thread activity
    top -H -p PID

Windows Threading

Windows provides comprehensive threading support through the Win32 API and the .NET threading classes.

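    // Windows thread creation (requires <windows.h>)
    DWORD WINAPI ThreadFunction(LPVOID lpParam);  // thread entry point, defined elsewhere

    DWORD threadId;
    HANDLE hThread = CreateThread(
        NULL,            // Security attributes (default)
        0,               // Stack size (0 = default)
        ThreadFunction,  // Thread function
        NULL,            // Parameter passed to the thread function
        0,               // Creation flags (0 = run immediately)
        &threadId        // Receives the thread ID
    );

macOS Threading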

macOS supports POSIX threads and provides Grand Central Dispatch (GCD) for concurrent programming.

    // GCD example
    dispatch_queue_t queue = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0);

    dispatch_async(queue, ^{
        // Background work
        printf("Background thread execution\n");
    });

Best Practices for Multithreading

Design Guidelines

- Minimize Shared State: Reduce shared data between threads

- Use Thread-Safe Libraries: Employ reentrant functions

- Avoid Deadlocks: Consistent lock ordering

- Handle Exceptions: Proper error handling in threads

- Resource Cleanup: Ensure proper resource deallocation
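Consistent lock ordering is the guideline that most benefits from a concrete example. In this Python sketch, two threads transfer money in opposite directions between the same two accounts; acquiring the locks in a fixed order (lowest `id` first) means neither thread can hold one lock while waiting for the other in reverse. `Account`, `transfer`, and `shuttle` are hypothetical names for illustration.

```python
import threading

class Account:
    _next_id = 0

    def __init__(self, balance):
        self.id = Account._next_id   # fixed rank used to order lock acquisition
        Account._next_id += 1
        self.balance = balance
        self.lock = threading.Lock()

def transfer(src, dst, amount):
    # Always lock the lower-id account first, whatever the transfer direction.
    first, second = sorted((src, dst), key=lambda acct: acct.id)
    with first.lock, second.lock:
        src.balance -= amount
        dst.balance += amount

def shuttle(src, dst):
    for _ in range(10_000):
        transfer(src, dst, 1)

a, b = Account(1000), Account(1000)
t1 = threading.Thread(target=shuttle, args=(a, b))
t2 = threading.Thread(target=shuttle, args=(b, a))   # opposite direction
t1.start()
t2.start()
t1.join()
t2.join()

print(a.balance, b.balance)  # 1000 1000
```

Had `transfer` locked `src` before `dst` unconditionally, the two threads could each grab one lock and wait forever for the other.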

Performance Optimization

- Thread Pool Usage: Reuse threads instead of creating new ones

- Load Balancing: Distribute work evenly across threads

- Cache Considerations: Minimize false sharing

- NUMA Awareness: Consider memory locality
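Thread pool usage can be sketched with Python's standard `concurrent.futures` module. Twenty small jobs are served by at most four reusable worker threads instead of twenty newly created ones; `handle` is an illustrative stand-in for real work.

```python
from concurrent.futures import ThreadPoolExecutor
import threading

def handle(request_id):
    # Return the result together with the name of the pool thread that ran it.
    return request_id * request_id, threading.current_thread().name

with ThreadPoolExecutor(max_workers=4) as pool:
    outcomes = list(pool.map(handle, range(20)))  # results come back in input order

squares = [value for value, _ in outcomes]
workers_used = {name for _, name in outcomes}

print(squares[:5], "ran on", len(workers_used), "pool thread(s)")
```

The pool also gives a natural place to bound concurrency: `max_workers` caps the number of threads regardless of how many jobs are submitted.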

Modern Threading Concepts

Green Threads

Green threads are lightweight threads scheduled by a language runtime rather than by the OS kernel. Go's goroutines and Erlang's processes are well-known examples of this approach.

Async/Await Pattern

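Async/await is an asynchronous programming model in which tasks cooperatively give up control at each await point. It provides thread-like concurrency without explicit thread management, and in many runtimes all tasks are multiplexed onto a single thread or a small pool.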

    # Python async/await example
    import asyncio

    async def worker_coroutine(worker_id):
        print(f"Worker {worker_id}: Starting")
        await asyncio.sleep(1)  # Non-blocking sleep
        print(f"Worker {worker_id}: Finished")

    async def main():
        tasks = [worker_coroutine(i) for i in range(3)]
        await asyncio.gather(*tasks)

    asyncio.run(main())

Conclusion

Threads represent a fundamental concept in modern operating systems, enabling efficient concurrent execution within processes. Understanding thread types, lifecycle, synchronization, and best practices is crucial for developing efficient multithreaded applications. While threading introduces complexity, proper implementation can significantly improve application performance and user experience.

As computing systems continue evolving toward greater parallelism, mastering threading concepts becomes increasingly important for system programmers and application developers. Whether implementing low-level system software or high-level applications, threads provide the foundation for concurrent programming in modern operating systems.
