A/B testing has revolutionized how modern product teams make decisions, transforming gut feelings into data-backed insights. This systematic approach to experimentation allows teams to compare two versions of a product feature, webpage, or user interface to determine which performs better based on specific metrics.

In today’s competitive digital landscape, companies that embrace A/B testing can see measurable improvements in conversion rates, user engagement, and overall product performance. Organizations like Google, Amazon, and Netflix run thousands of A/B tests annually, using data to guide product decisions ranging from minor UI tweaks to major feature launches.

Understanding A/B Testing Fundamentals

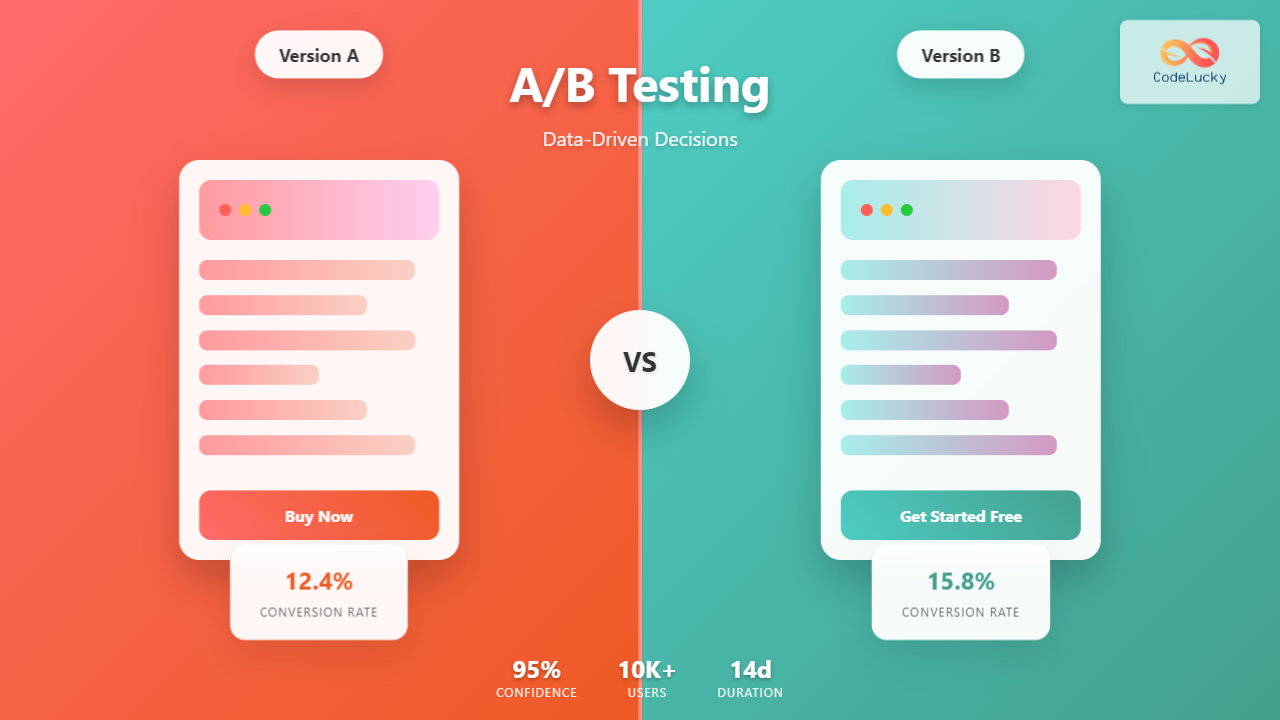

A/B testing, also known as split testing, involves dividing your audience into two groups and showing each group a different version of your product or feature. Version A (the control) represents your current implementation, while Version B (the variant) contains the changes you want to test.

The core principle lies in statistical significance: checking that an observed difference between versions is unlikely to be explained by random chance alone. Combined with random assignment, this approach reduces bias and provides quantitative evidence for decision-making, making it an essential tool in agile development environments where rapid iteration and continuous improvement are paramount.

Key Components of Effective A/B Testing

Successful A/B testing requires several critical elements working together. Your hypothesis must be clear and measurable, defining exactly what you expect to change and why. The test population should be large enough to detect the effect you care about, which often means thousands of users per variant or more, depending on your baseline conversion rate and expected effect size.

Random assignment guards against biased results by distributing users between control and variant groups without any systematic differences. Duration planning involves running tests long enough to cover temporal variations such as weekday and weekend behavior, typically at least one full weekly cycle, while avoiding periods when external factors such as holidays or marketing campaigns might skew results.
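One common way to implement random assignment is to hash a stable user identifier, so the same user always lands in the same group. The following is a minimal sketch of that idea; the function name `assign_variant`, the experiment name, and the user ID format are hypothetical choices for illustration, not part of any specific platform.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variant_share: float = 0.5) -> str:
    """Deterministically map a user to 'control' or 'variant'.

    Hypothetical helper: salting the hash with the experiment name keeps
    assignments in one experiment independent of assignments in another.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Interpret the first 8 hex digits as a 32-bit integer scaled to [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "variant" if bucket < variant_share else "control"


if __name__ == "__main__":
    counts = {"control": 0, "variant": 0}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}", "checkout-button")] += 1
    print(counts)  # the two groups should be close to an even split
```

Because the assignment depends only on the user ID and the experiment name, it needs no stored state and stays stable across sessions, as long as the identifier itself is stable.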

Setting Up Your A/B Testing Framework

Building a robust A/B testing framework starts with selecting the right tools and establishing clear processes. Popular platforms like Optimizely and VWO provide user-friendly interfaces for creating and managing experiments (Google Optimize, formerly a common choice, was discontinued by Google in September 2023), while custom solutions offer greater flexibility for complex testing scenarios.

Your testing infrastructure should integrate seamlessly with existing analytics tools, enabling comprehensive data collection and analysis. Consider factors like page load speed impact, mobile compatibility, and cross-browser functionality when implementing testing scripts.

Defining Success Metrics

Primary metrics directly measure your main objective, such as conversion rate, click-through rate, or revenue per visitor. Secondary metrics provide additional context, helping you understand broader impacts of your changes. For example, while testing a checkout button color might focus on conversion rate as the primary metric, you’d also monitor metrics like time to purchase and cart abandonment rate.

Guardrail metrics protect against negative side effects by monitoring key business indicators that shouldn’t be adversely affected. These might include overall site performance, user satisfaction scores, or long-term retention rates.

Statistical Principles in A/B Testing

Understanding statistical concepts is crucial for interpreting A/B test results accurately. The p-value is the probability of observing a difference at least as large as the one measured if there were no true difference between versions. A result is conventionally called statistically significant when the p-value falls below a preset threshold, typically 0.05 (often described as a 95% confidence level). However, statistical significance doesn’t guarantee practical significance: a statistically significant 0.1% improvement might not justify implementation costs.
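For conversion-style metrics, one standard way to obtain a p-value is a two-proportion z-test. The sketch below uses only the Python standard library and assumes a two-sided test with a pooled standard error; the function name and the example counts are made up for illustration.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; no variation in the data")
    z = (p_b - p_a) / se
    # Two-sided tail probability of a standard normal: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Illustrative counts: 200/2000 conversions in control, 260/2000 in variant.
    z, p = two_proportion_z_test(200, 2000, 260, 2000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

The normal approximation used here is reasonable for large samples; for very small counts an exact test would be a safer choice.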

Effect size measures the magnitude of difference between variants, helping you assess practical importance. Confidence intervals provide a range of plausible values for the true effect, offering more nuanced insights than simple point estimates.
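As a rough illustration of effect size and its confidence interval, the sketch below computes the absolute difference in conversion rates together with a normal-approximation (Wald) interval. The function name and the example counts are assumptions for illustration only.

```python
import math
from statistics import NormalDist


def diff_confidence_interval(conv_a: int, n_a: int, conv_b: int, n_b: int,
                             confidence: float = 0.95):
    """Return (low, high) for the difference in conversion rates, B minus A."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    diff = p_b - p_a
    # Unpooled standard error of the difference between two proportions.
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    # Critical value, about 1.96 for a 95% interval.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return diff - z * se, diff + z * se


if __name__ == "__main__":
    low, high = diff_confidence_interval(200, 2000, 260, 2000)
    print(f"lift is between {low:.2%} and {high:.2%} (absolute)")
```

An interval that excludes zero but includes only tiny lifts is a signal that the result may be statistically significant yet practically unimportant.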

Sample Size Calculation

Proper sample size calculation prevents underpowered tests that fail to detect meaningful differences. Key factors include baseline conversion rate, minimum detectable effect (the smallest improvement you consider worthwhile), statistical power (typically 80%), and significance level.
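These factors can be combined in a commonly used approximation for comparing two proportions. The sketch below assumes a two-sided test, equal allocation, and an absolute minimum detectable effect; the function name and the example inputs are hypothetical, and dedicated calculators may use slightly different formulas.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, mde_abs: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate users needed in EACH group to detect an absolute lift."""
    p1 = baseline
    p2 = baseline + mde_abs
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance level
    z_beta = NormalDist().inv_cdf(power)           # statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / mde_abs ** 2)


if __name__ == "__main__":
    # Illustrative inputs: 10% baseline, detect a lift to 12%.
    print(sample_size_per_variant(0.10, 0.02))
    # Halving the detectable effect roughly quadruples the requirement.
    print(sample_size_per_variant(0.10, 0.01))
```

The required sample grows with the inverse square of the minimum detectable effect, which is why small expected lifts demand much longer tests.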

Online calculators can help determine required sample sizes, but remember that many of them assume equal traffic allocation between variants. Unequal allocation might be necessary for risk management when testing potentially disruptive changes, and it increases the total sample needed to reach the same power.

Advanced A/B Testing Techniques

Multivariate testing expands beyond simple A/B comparisons by testing multiple elements simultaneously. This approach reveals interaction effects between different changes, providing deeper insights into user behavior. However, multivariate tests require significantly larger sample sizes and more complex analysis.

Sequential testing allows you to monitor results continuously and stop tests early without inflating the false positive rate, because it uses decision boundaries designed for repeated looks at the data rather than a fixed p-value threshold. This can reduce time to insights. Bayesian approaches offer alternative statistical frameworks that can be more intuitive for business stakeholders, providing probability distributions rather than binary significant/not-significant results.
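To make the Bayesian idea concrete, the sketch below estimates the probability that the variant's true conversion rate exceeds the control's. It assumes a uniform Beta(1, 1) prior on each rate and uses Monte Carlo sampling from the standard library; the function name, prior, and draw count are illustrative choices rather than a prescribed method.

```python
import random


def prob_b_beats_a(conv_a: int, n_a: int, conv_b: int, n_b: int,
                   draws: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(rate_B > rate_A) under Beta(1, 1) priors."""
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    wins = 0
    for _ in range(draws):
        # Posterior for each rate: Beta(1 + conversions, 1 + non-conversions).
        sample_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        sample_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += sample_b > sample_a
    return wins / draws


if __name__ == "__main__":
    # Illustrative counts: 200/2000 in control, 260/2000 in variant.
    print(f"P(B beats A) is about {prob_b_beats_a(200, 2000, 260, 2000):.3f}")
```

A statement such as "B is better with a given probability" is often easier to communicate than a p-value, though the answer depends on the chosen prior.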

Personalization and Segmentation

Advanced A/B testing incorporates user segmentation to understand how different user groups respond to changes. Geographic, demographic, behavioral, and device-based segments often show varying preferences, enabling more targeted optimization strategies.

Contextual testing considers external factors like seasonality, traffic sources, and user journey stages. These factors can significantly impact test results, making it important to control for or analyze their effects explicitly.

Common A/B Testing Pitfalls

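Peeking at results and stopping a fixed-sample test as soon as it shows statistical significance is a widespread mistake that inflates false positive rates. Continuous monitoring is tempting, especially when early results look promising, but premature conclusions can lead to poor decisions. Either commit to the planned sample size before looking, or use a sequential method designed for repeated checks.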
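The effect of peeking can be demonstrated with a small simulation of A/A tests, where both groups share the same true conversion rate, so every "significant" result is a false positive. This is a sketch under assumed parameters (a 20% conversion rate, 400 simulated tests, a two-proportion z-test); all names and numbers are illustrative.

```python
import math
import random


def p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))


def false_positive_rate(looks: int, users_per_look: int, rate: float = 0.2,
                        sims: int = 400, alpha: float = 0.05, seed: int = 1) -> float:
    """Share of A/A tests declared significant at ANY of the interim looks."""
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(sims):
        conv_a = conv_b = n = 0
        for _ in range(looks):
            # Both groups draw from the same true rate: there is no real effect.
            conv_a += sum(rng.random() < rate for _ in range(users_per_look))
            conv_b += sum(rng.random() < rate for _ in range(users_per_look))
            n += users_per_look
            if p_value(conv_a, n, conv_b, n) < alpha:
                false_positives += 1
                break  # the experimenter stops at the first significant look
    return false_positives / sims


if __name__ == "__main__":
    print("single look:", false_positive_rate(looks=1, users_per_look=1000))
    print("ten looks:  ", false_positive_rate(looks=10, users_per_look=100))
```

Both runs end with the same number of users per group, but checking ten times and stopping at the first significant result typically produces a false positive rate well above the nominal 5%.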

Selection bias occurs when test groups aren’t truly random, often due to technical implementation issues or external factors affecting user assignment. Cookie deletion, browser differences, and returning users can complicate randomization efforts.
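One practical diagnostic for assignment problems, not described above but widely used, is a sample ratio mismatch check: compare the observed group sizes with the intended split using a chi-square goodness-of-fit test. The sketch below assumes two groups and uses the closed form for one degree of freedom; the function name and the counts are hypothetical.

```python
import math


def srm_p_value(n_control: int, n_variant: int,
                expected_variant_share: float = 0.5) -> float:
    """P-value for the hypothesis that traffic followed the intended split."""
    total = n_control + n_variant
    exp_variant = total * expected_variant_share
    exp_control = total - exp_variant
    chi2 = ((n_control - exp_control) ** 2 / exp_control
            + (n_variant - exp_variant) ** 2 / exp_variant)
    # Chi-square survival function with 1 degree of freedom.
    return math.erfc(math.sqrt(chi2 / 2))


if __name__ == "__main__":
    # Illustrative counts for an intended 50/50 split.
    print(srm_p_value(5000, 5040))  # small imbalance, plausible by chance
    print(srm_p_value(5000, 5400))  # large imbalance, worth investigating
```

A very small p-value here suggests that something in the assignment or logging pipeline is broken, in which case the test's outcome metrics should not be trusted until the cause is found.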

Interpretation Errors

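Confusing correlation with causation remains a persistent issue in A/B test interpretation. Randomization supports causal conclusions for the overall comparison between control and variant, but not for post-hoc segment breakdowns or comparisons against periods outside the test. In those cases, a change that correlates with improved metrics doesn’t prove causation, because external factors or measurement issues might explain the relationship.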

Multiple comparison problems arise when testing numerous variants or metrics simultaneously without adjusting significance levels. This increases the likelihood of false discoveries, leading to implementations based on random variation rather than true improvements.
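Two standard adjustments for this problem are the Bonferroni correction and the Holm step-down procedure. The sketch below applies both to a list of p-values; the function names and the example p-values are illustrative.

```python
def bonferroni(p_values, alpha=0.05):
    """Reject only hypotheses whose p-value is at most alpha / m."""
    threshold = alpha / len(p_values)
    return [p <= threshold for p in p_values]


def holm(p_values, alpha=0.05):
    """Holm step-down: test p-values from smallest to largest, relaxing the
    threshold at each step and stopping at the first failure."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, idx in enumerate(order):
        if p_values[idx] <= alpha / (m - rank):
            reject[idx] = True
        else:
            break  # all larger p-values are also not rejected
    return reject


if __name__ == "__main__":
    # Illustrative p-values from four metrics or variants.
    p_values = [0.01, 0.04, 0.02, 0.005]
    print("Bonferroni:", bonferroni(p_values))
    print("Holm:      ", holm(p_values))
```

Both methods control the chance of any false discovery across the whole family of comparisons; Holm is never less powerful than Bonferroni, which is why it is often preferred.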

Implementation Best Practices

Start with high-impact, low-risk tests to build confidence and demonstrate value. Focus on elements with clear hypotheses backed by user research, analytics insights, or industry best practices. Successful early tests create momentum and support for more comprehensive testing programs.

Documentation and knowledge sharing ensure organizational learning from each test. Record hypotheses, methodologies, results, and lessons learned in accessible formats. This historical knowledge prevents repeated mistakes and builds institutional testing expertise.

Integration with Agile Workflows

A/B testing fits naturally into agile development cycles when properly integrated. Plan tests during sprint planning, ensuring adequate time for implementation, data collection, and analysis. Consider testing as part of your definition of done for features that impact user experience.

Collaborate closely with designers, developers, and stakeholders throughout the testing process. Cross-functional involvement improves test quality and increases buy-in for data-driven decisions.

Measuring Long-term Impact

Short-term A/B test results don’t always predict long-term effects. Novelty effects can make changes appear beneficial initially before performance returns to baseline levels. Monitor key metrics for weeks or months after implementation to understand true impact.

Customer lifetime value and retention metrics provide crucial long-term perspectives often missed in short-term conversion-focused tests. A change that improves immediate conversions might negatively affect long-term user satisfaction or engagement.

Building a Testing Culture

Creating a data-driven culture requires leadership support and clear processes for acting on test results. Celebrate both positive and negative results as learning opportunities, emphasizing that “failed” tests still provide valuable insights.

Invest in team education and training to improve testing literacy across the organization. When more team members understand statistical principles and testing methodologies, the quality and impact of your testing program improve significantly.

Tools and Technology Stack

Selecting appropriate A/B testing tools depends on your technical requirements, budget, and team capabilities. Cloud-based solutions like Optimizely and Adobe Target offer comprehensive features with minimal technical setup, while open-source alternatives like Wasabi provide greater customization options.

Consider integration capabilities with your existing analytics, customer data platforms, and development workflows. Seamless data flow between systems enables more sophisticated analysis and faster iteration cycles.

Data Privacy and Compliance

Modern A/B testing must account for privacy regulations like GDPR and CCPA. Ensure your testing platform handles user consent appropriately and provides necessary data controls. Consider anonymization techniques and data retention policies as part of your testing infrastructure.

Transparent communication about testing activities builds user trust while ensuring regulatory compliance. Clear privacy policies and opt-out mechanisms demonstrate respect for user preferences.

Future of A/B Testing

Machine learning and artificial intelligence are transforming A/B testing capabilities. Automated experiment design, dynamic traffic allocation, and intelligent stopping rules reduce manual overhead while improving test efficiency.
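Dynamic traffic allocation is often implemented with a multi-armed bandit algorithm. The sketch below uses Thompson sampling with Beta posteriors as one possible approach; the simulated true conversion rates, the function name, and the round count are assumptions for illustration, and real systems add safeguards that are omitted here.

```python
import random


def thompson_allocate(true_rates, rounds=5000, seed=0):
    """Simulate Thompson sampling and return how many users each arm received."""
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    for _ in range(rounds):
        # Draw a plausible conversion rate for each arm from its Beta posterior.
        samples = [rng.betavariate(1 + s, 1 + f)
                   for s, f in zip(successes, failures)]
        # Send the next user to the arm with the highest sampled rate.
        arm = samples.index(max(samples))
        # Simulated user response; in production this would be real feedback.
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return [s + f for s, f in zip(successes, failures)]


if __name__ == "__main__":
    # Hypothetical true rates: control converts at 5%, variant at 15%.
    print(thompson_allocate([0.05, 0.15]))
```

As evidence accumulates, traffic shifts toward the better-performing arm, which reduces the cost of showing users a weaker variant at the expense of a less straightforward statistical analysis.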

Real-time personalization combines A/B testing insights with individual user behavior to deliver customized experiences at scale. This evolution from population-level insights to individual-level optimization represents the next frontier in data-driven product development.

As A/B testing continues evolving, organizations that master both fundamental principles and emerging technologies will maintain competitive advantages through superior data-driven decision making. The key lies in balancing statistical rigor with practical business needs, creating sustainable testing programs that drive continuous improvement and innovation.