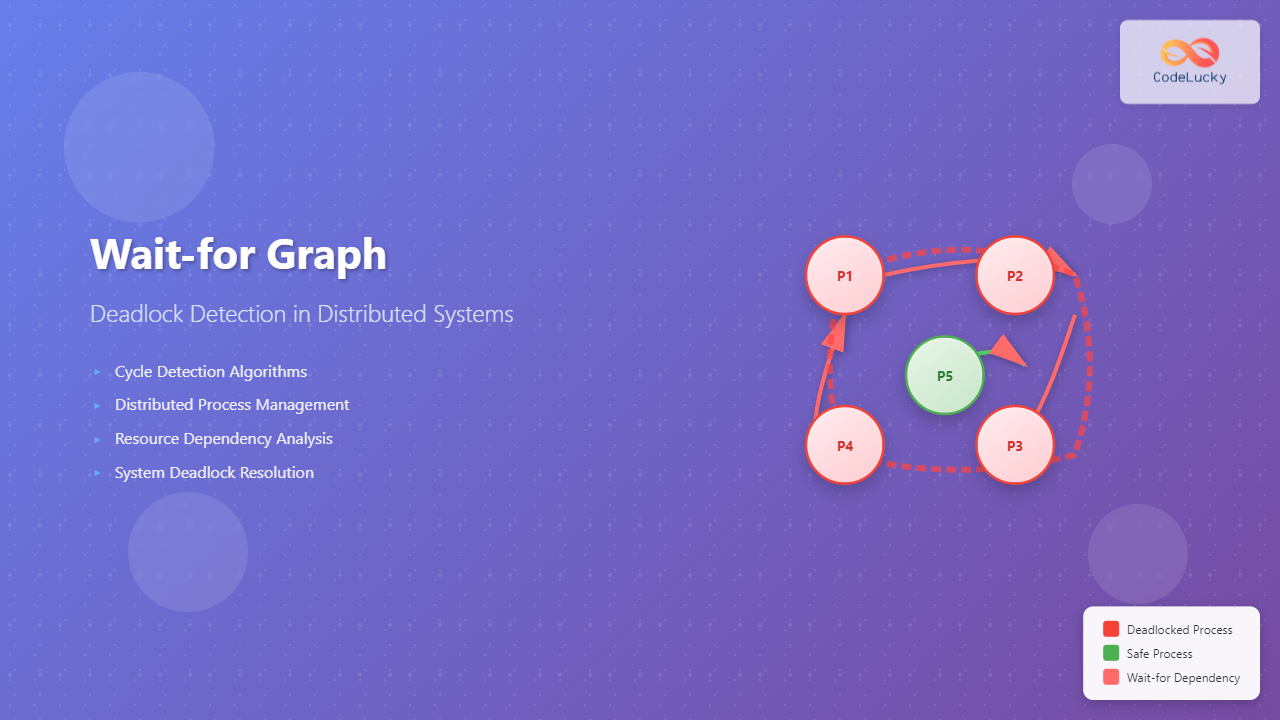

Introduction to Wait-for Graphs

In distributed systems, deadlock detection remains one of the most critical challenges for maintaining system reliability and performance. A wait-for graph is a fundamental data structure for identifying and resolving deadlocks among processes that compete for resources in distributed environments.

Unlike centralized systems, where deadlock detection can be performed locally against a single view of all resources, distributed systems must track dependencies that cross network boundaries, because no single site sees every waiting relationship. The wait-for graph offers both a visual and an algorithmic way to represent these dependencies and to detect the circular waits that constitute deadlocks.

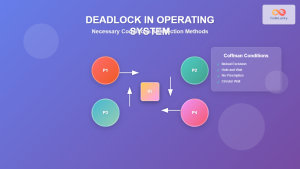

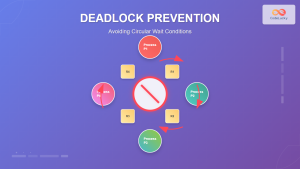

Understanding Deadlock in Distributed Systems

What is a Deadlock?

A deadlock occurs when two or more processes are permanently blocked, each waiting for a resource held by another process in the same set. In distributed systems the problem is harder: the processes may run on different machines, so no single machine observes the complete set of dependencies, which makes both detection and resolution significantly more challenging.

Necessary Conditions for Deadlock

For a deadlock to occur, four conditions (often called the Coffman conditions) must hold simultaneously:

- Mutual Exclusion: Resources cannot be shared and can only be used by one process at a time

- Hold and Wait: Processes hold allocated resources while waiting for additional resources

- No Preemption: Resources cannot be forcibly removed from processes

- Circular Wait: A circular chain of processes exists where each process waits for a resource held by the next process

Wait-for Graph Fundamentals

Structure and Components

A wait-for graph is a directed graph where:

- Nodes: Represent processes or transactions in the system

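- Directed Edges: Represent waiting relationships (Process A → Process B means A is waiting for a resource held by B)

- Cycles: Indicate deadlock. In the AND request model, where a blocked process needs every resource it has requested, a cycle in the wait-for graph is both necessary and sufficient for deadlock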
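To make the structure concrete, here is a minimal sketch of a wait-for graph with depth-first cycle detection. The class and method names (`WaitForGraph`, `add_wait`, `find_cycle`) are illustrative assumptions, not an established API; processes are plain strings.

```python
from collections import defaultdict


class WaitForGraph:
    """Minimal wait-for graph: an edge a -> b means a waits for a resource held by b."""

    def __init__(self):
        self.edges = defaultdict(set)

    def add_wait(self, waiter, holder):
        self.edges[waiter].add(holder)

    def remove_wait(self, waiter, holder):
        self.edges[waiter].discard(holder)

    def find_cycle(self):
        """Return one cycle as a list of processes, or None if there is no cycle."""
        WHITE, GRAY, BLACK = 0, 1, 2  # unvisited / on the DFS stack / fully explored
        color = defaultdict(int)
        stack = []

        def dfs(node):
            color[node] = GRAY
            stack.append(node)
            for nxt in sorted(self.edges.get(node, ())):
                if color[nxt] == GRAY:  # back edge: nxt is on the stack, so a cycle closes
                    return stack[stack.index(nxt):]
                if color[nxt] == WHITE:
                    cycle = dfs(nxt)
                    if cycle:
                        return cycle
            stack.pop()
            color[node] = BLACK
            return None

        for node in list(self.edges):
            if color[node] == WHITE:
                cycle = dfs(node)
                if cycle:
                    return cycle
        return None


g = WaitForGraph()
g.add_wait("P1", "P2")
g.add_wait("P2", "P3")
print(g.find_cycle())  # None: P1 -> P2 -> P3 is only a chain
g.add_wait("P3", "P1")
print(g.find_cycle())  # ['P1', 'P2', 'P3']
```

A chain of waits is harmless; only the edge that closes the loop turns it into a deadlock.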

Types of Wait-for Graphs

Single-Site Wait-for Graph: Used in centralized systems where all processes and resources exist on a single machine.

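Multi-Site Wait-for Graph: Distributed across multiple sites, each of which maintains a local graph of the dependencies it can observe. The global graph is the union of these local graphs, and a deadlock may appear as a cycle only in that union, which is why the sites must coordinate.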
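The reason multi-site graphs need coordination can be shown in a few lines. In this sketch (site and transaction names are hypothetical), each site's local graph is acyclic, yet the union of the two contains a cycle, so neither site could detect the deadlock on its own.

```python
def merge_local_graphs(local_graphs):
    """Union per-site (waiter, holder) edges into one global adjacency map."""
    global_graph = {}
    for site_edges in local_graphs.values():
        for waiter, holder in site_edges:
            global_graph.setdefault(waiter, set()).add(holder)
    return global_graph


def has_cycle(graph):
    """Depth-first search: True if any waiter can reach itself."""
    visiting, done = set(), set()

    def dfs(node):
        visiting.add(node)
        for nxt in graph.get(node, ()):
            if nxt in visiting or (nxt not in done and dfs(nxt)):
                return True
        visiting.discard(node)
        done.add(node)
        return False

    return any(node not in done and dfs(node) for node in list(graph))


site_edges = {
    "site_A": [("T1", "T2")],  # at site A, T1 waits for a lock held by T2
    "site_B": [("T2", "T1")],  # at site B, T2 waits for a lock held by T1
}
for site, edges in site_edges.items():
    print(site, has_cycle(merge_local_graphs({site: edges})))  # False for both sites
print("global", has_cycle(merge_local_graphs(site_edges)))      # True
```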

Deadlock Detection Algorithms

Centralized Deadlock Detection

In centralized detection, one designated site maintains the global wait-for graph and performs deadlock detection:

Algorithm Steps:

- Each site sends local dependency information to the central coordinator

- Central coordinator constructs the global wait-for graph

- A cycle-detection algorithm (for example, depth-first search) identifies deadlocks

- Resolution commands, such as aborting a chosen victim, are sent back to the participating sites
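The four steps above can be sketched as a single coordinator class. This is an illustrative outline, not a reference implementation: the class name, the report format (a list of `(waiter, holder)` edges per site), and the victim policy (abort the transaction with the largest ID, a stand-in for "youngest") are all assumptions.

```python
class CentralCoordinator:
    def __init__(self):
        self.local_reports = {}  # site_id -> list of (waiter, holder) edges

    def receive_report(self, site_id, edges):
        # Step 1: each site sends its local dependency information
        self.local_reports[site_id] = list(edges)

    def build_global_graph(self):
        # Step 2: union of all local graphs; remember which site hosts each waiter
        graph, home_site = {}, {}
        for site_id, edges in self.local_reports.items():
            for waiter, holder in edges:
                graph.setdefault(waiter, []).append(holder)
                home_site[waiter] = site_id
        return graph, home_site

    def find_cycle(self, graph):
        # Step 3: DFS cycle detection; returns the processes on one cycle, or None
        path, on_path, done = [], set(), set()

        def dfs(node):
            path.append(node)
            on_path.add(node)
            for nxt in graph.get(node, []):
                if nxt in on_path:
                    return path[path.index(nxt):]
                if nxt not in done:
                    cycle = dfs(nxt)
                    if cycle:
                        return cycle
            path.pop()
            on_path.discard(node)
            done.add(node)
            return None

        for node in list(graph):
            if node not in done:
                cycle = dfs(node)
                if cycle:
                    return cycle
        return None

    def run_detection(self):
        # Step 4: build a resolution command for the site hosting the victim
        graph, home_site = self.build_global_graph()
        cycle = self.find_cycle(graph)
        if cycle is None:
            return []
        victim = max(cycle)  # assumed policy: abort the transaction with the largest ID
        return [(home_site[victim], "abort", victim)]


coord = CentralCoordinator()
coord.receive_report("site_A", [("T1", "T2")])
coord.receive_report("site_B", [("T2", "T3")])
coord.receive_report("site_C", [("T3", "T1")])
print(coord.run_detection())  # [('site_C', 'abort', 'T3')]
```

Every process on a cycle is itself waiting, so it always has a reporting site to which the abort command can be routed.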

Distributed Deadlock Detection

Distributed detection eliminates the central coordinator as a single point of failure by spreading detection responsibilities across multiple sites.

Implementation Strategies

Edge-Chasing Algorithm

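Edge-chasing, whose best-known example is the Chandy-Misra-Haas algorithm, is widely used for distributed deadlock detection. It works by sending probe messages along the edges of the wait-for graph: if a probe returns to the process that initiated it, that process lies on a cycle and is deadlocked.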

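Algorithm Implementation (simplified: probes are delivered by local method calls rather than network messages):

```python
class EdgeChasingDetector:
    """Simplified edge-chasing detector; probes are delivered locally, not over a network."""

    def __init__(self):
        self.local_graph = {}     # process -> processes it waits for
        self.probe_messages = {}  # probe_id -> path carried by that probe

    def add_edge(self, from_process, to_process):
        # from_process waits for a resource held by to_process
        self.local_graph.setdefault(from_process, []).append(to_process)

    def initiate_probe(self, initiator, current=None, path=None):
        if current is None:  # start a new probe at the initiator
            current, path = initiator, [initiator]
        probe_id = f"{initiator}_{current}_{len(path)}"
        # The probe has returned to its initiator: the path is a cycle
        if current == initiator and len(path) > 1:
            return self.handle_deadlock(path)
        for next_process in self.local_graph.get(current, []):
            if next_process in path[1:]:  # a cycle not through the initiator; stop here
                continue
            result = self.send_probe(probe_id, initiator, next_process, path + [next_process])
            if result is not None:
                return result
        return None

    def send_probe(self, probe_id, initiator, next_process, path):
        # Stand-in for a network send: record the probe and deliver it directly
        self.probe_messages[probe_id] = path
        return self.initiate_probe(initiator, next_process, path)

    def handle_deadlock(self, cycle_path):
        print(f"Deadlock detected: {' -> '.join(map(str, cycle_path))}")
        return self.resolve_deadlock(cycle_path)

    def resolve_deadlock(self, cycle_path):
        # Placeholder: return the cycle so the caller can choose a victim
        return cycle_path
```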
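The class above carries the full path inside every probe. The classic Chandy-Misra-Haas formulation for the AND model instead sends a fixed-size probe `(initiator, sender, receiver)`, and each blocked process forwards a given initiator's probe at most once. The function below is a single-process simulation of that rule, with a queue standing in for the network; the names `cmh_detect` and `wait_for` are illustrative assumptions.

```python
from collections import deque


def cmh_detect(wait_for, initiator):
    """Simulate Chandy-Misra-Haas probes started by one blocked process.

    wait_for: dict mapping each blocked process to the processes it waits for.
    Returns True if a probe addressed to the initiator is ever delivered.
    """
    queue = deque((initiator, initiator, target) for target in wait_for.get(initiator, ()))
    forwarded = set()  # processes that already forwarded this initiator's probe
    while queue:
        init, sender, receiver = queue.popleft()  # "deliver" the next probe message
        if receiver == init:
            return True  # the probe came back: the initiator is on a cycle
        if receiver in wait_for and receiver not in forwarded:
            # Only a blocked process forwards the probe, and only once per initiator
            forwarded.add(receiver)
            for target in wait_for[receiver]:
                queue.append((init, receiver, target))
    return False


wait_for = {"P1": ["P2"], "P2": ["P3"], "P3": ["P1"], "P4": ["P1"]}
print(cmh_detect(wait_for, "P1"))  # True
print(cmh_detect(wait_for, "P4"))  # False: P4 waits behind the cycle but is not on it
```

Because each process forwards at most once per initiator, the number of probes is bounded by the number of edges, regardless of how many paths exist.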

Timeout-Based Detection

This approach does not build a wait-for graph at all. It treats any process that has waited longer than a threshold as a suspected deadlock participant. It is cheap, but a process that is merely slow can be falsely suspected:

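```python
import time


class TimeoutDetector:
    def __init__(self, timeout_threshold=30):
        self.timeout_threshold = timeout_threshold  # seconds
        self.waiting_processes = {}  # process_id -> resource it is waiting for
        self.start_times = {}        # process_id -> when the wait began

    def register_wait(self, process_id, resource_id):
        self.waiting_processes[process_id] = resource_id
        # monotonic() is unaffected by wall-clock adjustments, unlike time.time()
        self.start_times[process_id] = time.monotonic()

    def clear_wait(self, process_id):
        # Call when the resource is granted, so the process is no longer suspected
        self.waiting_processes.pop(process_id, None)
        self.start_times.pop(process_id, None)

    def check_timeouts(self):
        current_time = time.monotonic()
        suspected_deadlocks = [
            pid for pid, start in self.start_times.items()
            if current_time - start > self.timeout_threshold
        ]
        return self.analyze_suspected_processes(suspected_deadlocks)

    def analyze_suspected_processes(self, suspected):
        # A timeout only suggests deadlock: return (process, resource) pairs so the
        # caller can confirm with a wait-for graph or abort and retry
        return [(pid, self.waiting_processes[pid]) for pid in suspected]
```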

Advanced Techniques

Hierarchical Deadlock Detection

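Large distributed systems often employ hierarchical detection to manage complexity and reduce communication overhead. Sites are grouped under controllers arranged in a tree, and each controller detects deadlocks involving only the sites beneath it, so dependency information travels up the tree only as far as the lowest controller that covers every site involved.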
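The text does not fix a particular hierarchical scheme, so the following is only one way to sketch the idea. It assumes a controller tree given as nested dicts, uses Tarjan's strongly connected components algorithm to name each deadlocked group, and records the lowest controller at which each group first becomes visible. For brevity each controller forwards its whole merged graph upward; a real scheme would forward only inter-site edges. The function names and tree format are hypothetical.

```python
def deadlocked_groups(graph):
    """Tarjan's SCC algorithm; returns the SCCs that contain a cycle, as frozensets."""
    index, low, stack, on_stack, groups = {}, {}, [], set(), []

    def strongconnect(v):
        index[v] = low[v] = len(index)
        stack.append(v)
        on_stack.add(v)
        for w in graph.get(v, ()):
            if w not in index:
                strongconnect(w)
                low[v] = min(low[v], low[w])
            elif w in on_stack:
                low[v] = min(low[v], index[w])
        if low[v] == index[v]:  # v is the root of an SCC: pop it off the stack
            scc = set()
            while True:
                w = stack.pop()
                on_stack.discard(w)
                scc.add(w)
                if w == v:
                    break
            if len(scc) > 1 or v in graph.get(v, ()):
                groups.append(frozenset(scc))

    for v in list(graph):
        if v not in index:
            strongconnect(v)
    return groups


def detect_hierarchical(controller, reports):
    """Post-order walk; leaves have "edges", internal controllers have "children"."""
    graph, seen_below = {}, set()
    for waiter, holder in controller.get("edges", []):
        graph.setdefault(waiter, set()).add(holder)
    for child in controller.get("children", []):
        child_graph, child_groups = detect_hierarchical(child, reports)
        seen_below.update(child_groups)
        for waiter, holders in child_graph.items():
            graph.setdefault(waiter, set()).update(holders)
    groups = set(deadlocked_groups(graph))
    for group in groups - seen_below:  # visible here for the first time
        reports.append((controller["name"], sorted(group)))
    return graph, groups


tree = {"name": "root", "children": [
    {"name": "region_1", "children": [
        {"name": "site_A", "edges": [("T1", "T2"), ("T2", "T1")]},  # purely local cycle
        {"name": "site_B", "edges": [("T3", "T4")]},
    ]},
    {"name": "region_2", "children": [
        {"name": "site_C", "edges": [("T4", "T3")]},  # closes T3 <-> T4 across regions
    ]},
]}
reports = []
detect_hierarchical(tree, reports)
print(reports)  # [('site_A', ['T1', 'T2']), ('root', ['T3', 'T4'])]
```

The local deadlock is resolved at its own site, and only the cross-region one needs the root. A group that grows at a higher level would be reported again there as a new, larger group.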

Phantom Deadlock Prevention

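Phantom deadlocks are deadlocks that the detector reports but that do not actually exist. They occur when the global state used for detection is inconsistent due to message delays, for example when a delayed message leaves an already-removed edge in the graph. Prevention techniques include:

- Timestamp-based ordering: Using logical timestamps to order dependency updates so that stale information is not applied

- Consistent snapshot algorithms (such as Chandy-Lamport): Capturing consistent global snapshots

- Vector clocks: Maintaining causal ordering of events across sites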
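Timestamp-based ordering can be illustrated with a small edge store. It assumes, hypothetically, that every add or remove of an edge carries a logical timestamp assigned by the site that owns the waiting process. A late "add" that is older than the last applied update for the same edge is discarded, so it cannot resurrect an edge whose lock was already granted. This only handles reordering of updates to the same edge; it is not a full consistent snapshot.

```python
class TimestampedEdgeStore:
    """Apply wait-for edge updates in logical-timestamp order, per edge."""

    def __init__(self):
        self.last_ts = {}  # (waiter, holder) -> timestamp of the latest applied update
        self.edges = set()

    def apply(self, timestamp, op, waiter, holder):
        key = (waiter, holder)
        if timestamp <= self.last_ts.get(key, -1):
            return False  # stale or duplicate update: ignore it
        self.last_ts[key] = timestamp
        if op == "add":
            self.edges.add(key)
        else:
            self.edges.discard(key)
        return True


store = TimestampedEdgeStore()
# T1 started waiting for T2 at time 5 and was granted the lock at time 7,
# but the network delivered the two messages in the opposite order.
store.apply(7, "remove", "T1", "T2")
store.apply(5, "add", "T1", "T2")  # delayed, older message: ignored
print(store.edges)  # set(): no phantom edge remains
```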

Real-World Applications

Database Management Systems

Distributed databases extensively use wait-for graphs for transaction deadlock detection:

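```python
class DatabaseDeadlockDetector:
    def __init__(self, lock_table):
        # Assumed lock-table shape (a stand-in for a distributed lock manager):
        # resource -> (holder_transaction, [waiting_transactions])
        self.lock_table = lock_table
        self.transaction_graph = {}  # transaction -> set of transactions it waits for
        self.aborted = []

    def detect_deadlock(self):
        # Build wait-for graph from lock dependencies
        self.build_graph_from_locks()
        # Detect cycles using DFS
        cycles = self.find_cycles_dfs()
        if cycles:
            victim_transaction = self.select_victim(cycles[0])
            self.abort_transaction(victim_transaction)
            return True
        return False

    def build_graph_from_locks(self):
        self.transaction_graph = {}
        for holder, waiters in self.lock_table.values():
            for waiter in waiters:
                self.transaction_graph.setdefault(waiter, set()).add(holder)

    def find_cycles_dfs(self):
        # Returns a list holding at most one cycle (the first one found)
        visited, stack = set(), []

        def dfs(txn):
            if txn in stack:  # back edge: the stack from txn onward is a cycle
                return stack[stack.index(txn):]
            if txn in visited:
                return None
            visited.add(txn)
            stack.append(txn)
            for nxt in self.transaction_graph.get(txn, ()):
                cycle = dfs(nxt)
                if cycle:
                    return cycle
            stack.pop()
            return None

        for txn in list(self.transaction_graph):
            cycle = dfs(txn)
            if cycle:
                return [cycle]
        return []

    def select_victim(self, cycle):
        # Choose transaction with least cost to abort (the first one wins ties)
        return min(cycle, key=self.calculate_abort_cost)

    def calculate_abort_cost(self, transaction):
        # Placeholder cost model: number of locks the transaction currently holds
        return sum(1 for holder, _ in self.lock_table.values() if holder == transaction)

    def abort_transaction(self, transaction):
        # A real system would roll back and release locks; here we only record it
        self.aborted.append(transaction)
```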

Cloud Computing Environments

Cloud platforms face similar dependency problems when allocating resources across virtual machines and containers:

- Resource scheduling: Preventing deadlocks in CPU, memory, and storage allocation

- Container orchestration: Managing dependencies between containerized services

- Network resource management: Detecting deadlocks in bandwidth allocation
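For the container-orchestration case, the same wait-for reasoning applies to startup dependencies between services: if services wait on each other in a cycle, none of them can ever start. The sketch below uses Kahn's topological-sort algorithm to find every service that can never start. The service names are hypothetical, and this is a generic check, not the behavior of any particular orchestrator.

```python
from collections import deque


def services_stuck_in_cycles(depends_on):
    """depends_on: service -> services it waits for before it can start.

    Returns the services that can never start (on, or waiting behind, a cycle).
    """
    services = set(depends_on) | {d for deps in depends_on.values() for d in deps}
    waiting_on = {s: len(set(depends_on.get(s, ()))) for s in services}
    dependents = {s: [] for s in services}
    for s, deps in depends_on.items():
        for d in set(deps):
            dependents[d].append(s)
    ready = deque(s for s in services if waiting_on[s] == 0)
    started = set()
    while ready:
        s = ready.popleft()
        started.add(s)
        for t in dependents[s]:  # s is up, so t waits on one fewer service
            waiting_on[t] -= 1
            if waiting_on[t] == 0:
                ready.append(t)
    return services - started


deps = {"api": ["db", "auth"], "auth": ["api"], "db": [], "web": ["api"]}
print(sorted(services_stuck_in_cycles(deps)))  # ['api', 'auth', 'web']
```

Here `api` and `auth` wait on each other, and `web` is blocked behind them even though it is not on the cycle.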

Performance Optimization

Communication Overhead Reduction

Several strategies minimize the communication overhead inherent in distributed deadlock detection:

- Lazy propagation: Delaying updates to reduce message frequency

- Incremental updates: Sending only changes rather than complete graph states

- Compression techniques: Reducing message sizes through graph compression
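Incremental updates are straightforward to express with edge sets. In this sketch (function names and message format are assumptions), a site sends only the edges added and removed since its last report, and the coordinator patches its copy of that site's graph.

```python
def edge_delta(previous_edges, current_edges):
    """Site side: the change since the last report, instead of the whole graph."""
    previous, current = set(previous_edges), set(current_edges)
    return {"added": sorted(current - previous), "removed": sorted(previous - current)}


def apply_delta(edges, delta):
    """Coordinator side: patch the stored copy of a site's edge set."""
    return (set(edges) - set(delta["removed"])) | set(delta["added"])


before = {("T1", "T2"), ("T2", "T3")}
after = {("T2", "T3"), ("T3", "T4")}
delta = edge_delta(before, after)
print(delta)  # {'added': [('T3', 'T4')], 'removed': [('T1', 'T2')]}
print(apply_delta(before, delta) == after)  # True
```

The unchanged edge `("T2", "T3")` is never retransmitted. Lazy propagation fits naturally on top: a site can accumulate several changes and send one combined delta.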

Scalability Considerations

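As systems scale, a single detector becomes a bottleneck. One adaptation is to partition the wait-for graph across several coordinator nodes, for example by hashing process identifiers: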

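```python
import zlib


class ScalableDeadlockDetector:
    def __init__(self, coordinator_nodes, partitioning_strategy="hash"):
        if not coordinator_nodes:
            raise ValueError("at least one coordinator node is required")
        self.partitioning_strategy = partitioning_strategy
        self.local_detectors = {}
        self.coordinator_nodes = list(coordinator_nodes)

    def partition_graph(self, global_graph):
        if self.partitioning_strategy == "hash":
            return self.hash_partition(global_graph)
        elif self.partitioning_strategy == "geographic":
            return self.geographic_partition(global_graph)
        raise ValueError(f"unknown partitioning strategy: {self.partitioning_strategy}")

    def hash_partition(self, graph):
        # graph: any iterable of process IDs, e.g. a dict keyed by process
        partitions = {}
        for node in graph:
            # crc32 gives the same result on every machine; the built-in hash() of a
            # str is randomized per interpreter process, so sites would disagree
            partition_id = zlib.crc32(str(node).encode()) % len(self.coordinator_nodes)
            partitions.setdefault(partition_id, []).append(node)
        return partitions

    def geographic_partition(self, graph):
        # Needs per-process location metadata, which this sketch does not model
        raise NotImplementedError("geographic partitioning needs per-node location data")
```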

Challenges and Limitations

False Positives and Negatives

Distributed deadlock detection faces several accuracy challenges:

- False positives: Detecting deadlocks that don’t actually exist due to outdated information

- False negatives: Missing actual deadlocks due to incomplete global state

- Race conditions: Concurrent operations interfering with detection accuracy

Network Partitions

Network partitions create significant challenges for distributed deadlock detection:

- Split-brain scenarios: Multiple coordinators operating independently

- Incomplete information: Missing critical dependency information

- Recovery complexity: Reconciling state after partition healing

Best Practices and Recommendations

Design Guidelines

When implementing wait-for graph based deadlock detection, follow these guidelines:

- Choose appropriate detection frequency: Balance between detection overhead and resolution time

- Implement robust victim selection: Minimize system disruption during deadlock resolution

- Design for fault tolerance: Handle coordinator failures and network partitions gracefully

- Monitor performance metrics: Track detection accuracy, latency, and overhead
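As one illustration of robust victim selection, the sketch below picks the cheapest transaction in a cycle but adds a penalty for every previous abort, so the same transaction is not chosen over and over (starvation). The cost model, the `restart_penalty` weight, and the function name are assumptions for illustration only.

```python
def choose_victim(cycle, work_done, abort_counts, restart_penalty=10):
    """Pick the cheapest transaction in a deadlock cycle to abort.

    cost = work already done + restart_penalty * number of previous aborts.
    Ties are broken by transaction ID so the choice is deterministic.
    """
    def cost(txn):
        return work_done.get(txn, 0) + restart_penalty * abort_counts.get(txn, 0)

    victim = min(sorted(cycle), key=cost)
    abort_counts[victim] = abort_counts.get(victim, 0) + 1
    return victim


work = {"T1": 5, "T2": 3, "T3": 8}
aborts = {}
print(choose_victim(["T1", "T2", "T3"], work, aborts))  # T2 (cost 3)
print(choose_victim(["T1", "T2", "T3"], work, aborts))  # T1 (T2 now costs 3 + 10)
```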

Implementation Checklist

- Define clear process and resource identification schemes

- Establish reliable communication protocols between sites

- Implement efficient graph storage and manipulation algorithms

- Design comprehensive testing scenarios including edge cases

- Plan for system monitoring and debugging capabilities

Future Directions

The field of distributed deadlock detection continues evolving with emerging technologies:

- Machine learning approaches: Using AI to predict and prevent deadlocks proactively

- Blockchain integration: Leveraging distributed ledger technology for consensus-based detection

- Edge computing considerations: Adapting algorithms for IoT and edge computing environments

- Quantum computing implications: Exploring quantum algorithms for deadlock detection

Conclusion

Wait-for graphs provide a powerful foundation for deadlock detection in distributed systems, offering both theoretical elegance and practical utility. While challenges such as phantom deadlocks, network partitions, and scalability concerns remain, modern algorithms and implementation strategies have made distributed deadlock detection both feasible and efficient.

Success in implementing these systems requires careful consideration of trade-offs between detection accuracy, performance overhead, and system complexity. As distributed systems continue growing in scale and importance, mastering wait-for graph techniques becomes increasingly valuable for system architects and developers.

The key to effective deadlock detection lies not just in choosing the right algorithm, but in understanding the specific requirements and constraints of your distributed environment. By combining solid theoretical knowledge with practical implementation experience, developers can build robust systems that handle deadlock scenarios gracefully while keeping detection overhead low.