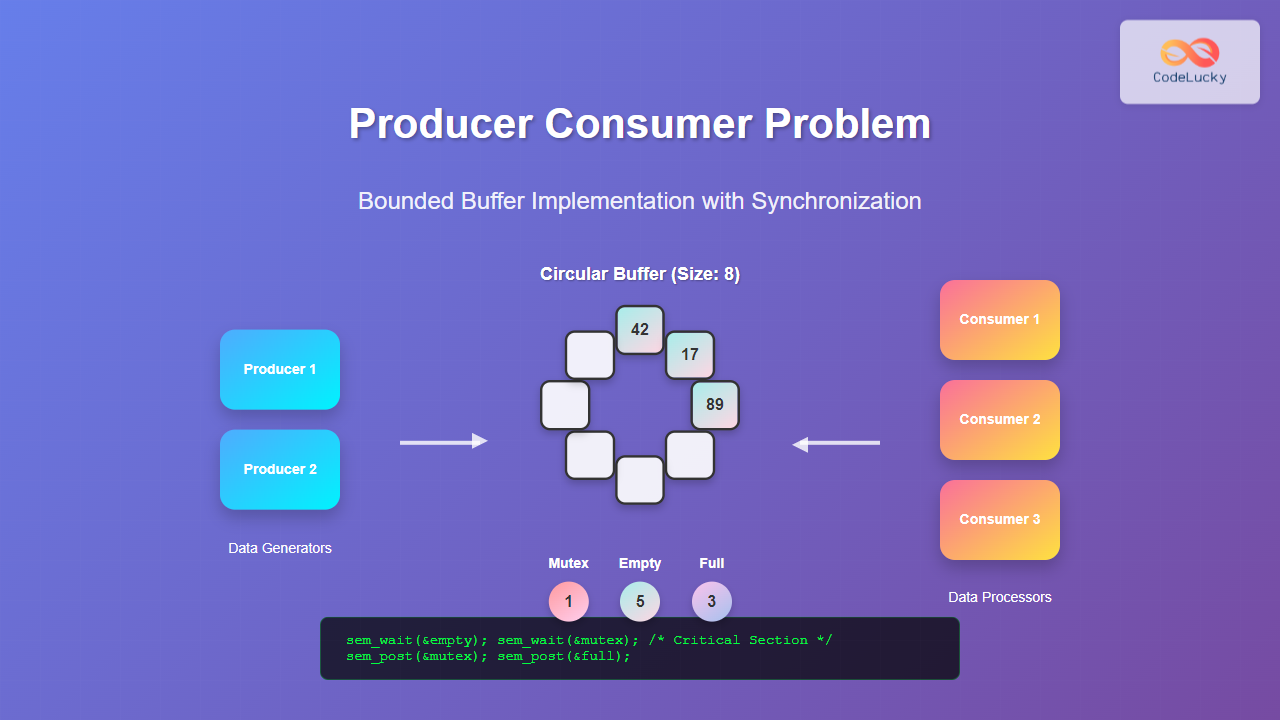

The Producer Consumer Problem, also known as the bounded buffer problem, is one of the most fundamental synchronization problems in operating systems and concurrent programming. This classic problem demonstrates the challenges of coordinating multiple processes that share a common resource – a bounded buffer.

In this comprehensive guide, we’ll explore the intricacies of the producer consumer problem, implement solutions using various synchronization mechanisms, and understand how this concept applies to real-world systems.

Understanding the Producer Consumer Problem

The producer consumer problem involves two types of processes:

- Producer Process: Generates data and places it into a shared buffer

- Consumer Process: Removes and processes data from the shared buffer

The challenge arises when multiple producers and consumers access the same bounded buffer concurrently. Without proper synchronization, this can lead to:

- Race conditions

- Data corruption

- Buffer overflow or underflow

- Deadlock situations

Key Components of Bounded Buffer

A bounded buffer implementation requires several essential components:

1. Buffer Structure

The buffer is typically implemented as a circular array with fixed size. Key attributes include:

- Size: Maximum number of items the buffer can hold

- In pointer: Points to the next empty slot for producers

- Out pointer: Points to the next item for consumers

- Count: Current number of items in the buffer

2. Synchronization Mechanisms

To ensure thread-safe operations, we need:

- Mutex (Mutual Exclusion): Ensures only one process accesses the buffer at a time

- Semaphores: Control access based on available resources

- Condition Variables: Allow processes to wait for specific conditions

Implementation Using Semaphores

Let’s implement the producer consumer problem using semaphores, which is one of the most elegant solutions:

C Implementation

#include <stdio.h>

#include <stdlib.h>

#include <pthread.h>

#include <semaphore.h>

#include <unistd.h>

#define BUFFER_SIZE 5

#define NUM_PRODUCERS 2

#define NUM_CONSUMERS 2

#define ITEMS_TO_PRODUCE 10

// Shared buffer

int buffer[BUFFER_SIZE];

int in = 0; // Points to next empty slot

int out = 0; // Points to next item to consume

// Semaphores

sem_t empty; // Count of empty slots

sem_t full; // Count of filled slots

sem_t mutex; // Mutual exclusion for buffer access

// Producer function

void* producer(void* arg) {

int producer_id = *(int*)arg;

for(int i = 0; i < ITEMS_TO_PRODUCE; i++) {

int item = rand() % 100 + 1; // Generate random item

// Wait for empty slot

sem_wait(∅);

// Acquire mutex for critical section

sem_wait(&mutex);

// Critical Section - Add item to buffer

buffer[in] = item;

printf("Producer %d produced item %d at position %d\n",

producer_id, item, in);

in = (in + 1) % BUFFER_SIZE;

// Release mutex

sem_post(&mutex);

// Signal that buffer has one more item

sem_post(&full);

usleep(100000); // Simulate production time

}

return NULL;

}

// Consumer function

void* consumer(void* arg) {

int consumer_id = *(int*)arg;

for(int i = 0; i < ITEMS_TO_PRODUCE; i++) {

// Wait for filled slot

sem_wait(&full);

// Acquire mutex for critical section

sem_wait(&mutex);

// Critical Section - Remove item from buffer

int item = buffer[out];

printf("Consumer %d consumed item %d from position %d\n",

consumer_id, item, out);

out = (out + 1) % BUFFER_SIZE;

// Release mutex

sem_post(&mutex);

// Signal that buffer has one more empty slot

sem_post(∅);

usleep(150000); // Simulate consumption time

}

return NULL;

}

int main() {

pthread_t producers[NUM_PRODUCERS];

pthread_t consumers[NUM_CONSUMERS];

// Initialize semaphores

sem_init(∅, 0, BUFFER_SIZE); // Initially all slots are empty

sem_init(&full, 0, 0); // Initially no slots are full

sem_init(&mutex, 0, 1); // Binary semaphore for mutual exclusion

// Create producer and consumer IDs

int producer_ids[NUM_PRODUCERS];

int consumer_ids[NUM_CONSUMERS];

// Create producer threads

for(int i = 0; i < NUM_PRODUCERS; i++) {

producer_ids[i] = i + 1;

pthread_create(&producers[i], NULL, producer, &producer_ids[i]);

}

// Create consumer threads

for(int i = 0; i < NUM_CONSUMERS; i++) {

consumer_ids[i] = i + 1;

pthread_create(&consumers[i], NULL, consumer, &consumer_ids[i]);

}

// Wait for all threads to complete

for(int i = 0; i < NUM_PRODUCERS; i++) {

pthread_join(producers[i], NULL);

}

for(int i = 0; i < NUM_CONSUMERS; i++) {

pthread_join(consumers[i], NULL);

}

// Destroy semaphores

sem_destroy(∅);

sem_destroy(&full);

sem_destroy(&mutex);

printf("All producers and consumers have finished.\n");

return 0;

}

Sample Output

Producer 1 produced item 42 at position 0

Producer 2 produced item 67 at position 1

Consumer 1 consumed item 42 from position 0

Producer 1 produced item 23 at position 2

Consumer 2 consumed item 67 from position 1

Producer 2 produced item 89 at position 3

Consumer 1 consumed item 23 from position 2

...

All producers and consumers have finished.

Python Implementation with Threading

Here’s a Python implementation using threading and synchronization primitives:

import threading

import time

import random

from collections import deque

class BoundedBuffer:

def __init__(self, size):

self.buffer = deque(maxlen=size)

self.size = size

self.lock = threading.Lock()

self.not_empty = threading.Condition(self.lock)

self.not_full = threading.Condition(self.lock)

def put(self, item, producer_id):

with self.lock:

# Wait while buffer is full

while len(self.buffer) == self.size:

print(f"Producer {producer_id} waiting - buffer full")

self.not_full.wait()

# Add item to buffer

self.buffer.append(item)

print(f"Producer {producer_id} produced item {item} - "

f"Buffer size: {len(self.buffer)}")

# Notify consumers that buffer is not empty

self.not_empty.notify()

def get(self, consumer_id):

with self.lock:

# Wait while buffer is empty

while len(self.buffer) == 0:

print(f"Consumer {consumer_id} waiting - buffer empty")

self.not_empty.wait()

# Remove item from buffer

item = self.buffer.popleft()

print(f"Consumer {consumer_id} consumed item {item} - "

f"Buffer size: {len(self.buffer)}")

# Notify producers that buffer is not full

self.not_full.notify()

return item

class Producer(threading.Thread):

def __init__(self, buffer, producer_id, items_to_produce):

super().__init__()

self.buffer = buffer

self.producer_id = producer_id

self.items_to_produce = items_to_produce

def run(self):

for i in range(self.items_to_produce):

item = random.randint(1, 100)

self.buffer.put(item, self.producer_id)

time.sleep(random.uniform(0.1, 0.5)) # Simulate production time

class Consumer(threading.Thread):

def __init__(self, buffer, consumer_id, items_to_consume):

super().__init__()

self.buffer = buffer

self.consumer_id = consumer_id

self.items_to_consume = items_to_consume

def run(self):

for i in range(self.items_to_consume):

item = self.buffer.get(self.consumer_id)

time.sleep(random.uniform(0.2, 0.6)) # Simulate consumption time

# Main execution

if __name__ == "__main__":

BUFFER_SIZE = 3

NUM_PRODUCERS = 2

NUM_CONSUMERS = 2

ITEMS_PER_PRODUCER = 5

# Create bounded buffer

buffer = BoundedBuffer(BUFFER_SIZE)

# Create and start producer threads

producers = []

for i in range(NUM_PRODUCERS):

producer = Producer(buffer, i + 1, ITEMS_PER_PRODUCER)

producers.append(producer)

producer.start()

# Create and start consumer threads

consumers = []

for i in range(NUM_CONSUMERS):

consumer = Consumer(buffer, i + 1, ITEMS_PER_PRODUCER)

consumers.append(consumer)

consumer.start()

# Wait for all threads to complete

for producer in producers:

producer.join()

for consumer in consumers:

consumer.join()

print("All producers and consumers have finished.")

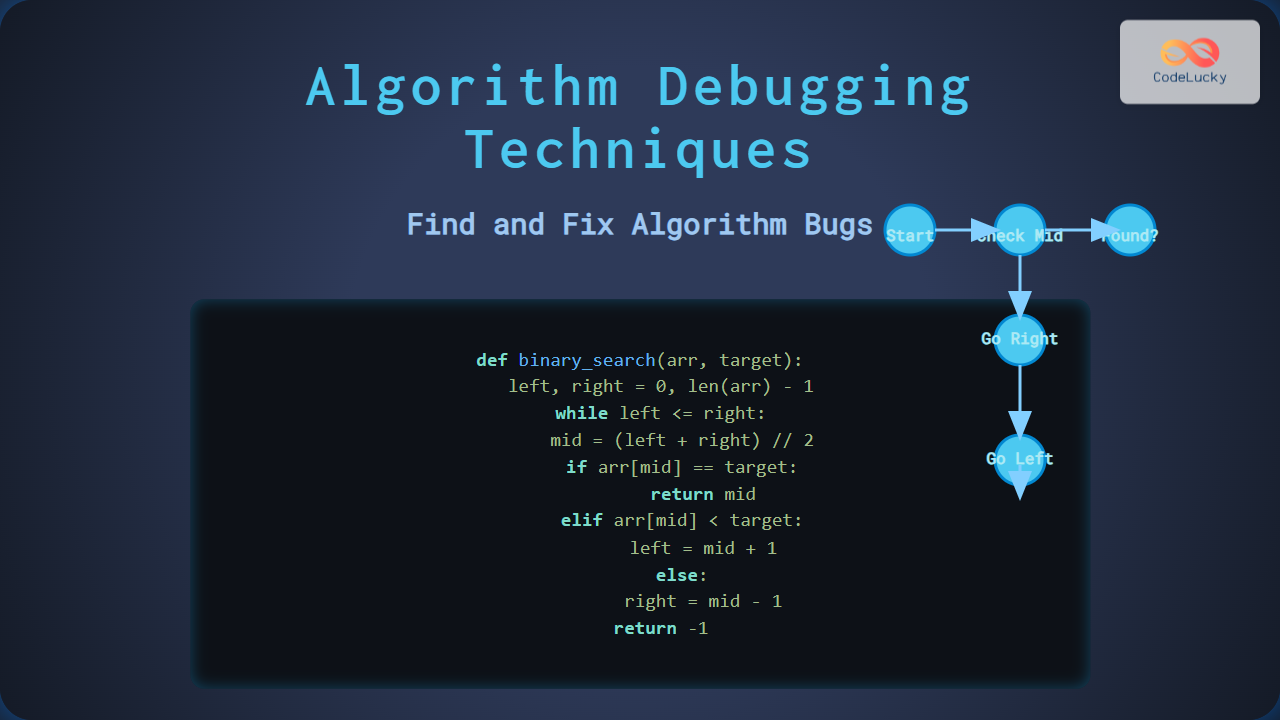

Synchronization Flow Visualization

Common Problems and Solutions

1. Race Conditions

Race conditions occur when multiple processes access shared resources simultaneously without proper synchronization.

Problem Example:

// Incorrect implementation - Race condition

void producer() {

if (count < BUFFER_SIZE) { // Check

buffer[in] = item; // Use - Another thread might modify count here

count++;

in = (in + 1) % BUFFER_SIZE;

}

}

Solution:

Always use proper synchronization mechanisms like mutexes or semaphores to make operations atomic.

2. Deadlock Prevention

Deadlocks can occur when processes wait indefinitely for resources. In producer-consumer scenarios, careful design of semaphore operations prevents deadlocks.

3. Starvation Issues

Starvation occurs when a process is perpetually denied access to resources. Solutions include:

- Fair scheduling algorithms

- Priority-based access control

- Time-bounded waiting mechanisms

Advanced Implementations

Multiple Buffer Implementation

For high-throughput scenarios, multiple buffers can be used to reduce contention:

public class MultiBufferProducerConsumer {

private final int BUFFER_COUNT = 3;

private final Queue<Integer>[] buffers;

private final ReentrantLock[] locks;

private final Condition[] notEmpty;

private final Condition[] notFull;

private int currentProducerBuffer = 0;

private int currentConsumerBuffer = 0;

@SuppressWarnings("unchecked")

public MultiBufferProducerConsumer(int bufferSize) {

buffers = new Queue[BUFFER_COUNT];

locks = new ReentrantLock[BUFFER_COUNT];

notEmpty = new Condition[BUFFER_COUNT];

notFull = new Condition[BUFFER_COUNT];

for (int i = 0; i < BUFFER_COUNT; i++) {

buffers[i] = new LinkedList<>();

locks[i] = new ReentrantLock();

notEmpty[i] = locks[i].newCondition();

notFull[i] = locks[i].newCondition();

}

}

public void produce(int item) throws InterruptedException {

int bufferIndex = currentProducerBuffer;

ReentrantLock lock = locks[bufferIndex];

lock.lock();

try {

while (buffers[bufferIndex].size() == BUFFER_SIZE) {

notFull[bufferIndex].await();

}

buffers[bufferIndex].offer(item);

System.out.println("Produced " + item + " in buffer " + bufferIndex);

notEmpty[bufferIndex].signal();

// Switch to next buffer for load distribution

currentProducerBuffer = (currentProducerBuffer + 1) % BUFFER_COUNT;

} finally {

lock.unlock();

}

}

public int consume() throws InterruptedException {

int bufferIndex = currentConsumerBuffer;

ReentrantLock lock = locks[bufferIndex];

lock.lock();

try {

while (buffers[bufferIndex].isEmpty()) {

notEmpty[bufferIndex].await();

}

int item = buffers[bufferIndex].poll();

System.out.println("Consumed " + item + " from buffer " + bufferIndex);

notFull[bufferIndex].signal();

// Switch to next buffer for load distribution

currentConsumerBuffer = (currentConsumerBuffer + 1) % BUFFER_COUNT;

return item;

} finally {

lock.unlock();

}

}

}

Real-World Applications

1. Operating System Kernel

- Pipe Implementation: Inter-process communication uses bounded buffers

- Device Drivers: Network and storage drivers implement producer-consumer patterns

- Print Spooling: Print jobs are queued in bounded buffers

2. Web Servers

- Request Processing: HTTP requests are buffered before processing

- Database Connections: Connection pools use bounded buffer concepts

- Logging Systems: Log messages are buffered before writing to disk

3. Multimedia Applications

- Audio/Video Streaming: Frame buffers for smooth playback

- Real-time Processing: Signal processing pipelines

- Gaming Engines: Render queues and input buffers

Performance Considerations

Buffer Size Selection

Choosing the right buffer size is crucial for optimal performance:

- Small Buffers: Lower memory usage but more frequent blocking

- Large Buffers: Better throughput but higher memory consumption

- Dynamic Sizing: Adaptive buffer sizes based on load

Lock Granularity

The level of locking affects performance:

- Coarse-grained Locking: Simple but may reduce concurrency

- Fine-grained Locking: Better concurrency but more complex

- Lock-free Algorithms: Highest performance but implementation complexity

Testing and Debugging

Common Test Scenarios

import unittest

import threading

import time

class TestProducerConsumer(unittest.TestCase):

def setUp(self):

self.buffer = BoundedBuffer(5)

self.produced_items = []

self.consumed_items = []

self.lock = threading.Lock()

def test_single_producer_consumer(self):

"""Test basic functionality with one producer and one consumer"""

def producer():

for i in range(10):

self.buffer.put(i, 1)

with self.lock:

self.produced_items.append(i)

def consumer():

for i in range(10):

item = self.buffer.get(1)

with self.lock:

self.consumed_items.append(item)

producer_thread = threading.Thread(target=producer)

consumer_thread = threading.Thread(target=consumer)

producer_thread.start()

consumer_thread.start()

producer_thread.join()

consumer_thread.join()

self.assertEqual(len(self.produced_items), 10)

self.assertEqual(len(self.consumed_items), 10)

self.assertEqual(set(self.produced_items), set(self.consumed_items))

def test_multiple_producers_consumers(self):

"""Test with multiple producers and consumers"""

NUM_THREADS = 3

ITEMS_PER_THREAD = 5

def producer(producer_id):

for i in range(ITEMS_PER_THREAD):

item = producer_id * 100 + i

self.buffer.put(item, producer_id)

with self.lock:

self.produced_items.append(item)

def consumer(consumer_id):

for i in range(ITEMS_PER_THREAD):

item = self.buffer.get(consumer_id)

with self.lock:

self.consumed_items.append(item)

threads = []

# Create producer threads

for i in range(NUM_THREADS):

thread = threading.Thread(target=producer, args=(i,))

threads.append(thread)

thread.start()

# Create consumer threads

for i in range(NUM_THREADS):

thread = threading.Thread(target=consumer, args=(i,))

threads.append(thread)

thread.start()

# Wait for all threads

for thread in threads:

thread.join()

self.assertEqual(len(self.produced_items), NUM_THREADS * ITEMS_PER_THREAD)

self.assertEqual(len(self.consumed_items), NUM_THREADS * ITEMS_PER_THREAD)

self.assertEqual(set(self.produced_items), set(self.consumed_items))

if __name__ == '__main__':

unittest.main()

Best Practices and Guidelines

Design Principles

- Always use proper synchronization: Never access shared resources without appropriate locking mechanisms

- Minimize critical section size: Keep locked code sections as small as possible

- Avoid nested locks: Prevent deadlock by avoiding complex locking hierarchies

- Use timeout mechanisms: Implement timeouts to prevent infinite waiting

- Monitor and log: Add comprehensive logging for debugging and monitoring

Performance Optimization

- Batch Operations: Process multiple items in single critical sections

- Non-blocking Algorithms: Consider lock-free implementations for high-performance scenarios

- Buffer Partitioning: Use multiple smaller buffers instead of one large buffer

- Producer-Consumer Balancing: Adjust the number of producers and consumers based on workload

Conclusion

The Producer Consumer Problem with bounded buffer implementation is a cornerstone concept in concurrent programming and operating systems. Understanding its intricacies, implementation techniques, and real-world applications is essential for developing robust, scalable systems.

Key takeaways include:

- Proper synchronization is crucial for preventing race conditions and ensuring data integrity

- Different synchronization mechanisms (semaphores, mutexes, condition variables) offer various advantages

- Performance optimization requires careful consideration of buffer size, lock granularity, and algorithmic choices

- Real-world applications span from operating system kernels to web servers and multimedia applications

- Testing and debugging concurrent systems requires specialized techniques and comprehensive test scenarios

By mastering these concepts and implementations, you’ll be well-equipped to design and implement efficient concurrent systems that can handle the complexities of modern computing environments. The producer consumer problem serves as a foundation for understanding more complex synchronization challenges in distributed systems, parallel computing, and high-performance applications.

- Understanding the Producer Consumer Problem

- Key Components of Bounded Buffer

- Implementation Using Semaphores

- Python Implementation with Threading

- Synchronization Flow Visualization

- Common Problems and Solutions

- Advanced Implementations

- Real-World Applications

- Performance Considerations

- Testing and Debugging

- Best Practices and Guidelines

- Conclusion