Direct Memory Access (DMA) is a crucial feature in modern operating systems that allows hardware devices to transfer data directly to and from memory without constant CPU supervision. This mechanism significantly improves system performance by freeing the CPU from routine data transfer operations, enabling it to focus on more complex computational tasks.

What is DMA (Direct Memory Access)?

DMA is a hardware feature that permits certain hardware subsystems to access main system memory independently of the central processing unit. Instead of the CPU handling every byte of data transfer between devices and memory, a DMA controller carries out these transfers on its own once the CPU has set them up. This can be a shared system controller or a DMA engine built into the device itself.

The primary advantage of DMA is that the CPU only has to set up each transfer and handle its completion. Without DMA, the CPU would have to move every byte itself, which would consume valuable processing cycles. This is particularly beneficial for high-throughput operations like disk I/O, network communications, and multimedia processing.

How DMA Works in Operating Systems

The DMA process involves several key steps that occur when a device needs to transfer data:

DMA Transfer Process

- Initialization: The CPU programs the DMA controller with transfer parameters including source address, destination address, and transfer size.

- Bus Request: When ready to transfer data, the DMA controller requests control of the system bus from the CPU.

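- Bus Grant: The CPU finishes its current bus cycle, grants bus control to the DMA controller, and stays off the bus until control is returned. It may keep executing internally (for example, from its caches), but it stalls on any access that needs the bus.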

- Data Transfer: The DMA controller manages the actual data transfer between the device and memory.

- Completion: After transfer completion, the DMA controller releases the bus back to the CPU and signals completion via an interrupt.
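To make the five steps concrete, here is a toy user-space model of a single-channel controller. Everything in it (`struct sim_dma`, `sim_dma_program()`, `sim_dma_run()`, `mark_done()`) is hypothetical. `memcpy()` only stands in for the copy that real hardware performs without CPU involvement. It is a sketch of the sequence, not a driver.

```c
#include <stddef.h>
#include <string.h>

/* Toy single-channel DMA controller (hypothetical, for illustration only). */
struct sim_dma {
    const unsigned char *src;   /* source address (programmed in step 1) */
    unsigned char *dst;         /* destination address */
    size_t count;               /* number of bytes to transfer */
    int dma_owns_bus;           /* 1 while the controller holds the bus */
    void (*irq)(void *ctx);     /* stands in for the completion interrupt */
    void *irq_ctx;
};

/* Step 1, Initialization: the CPU programs source, destination and size. */
void sim_dma_program(struct sim_dma *d, const void *src, void *dst,
                     size_t count, void (*irq)(void *), void *ctx)
{
    d->src = src;
    d->dst = dst;
    d->count = count;
    d->dma_owns_bus = 0;
    d->irq = irq;
    d->irq_ctx = ctx;
}

/* Steps 2-5: bus request/grant, data transfer, bus release, interrupt. */
void sim_dma_run(struct sim_dma *d)
{
    d->dma_owns_bus = 1;                /* steps 2-3: bus requested and granted */
    memcpy(d->dst, d->src, d->count);   /* step 4: real hardware does this copy */
    d->dma_owns_bus = 0;                /* step 5: bus released ... */
    if (d->irq != NULL)
        d->irq(d->irq_ctx);             /* ... and completion signaled */
}

/* Example "interrupt handler": records that the transfer finished. */
void mark_done(void *ctx)
{
    *(int *)ctx = 1;
}
```

In this model the callback runs only after the bus has been released, which matches the order of the final step above.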

Types of DMA Controllers

Operating systems work with different types of DMA controllers, each designed for specific use cases and performance requirements:

1. Single-Channel DMA Controller

The simplest form of DMA controller that can handle one transfer at a time. While cost-effective, it may create bottlenecks in systems with multiple devices requiring simultaneous data transfers.

2. Multi-Channel DMA Controller

Features multiple independent channels, allowing simultaneous data transfers between different devices and memory. Each channel can be programmed independently with its own transfer parameters.
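A multi-channel controller needs some bookkeeping so that independent transfers never share a channel. The following minimal sketch assumes eight channels and a single-threaded caller; a real driver would protect the bitmap with a lock. `allocate_dma_channel()` matches the hypothetical helper called in the driver example later in this article. `free_dma_channel()` and `NUM_DMA_CHANNELS` are likewise invented for illustration.

```c
#include <stdint.h>

#define NUM_DMA_CHANNELS 8   /* assumed channel count for this sketch */

/* Bit i set => channel i is in use. */
static uint32_t dma_channel_map;

/* Return the lowest free channel number, or -1 if all channels are busy. */
int allocate_dma_channel(void)
{
    for (int ch = 0; ch < NUM_DMA_CHANNELS; ch++) {
        if (!(dma_channel_map & (1u << ch))) {
            dma_channel_map |= 1u << ch;   /* claim the channel */
            return ch;
        }
    }
    return -1;
}

/* Release a channel so another device can use it. */
void free_dma_channel(int ch)
{
    if (ch >= 0 && ch < NUM_DMA_CHANNELS)
        dma_channel_map &= ~(1u << ch);
}
```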

3. Bus Master DMA

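Here the device itself contains the DMA engine and initiates its own memory reads and writes (first-party DMA), instead of relying on a shared system DMA controller (third-party DMA). This makes transfers more efficient and flexible. Common in modern PCI and PCIe devices.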

4. Scatter-Gather DMA

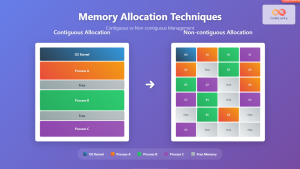

Sophisticated DMA implementation that can handle non-contiguous memory regions in a single operation, reducing the overhead of multiple transfer setups.
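A common reason for scatter-gather is that a buffer that is contiguous in virtual memory is usually backed by physical pages scattered across RAM. The sketch below walks a toy page table and emits one segment per physically contiguous run. The page table is an array mapping each virtual page to a physical frame number, and pages are assumed to be 4 KiB. `build_sg_list()` and `struct sg_seg` are hypothetical names, not an OS API.

```c
#include <stddef.h>
#include <stdint.h>

#define PAGE_SIZE 4096u   /* assumed page size */

struct sg_seg {
    uint64_t phys;   /* physical start address of this segment */
    uint32_t len;    /* segment length in bytes */
};

/*
 * Build a scatter-gather list for a buffer that starts `offset` bytes into
 * virtual page 0 and is `len` bytes long. frames[i] is the physical frame
 * number backing virtual page i (a toy page table). Physically adjacent
 * pages are merged into one segment. Returns the number of segments, or -1
 * if more than `max_segs` segments would be needed.
 */
int build_sg_list(const uint64_t *frames, size_t offset, size_t len,
                  struct sg_seg *segs, int max_segs)
{
    int n = 0;
    while (len > 0) {
        size_t page = offset / PAGE_SIZE;
        size_t in_page = offset % PAGE_SIZE;
        size_t chunk = PAGE_SIZE - in_page;        /* bytes left in this page */
        if (chunk > len)
            chunk = len;
        uint64_t phys = frames[page] * PAGE_SIZE + in_page;

        if (n > 0 && segs[n - 1].phys + segs[n - 1].len == phys) {
            segs[n - 1].len += (uint32_t)chunk;    /* contiguous: extend */
        } else {
            if (n == max_segs)
                return -1;
            segs[n].phys = phys;                   /* start a new segment */
            segs[n].len = (uint32_t)chunk;
            n++;
        }
        offset += chunk;
        len -= chunk;
    }
    return n;
}
```

For example, a 12138-byte buffer that starts 100 bytes into a page and is backed by frames 10, 11 and 20 yields two segments: 8092 bytes starting in frame 10, then 4046 bytes in frame 20.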

DMA Implementation in Modern Operating Systems

Device Driver Integration

Operating system device drivers must be specifically designed to work with DMA controllers. Here’s a simplified example of how a driver might set up a DMA transfer:

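```c
/* Simplified DMA setup in a device driver.
 * allocate_dma_channel(), write_dma_register(), enable_dma_channel(),
 * DMA_CH_REG() and the DMA_* constants are hypothetical, controller-specific
 * helpers; dma_addr_t is a bus-address type (as in the Linux kernel). */
#include <stddef.h>

struct dma_transfer {
    dma_addr_t source_addr;    /* bus/physical address, not a CPU virtual pointer */
    dma_addr_t dest_addr;
    size_t     transfer_size;
    int        channel;        /* filled in by setup_dma_transfer() */
};

int setup_dma_transfer(struct dma_transfer *transfer)
{
    /* Allocate a DMA channel and remember it for later teardown */
    int channel = allocate_dma_channel();
    if (channel < 0)
        return -1;
    transfer->channel = channel;

    /* Program this channel's controller registers */
    write_dma_register(DMA_CH_REG(channel, DMA_SOURCE_ADDR), transfer->source_addr);
    write_dma_register(DMA_CH_REG(channel, DMA_DEST_ADDR), transfer->dest_addr);
    write_dma_register(DMA_CH_REG(channel, DMA_COUNT), transfer->transfer_size);
    write_dma_register(DMA_CH_REG(channel, DMA_MODE), DMA_MODE_SINGLE | DMA_MODE_READ);

    /* Enable the DMA channel */
    enable_dma_channel(channel);
    return 0;
}
```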

Memory Management Considerations

DMA operations require special memory management considerations:

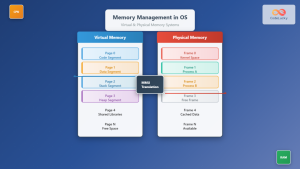

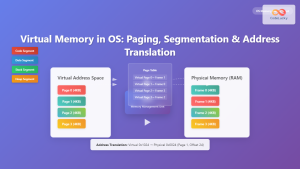

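- Physical Memory Mapping: Devices work with physical (bus) addresses, or with I/O virtual addresses when an IOMMU is present, not with the CPU's virtual addresses. The OS must translate each buffer's virtual address into the address the device uses and keep the underlying pages resident (pinned) until the transfer completes.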

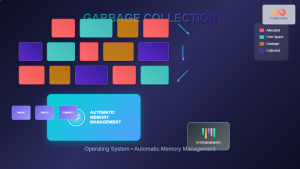

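- Cache Coherency: On platforms where DMA does not snoop the CPU caches, the OS must write back dirty cache lines before a device reads a buffer and invalidate cached copies after a device writes one. Otherwise the device or the CPU sees stale data.

- Memory Alignment: Many DMA engines require buffers to be aligned to a specific boundary, either for correctness or for optimal performance.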

- Buffer Management: The OS must manage DMA buffers to prevent conflicts and ensure data integrity.
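Alignment and similar layout rules can be checked before a buffer is handed to a device. This sketch assumes two example constraints passed in by the caller. The first is a power-of-two start alignment. The second is a power-of-two window that the buffer must not cross; 8-bit ISA DMA channels, for instance, cannot cross a 64 KiB boundary. `dma_buffer_ok()` is a hypothetical helper.

```c
#include <stdbool.h>
#include <stdint.h>

/*
 * Check a buffer against two example device constraints:
 *  - the start address is aligned to `align` bytes (a power of two)
 *  - the buffer does not cross a `boundary`-byte boundary (a power of two)
 */
bool dma_buffer_ok(uint64_t addr, uint64_t len, uint64_t align, uint64_t boundary)
{
    if (len == 0)
        return false;
    if (addr & (align - 1))
        return false;                                  /* misaligned start */
    uint64_t first = addr & ~(boundary - 1);           /* window of first byte */
    uint64_t last = (addr + len - 1) & ~(boundary - 1); /* window of last byte */
    return first == last;                              /* must be the same window */
}
```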

DMA Modes of Operation

DMA controllers operate in different modes depending on system requirements and hardware capabilities:

Burst Mode

In burst mode, the DMA controller takes complete control of the system bus for the entire duration of the data transfer. This provides maximum transfer speed but may impact overall system responsiveness.

Cycle Stealing Mode

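The DMA controller takes the bus for one data unit (a byte or word) at a time and then hands it back, so its transfers are interleaved with the CPU's bus accesses. The CPU is delayed by an occasional cycle instead of being locked out for the whole block. This approach provides a balance between transfer efficiency and system responsiveness.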

Transparent Mode

Data transfer occurs only when the CPU is not using the bus, such as during internal operations. This mode has minimal impact on CPU performance but may result in slower transfer rates.
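The difference between the three modes is easiest to see on a timeline. The following is a toy model, not a hardware simulator. Each character of the `cpu` string is one bus cycle in which the CPU either wants the bus (`C`) or is busy internally (`.`). The DMA engine has a fixed number of words to move, and CPU accesses that cannot proceed are queued. `bus_timeline()` and `enum dma_mode` are hypothetical names.

```c
#include <string.h>

enum dma_mode { DMA_BURST, DMA_CYCLE_STEAL, DMA_TRANSPARENT };

/*
 * Toy bus-arbitration model. Writes one character per bus cycle to `out`:
 * 'D' = DMA owns the bus, 'C' = CPU access, '.' = bus idle.
 * `out` must have room for strlen(cpu) + dma_words + 1 characters.
 */
void bus_timeline(enum dma_mode mode, const char *cpu, int dma_words, char *out)
{
    size_t n = strlen(cpu), i = 0, t = 0;
    int pending = 0, last_was_dma = 0;

    while (i < n || pending > 0 || dma_words > 0) {
        if (i < n && cpu[i++] == 'C')
            pending++;                        /* new CPU bus request this cycle */

        char owner = '.';
        switch (mode) {
        case DMA_BURST:        /* DMA keeps the bus until the block is done */
            owner = dma_words > 0 ? 'D' : (pending > 0 ? 'C' : '.');
            break;
        case DMA_CYCLE_STEAL:  /* DMA takes one cycle, then yields to a waiting CPU */
            if (dma_words > 0 && (pending == 0 || !last_was_dma))
                owner = 'D';
            else if (pending > 0)
                owner = 'C';
            break;
        case DMA_TRANSPARENT:  /* DMA uses only cycles the CPU leaves idle */
            owner = pending > 0 ? 'C' : (dma_words > 0 ? 'D' : '.');
            break;
        }
        if (owner == 'D')
            dma_words--;
        if (owner == 'C')
            pending--;
        last_was_dma = (owner == 'D');
        out[t++] = owner;
    }
    out[t] = '\0';
}
```

With `cpu = "CCCC"` and two DMA words, burst mode yields `DDCCCC`, cycle stealing `DCDCCC`, and transparent mode `CCCCDD`. The CPU's first access waits two cycles, one cycle, and zero cycles respectively, while the DMA block finishes first in burst mode and last in transparent mode.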

Benefits of DMA in Operating Systems

Performance Improvements

- Reduced CPU Overhead: Frees CPU from routine data transfer tasks

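- Increased Throughput: The CPU keeps executing other work while transfers are in progress

- Lower Latency: For bulk transfers, data moves directly between device and memory instead of being copied word by word through CPU registers (for very small transfers, setup overhead can outweigh the gain)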

- Better Resource Utilization: Allows CPU to focus on computational tasks

System Efficiency

- Concurrent Operations: Multiple devices can transfer data simultaneously

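- Reduced Context Switching: A DMA transfer typically raises one interrupt per completed block instead of one per byte or word, which means less interrupt and context-switching overhead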

- Improved Multitasking: Better overall system responsiveness

Common DMA Applications

Storage Systems

Modern storage controllers use DMA extensively for high-speed data transfers between storage devices and system memory. SSDs and hard drives rely on DMA to achieve their maximum transfer rates.

Network Communications

Network interface cards (NICs) use DMA to transfer packets directly to and from memory buffers, enabling high-bandwidth network communications without overwhelming the CPU.

Graphics Processing

Graphics cards use DMA to transfer texture data, vertex information, and frame buffers, enabling smooth graphics rendering and video playback.

Audio Processing

Sound cards use DMA for continuous audio streaming, ensuring uninterrupted playback and recording without audio dropouts or glitches.

DMA Challenges and Limitations

Security Considerations

DMA can pose security risks if not properly managed:

- DMA Attacks: Malicious devices can potentially access sensitive memory regions

- Memory Protection: An IOMMU (Input-Output Memory Management Unit) must be properly configured to restrict each device to the memory it is allowed to access

- Buffer Overflow: Improper DMA setup can lead to memory corruption

Hardware Limitations

- Address Space Restrictions: Some DMA controllers have limited addressing capabilities

- Transfer Size Limits: Maximum transfer sizes may be constrained by hardware

- Bus Contention: Multiple DMA operations can compete for bus bandwidth
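Address-space restrictions and transfer-size limits can both be checked when a request is prepared. The sketch assumes the device's reach is described by a mask holding the highest address it can generate, similar in spirit to the DMA mask a Linux driver declares. It also assumes the controller's count register accepts at most `max_len` bytes. `dma_chunks_needed()` is a hypothetical helper.

```c
#include <stdint.h>

/*
 * Return how many transfers of at most `max_len` bytes are needed to move
 * `len` bytes starting at bus address `addr`, or -1 if the request cannot be
 * programmed as is: some byte lies above `dma_mask` (the OS would then need a
 * bounce buffer or an IOMMU mapping), or `max_len` is zero.
 */
long dma_chunks_needed(uint64_t addr, uint64_t len, uint64_t dma_mask, uint64_t max_len)
{
    if (max_len == 0)
        return -1;                          /* invalid controller limit */
    if (len == 0)
        return 0;
    /* Last byte addr + len - 1 must not exceed the mask (overflow-safe form) */
    if (addr > dma_mask || len - 1 > dma_mask - addr)
        return -1;
    return (long)((len + max_len - 1) / max_len);   /* round up */
}
```

For a 32-bit device (mask `0xFFFFFFFF`), a 200000-byte buffer at `0x1000` with a 64 KiB count limit needs four transfers. A buffer above 4 GiB is rejected.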

Modern DMA Developments

IOMMU Integration

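Modern systems implement Input-Output Memory Management Units (IOMMUs). An IOMMU translates the addresses a device issues (I/O virtual addresses) into physical addresses and blocks accesses outside the regions the OS has mapped for that device. This effectively gives DMA its own virtual memory, which improves both security and functionality; for example, a physically scattered buffer can appear contiguous to the device.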
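The core IOMMU idea fits in a few lines. The sketch below assumes a single-level table for one device, with 4 KiB pages and 16 entries. Real IOMMUs use multi-level page tables and translation caches. Every name here (`struct iommu_domain`, `iommu_map()`, `iommu_translate()`) is hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

#define IOMMU_PAGE_SHIFT 12   /* 4 KiB pages, assumed */
#define IOMMU_ENTRIES    16   /* tiny table for the sketch */

struct iommu_pte {
    bool     valid;
    bool     writable;
    uint64_t phys_frame;      /* physical frame number */
};

/* One device's I/O page table: index = I/O virtual page number. */
struct iommu_domain {
    struct iommu_pte table[IOMMU_ENTRIES];
};

/* OS side: let the device reach one physical page at an I/O virtual page. */
void iommu_map(struct iommu_domain *d, uint64_t iova_page, uint64_t phys_frame,
               bool writable)
{
    if (iova_page < IOMMU_ENTRIES)
        d->table[iova_page] = (struct iommu_pte){ true, writable, phys_frame };
}

/*
 * Hardware side: translate one device access. Returns true and sets *phys on
 * success; returns false (an IOMMU fault) for unmapped or read-only pages.
 */
bool iommu_translate(const struct iommu_domain *d, uint64_t iova, bool is_write,
                     uint64_t *phys)
{
    uint64_t page = iova >> IOMMU_PAGE_SHIFT;
    if (page >= IOMMU_ENTRIES || !d->table[page].valid)
        return false;                      /* device may not touch this page */
    if (is_write && !d->table[page].writable)
        return false;                      /* write to a read-only mapping */
    uint64_t offset = iova & ((1u << IOMMU_PAGE_SHIFT) - 1);
    *phys = (d->table[page].phys_frame << IOMMU_PAGE_SHIFT) | offset;
    return true;
}
```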

PCIe and Advanced DMA

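PCIe devices act as bus masters and perform DMA through memory read and write transactions. The PCIe specification adds features that affect this traffic, including: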

- Multiple virtual channels

- Quality of Service (QoS) management

- Advanced error reporting

- Power management integration

Virtualization Support

Modern platforms support secure DMA in virtual machine environments through IOMMU technologies like Intel VT-d and AMD-Vi. These let a device be assigned to a virtual machine while confining its DMA to that guest's memory.

Programming DMA in Operating Systems

Kernel-Level Implementation

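```c
/* Example interrupt handler for DMA completion (Linux-style signature).
 * struct dma_device (the driver's own, hypothetical structure),
 * read_dma_status(), write_dma_register(), handle_dma_error() and the
 * DMA_* bits are controller-specific assumptions. */
#include <linux/completion.h>
#include <linux/interrupt.h>

static irqreturn_t dma_interrupt_handler(int irq, void *dev_data)
{
    struct dma_device *dma_dev = dev_data;

    /* Check DMA status */
    uint32_t status = read_dma_status(dma_dev->base_addr);

    /* On a shared IRQ line, report that this interrupt was not ours */
    if (!(status & (DMA_COMPLETE | DMA_ERROR)))
        return IRQ_NONE;

    /* Clear the bits being handled (assuming write-1-to-clear semantics) */
    write_dma_register(dma_dev->base_addr + DMA_STATUS,
                       status & (DMA_COMPLETE | DMA_ERROR));

    if (status & DMA_ERROR) {
        /* Handle DMA error */
        dma_dev->error_count++;
        handle_dma_error(dma_dev, status);
    }

    if (status & DMA_COMPLETE) {
        /* Wake the process blocked in wait_for_completion() */
        complete(&dma_dev->transfer_complete);
    }

    return IRQ_HANDLED;
}
```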

User-Space Interface

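Applications do not program DMA hardware directly. Instead, operating systems provide user-space I/O APIs, and the kernel's device drivers decide whether to use DMA underneath. POSIX asynchronous I/O is one such interface: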

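```c
/* User-space asynchronous read with POSIX AIO. AIO is not itself a DMA API:
 * the kernel and the device driver decide whether DMA moves the data. */
#include <aio.h>
#include <signal.h>
#include <stdlib.h>
#include <sys/types.h>

/* Returns the submitted request (the caller later checks aio_error() and
 * aio_return(), then frees it), or NULL on failure. */
struct aiocb *async_read(int fd, void *buffer, size_t count, off_t offset)
{
    struct aiocb *aio_req = calloc(1, sizeof(struct aiocb)); /* zero all fields */
    if (aio_req == NULL)
        return NULL;

    aio_req->aio_fildes = fd;
    aio_req->aio_buf = buffer;
    aio_req->aio_nbytes = count;
    aio_req->aio_offset = offset;
    aio_req->aio_sigevent.sigev_notify = SIGEV_NONE; /* caller polls for completion */

    /* Submit the asynchronous read request */
    if (aio_read(aio_req) == -1) {
        free(aio_req);
        return NULL;
    }
    return aio_req; /* request submitted successfully */
}
```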

Performance Optimization with DMA

Buffer Alignment

Proper buffer alignment can improve DMA performance, and some devices require it outright:

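```c
/* Allocate a page-aligned, locked buffer (user space, POSIX/Linux).
 * Kernel drivers normally use the DMA API instead (e.g. dma_alloc_coherent()),
 * which also returns the address the device should use. */
#include <stdlib.h>
#include <sys/mman.h>
#include <unistd.h>

void *allocate_dma_buffer(size_t size)
{
    size_t alignment = (size_t)sysconf(_SC_PAGESIZE); /* page alignment */

    /* aligned_alloc() requires size to be a multiple of the alignment */
    size = (size + alignment - 1) / alignment * alignment;

    void *buffer = aligned_alloc(alignment, size);
    if (buffer == NULL)
        return NULL;

    /* Lock pages in memory to prevent paging */
    if (mlock(buffer, size) != 0) {
        free(buffer);
        return NULL;
    }
    return buffer;
}
```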

Scatter-Gather Lists

Advanced DMA controllers support scatter-gather operations for non-contiguous memory transfers:

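```c
/* Scatter-gather setup for a controller with a per-entry register table.
 * SG_ADDR(), SG_LEN(), SG_FLAGS(), SG_MAX_ENTRIES, SG_FLAG_LAST,
 * write_dma_register() and the DMA_* bits are hypothetical; many real
 * controllers instead read a descriptor list from memory. */
#include <stdint.h>

struct sg_entry {
    dma_addr_t addr;     /* bus address of this segment */
    uint32_t   length;   /* segment length in bytes */
    uint32_t   flags;
};

int setup_sg_dma(const struct sg_entry *sg_list, int num_entries)
{
    /* Reject lists the hardware table cannot hold */
    if (num_entries <= 0 || num_entries > SG_MAX_ENTRIES)
        return -1;

    for (int i = 0; i < num_entries; i++) {
        uint32_t flags = sg_list[i].flags;
        if (i == num_entries - 1)
            flags |= SG_FLAG_LAST;   /* mark the end of the list */

        /* Program each scatter-gather entry */
        write_dma_register(SG_ADDR(i), sg_list[i].addr);
        write_dma_register(SG_LEN(i), sg_list[i].length);
        write_dma_register(SG_FLAGS(i), flags);
    }

    /* Start scatter-gather DMA transfer */
    write_dma_register(DMA_CONTROL, DMA_START | DMA_SG_MODE);
    return 0;
}
```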

Troubleshooting DMA Issues

Common Problems

- Cache Coherency Issues: Data inconsistencies between CPU cache and memory

- Address Translation Errors: Incorrect virtual-to-physical address mapping

- Timing Problems: Race conditions between CPU and DMA operations

- Resource Conflicts: Multiple devices competing for DMA channels

Debugging Techniques

- Hardware Debugging: Use logic analyzers to monitor bus transactions

- Software Tracing: Implement detailed logging in device drivers

- Performance Monitoring: Track DMA transfer rates and completion times

- Memory Analysis: Verify data integrity after DMA transfers
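For memory analysis, a simple approach is to fill the source with a known pattern before the transfer and check the destination afterwards. A position-dependent pattern catches dropped or shifted bytes that a constant fill would miss. Shifts by a multiple of 256 bytes are the exception, because this pattern repeats with that period. `fill_pattern()` and `verify_pattern()` are hypothetical helpers.

```c
#include <stddef.h>
#include <stdint.h>

/* Fill a buffer with a position-dependent pattern before handing it to DMA. */
void fill_pattern(uint8_t *buf, size_t len, uint8_t seed)
{
    for (size_t i = 0; i < len; i++)
        buf[i] = (uint8_t)(seed + i * 31u);
}

/*
 * Verify a received buffer against the expected pattern. Returns the offset
 * of the first mismatching byte, or (size_t)-1 if the data is intact.
 */
size_t verify_pattern(const uint8_t *buf, size_t len, uint8_t seed)
{
    for (size_t i = 0; i < len; i++)
        if (buf[i] != (uint8_t)(seed + i * 31u))
            return i;
    return (size_t)-1;
}
```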

Future of DMA Technology

Emerging Trends

- AI/ML Accelerators: Specialized DMA for machine learning workloads

- Storage Class Memory: DMA optimizations for persistent memory

- Edge Computing: Low-power DMA implementations for IoT devices

- Quantum Computing: DMA adaptations for quantum control systems

Performance Enhancements

- Multi-Queue DMA: Parallel processing of multiple transfer queues

- Adaptive Algorithms: Dynamic optimization based on workload patterns

- Hardware Acceleration: Dedicated DMA processing units

- Software-Defined DMA: Programmable DMA controllers

Conclusion

Direct Memory Access (DMA) represents a fundamental optimization in modern operating system design, enabling efficient data transfer between devices and memory without constant CPU intervention. Understanding DMA principles, implementation techniques, and optimization strategies is crucial for system developers, device driver programmers, and anyone working on performance-critical applications.

As computing systems continue to evolve with faster storage devices, higher bandwidth networks, and more demanding applications, DMA technology will continue to play a vital role in achieving optimal system performance. The integration of advanced features like IOMMU support, virtualization capabilities, and scatter-gather operations ensures that DMA remains relevant and powerful in modern computing environments.

Proper implementation of DMA requires careful consideration of security implications, hardware limitations, and software design patterns. By following best practices and understanding the underlying principles, developers can harness the full potential of DMA to create efficient, high-performance systems that meet the demands of today’s computing challenges.