Introduction to Deadlock Detection and Recovery

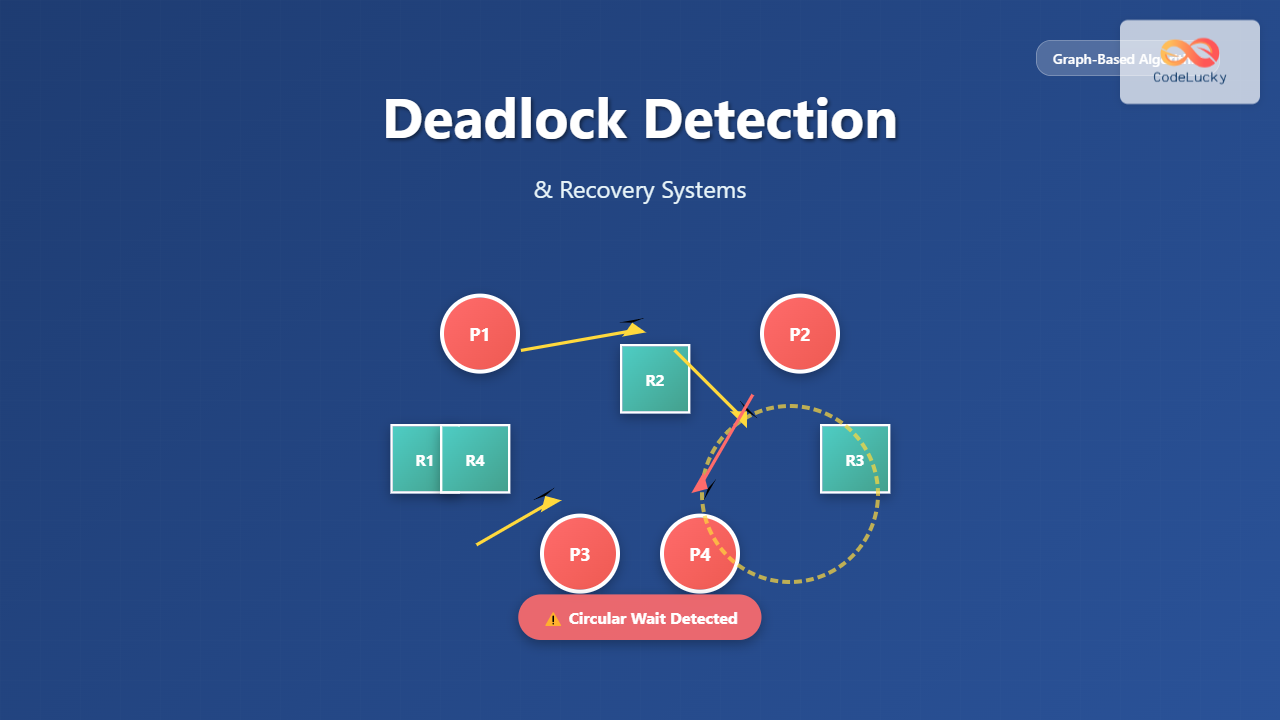

Deadlock is one of the most critical challenges in operating systems where multiple processes compete for limited resources. When processes become stuck waiting for each other indefinitely, system performance degrades significantly. Graph-based algorithms provide elegant solutions for detecting and recovering from deadlocks by visualizing resource dependencies as mathematical structures.

This comprehensive guide explores advanced deadlock detection mechanisms, focusing on resource allocation graphs, wait-for graphs, and proven recovery strategies that maintain system stability while resolving conflicts efficiently.

Understanding Deadlock Fundamentals

The Four Coffman Conditions

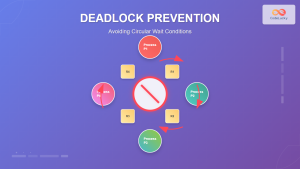

Deadlock occurs when all four conditions exist simultaneously:

- Mutual Exclusion: Resources cannot be shared among processes

- Hold and Wait: Processes hold resources while requesting additional ones

- No Preemption: Resources cannot be forcibly removed from processes

- Circular Wait: A circular chain of processes exists where each waits for the next

Graph-based detection algorithms primarily focus on identifying circular wait conditions through mathematical graph theory principles.

Resource Allocation Graph Components

A Resource Allocation Graph (RAG) consists of:

- Process Nodes: Represented as circles (P1, P2, P3…)

- Resource Nodes: Represented as rectangles (R1, R2, R3…)

- Request Edges: Arrows from processes to resources (Pi → Rj)

- Assignment Edges: Arrows from resources to processes (Ri → Pj)

Graph-Based Detection Algorithms

Resource Allocation Graph Algorithm

The RAG algorithm detects deadlocks by identifying cycles in the resource allocation graph. Here’s the step-by-step implementation:

class ResourceAllocationGraph:

def __init__(self):

self.processes = set()

self.resources = set()

self.request_edges = {} # process -> [resources]

self.assignment_edges = {} # resource -> process

def add_request_edge(self, process, resource):

if process not in self.request_edges:

self.request_edges[process] = []

self.request_edges[process].append(resource)

def add_assignment_edge(self, resource, process):

self.assignment_edges[resource] = process

def detect_cycle_dfs(self, node, visited, rec_stack, path):

visited[node] = True

rec_stack[node] = True

path.append(node)

# Get adjacent nodes

adjacent = []

if node in self.request_edges:

for resource in self.request_edges[node]:

if resource in self.assignment_edges:

adjacent.append(self.assignment_edges[resource])

for neighbor in adjacent:

if not visited.get(neighbor, False):

if self.detect_cycle_dfs(neighbor, visited, rec_stack, path):

return True

elif rec_stack.get(neighbor, False):

# Cycle detected

cycle_start = path.index(neighbor)

self.deadlock_cycle = path[cycle_start:]

return True

rec_stack[node] = False

path.pop()

return False

def detect_deadlock(self):

visited = {}

rec_stack = {}

for process in self.processes:

if not visited.get(process, False):

if self.detect_cycle_dfs(process, visited, rec_stack, []):

return True, self.deadlock_cycle

return False, []

Wait-For Graph Algorithm

The Wait-For Graph simplifies detection by eliminating resource nodes, creating direct edges between processes that wait for each other:

class WaitForGraph:

def __init__(self):

self.graph = {}

def add_edge(self, waiting_process, holding_process):

if waiting_process not in self.graph:

self.graph[waiting_process] = []

self.graph[waiting_process].append(holding_process)

def detect_cycle_iterative(self):

color = {} # 0: white, 1: gray, 2: black

parent = {}

for node in self.graph:

color[node] = 0

parent[node] = None

def dfs_visit(u):

color[u] = 1 # Gray

if u in self.graph:

for v in self.graph[u]:

if color.get(v, 0) == 0:

parent[v] = u

if dfs_visit(v):

return True

elif color.get(v, 0) == 1:

# Back edge found - cycle detected

return True

color[u] = 2 # Black

return False

for node in self.graph:

if color[node] == 0:

if dfs_visit(node):

return True

return False

Banker’s Algorithm for Deadlock Detection

The Banker’s Algorithm extends detection to multi-instance resources using matrix operations:

class BankersAlgorithm:

def __init__(self, processes, resources):

self.processes = processes

self.resources = resources

self.allocation = [[0] * len(resources) for _ in range(len(processes))]

self.max_need = [[0] * len(resources) for _ in range(len(processes))]

self.available = [0] * len(resources)

def calculate_need(self):

need = []

for i in range(len(self.processes)):

need_row = []

for j in range(len(self.resources)):

need_row.append(self.max_need[i][j] - self.allocation[i][j])

need.append(need_row)

return need

def is_safe_state(self):

need = self.calculate_need()

finish = [False] * len(self.processes)

work = self.available[:]

safe_sequence = []

while True:

found = False

for i in range(len(self.processes)):

if not finish[i]:

can_allocate = True

for j in range(len(self.resources)):

if need[i][j] > work[j]:

can_allocate = False

break

if can_allocate:

# Process can complete

for j in range(len(self.resources)):

work[j] += self.allocation[i][j]

finish[i] = True

safe_sequence.append(self.processes[i])

found = True

if not found:

break

return all(finish), safe_sequence

Deadlock Detection Implementation Examples

Example 1: Simple Resource Allocation Graph

Consider a scenario with 3 processes and 3 resources:

# Deadlock detection example

rag = ResourceAllocationGraph()

rag.processes = {'P1', 'P2', 'P3'}

rag.resources = {'R1', 'R2', 'R3'}

# Add allocation edges (resource -> process)

rag.add_assignment_edge('R1', 'P1')

rag.add_assignment_edge('R2', 'P2')

rag.add_assignment_edge('R3', 'P3')

# Add request edges (process -> resource)

rag.add_request_edge('P1', 'R2')

rag.add_request_edge('P2', 'R3')

rag.add_request_edge('P3', 'R1')

# Detect deadlock

is_deadlock, cycle = rag.detect_deadlock()

print(f"Deadlock detected: {is_deadlock}")

print(f"Deadlock cycle: {cycle}")

# Output:

# Deadlock detected: True

# Deadlock cycle: ['P1', 'P2', 'P3']

Example 2: Multi-Instance Resource Scenario

Banking system example with multiple resource instances:

# Initialize Banker's Algorithm

processes = ['P0', 'P1', 'P2', 'P3']

resources = ['R0', 'R1', 'R2']

banker = BankersAlgorithm(processes, resources)

# Current allocation matrix

banker.allocation = [

[2, 0, 1], # P0

[1, 1, 0], # P1

[1, 0, 3], # P2

[0, 2, 1] # P3

]

# Maximum need matrix

banker.max_need = [

[3, 2, 2], # P0

[1, 2, 3], # P1

[3, 1, 4], # P2

[2, 2, 2] # P3

]

# Available resources

banker.available = [1, 1, 0]

# Check for safe state

is_safe, sequence = banker.is_safe_state()

print(f"System is in safe state: {is_safe}")

print(f"Safe sequence: {sequence}")

# Output:

# System is in safe state: True

# Safe sequence: ['P1', 'P3', 'P0', 'P2']

Deadlock Recovery Strategies

Process Termination Methods

Abort All Deadlocked Processes

- Simplest approach but expensive

- Terminates all processes in the deadlock cycle

- Requires restarting processes from the beginning

Abort Processes One by One

- More efficient resource utilization

- Requires careful selection criteria

- Tests for deadlock resolution after each termination

class DeadlockRecovery:

def __init__(self, detection_algorithm):

self.detector = detection_algorithm

def calculate_termination_cost(self, process):

# Factors to consider:

# - Process priority

# - Computation time invested

# - Resources currently held

# - Number of processes that would be affected

priority_weight = process.priority * 0.3

time_invested = process.execution_time * 0.4

resource_count = len(process.held_resources) * 0.2

dependency_count = len(process.dependent_processes) * 0.1

return priority_weight + time_invested + resource_count + dependency_count

def abort_minimum_cost_process(self, deadlocked_processes):

min_cost = float('inf')

victim = None

for process in deadlocked_processes:

cost = self.calculate_termination_cost(process)

if cost < min_cost:

min_cost = cost

victim = process

if victim:

self.terminate_process(victim)

return victim

return None

def recover_by_termination(self):

recovery_steps = []

while True:

is_deadlock, cycle = self.detector.detect_deadlock()

if not is_deadlock:

break

victim = self.abort_minimum_cost_process(cycle)

recovery_steps.append(f"Terminated process: {victim.id}")

return recovery_steps

Resource Preemption Strategy

Resource preemption involves temporarily removing resources from processes to break deadlock cycles:

class ResourcePreemption:

def __init__(self):

self.rollback_points = {}

def create_checkpoint(self, process):

# Save process state for rollback

checkpoint = {

'process_id': process.id,

'memory_state': process.memory.copy(),

'register_state': process.registers.copy(),

'file_descriptors': process.open_files.copy(),

'timestamp': time.time()

}

self.rollback_points[process.id] = checkpoint

def preempt_resources(self, victim_process, required_resources):

preempted = []

for resource in required_resources:

if resource in victim_process.held_resources:

# Remove resource from victim

victim_process.held_resources.remove(resource)

resource.holder = None

preempted.append(resource)

return preempted

def rollback_process(self, process):

if process.id in self.rollback_points:

checkpoint = self.rollback_points[process.id]

# Restore process state

process.memory = checkpoint['memory_state']

process.registers = checkpoint['register_state']

process.open_files = checkpoint['file_descriptors']

return True

return False

def recover_by_preemption(self, deadlocked_processes, required_resources):

# Select victim based on rollback cost

victim = min(deadlocked_processes,

key=lambda p: self.calculate_rollback_cost(p))

# Create checkpoint before preemption

self.create_checkpoint(victim)

# Preempt resources

preempted_resources = self.preempt_resources(victim, required_resources)

# Rollback victim process

self.rollback_process(victim)

return victim, preempted_resources

Advanced Detection Optimizations

Incremental Graph Updates

Optimize detection performance by maintaining incremental graph updates instead of full reconstruction:

class IncrementalDeadlockDetector:

def __init__(self):

self.resource_graph = {}

self.process_dependencies = {}

self.last_check_timestamp = 0

def add_resource_request(self, process, resource):

# Update graph incrementally

if process not in self.resource_graph:

self.resource_graph[process] = set()

self.resource_graph[process].add(resource)

# Trigger detection only if potential cycle

if self.could_create_cycle(process, resource):

return self.detect_deadlock_incremental()

return False

def could_create_cycle(self, requesting_process, requested_resource):

# Quick heuristic check

current_holder = self.get_resource_holder(requested_resource)

if current_holder:

return self.has_path(current_holder, requesting_process)

return False

def has_path(self, start, target):

# BFS to check if path exists

visited = set()

queue = [start]

while queue:

current = queue.pop(0)

if current == target:

return True

if current in visited:

continue

visited.add(current)

# Add dependent processes

for resource in self.resource_graph.get(current, []):

holder = self.get_resource_holder(resource)

if holder and holder not in visited:

queue.append(holder)

return False

Distributed Deadlock Detection

For distributed systems, implement edge-chasing algorithms:

class DistributedDeadlockDetector:

def __init__(self, node_id):

self.node_id = node_id

self.local_wait_graph = {}

self.probe_messages = {}

def initiate_detection(self, blocked_process):

# Send probe message to detect distributed deadlock

probe = {

'initiator': self.node_id,

'blocked_process': blocked_process,

'path': [self.node_id]

}

# Send probe to nodes where dependencies exist

for dependency in self.get_external_dependencies(blocked_process):

self.send_probe_message(dependency.node, probe)

def handle_probe_message(self, probe):

current_process = probe['blocked_process']

# Check if probe returned to initiator

if probe['initiator'] == self.node_id:

# Deadlock detected

return self.handle_deadlock_detection(probe['path'])

# Forward probe if process is blocked

if self.is_process_blocked(current_process):

blocking_resource = self.get_blocking_resource(current_process)

holder_node = self.get_resource_holder_node(blocking_resource)

probe['path'].append(self.node_id)

self.send_probe_message(holder_node, probe)

def send_probe_message(self, target_node, probe):

# Simulate network message sending

message = {

'type': 'DEADLOCK_PROBE',

'probe': probe,

'timestamp': time.time()

}

# Network layer would handle actual transmission

pass

Performance Analysis and Comparison

Algorithm Complexity Comparison

| Algorithm | Time Complexity | Space Complexity | Best Use Case |

|---|---|---|---|

| Resource Allocation Graph | O(V + E) | O(V + E) | Single-instance resources |

| Wait-For Graph | O(V²) | O(V²) | Process-centric detection |

| Banker’s Algorithm | O(V × R²) | O(V × R) | Multi-instance resources |

| Edge-Chasing | O(V × E) | O(V + E) | Distributed systems |

Detection Frequency Strategies

Periodic Detection

- Fixed interval checking (e.g., every 10 seconds)

- Low overhead but potential delay in detection

- Suitable for systems with predictable workloads

Event-Driven Detection

- Triggered by resource allocation requests

- Immediate detection but higher overhead

- Optimal for interactive systems

Hybrid Approach

- Combines periodic and event-driven methods

- Adaptive frequency based on system load

- Best balance between overhead and responsiveness

Real-World Implementation Considerations

Database Deadlock Detection

Modern database systems implement sophisticated deadlock detection:

-- PostgreSQL deadlock detection example

BEGIN;

SET deadlock_timeout = '1s';

-- Transaction 1

LOCK TABLE accounts IN SHARE MODE;

UPDATE accounts SET balance = balance - 100 WHERE id = 1;

-- This may trigger deadlock detection

UPDATE accounts SET balance = balance + 100 WHERE id = 2;

COMMIT;

Operating System Integration

Integration points with OS kernel:

- Process Control Blocks (PCB): Track resource dependencies

- Resource Descriptors: Maintain allocation state

- Scheduler Integration: Coordinate with process scheduling

- Memory Management: Handle rollback and checkpointing

Best Practices and Guidelines

Design Principles

- Minimize Detection Overhead: Use incremental updates and smart triggers

- Fast Recovery: Implement efficient rollback mechanisms

- Fairness: Avoid repeatedly selecting the same victim process

- System Stability: Ensure recovery doesn’t introduce new deadlocks

Implementation Tips

- Use priority-based victim selection to minimize system impact

- Implement comprehensive logging for deadlock analysis

- Consider timeout-based detection for real-time systems

- Test recovery mechanisms under various load conditions

Conclusion

Graph-based deadlock detection and recovery algorithms provide robust solutions for maintaining system stability in multi-process environments. The choice between different approaches depends on specific system requirements including resource types, performance constraints, and recovery time objectives.

Modern implementations benefit from combining multiple techniques: using resource allocation graphs for basic detection, incremental updates for performance optimization, and intelligent victim selection for efficient recovery. Understanding these algorithms enables developers to build resilient systems that gracefully handle resource contention scenarios.

The key to successful deadlock management lies in balancing detection frequency, recovery speed, and system overhead while maintaining application correctness and user experience quality.

- Introduction to Deadlock Detection and Recovery

- Understanding Deadlock Fundamentals

- Graph-Based Detection Algorithms

- Deadlock Detection Implementation Examples

- Deadlock Recovery Strategies

- Advanced Detection Optimizations

- Performance Analysis and Comparison

- Real-World Implementation Considerations

- Best Practices and Guidelines

- Conclusion