Zero-downtime migration is a hard requirement for applications where even a few seconds of unavailability can cause significant business losses. This guide covers techniques for moving between systems, databases, and infrastructure without interrupting service: blue-green deployment, replication-based database migration, rolling updates, canary releases, dual writes, and automated rollback.

Understanding Zero-Downtime Migration

Zero-downtime migration refers to the process of moving applications, databases, or entire systems from one environment to another while maintaining continuous service availability. Unlike traditional maintenance windows that require scheduled downtime, these techniques ensure uninterrupted operation throughout the migration process.

Key Principles

- Redundancy: Maintaining parallel systems during transition

- Graceful Degradation: Ensuring fallback mechanisms are in place

- Data Consistency: Preserving data integrity throughout the process

- Traffic Management: Controlled routing of user requests

- Monitoring: Real-time visibility into migration progress

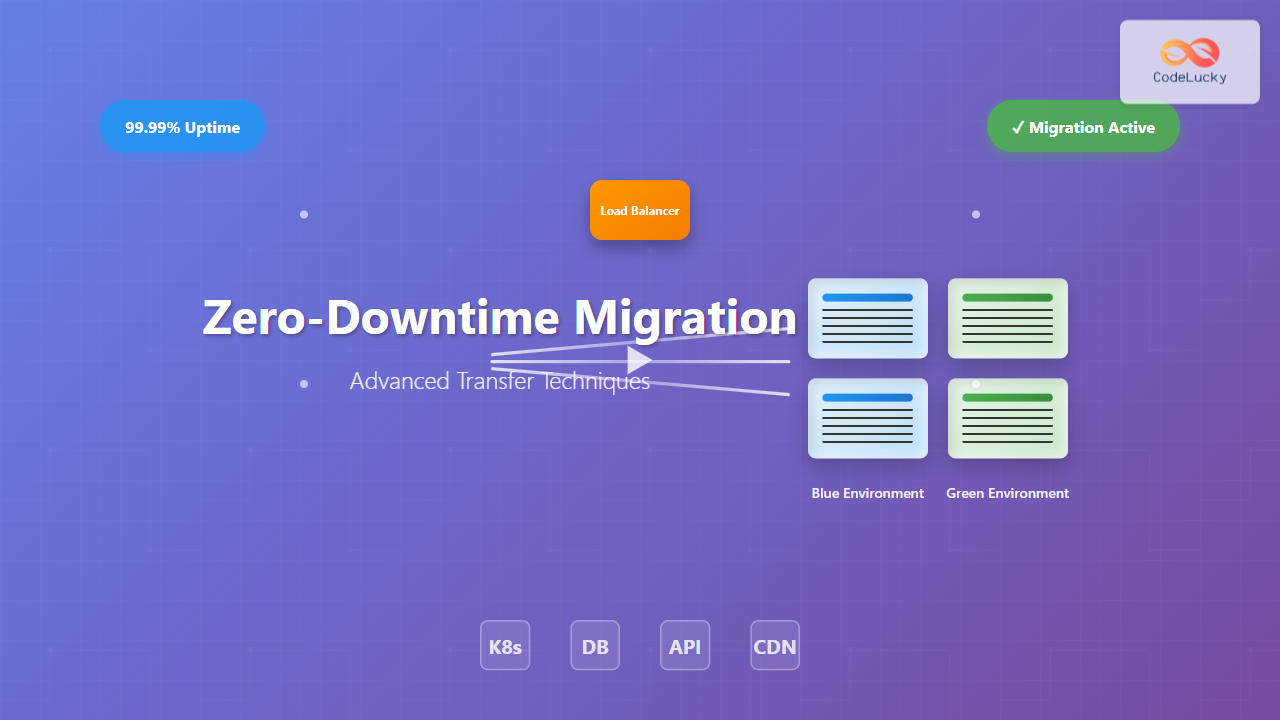

Blue-Green Deployment Strategy

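Blue-green deployment runs two identical production environments: blue serves live traffic while green receives the new release. Once green passes its health checks, traffic is shifted over to it, and blue stays available as an immediate rollback target.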

Implementation Steps

1. Environment Preparation

    # Start the green environment
    docker-compose -f docker-compose.green.yml up -d

    # Verify green environment health
    curl -f http://green-env.example.com/health || exit 1

    # Sync database to green environment
    # (one-time snapshot: writes made to production_db after the dump starts are not copied)
    pg_dump production_db | psql green_production_db

2. Traffic Switching

    # Nginx configuration for gradual traffic shift
    upstream backend {
        server blue-env.internal:8080 weight=90;
        server green-env.internal:8080 weight=10;
    }

    server {
        listen 80;

        location / {
            proxy_pass http://backend;
            proxy_set_header Host $host;
        }
    }
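The 90/10 split above is a single step of a gradual shift. The following is a minimal sketch of how the remaining steps could be planned and rendered, assuming a fixed list of green percentages. `rampSchedule`, `renderUpstream`, and the step values are hypothetical helpers for illustration, not part of Nginx.

```javascript
// Hypothetical helper: turn a list of green-traffic percentages into
// blue/green weight pairs for successive upstream configurations.
function rampSchedule(greenPercentages) {
  return greenPercentages.map((green) => {
    if (!Number.isInteger(green) || green < 0 || green > 100) {
      throw new RangeError(`invalid percentage: ${green}`);
    }
    return { blue: 100 - green, green };
  });
}

// Render one step as an Nginx upstream block. A server that should receive
// no traffic is marked "down" rather than given a zero weight.
function renderUpstream(step) {
  const line = (host, weight) =>
    weight > 0
      ? `    server ${host}:8080 weight=${weight};`
      : `    server ${host}:8080 down;`;
  return [
    'upstream backend {',
    line('blue-env.internal', step.blue),
    line('green-env.internal', step.green),
    '}',
  ].join('\n');
}

const steps = rampSchedule([10, 25, 50, 100]);
console.log(renderUpstream(steps[0]));
```

Each rendered block would be written to the configuration and applied with a reload, with a pause between steps to watch error rates before moving on.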

3. Complete Switchover

    # Update load balancer to point to green environment
    kubectl patch service app-service -p '{"spec":{"selector":{"version":"green"}}}'

    # Verify traffic is flowing to green environment
    kubectl get endpoints app-service

Database Migration Techniques

Database migrations present unique challenges due to data consistency requirements and potential schema changes.

Master-Slave Replication Migration

PostgreSQL Streaming Replication Setup

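    -- On master database
    -- (pg_basebackup can open a second connection to stream WAL, the default
    -- since PostgreSQL 10, so a connection limit of 1 is too low)
    CREATE USER replicator REPLICATION LOGIN CONNECTION LIMIT 5 ENCRYPTED PASSWORD 'secure_password';

    # Configure postgresql.conf (settings valid for PostgreSQL 9.6 to 12)
    wal_level = replica
    max_wal_senders = 3
    max_wal_size = 1GB       # checkpoint_segments was removed in 9.5
    wal_keep_segments = 8    # replaced by wal_keep_size in PostgreSQL 13

    # On slave server
    pg_basebackup -h master_host -D /var/lib/postgresql/data -U replicator -W -v -P

    # Configure recovery.conf (PostgreSQL 11 and earlier; from 12 on, create an empty
    # standby.signal file and set primary_conninfo in postgresql.conf instead)
    # The password is assumed to be supplied via ~/.pgpass
    standby_mode = 'on'
    primary_conninfo = 'host=master_host port=5432 user=replicator'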

Schema Evolution Strategy

Managing schema changes during zero-downtime migrations requires careful planning:

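    -- Phase 1: Add new column (nullable, with a default)
    -- On PostgreSQL 11+ this is a metadata-only change; older versions rewrite the table
    ALTER TABLE users ADD COLUMN email_verified BOOLEAN DEFAULT FALSE;

    -- Phase 2: Populate data gradually
    -- (run in small batches, for example by id range, to keep row locks short)
    UPDATE users SET email_verified = TRUE
    WHERE email IS NOT NULL AND created_at < NOW() - INTERVAL '30 days';

    -- Phase 3: Make column non-nullable (after full population)
    -- SET NOT NULL scans the table under an ACCESS EXCLUSIVE lock
    ALTER TABLE users ALTER COLUMN email_verified SET NOT NULL;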
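Phase 2 is safer when the backfill is split into bounded statements instead of one table-wide UPDATE. Here is a rough sketch of generating such batches, assuming `users` has an integer primary key named `id` (the original schema is not shown, so that column is an assumption) and that `backfillBatches` is a hypothetical helper.

```javascript
// Hypothetical helper: split an id range into fixed-size windows and emit
// one UPDATE per window, so each statement touches a bounded number of rows.
// Only validated integers are interpolated into the SQL text.
function backfillBatches(minId, maxId, batchSize) {
  if (![minId, maxId, batchSize].every(Number.isInteger)) {
    throw new TypeError('ids and batchSize must be integers');
  }
  if (batchSize <= 0) throw new RangeError('batchSize must be positive');

  const statements = [];
  for (let start = minId; start <= maxId; start += batchSize) {
    const end = Math.min(start + batchSize - 1, maxId);
    statements.push(
      'UPDATE users SET email_verified = TRUE ' +
      `WHERE id BETWEEN ${start} AND ${end} ` +
      "AND email IS NOT NULL AND created_at < NOW() - INTERVAL '30 days';"
    );
  }
  return statements;
}

const batches = backfillBatches(1, 2500, 1000);
console.log(batches.length); // 3 windows: 1-1000, 1001-2000, 2001-2500
batches.forEach((sql) => console.log(sql));
```

Running each statement in its own transaction, with a short pause in between, keeps lock durations and replication lag predictable.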

Rolling Updates for Containerized Applications

Rolling updates allow gradual replacement of application instances without service interruption.

Kubernetes Rolling Update Configuration

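    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: app-deployment
    spec:
      replicas: 3
      selector:              # required in apps/v1; must match the template labels
        matchLabels:
          app: myapp         # label name assumed for illustration
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
          maxSurge: 1
      template:
        metadata:
          labels:
            app: myapp
        spec:
          containers:
            - name: app
              image: myapp:v2.0
              readinessProbe:
                httpGet:
                  path: /health
                  port: 8080
                initialDelaySeconds: 10
                periodSeconds: 5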
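The `maxUnavailable` and `maxSurge` settings bound how many pods exist and how many are guaranteed ready during a rollout. A small sketch of that arithmetic follows; `rolloutBounds` and `resolveCount` are hypothetical helpers, and the percentage rounding follows the documented Kubernetes behavior (surge rounds up, unavailable rounds down).

```javascript
// Resolve an absolute count or a percentage string such as "25%".
function resolveCount(value, replicas, roundUp) {
  if (typeof value === 'number') return value;
  const fraction = (parseFloat(value) / 100) * replicas;
  return roundUp ? Math.ceil(fraction) : Math.floor(fraction);
}

function rolloutBounds(replicas, maxUnavailable, maxSurge) {
  const unavailable = resolveCount(maxUnavailable, replicas, false);
  const surge = resolveCount(maxSurge, replicas, true);
  return {
    minAvailable: replicas - unavailable, // pods that stay ready throughout
    maxTotal: replicas + surge,           // old plus new pods at the peak
  };
}

console.log(rolloutBounds(3, 1, 1));          // { minAvailable: 2, maxTotal: 4 }
console.log(rolloutBounds(10, '25%', '25%')); // { minAvailable: 8, maxTotal: 13 }
```

For the configuration above, at least two of the three replicas keep serving traffic, and the cluster needs headroom for a fourth pod while the rollout is in progress.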

Health Checks and Readiness Probes

    // Express.js health check endpoint
    app.get('/health', (req, res) => {
      // Check database connectivity
      db.ping()
        .then(() => {
          // Check external dependencies
          return Promise.all([
            checkRedisConnection(),
            checkS3Connectivity(),
            validateConfiguration()
          ]);
        })
        .then(() => {
          res.status(200).json({
            status: 'healthy',
            timestamp: new Date().toISOString(),
            version: process.env.APP_VERSION
          });
        })
        .catch(error => {
          res.status(503).json({
            status: 'unhealthy',
            error: error.message,
            timestamp: new Date().toISOString()
          });
        });
    });
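A health endpoint that waits indefinitely on a hung dependency can itself cause the readiness probe to time out. The sketch below shows one way to bound each dependency check, assuming every check is a function returning a promise. `withTimeout` and `runChecks` are hypothetical helpers, not part of Express.

```javascript
// Reject if the wrapped promise does not settle within `ms` milliseconds.
function withTimeout(promise, ms, label) {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(
      () => reject(new Error(`${label} timed out after ${ms}ms`)),
      ms
    );
  });
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

// Run named checks in parallel and report which ones failed, instead of
// stopping at the first rejection.
async function runChecks(checks, ms = 2000) {
  const names = Object.keys(checks);
  const results = await Promise.allSettled(
    names.map((name) =>
      // Promise.resolve().then(...) also captures synchronous throws
      withTimeout(Promise.resolve().then(() => checks[name]()), ms, name)
    )
  );
  const failed = names.filter((_, i) => results[i].status === 'rejected');
  return { healthy: failed.length === 0, failed };
}

// Usage with stand-in checks: "slow" exceeds the 50 ms budget.
runChecks(
  {
    fast: () => Promise.resolve(),
    slow: () => new Promise((resolve) => setTimeout(resolve, 200)),
  },
  50
).then((report) => console.log(report));
```

Inside the handler, `healthy` would select between the 200 and 503 responses, and `failed` gives operators the name of the dependency that caused the failure.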

Advanced Traffic Management

Canary Deployments

Canary deployments gradually shift traffic to new versions, allowing for risk mitigation:

    # Istio VirtualService for canary deployment
    # (subsets v1 and v2 must be defined in a matching DestinationRule)
    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: app-canary
    spec:
      hosts:                 # required field; host taken from the routes below
        - app-service
      http:
        - match:
            - headers:
                canary:
                  exact: "true"
          route:
            - destination:
                host: app-service
                subset: v2
        - route:
            - destination:
                host: app-service
                subset: v1
              weight: 90
            - destination:
                host: app-service
                subset: v2
              weight: 10
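Shifting 10% of traffic only reduces risk if something evaluates the canary before the weight is raised. A minimal sketch of such a gate follows, comparing the canary's error rate with the baseline's. `canaryVerdict` and its thresholds are illustrative assumptions rather than Istio functionality.

```javascript
// Decide whether to promote, hold, or roll back a canary.
// minRequests and maxRatio are illustrative defaults, not recommendations.
function canaryVerdict(baseline, canary, { minRequests = 500, maxRatio = 1.5 } = {}) {
  if (canary.requests < minRequests) return 'hold'; // not enough data yet

  const baseRate = baseline.errors / Math.max(baseline.requests, 1);
  const canaryRate = canary.errors / canary.requests;

  // An absolute floor keeps a near-zero baseline from turning a single
  // canary error into a rollback.
  const allowed = Math.max(baseRate * maxRatio, 0.001);
  return canaryRate > allowed ? 'rollback' : 'promote';
}

const baseline = { requests: 9000, errors: 18 };
console.log(canaryVerdict(baseline, { requests: 1000, errors: 2 }));  // promote
console.log(canaryVerdict(baseline, { requests: 1000, errors: 12 })); // rollback
console.log(canaryVerdict(baseline, { requests: 100, errors: 0 }));   // hold
```

A `promote` verdict would translate into the next pair of weights in the VirtualService, while `rollback` sets the v2 weight back to zero.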

Feature Flags Integration

    // Feature flag implementation for gradual rollout
    class FeatureManager {
      constructor() {
        this.flags = new Map();
      }

      async evaluateFlag(flagKey, userId, defaultValue = false) {
        const flag = this.flags.get(flagKey);
        if (!flag) return defaultValue;

        // User-specific targeting (checked first so that targeted users are
        // never excluded by the percentage bucket)
        if (flag.targetUsers && flag.targetUsers.includes(userId)) {
          return true;
        }

        // Percentage-based rollout (a configured 0 means "nobody")
        if (typeof flag.rolloutPercentage === 'number') {
          const userHash = this.hashUserId(String(userId));
          return (userHash % 100) < flag.rolloutPercentage;
        }

        // Fall back to the default only when `enabled` is not set at all
        return flag.enabled ?? defaultValue;
      }

      hashUserId(userId) {
        let hash = 0;
        for (let i = 0; i < userId.length; i++) {
          const char = userId.charCodeAt(i);
          hash = ((hash << 5) - hash) + char;
          hash |= 0; // Convert to 32-bit integer
        }
        return Math.abs(hash);
      }
    }
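Percentage rollouts rely on each user landing in a stable bucket, so that raising the percentage adds users without removing any. The sketch below demonstrates that property. `bucketOf` and `isEnabled` are hypothetical stand-ins for the class above, and salting the hash with the flag key is an added assumption so that different flags do not always select the same users.

```javascript
// Map (flag, user) to a stable bucket in the range 0..99.
function bucketOf(flagKey, userId) {
  const input = `${flagKey}:${userId}`;
  let hash = 0;
  for (let i = 0; i < input.length; i++) {
    // Math.imul keeps the multiplication within 32-bit integer arithmetic
    hash = (Math.imul(hash, 31) + input.charCodeAt(i)) | 0;
  }
  return Math.abs(hash) % 100;
}

function isEnabled(flagKey, userId, rolloutPercentage) {
  return bucketOf(flagKey, userId) < rolloutPercentage;
}

const users = Array.from({ length: 1000 }, (_, i) => `user-${i}`);
const at10 = users.filter((u) => isEnabled('new-checkout', u, 10));
const at50 = new Set(users.filter((u) => isEnabled('new-checkout', u, 50)));

// Everyone enabled at 10% is still enabled at 50%.
console.log(at10.every((u) => at50.has(u))); // true
```

Because the bucket depends only on the flag key and user id, the same user gets the same answer on every request and on every server.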

Data Migration Strategies

Event Sourcing for Migration

    // Event-driven migration service
    class MigrationService {
      constructor(eventStore, targetSystem) {
        this.eventStore = eventStore;
        this.targetSystem = targetSystem;
        this.checkpoint = new Map();
      }

      async migrateData(entityType, batchSize = 1000) {
        let lastProcessedId = this.checkpoint.get(entityType) || 0;

        while (true) {
          // fromId is assumed to be exclusive (events with id > fromId)
          const events = await this.eventStore.getEvents({
            entityType,
            fromId: lastProcessedId,
            limit: batchSize
          });
          if (events.length === 0) break;

          // Process events in batches
          await this.processBatch(events);

          // Update checkpoint
          lastProcessedId = events[events.length - 1].id;
          await this.updateCheckpoint(entityType, lastProcessedId);

          // Add delay to avoid overwhelming target system
          await this.sleep(100);
        }
      }

      async processBatch(events) {
        const transformedData = events.map(event =>
          this.transformEvent(event)
        );
        await this.targetSystem.batchInsert(transformedData);
      }

      // Map a source event to the target schema (identity mapping as a placeholder)
      transformEvent(event) {
        return { ...event };
      }

      // In-memory checkpoint; persist it durably so a restart can resume
      async updateCheckpoint(entityType, lastProcessedId) {
        this.checkpoint.set(entityType, lastProcessedId);
      }

      sleep(ms) {
        return new Promise(resolve => setTimeout(resolve, ms));
      }
    }
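The loop above saves its checkpoint only after a batch has been written, so a crash between those two steps causes the same batch to be processed again on restart. One way to make that harmless is for the target to treat writes as upserts keyed by event id. The sketch below uses an in-memory stand-in; `IdempotentTarget`, `batchUpsert`, and the event shape are hypothetical.

```javascript
// In-memory stand-in for a target system whose writes are keyed by event id.
class IdempotentTarget {
  constructor() {
    this.rows = new Map();
    this.writes = 0;
  }

  // Upsert: applying the same event twice leaves a single row.
  async batchUpsert(events) {
    for (const event of events) {
      this.rows.set(event.id, event.payload);
      this.writes++;
    }
  }
}

async function replayDemo() {
  const target = new IdempotentTarget();
  const batch = [
    { id: 1, payload: 'a' },
    { id: 2, payload: 'b' },
  ];

  await target.batchUpsert(batch); // first attempt; the checkpoint is never saved
  await target.batchUpsert(batch); // after a restart the same batch is applied again

  return { rows: target.rows.size, writes: target.writes };
}

replayDemo().then((result) => console.log(result)); // { rows: 2, writes: 4 }
```

With a plain insert the replay would either fail on a duplicate key or create duplicate rows, depending on the target's constraints.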

Dual-Write Strategy

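    // Dual-write implementation with eventual consistency
    class DualWriteService {
      constructor(primaryDB, secondaryDB, eventBus) {
        this.primaryDB = primaryDB;
        this.secondaryDB = secondaryDB;
        this.eventBus = eventBus;
      }

      async writeData(data) {
        try {
          // Primary write (synchronous)
          const result = await this.primaryDB.insert(data);

          // Secondary write (asynchronous)
          this.eventBus.emit('secondary-write', {
            operation: 'insert',
            data: data,
            primaryId: result.id
          });

          return result;
        } catch (error) {
          // Log error and handle gracefully
          console.error('Primary write failed:', error);
          throw error;
        }
      }

      setupSecondaryWriteHandler() {
        this.eventBus.on('secondary-write', async (event) => {
          try {
            await this.secondaryDB.insert(event.data);
            await this.logSuccessfulSync(event.primaryId);
          } catch (error) {
            await this.scheduleRetry(event);
          }
        });
      }

      // Placeholder hooks: replace with a durable sync log and retry queue,
      // since an in-process event bus loses pending writes on a crash
      async logSuccessfulSync(primaryId) {
        console.log('Secondary write synced:', primaryId);
      }

      async scheduleRetry(event, delayMs = 1000) {
        const attempt = (event.attempt || 0) + 1;
        if (attempt > 5) {
          console.error('Giving up on secondary write:', event.primaryId);
          return;
        }
        setTimeout(() => this.eventBus.emit('secondary-write', { ...event, attempt }), delayMs);
      }
    }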
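Because the secondary write is asynchronous and can fail, the two stores can drift apart, and a periodic reconciliation pass is a common complement to dual writes. Below is a rough sketch of such a comparison over two id-to-record maps. `reconcile` is a hypothetical helper, and comparing records via `JSON.stringify` assumes both sides serialize fields in the same order.

```javascript
// Compare primary and secondary contents and report the differences.
function reconcile(primary, secondary) {
  const missing = [];    // present in primary, absent from secondary
  const mismatched = []; // present in both, but with different content

  for (const [id, record] of primary) {
    if (!secondary.has(id)) {
      missing.push(id);
    } else if (JSON.stringify(record) !== JSON.stringify(secondary.get(id))) {
      mismatched.push(id);
    }
  }

  // Present only in secondary, for example after a delete that was never replayed
  const extra = [...secondary.keys()].filter((id) => !primary.has(id));
  return { missing, mismatched, extra };
}

const primary = new Map([
  [1, { name: 'a' }],
  [2, { name: 'b' }],
  [3, { name: 'c' }],
]);
const secondary = new Map([
  [1, { name: 'a' }],
  [2, { name: 'B' }],
  [4, { name: 'd' }],
]);

console.log(reconcile(primary, secondary));
// { missing: [ 3 ], mismatched: [ 2 ], extra: [ 4 ] }
```

On real tables the same comparison would run over id ranges rather than whole datasets, and the `missing` and `mismatched` ids would be fed back into the retry path.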

Monitoring and Validation

Real-time Migration Monitoring

    // Migration monitoring dashboard
    // Assumed to be implemented elsewhere: getSourceRecordCount, getTargetRecordCount,
    // verifyApplicationHealth and validateUserExperience (each check returns { name, success, ... })
    class MigrationMonitor {
      constructor(metricsCollector) {
        this.metrics = metricsCollector;
        this.alerts = [];
      }

      async validateMigration() {
        const checks = await Promise.all([
          this.validateDataIntegrity(),
          this.checkPerformanceMetrics(),
          this.verifyApplicationHealth(),
          this.validateUserExperience()
        ]);

        return {
          overall: checks.every(check => check.success),
          details: checks,
          timestamp: new Date().toISOString()
        };
      }

      // Matching counts are a necessary check, not proof that row contents match
      async validateDataIntegrity() {
        const sourceCount = await this.getSourceRecordCount();
        const targetCount = await this.getTargetRecordCount();

        return {
          name: 'Data Integrity',
          success: sourceCount === targetCount,
          details: { sourceCount, targetCount },
          variance: Math.abs(sourceCount - targetCount)
        };
      }

      // Thresholds: response time under 500 ms and error rate under 1%
      async checkPerformanceMetrics() {
        const metrics = await this.metrics.getLatestMetrics();

        return {
          name: 'Performance',
          success: metrics.responseTime < 500 && metrics.errorRate < 0.01,
          details: metrics
        };
      }
    }
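The performance check above depends on a `responseTime` figure supplied by the metrics collector. Averages hide slow outliers, so a high percentile is usually the more useful input. A minimal sketch of deriving one from raw samples follows, using the nearest-rank method; `percentile` and `summarize` are hypothetical helpers and the sample values are made up for illustration.

```javascript
// Nearest-rank percentile: the smallest sample such that at least p percent
// of all samples are less than or equal to it.
function percentile(samples, p) {
  if (samples.length === 0) throw new Error('no samples');
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

function summarize(latenciesMs, errorCount) {
  return {
    p95: percentile(latenciesMs, 95),
    errorRate: errorCount / latenciesMs.length,
  };
}

const latencies = [120, 80, 95, 110, 400, 90, 85, 100, 105, 900];
console.log(summarize(latencies, 1)); // { p95: 900, errorRate: 0.1 }
```

In this sample the mean is about 209 ms, which would pass a 500 ms threshold even though one request in ten took 900 ms.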

Automated Rollback Mechanisms

    #!/bin/bash
    # Automated rollback script

    ROLLBACK_THRESHOLD_ERROR_RATE=0.05
    ROLLBACK_THRESHOLD_RESPONSE_TIME=1000

    # Defined before the threshold checks: bash must have read a function
    # definition before the function can be called
    trigger_rollback() {
        REASON=$1
        echo "Initiating rollback due to: $REASON"

        # Switch load balancer back to blue environment
        kubectl patch service app-service -p '{"spec":{"selector":{"version":"blue"}}}'

        # Send alert to operations team (before verification, so the alert
        # still goes out if the health check below fails)
        curl -X POST "http://alerts/api/notify" \
            -H "Content-Type: application/json" \
            -d "{\"message\":\"Automatic rollback triggered: $REASON\",\"severity\":\"critical\"}"

        # Wait for traffic to stabilize
        sleep 30

        # Verify rollback success
        curl -f http://app.example.com/health || exit 1
        echo "Rollback completed successfully"
    }

    # Monitor key metrics
    ERROR_RATE=$(curl -s "http://monitoring/api/error-rate" | jq -r '.value')
    RESPONSE_TIME=$(curl -s "http://monitoring/api/response-time" | jq -r '.p95')

    # Check thresholds (elif so that at most one rollback is triggered per run)
    if (( $(echo "$ERROR_RATE > $ROLLBACK_THRESHOLD_ERROR_RATE" | bc -l) )); then
        echo "Error rate exceeded threshold: $ERROR_RATE"
        trigger_rollback "high_error_rate"
    elif (( $(echo "$RESPONSE_TIME > $ROLLBACK_THRESHOLD_RESPONSE_TIME" | bc -l) )); then
        echo "Response time exceeded threshold: ${RESPONSE_TIME}ms"
        trigger_rollback "high_response_time"
    fi
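The script above acts on a single reading, so one noisy sample is enough to trigger a rollback. A common refinement is to require several consecutive breaches first. The sketch below shows that idea in isolation; `BreachCounter` and the choice of three consecutive samples are illustrative assumptions.

```javascript
// Signal a rollback only after `required` consecutive readings above the
// threshold; any reading at or below it resets the streak.
class BreachCounter {
  constructor(threshold, required = 3) {
    this.threshold = threshold;
    this.required = required;
    this.streak = 0;
  }

  // Returns true when a rollback should be triggered.
  observe(value) {
    this.streak = value > this.threshold ? this.streak + 1 : 0;
    return this.streak >= this.required;
  }
}

const errorRate = new BreachCounter(0.05, 3);
const readings = [0.02, 0.09, 0.01, 0.06, 0.07, 0.08];
const decisions = readings.map((value) => errorRate.observe(value));

console.log(decisions); // [ false, false, false, false, false, true ]
```

The isolated spike of 0.09 is ignored, while the sustained run of 0.06, 0.07, and 0.08 triggers on its third sample. The trade-off is a slower reaction: with readings every 10 seconds, detection takes at least 30 seconds.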

Best Practices and Common Pitfalls

Planning and Preparation

- Comprehensive Testing: Test migration procedures in staging environments that mirror production

- Backup Strategies: Ensure robust backup and recovery mechanisms are in place

- Dependency Mapping: Understand all system dependencies and integration points

- Communication Plans: Establish clear communication channels for stakeholders

Common Pitfalls to Avoid

- Insufficient Testing: Skipping thorough testing in production-like environments

- Database Lock Contention: Long-running migrations blocking critical operations

- Resource Underestimation: Not accounting for increased resource usage during migration

- Incomplete Rollback Plans: Lack of tested rollback procedures

- Monitoring Gaps: Insufficient visibility into migration progress and system health

Performance Optimization

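    // Optimized batch processing with backpressure

    // Minimal counting semaphore (Semaphore is not a JavaScript built-in)
    class Semaphore {
      constructor(permits) {
        this.availablePermits = permits;
        this.waiters = [];
      }

      async acquire() {
        if (this.availablePermits > 0) {
          this.availablePermits--;
          return;
        }
        await new Promise(resolve => this.waiters.push(resolve));
      }

      release() {
        const next = this.waiters.shift();
        if (next) next(); // hand the permit straight to the next waiter
        else this.availablePermits++;
      }
    }

    class OptimizedMigrator {
      constructor(options = {}) {
        this.batchSize = options.batchSize || 1000;
        this.maxConcurrency = options.maxConcurrency || 5;
        this.delayBetweenBatches = options.delay || 100;
      }

      async migrateWithBackpressure(dataSource, processor) {
        const semaphore = new Semaphore(this.maxConcurrency);
        const inFlight = new Set();
        let processedCount = 0;

        while (true) {
          const batch = await dataSource.getNextBatch(this.batchSize);
          if (batch.length === 0) break;

          await semaphore.acquire();
          const task = processor(batch)
            .then(() => {
              processedCount += batch.length;
              console.log(`Processed ${processedCount} records`);
            })
            .catch(error => {
              console.error('Batch processing failed:', error);
            })
            .finally(() => {
              semaphore.release();
              inFlight.delete(task);
            });
          inFlight.add(task);

          // Implement backpressure
          if (semaphore.availablePermits === 0) {
            await new Promise(resolve => setTimeout(resolve, this.delayBetweenBatches));
          }
        }

        // Wait for batches that are still running before returning
        await Promise.all(inFlight);
        return processedCount;
      }
    }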
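The migrator above uses a fixed batch size. When the target's capacity varies during the migration, the batch size can be adjusted from observed latency instead. The following sketch uses additive increase and multiplicative decrease, a technique borrowed from congestion control and not something the original code does. `nextBatchSize` and all its default values are hypothetical.

```javascript
// Grow the batch by a fixed step while the target keeps up, and halve it
// as soon as a batch takes longer than the latency target.
function nextBatchSize(
  current,
  latencyMs,
  { targetMs = 200, step = 100, min = 100, max = 5000 } = {}
) {
  const proposed = latencyMs > targetMs ? Math.floor(current / 2) : current + step;
  return Math.min(max, Math.max(min, proposed));
}

let size = 1000;
const observedLatencies = [150, 180, 450, 120];
const sizes = observedLatencies.map((ms) => {
  size = nextBatchSize(size, ms);
  return size;
});

console.log(sizes); // [ 1100, 1200, 600, 700 ]
```

Halving on a slow batch backs off quickly when the target is under pressure, while the small additive step probes for spare capacity without overshooting.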

Zero-downtime migration depends on combining these techniques rather than on any single one. Blue-green deployments and rolling updates handle the application tier; replication, dual writes, and phased schema changes handle the data tier; canary routing and feature flags limit how many users each step can affect. Success requires careful planning, comprehensive testing, robust monitoring, and rollback procedures that are well defined and rehearsed before they are needed.