In the world of database management, keeping your database clean, efficient, and optimized is crucial for maintaining peak performance. One powerful tool in your SQL arsenal for achieving this is the VACUUM command. This article will dive deep into the VACUUM command, exploring its functionality, benefits, and practical applications in real-world scenarios.

What is the VACUUM Command?

The VACUUM command is a maintenance operation in SQL databases, primarily used in PostgreSQL, that helps reclaim storage occupied by dead tuples and makes it available for reuse. It's an essential tool for database administrators to optimize storage space and improve query performance.

🔍 Fun Fact: The term "vacuum" in database context is analogous to vacuuming your carpet – it cleans up the mess and makes everything run more smoothly!

Why is VACUUM Important?

- Space Reclamation: VACUUM frees up space occupied by dead tuples (rows that have been deleted or updated).

- Performance Optimization: It updates statistics used by the query planner, leading to more efficient query execution plans.

- Prevents Transaction ID Wraparound: Regular VACUUMing helps avoid the critical issue of transaction ID wraparound.

How VACUUM Works

When you execute a VACUUM command, it performs several operations:

- Scans the table for dead tuples

- Removes dead tuples and compacts the table

- Updates the visibility map

- Updates the free space map

- Updates statistics for the query planner

Let's look at a simple example to understand this better:

CREATE TABLE employees (

id SERIAL PRIMARY KEY,

name VARCHAR(100),

department VARCHAR(50),

salary INTEGER

);

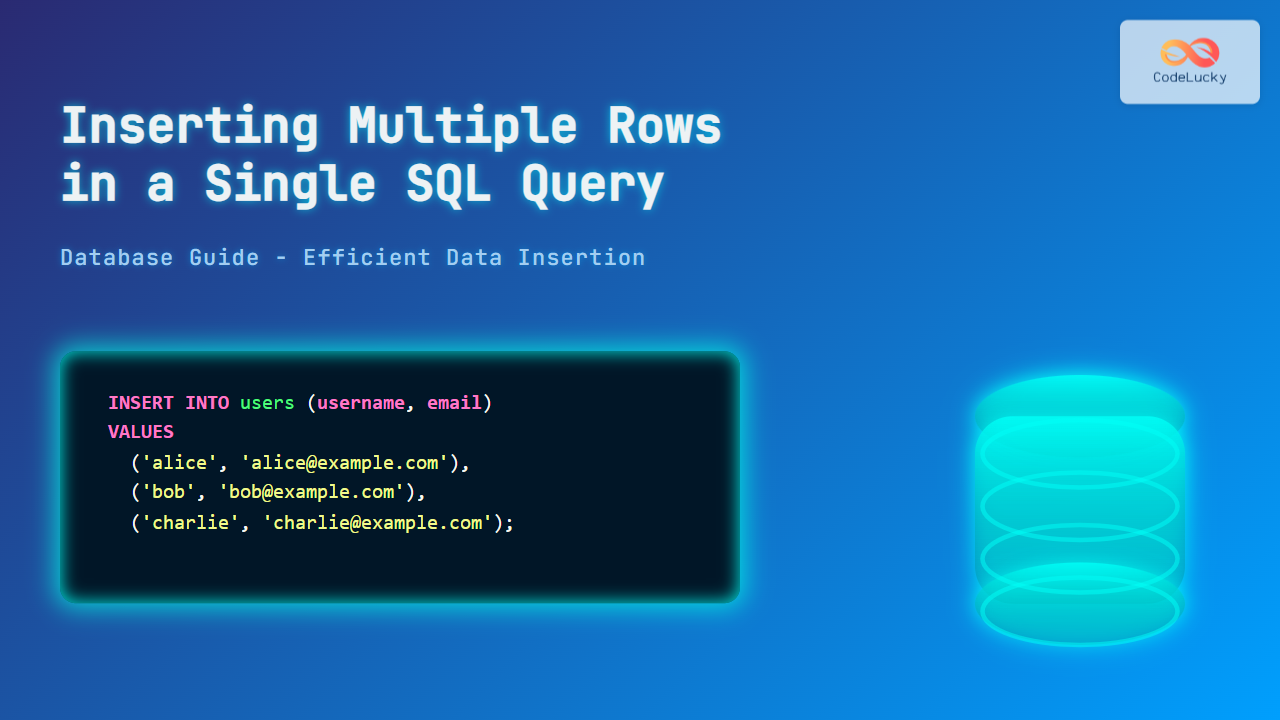

INSERT INTO employees (name, department, salary) VALUES

('John Doe', 'IT', 75000),

('Jane Smith', 'HR', 65000),

('Mike Johnson', 'Sales', 80000),

('Emily Brown', 'Marketing', 70000);

SELECT * FROM employees;

This will create and populate a table that looks like this:

| id | name | department | salary |

|---|---|---|---|

| 1 | John Doe | IT | 75000 |

| 2 | Jane Smith | HR | 65000 |

| 3 | Mike Johnson | Sales | 80000 |

| 4 | Emily Brown | Marketing | 70000 |

Now, let's delete a row and update another:

DELETE FROM employees WHERE id = 2;

UPDATE employees SET salary = 85000 WHERE id = 3;

SELECT * FROM employees;

The table now looks like this:

| id | name | department | salary |

|---|---|---|---|

| 1 | John Doe | IT | 75000 |

| 3 | Mike Johnson | Sales | 85000 |

| 4 | Emily Brown | Marketing | 70000 |

However, the space occupied by the deleted row (id = 2) and the old version of the updated row (id = 3) is still physically present in the table, even though it's not visible. This is where VACUUM comes in:

VACUUM employees;

After running VACUUM, the physical storage is cleaned up, freeing space for future use.

VACUUM Variants

PostgreSQL offers several variants of the VACUUM command to suit different needs:

1. Standard VACUUM

The basic VACUUM command reclaims space and updates statistics:

VACUUM employees;

2. VACUUM FULL

VACUUM FULL rewrites the entire contents of the table into a new disk file with no extra space:

VACUUM FULL employees;

⚠️ Caution: VACUUM FULL requires an exclusive lock on the table and can be very slow for large tables.

3. VACUUM ANALYZE

This variant performs a VACUUM and then updates statistics used by the query planner:

VACUUM ANALYZE employees;

4. VACUUM (VERBOSE)

This option provides detailed progress information about the VACUUM operation:

VACUUM (VERBOSE) employees;

Real-World Scenarios

Let's explore some practical scenarios where VACUUM proves invaluable:

Scenario 1: High-Volume Transaction Processing

Imagine an e-commerce platform processing thousands of orders per hour. The orders table is constantly updated with new orders, status changes, and deletions.

CREATE TABLE orders (

order_id SERIAL PRIMARY KEY,

customer_id INTEGER,

order_date TIMESTAMP,

status VARCHAR(20),

total_amount DECIMAL(10, 2)

);

-- Simulate a day of transactions

INSERT INTO orders (customer_id, order_date, status, total_amount)

SELECT

floor(random() * 1000 + 1)::int,

now() - interval '1 day' * random(),

CASE WHEN random() < 0.1 THEN 'Cancelled' ELSE 'Completed' END,

random() * 1000

FROM generate_series(1, 10000);

-- Check table statistics

SELECT pg_size_pretty(pg_total_relation_size('orders')) AS total_size,

pg_size_pretty(pg_table_size('orders')) AS table_size,

pg_size_pretty(pg_indexes_size('orders')) AS index_size;

This might output:

| total_size | table_size | index_size |

|---|---|---|

| 1296 kB | 712 kB | 584 kB |

Now, let's simulate some updates and deletions:

-- Update some orders

UPDATE orders SET status = 'Shipped' WHERE random() < 0.5;

-- Delete cancelled orders

DELETE FROM orders WHERE status = 'Cancelled';

-- Check statistics again

SELECT pg_size_pretty(pg_total_relation_size('orders')) AS total_size,

pg_size_pretty(pg_table_size('orders')) AS table_size,

pg_size_pretty(pg_indexes_size('orders')) AS index_size;

You might see something like:

| total_size | table_size | index_size |

|---|---|---|

| 1456 kB | 872 kB | 584 kB |

Notice how the table size has increased despite deleting rows. This is where VACUUM comes in:

VACUUM VERBOSE orders;

After running VACUUM, check the statistics again:

SELECT pg_size_pretty(pg_total_relation_size('orders')) AS total_size,

pg_size_pretty(pg_table_size('orders')) AS table_size,

pg_size_pretty(pg_indexes_size('orders')) AS index_size;

You should see a reduction in size:

| total_size | table_size | index_size |

|---|---|---|

| 1304 kB | 720 kB | 584 kB |

Scenario 2: Data Warehousing

In a data warehousing scenario, you might have large fact tables that are periodically updated with new data, while old data is archived or deleted.

CREATE TABLE sales_fact (

sale_id SERIAL PRIMARY KEY,

date DATE,

product_id INTEGER,

customer_id INTEGER,

quantity INTEGER,

total_price DECIMAL(10, 2)

);

-- Insert sample data

INSERT INTO sales_fact (date, product_id, customer_id, quantity, total_price)

SELECT

current_date - (random() * 365)::integer,

floor(random() * 1000 + 1)::int,

floor(random() * 10000 + 1)::int,

floor(random() * 10 + 1)::int,

(random() * 1000)::decimal(10, 2)

FROM generate_series(1, 1000000);

-- Check initial size

SELECT pg_size_pretty(pg_total_relation_size('sales_fact')) AS total_size;

This might output:

| total_size |

|---|

| 124 MB |

Now, let's simulate a data archiving process:

-- Delete old data

DELETE FROM sales_fact WHERE date < current_date - interval '1 year';

-- Check size after deletion

SELECT pg_size_pretty(pg_total_relation_size('sales_fact')) AS total_size;

You might see:

| total_size |

|---|

| 124 MB |

The size hasn't changed because the space occupied by deleted rows hasn't been reclaimed. Let's use VACUUM FULL:

VACUUM FULL VERBOSE sales_fact;

-- Check size after VACUUM FULL

SELECT pg_size_pretty(pg_total_relation_size('sales_fact')) AS total_size;

Now you should see a significant reduction:

| total_size |

|---|

| 92 MB |

Best Practices for Using VACUUM

-

Regular Maintenance: Schedule regular VACUUM operations, especially for tables with frequent updates or deletes.

-

Autovacuum: Enable and configure PostgreSQL's autovacuum feature for automatic maintenance.

-

VACUUM vs. VACUUM FULL: Use standard VACUUM for routine maintenance and VACUUM FULL only when necessary, as it's more resource-intensive.

-

Monitoring: Regularly monitor your database's bloat levels to determine when VACUUM is needed.

-

Off-Peak Hours: Schedule intensive VACUUM operations during off-peak hours to minimize impact on performance.

-

ANALYZE: Combine VACUUM with ANALYZE to update statistics for the query planner.

-

Partitioning: For very large tables, consider partitioning to make VACUUM operations more manageable.

Conclusion

The VACUUM command is a powerful tool in the SQL database administrator's toolkit, especially for PostgreSQL users. By reclaiming space, updating statistics, and preventing transaction ID wraparound, VACUUM plays a crucial role in maintaining database health and performance.

Remember, while VACUUM is essential, it's not a magic solution for all database performance issues. It should be part of a comprehensive database maintenance strategy that includes proper indexing, query optimization, and regular monitoring.

By understanding and effectively using the VACUUM command, you can ensure your databases remain efficient, performant, and ready to handle your application's data management needs.

🔧 Pro Tip: Always test VACUUM operations in a staging environment before applying them to production databases, especially when dealing with large tables or using VACUUM FULL.