Scalability planning is how technical teams make sure a system can absorb growing user demand without sacrificing reliability, speed, or cost control. It means designing infrastructure with foresight so that capacity can expand when needed rather than after an outage. This article covers scalability planning strategies, examples, and architectural practices to help developers, DevOps engineers, and technical managers build systems that are ready to grow.

Understanding Scalability: What It Really Means

Scalability is the capability of a system to handle a growing amount of work or its potential to accommodate growth gracefully. It is not just about adding more resources but doing so efficiently, cost-effectively, and without notable performance degradation. Scalability comes in two primary forms:

- Vertical Scaling (Scaling Up): Increasing capacity by adding more power to existing machines — for example, upgrading CPU, memory, or storage.

- Horizontal Scaling (Scaling Out): Adding more machines or instances to distribute the load.

Example: Vertical vs Horizontal Scaling

Imagine a web application experiencing high traffic. If it is vertically scaled, the upgrade involves boosting the CPU or RAM of the existing server. With horizontal scaling, additional servers or containers are launched to share the workload.
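As a rough illustration of the horizontal case, the sketch below estimates how many identical servers a deployment would need to serve a given peak load. The function name, the per-server throughput, and the headroom factor are hypothetical values chosen for the example, not measurements from a real system.

```python
import math


def instances_needed(peak_rps: float, rps_per_instance: float, headroom: float = 0.25) -> int:
    """Estimate how many identical instances are needed to serve peak_rps.

    headroom reserves spare capacity on each instance
    (0.25 means only 75% of an instance's throughput is planned for).
    """
    if rps_per_instance <= 0 or not 0 <= headroom < 1:
        raise ValueError("rps_per_instance must be > 0 and headroom in [0, 1)")
    usable_rps = rps_per_instance * (1 - headroom)
    # Always keep at least one instance, even with no traffic.
    return max(1, math.ceil(peak_rps / usable_rps))


# Hypothetical numbers: 1,200 requests/s at peak, 200 requests/s per server.
print(instances_needed(1200, 200))  # 8
```

With vertical scaling the equivalent question is whether a single larger machine can reach the required throughput at all, which is why it eventually runs into a hardware ceiling.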

Why Scalability Planning is Crucial

Failing to plan for scalability can lead to costly downtime, poor user experience, and excessive infrastructure spend. Key benefits of effective scalability planning include:

- Performance consistency: Maintain response times under peak loads.

- Cost efficiency: Avoid provisioning capacity long before it is needed, and scale in line with actual demand.

- High availability & fault tolerance: Minimize service disruption through distributed systems.

- Future-proofing: Prepare your system architecture to adapt to evolving requirements.

Core Concepts in Scalability Planning

Load Balancing

Distributing incoming network traffic across multiple servers prevents any single server from becoming a bottleneck. Load balancers route requests intelligently based on methods such as round-robin or least connections.
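The two selection methods mentioned above can be sketched in a few lines of Python. This is a minimal in-memory illustration, not how any particular load balancer is implemented; the class names and server names are hypothetical.

```python
import itertools


class RoundRobinBalancer:
    """Hands out servers in a fixed, repeating order."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(list(servers))

    def pick(self):
        return next(self._cycle)


class LeastConnectionsBalancer:
    """Picks the server that currently has the fewest active connections."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # Ties are broken by the order in which servers were registered.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        self.active[server] -= 1


rr = RoundRobinBalancer(["app-1", "app-2", "app-3"])
print([rr.pick() for _ in range(4)])  # ['app-1', 'app-2', 'app-3', 'app-1']

lc = LeastConnectionsBalancer(["app-1", "app-2"])
lc.acquire()            # app-1 now has 1 connection
lc.acquire()            # app-2 now has 1 connection
lc.release("app-1")     # app-1 is idle again
print(lc.acquire())     # app-1
```

Round-robin works well when requests cost roughly the same; least connections tends to behave better when some requests are much slower than others.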

Caching Strategies

Cache frequently requested data at various layers — client, edge (CDN), application, and database — to reduce load and accelerate response times.
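At the application layer, one common pattern is cache-aside with a time-to-live: look in the cache first, and only call the slower backend on a miss or after the entry expires. The sketch below is a minimal single-process version under that assumption; `TTLCache`, `get_or_load`, and `load_profile` are hypothetical names, and a production setup would more likely use a shared cache such as Redis or Memcached.

```python
import time


class TTLCache:
    """Cache-aside helper: entries expire ttl_seconds after they are loaded."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock      # injectable so expiry can be tested without sleeping
        self._store = {}        # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]     # cache hit: the backend is not touched
        value = loader(key)     # cache miss or expired entry: go to the source
        self._store[key] = (now + self.ttl, value)
        return value


def load_profile(user_id):
    """Stand-in for a slow database or API call (hypothetical)."""
    return {"id": user_id, "name": f"user-{user_id}"}


cache = TTLCache(ttl_seconds=60)
print(cache.get_or_load(42, load_profile))  # loaded from the backend
print(cache.get_or_load(42, load_profile))  # served from the cache
```

The TTL is the main tuning knob: a longer value removes more load from the backend but serves staler data.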

Database Scalability

Databases often pose significant scaling challenges. Strategies include:

- Read Replicas: Route read queries to replicas to reduce load on the primary database.

- Sharding: Partition data across multiple database servers by key ranges or hashing.

- NoSQL Databases: Use horizontally scalable NoSQL options for high write throughput and flexible schemas.
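The first two strategies come down to routing decisions in the data access layer. The sketch below shows hash-based shard selection and a simple read/write split; the function and class names, key format, and server names are hypothetical, and real drivers or proxies usually handle this for you.

```python
import hashlib
import itertools


def shard_for(key: str, shard_count: int) -> int:
    """Map a key to a shard index using a stable hash.

    hashlib is used instead of the built-in hash() because hash() is
    randomized per process for strings and would route inconsistently.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


class ReplicaRouter:
    """Sends writes to the primary and spreads reads across replicas."""

    def __init__(self, primary, replicas):
        self.primary = primary
        self._replicas = itertools.cycle(replicas) if replicas else None

    def route(self, is_write: bool):
        if is_write or self._replicas is None:
            return self.primary
        return next(self._replicas)


print(shard_for("user:42", shard_count=4))   # a stable index between 0 and 3

router = ReplicaRouter("db-primary", ["db-replica-1", "db-replica-2"])
print(router.route(is_write=True))    # db-primary
print(router.route(is_write=False))   # db-replica-1
print(router.route(is_write=False))   # db-replica-2
```

Note that plain modulo hashing remaps most keys when `shard_count` changes; schemes such as consistent hashing exist to limit that reshuffling. Reads served from replicas may also lag slightly behind the primary.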

Planning Your Infrastructure Scalability: Step-by-Step

- Assess current and projected load: Use monitoring tools to analyze traffic patterns, peak loads, and usage growth trends.

- Choose scaling strategies: Decide when and how to scale vertically or horizontally based on application architecture.

- Design modular architecture: Microservices and containerization enable independent scaling of components.

- Implement automation: Leverage Infrastructure as Code (IaC) and auto-scaling policies for on-demand resource provisioning.

- Test scalability: Conduct load and stress tests to identify bottlenecks and optimize the system.
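For the first step, a simple compound-growth projection is often enough to see roughly when current capacity will run out. The sketch below assumes a constant monthly growth rate, which real traffic rarely follows exactly; the function names and all numbers are hypothetical.

```python
def projected_peak(current_peak: float, monthly_growth: float, months: int) -> float:
    """Compound the current peak load forward at a fixed monthly growth rate."""
    return current_peak * (1 + monthly_growth) ** months


def months_until_exceeded(current_peak, monthly_growth, capacity, horizon=120):
    """Return the first month in which projected peak load exceeds capacity.

    Returns None if that does not happen within `horizon` months.
    """
    load = current_peak
    for month in range(horizon + 1):
        if load > capacity:
            return month
        load *= 1 + monthly_growth
    return None


# Hypothetical inputs: 500 requests/s peak today, growing 10% per month,
# with infrastructure that tops out at 1,000 requests/s.
print(round(projected_peak(500, 0.10, 12)))      # 1569
print(months_until_exceeded(500, 0.10, 1000))    # 8
```

An estimate like this gives the later steps a deadline: the scaling strategy, automation, and load tests need to be in place before the projected month arrives.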

Real-World Example: Auto-Scaling with Cloud Providers

Most cloud providers offer robust auto-scaling services that dynamically adjust compute resources based on predefined metrics such as CPU utilization or request count. For instance, an e-commerce site can automatically add more instances during holiday sales and scale down during off-peak times to optimize cost.

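    # Illustrative auto-scaling policy for an AWS Auto Scaling group
    # (simplified pseudo-config, not literal AWS template syntax)
    AutoScalingGroupName: webServerGroup
    MinSize: 2
    MaxSize: 10
    DesiredCapacity: 3
    ScalingPolicies:
      - PolicyName: scaleOutPolicy
        Metric: CPUUtilization
        Threshold: 70%            # add capacity when CPU rises above this
        Adjustment: +1 instance
      - PolicyName: scaleInPolicy
        Metric: CPUUtilization
        Threshold: 30%            # remove capacity when CPU falls below this
        Adjustment: -1 instance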
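The threshold logic in the policy snippet above can be sketched as a small Python function. This only models the decision itself, using the same limits (2 to 10 instances, scale out above 70% CPU, scale in below 30%); it makes no calls to a cloud API, and real auto-scaling services add details such as evaluation periods and cooldowns that are left out here. The function name and the CPU readings are hypothetical.

```python
def next_capacity(current, cpu_percent, min_size=2, max_size=10,
                  scale_out_at=70.0, scale_in_at=30.0):
    """Return the instance count after applying one threshold-based scaling step."""
    if cpu_percent > scale_out_at:
        target = current + 1      # scaleOutPolicy: add one instance
    elif cpu_percent < scale_in_at:
        target = current - 1      # scaleInPolicy: remove one instance
    else:
        target = current          # inside the comfortable band: no change
    # Never leave the configured MinSize..MaxSize range.
    return max(min_size, min(max_size, target))


capacity = 3  # DesiredCapacity from the snippet
history = []
for cpu in [85, 90, 50, 20, 10, 10, 10]:  # hypothetical average CPU readings
    capacity = next_capacity(capacity, cpu)
    history.append(capacity)

print(history)  # [4, 5, 5, 4, 3, 2, 2]
```

The gap between the two thresholds matters: if they sit too close together, the group can oscillate between adding and removing instances.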

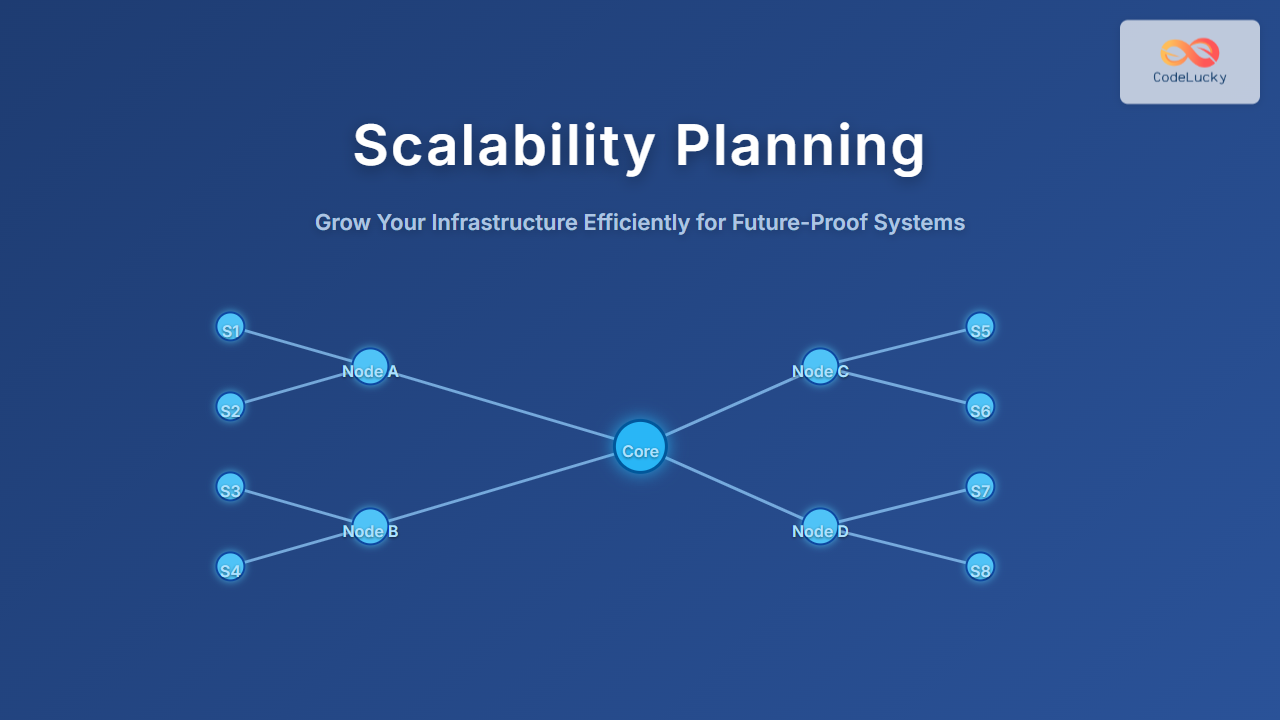

Visual Architecture: Scalable Microservices with Kubernetes

In this architecture, each microservice scales independently according to its own demand. Kubernetes’ Horizontal Pod Autoscaler adjusts the number of pods per service, and a service mesh handles routing between services.
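The Kubernetes documentation describes the Horizontal Pod Autoscaler's core calculation as scaling the replica count by the ratio of the observed metric to its target, rounded up, and skipping changes when that ratio is within a small tolerance of 1.0 (0.1 by default). The Python sketch below reproduces only that arithmetic as an aid to capacity planning; it is not the controller's implementation, and the function name and replica limits are hypothetical.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Approximate the HPA rule: ceil(current_replicas * current / target)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas   # close enough to the target: leave as is
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# A service running 4 pods with a 60% CPU utilization target:
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
print(desired_replicas(4, current_metric=30, target_metric=60))  # 2
print(desired_replicas(4, current_metric=63, target_metric=60))  # 4 (within tolerance)
```

Because each microservice has its own target and limits, a spike in one service scales only that service's pods.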

Interactive Consideration: Load Testing Tools

To validate scalability plans, load testing tools such as Locust or Apache JMeter can simulate realistic user traffic patterns. They report performance metrics and charts while a test runs, which helps identify bottlenecks before production deployment.
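To show what such tools measure, the sketch below runs a function concurrently and reports latency percentiles using only the Python standard library. It is a toy stand-in, not the Locust or JMeter API; `run_load_test` and `fake_request` are hypothetical names, and `fake_request` simply sleeps instead of calling a real service.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def run_load_test(request_fn, total_requests, concurrency):
    """Call request_fn total_requests times across worker threads and time each call."""

    def timed_call(_):
        start = time.perf_counter()
        request_fn()
        return time.perf_counter() - start

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = sorted(pool.map(timed_call, range(total_requests)))

    # n=100 yields 99 cut points; index 49 is the median, index 94 is p95.
    cuts = statistics.quantiles(latencies, n=100, method="inclusive")
    return {
        "requests": len(latencies),
        "p50": cuts[49],
        "p95": cuts[94],
        "max": latencies[-1],
    }


def fake_request():
    """Stand-in for an HTTP call to the system under test (hypothetical)."""
    time.sleep(0.005)


report = run_load_test(fake_request, total_requests=40, concurrency=8)
print(report)
```

Tail percentiles such as p95 are usually more informative than the average, because bottlenecks tend to appear first as a growing gap between median and tail latency.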

Common Pitfalls and How to Avoid Them

- Over-reliance on vertical scaling: Larger machines become disproportionately expensive, and a single server eventually hits a hardware ceiling.

- Ignoring monitoring: Without real-time monitoring and alerting, scaling decisions can be delayed or misguided.

- Monolithic architecture: Limits scaling flexibility and increases system fragility.

- Not testing scaling boundaries: Leads to unexpected failures under real load.

Summary & Best Practices

- Plan scalability from the start, integrating it into system architecture design.

- Favor horizontal scaling combined with robust load balancing and caching.

- Utilize cloud-native auto-scaling tools to optimize resource utilization.

- Employ continuous monitoring, load testing, and metrics analysis.

- Adopt modular design like microservices for independent component growth.

With deliberate scalability planning, engineering teams can build resilient, high-performance applications that meet growing user demand efficiently and cost-effectively.