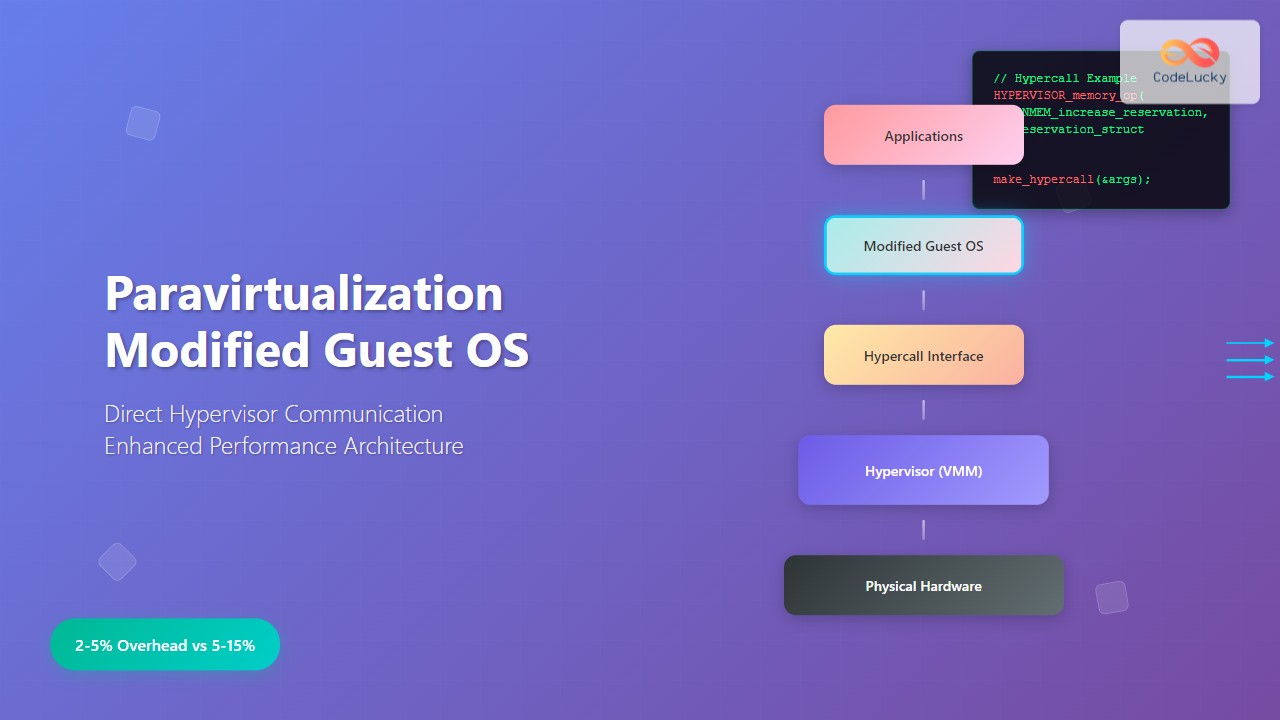

What is Paravirtualization?

Paravirtualization is a virtualization technique where the guest operating system is modified to be aware that it’s running in a virtualized environment. Unlike full virtualization, where the guest OS remains unchanged and unaware of the hypervisor, paravirtualization requires explicit modifications to the guest kernel to communicate directly with the hypervisor through well-defined APIs.

This approach eliminates the need for complex instruction translation and trap-and-emulate mechanisms, resulting in near-native performance while maintaining isolation between virtual machines.

Core Architecture Components

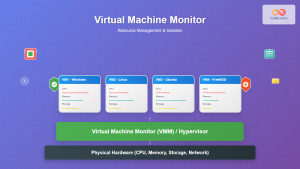

Hypervisor Layer

The hypervisor (also called Virtual Machine Monitor) sits directly on the hardware and manages multiple guest operating systems. In paravirtualization, it exposes a clean, well-defined interface that guest OSes can call directly.

// Example hypercall interface

struct hypercall_args {

unsigned long op;

unsigned long arg1;

unsigned long arg2;

unsigned long arg3;

};

long make_hypercall(struct hypercall_args *args) {

long result;

asm volatile (

"movq %1, %%rax\n"

"movq %2, %%rdi\n"

"vmcall\n"

"movq %%rax, %0"

: "=m" (result)

: "m" (args->op), "m" (args)

: "rax", "rdi"

);

return result;

}Modified Guest Kernel

The guest operating system kernel is modified to replace privileged instructions with hypercalls. These modifications include:

- Memory management: Page table operations

- Interrupt handling: Virtual interrupt controllers

- I/O operations: Device access through hypervisor

- CPU scheduling: Yielding control back to hypervisor

Paravirtualization vs Full Virtualization

| Aspect | Full Virtualization | Paravirtualization |

|---|---|---|

| Guest OS Modification | None required | Kernel modifications needed |

| Performance Overhead | 5-15% typical | 2-5% typical |

| Hardware Requirements | VT-x/AMD-V support | Standard x86 processors |

| Guest OS Compatibility | Any OS | Modified versions only |

| Implementation Complexity | High (trap-and-emulate) | Moderate (API design) |

Key Implementation Techniques

Hypercalls

Hypercalls are the primary mechanism for guest-to-hypervisor communication. They replace privileged instructions that would normally trap to the hypervisor.

// Memory mapping hypercall example

int pv_map_page(unsigned long guest_pfn, unsigned long machine_pfn) {

struct hypercall_args args;

args.op = HYPERCALL_MAP_PAGE;

args.arg1 = guest_pfn;

args.arg2 = machine_pfn;

args.arg3 = PAGE_PRESENT | PAGE_WRITABLE;

return make_hypercall(&args);

}

// Usage in guest kernel

void *map_physical_memory(phys_addr_t addr, size_t size) {

unsigned long pfn = addr >> PAGE_SHIFT;

void *vaddr = get_vm_area(size);

for (int i = 0; i < (size >> PAGE_SHIFT); i++) {

pv_map_page(virt_to_pfn(vaddr + (i << PAGE_SHIFT)), pfn + i);

}

return vaddr;

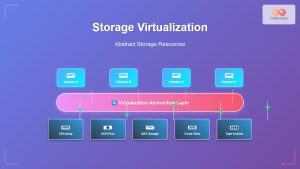

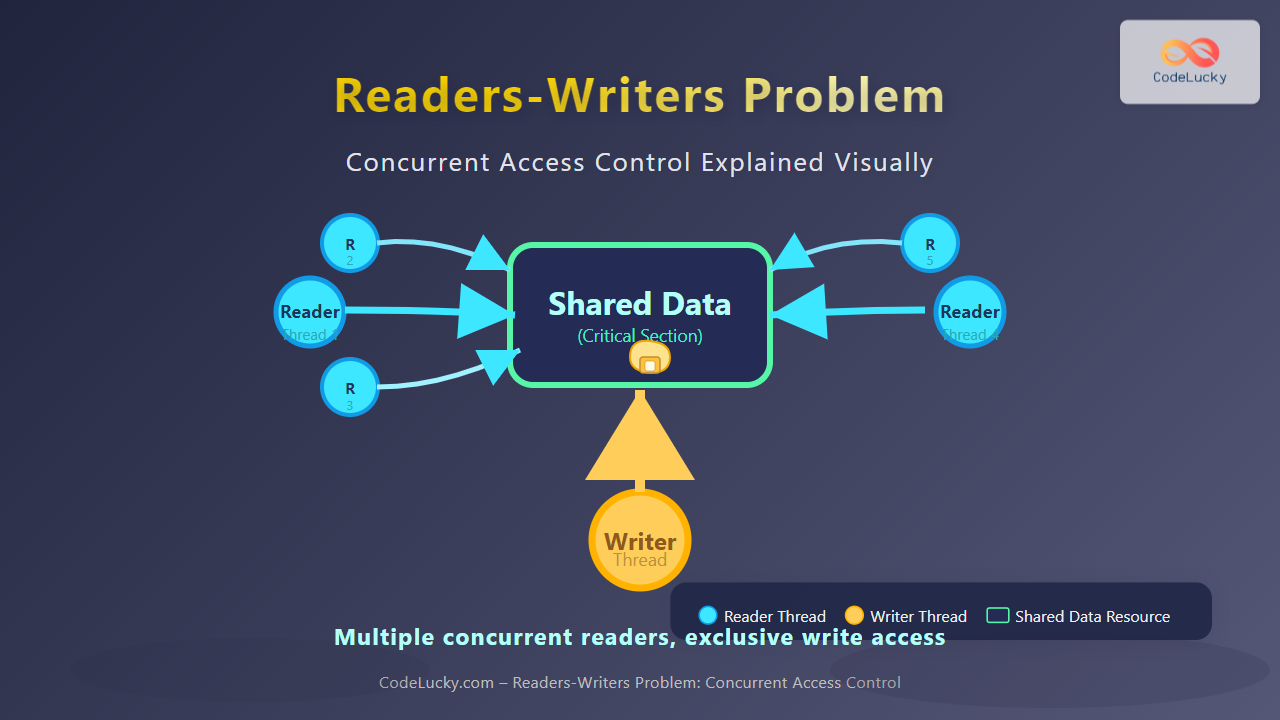

}Shared Memory Communication

Paravirtualized systems often use shared memory regions for efficient data exchange between guest and hypervisor.

// Shared info structure

struct shared_info {

struct vcpu_info vcpu_info[MAX_VCPUS];

unsigned long evtchn_pending[64];

unsigned long evtchn_mask[64];

uint64_t system_time;

uint32_t time_version;

// ... other shared data

};

// Accessing shared information

static inline uint64_t get_system_time(void) {

struct shared_info *s = hypervisor_shared_info;

uint64_t time;

uint32_t version;

do {

version = s->time_version;

rmb(); // memory barrier

time = s->system_time;

rmb();

} while (version != s->time_version || (version & 1));

return time;

}Popular Paravirtualization Implementations

Xen Hypervisor

Xen is one of the most prominent paravirtualization platforms. It uses a microkernel design where Domain 0 (dom0) has direct hardware access and manages other domains.

// Xen grant table operations

grant_ref_t grant_foreign_access(domid_t domid, unsigned long frame, int readonly) {

grant_ref_t ref = get_free_grant_reference();

struct grant_entry *entry = &grant_table[ref];

entry->frame = frame;

entry->domid = domid;

entry->flags = GTF_permit_access | (readonly ? GTF_readonly : 0);

return ref;

}

// Event channel communication

void send_event_to_domain(domid_t remote_domain, int remote_port) {

struct evtchn_send send;

send.port = remote_port;

HYPERVISOR_event_channel_op(EVTCHNOP_send, &send);

}VMware Paravirtualization

VMware implements paravirtualization through VMware Tools and paravirtualized device drivers.

// VMware balloon driver example

struct vmballoon {

struct page **pages;

unsigned int size;

unsigned int target;

};

static void vmballoon_inflate(struct vmballoon *b, unsigned int pages) {

for (int i = 0; i < pages; i++) {

struct page *page = alloc_page(GFP_KERNEL);

if (!page) break;

// Notify hypervisor about ballooned page

vmware_hypercall(VMWARE_CMD_BALLOON_LOCK,

page_to_pfn(page), 0, 0);

b->pages[b->size++] = page;

}

}Memory Management in Paravirtualization

Shadow Page Tables vs Direct Page Tables

Paravirtualization allows direct manipulation of page tables through hypercalls, eliminating the overhead of shadow page tables used in full virtualization.

// Direct page table manipulation

int pv_set_pte(pte_t *ptep, pte_t pteval) {

struct mmu_update update;

update.ptr = (unsigned long)ptep | MMU_NORMAL_PT_UPDATE;

update.val = pte_val(pteval);

return HYPERVISOR_mmu_update(&update, 1, NULL, DOMID_SELF);

}

// Batch page table updates for efficiency

void pv_set_pte_batch(struct mmu_update *updates, int count) {

int processed = 0;

while (processed < count) {

int batch_size = min(count - processed, MAX_BATCH_SIZE);

int ret = HYPERVISOR_mmu_update(updates + processed,

batch_size, NULL, DOMID_SELF);

if (ret < 0) break;

processed += batch_size;

}

}Device I/O Paravirtualization

Virtual Device Interfaces

Paravirtualized I/O uses high-level, efficient interfaces instead of emulating physical hardware devices.

// Paravirtualized network interface

struct netfront_info {

struct net_device *netdev;

struct napi_struct napi;

grant_ref_t *grant_tx_ref;

grant_ref_t *grant_rx_ref;

unsigned int evtchn;

unsigned int irq;

struct xen_netif_tx_ring tx;

struct xen_netif_rx_ring rx;

};

// Transmit packet using shared ring

static int xennet_start_xmit(struct sk_buff *skb, struct net_device *dev) {

struct netfront_info *np = netdev_priv(dev);

struct xen_netif_tx_request *tx;

grant_ref_t ref;

// Get next available slot

tx = RING_GET_REQUEST(&np->tx, np->tx.req_prod_pvt);

// Grant access to packet data

ref = grant_foreign_access(0, virt_to_mfn(skb->data), 1);

tx->gref = ref;

tx->size = skb->len;

tx->flags = 0;

np->tx.req_prod_pvt++;

RING_PUSH_REQUESTS(&np->tx);

// Notify backend

notify_remote_via_evtchn(np->evtchn);

return NETDEV_TX_OK;

}Performance Optimization Strategies

Batching Operations

Batching multiple operations reduces the number of expensive hypervisor transitions.

// Batch interrupt handling

void process_pending_events(void) {

struct shared_info *s = HYPERVISOR_shared_info;

struct vcpu_info *vcpu = &s->vcpu_info[smp_processor_id()];

unsigned long pending_words[64];

// Atomically read and clear pending events

memcpy(pending_words, s->evtchn_pending, sizeof(pending_words));

memset(s->evtchn_pending, 0, sizeof(s->evtchn_pending));

// Process all pending events in batch

for (int word = 0; word < 64; word++) {

unsigned long pending = pending_words[word];

int bit;

while ((bit = __ffs(pending)) != -1) {

int port = (word * 64) + bit;

handle_event_channel(port);

pending &= ~(1UL << bit);

}

}

}Lazy State Synchronization

Minimize synchronization overhead by updating shared state only when necessary.

// Lazy FPU state management

void pv_fpu_lazy_restore(void) {

if (current->flags & PF_USED_MATH) {

// FPU state already loaded

return;

}

// Defer FPU restore until actually needed

HYPERVISOR_fpu_taskswitch(1); // Disable FPU

current->flags |= PF_USED_ASYNC;

}

void pv_fpu_restore_on_demand(void) {

if (!(current->flags & PF_USED_MATH)) {

HYPERVISOR_fpu_taskswitch(0); // Enable FPU

restore_fpu_state(¤t->thread.fpu);

current->flags |= PF_USED_MATH;

}

}Security Considerations

Hypercall Validation

The hypervisor must carefully validate all hypercalls to prevent security vulnerabilities.

// Hypercall input validation

long hypercall_memory_op(int cmd, void __user *arg) {

struct xen_memory_reservation reservation;

switch (cmd) {

case XENMEM_increase_reservation:

if (copy_from_user(&reservation, arg, sizeof(reservation)))

return -EFAULT;

// Validate domain ID

if (reservation.domid != DOMID_SELF &&

!is_privileged_domain(current->domain))

return -EPERM;

// Validate extent count

if (reservation.nr_extents > MAX_RESERVATION_EXTENTS)

return -EINVAL;

return increase_reservation(&reservation);

default:

return -ENOSYS;

}

}Advantages and Limitations

Advantages

- Superior Performance: Reduced overhead compared to full virtualization

- Efficient Resource Usage: Direct communication eliminates translation layers

- Better Scalability: Lower CPU overhead allows more VMs per host

- Hardware Independence: Doesn’t require specific CPU virtualization features

Limitations

- Guest OS Modification Required: Limits OS compatibility

- Maintenance Overhead: Updates needed for new OS versions

- Vendor Lock-in: Different hypervisors use incompatible interfaces

- Security Surface: Hypercall interface increases attack surface

Future Trends and Evolution

Modern virtualization increasingly uses hardware-assisted virtualization (Intel VT-x, AMD-V) which provides near-paravirtualization performance without guest OS modifications. However, paravirtualization concepts continue to influence:

- Container Technologies: Similar performance benefits through OS-level virtualization

- Hybrid Approaches: Paravirtualized drivers in fully virtualized environments

- Cloud Computing: Optimized guest kernels for cloud workloads

- Microkernel Systems: Direct inspiration for modern microkernel architectures

Understanding paravirtualization principles remains crucial for system architects, as these concepts continue to shape modern virtualization technologies and inform decisions about performance optimization in virtualized environments.