What is Paging in Operating Systems?

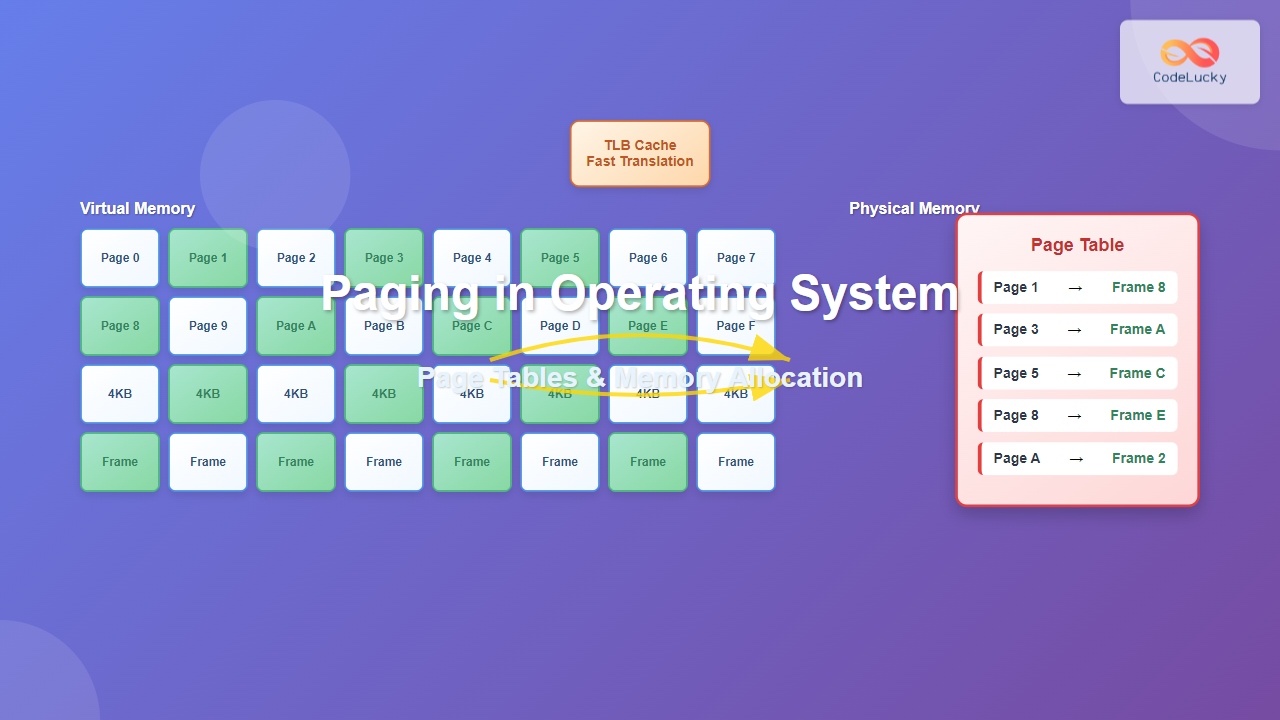

Paging is a memory management scheme that allows the operating system to store and retrieve data from secondary storage for use in main memory. It divides both virtual memory and physical memory into fixed-size blocks called pages (virtual memory) and frames (physical memory), enabling non-contiguous memory allocation and efficient memory utilization.

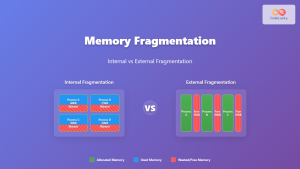

Unlike segmentation, which uses variable-sized memory blocks, paging uses uniform page sizes (typically 4KB, 8KB, or 16KB) to eliminate external fragmentation while providing a clean abstraction layer between logical and physical addresses.

Core Components of Paging System

Pages and Frames

Pages are fixed-size blocks of virtual memory, while frames are corresponding fixed-size blocks of physical memory. The operating system maintains a one-to-one mapping between pages and frames through page tables.

- Page Size: Common sizes include 4KB (4,096 bytes), 8KB, and 16KB

- Page Number: Higher-order bits of virtual address

- Page Offset: Lower-order bits indicating position within page

- Frame Number: Physical memory frame identifier

Address Translation Process

Virtual addresses are translated to physical addresses through a systematic process:

Virtual Address = [Page Number | Page Offset]

Physical Address = [Frame Number | Page Offset]For a 32-bit system with 4KB pages:

Page Size = 4KB = 4,096 bytes = 2^12 bytes

Page Offset = 12 bits (0-11)

Page Number = 20 bits (12-31)

Total Virtual Address Space = 2^32 = 4GBPage Table Structure and Implementation

Single-Level Page Tables

The simplest page table implementation uses a single array where the page number serves as an index. Each entry contains the corresponding frame number and control bits.

Page Table Entry Structure

Each page table entry typically contains:

| Bit Field | Purpose | Description |

|---|---|---|

| Frame Number | Address Translation | Physical frame number for mapping |

| Valid Bit | Presence Check | Indicates if page is in physical memory |

| Dirty Bit | Modification Flag | Set when page has been written to |

| Reference Bit | Access Tracking | Set when page is accessed |

| Protection Bits | Access Control | Read, Write, Execute permissions |

| Caching Bits | Cache Control | Cache disable, write-through flags |

Multi-Level Page Tables

Large address spaces require hierarchical page tables to reduce memory overhead. A two-level system divides the page number into multiple parts:

32-bit Address with 4KB pages, 2-level paging:

- Page Directory Index: 10 bits (bits 22-31)

- Page Table Index: 10 bits (bits 12-21)

- Page Offset: 12 bits (bits 0-11)Memory Allocation Strategies

Demand Paging

Demand paging loads pages into memory only when they are accessed, reducing initial memory requirements and startup time. When a process attempts to access a page not in memory, a page fault occurs.

Page Fault Handling Process:

- Trap Generation: CPU generates trap when accessing invalid page

- Page Fault Handler: OS kernel handles the interrupt

- Page Location: OS locates page in secondary storage

- Frame Allocation: Free frame is allocated or victim page is evicted

- Page Loading: Page is loaded from storage to memory

- Table Update: Page table entry is updated with new frame number

- Restart Instruction: Faulting instruction is restarted

Page Replacement Algorithms

When physical memory is full, the OS must select victim pages for replacement:

First-In-First-Out (FIFO)

Reference String: 7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2

Frames = 3

Frame 1: [7] [7] [7] [2] [2] [2] [4] [4] [4] [0] [0] [0] [0]

Frame 2: [-] [0] [0] [0] [0] [3] [3] [3] [2] [2] [2] [3] [3]

Frame 3: [-] [-] [1] [1] [1] [1] [0] [0] [0] [3] [3] [3] [2]

Page Faults: 7 0 1 2 3 4 2 3 0 3 2

Total Page Faults: 9Least Recently Used (LRU)

Reference String: 7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2

Frames = 3

Frame 1: [7] [7] [7] [2] [2] [2] [0] [4] [4] [4] [0] [0] [0]

Frame 2: [-] [0] [0] [0] [0] [3] [3] [3] [2] [2] [2] [3] [3]

Frame 3: [-] [-] [1] [1] [1] [1] [1] [1] [1] [3] [3] [3] [2]

Page Faults: 7 0 1 2 3 0 4 2 3 0 2

Total Page Faults: 8Translation Lookaside Buffer (TLB)

The Translation Lookaside Buffer is a high-speed cache that stores recent virtual-to-physical address translations, significantly reducing memory access time by avoiding page table lookups.

TLB Performance Metrics

TLB effectiveness is measured by hit ratio and effective access time:

Effective Access Time = (TLB Hit Time × Hit Ratio) +

(Page Table Access Time + Memory Access Time) × Miss Ratio

Example:

- TLB Hit Time: 1 ns

- Memory Access Time: 100 ns

- TLB Hit Ratio: 98%

Effective Access Time = (1 × 0.98) + (100 + 100) × 0.02

= 0.98 + 4 = 4.98 nsAdvanced Paging Concepts

Copy-on-Write (COW)

Copy-on-Write is an optimization technique where multiple processes share the same physical pages until one process modifies the data. This reduces memory usage and improves process creation performance.

COW Implementation Example:

Process Creation:

1. Parent process has pages marked as read-only

2. Child process shares same physical pages

3. On write attempt:

- Page fault occurs

- OS allocates new physical frame

- Original page is copied to new frame

- Page tables updated for writing process

- Write operation proceedsMemory-Mapped Files

Memory-mapped files allow file I/O operations through memory access, treating file contents as virtual memory pages.

// C Example: Memory-mapped file

#include <sys/mman.h>

#include <fcntl.h>

int fd = open("data.txt", O_RDWR);

void *ptr = mmap(NULL, file_size, PROT_READ | PROT_WRITE,

MAP_SHARED, fd, 0);

// Access file through memory operations

*(char*)ptr = 'A'; // Modifies first byte of file

munmap(ptr, file_size);Paging vs Other Memory Management Schemes

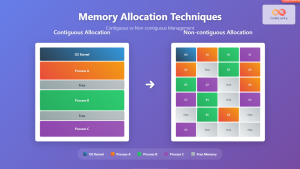

| Aspect | Paging | Segmentation | Continuous Allocation |

|---|---|---|---|

| Fragmentation | Internal only | External possible | Both types |

| Memory Utilization | High | Variable | Low |

| Address Translation | Simple arithmetic | Base + limit | Base register |

| Sharing Support | Page-level | Segment-level | Process-level |

| Protection | Page-granular | Segment-granular | Process-granular |

Performance Optimization Techniques

Page Size Selection

Page size affects system performance through multiple factors:

- Small pages: Reduce internal fragmentation, increase page table size

- Large pages: Reduce page table overhead, increase internal fragmentation

- Optimal size: Balance between fragmentation and table overhead

Prefetching Strategies

Predictive page loading can reduce page fault penalties:

Sequential Prefetching:

- Load next N pages when accessing page P

- Effective for sequential access patterns

Spatial Prefetching:

- Load nearby pages based on locality

- Uses working set analysisImplementation Examples

Simplified Page Table Implementation

// Basic page table structure in C

#define PAGE_SIZE 4096

#define PAGE_BITS 12

#define PAGES_PER_TABLE 1024

typedef struct page_entry {

unsigned int frame_number : 20; // Physical frame number

unsigned int valid : 1; // Present in memory

unsigned int dirty : 1; // Modified since load

unsigned int accessed : 1; // Recently accessed

unsigned int user : 1; // User accessible

unsigned int writable : 1; // Write permission

unsigned int reserved : 7; // Reserved bits

} page_entry_t;

// Address translation function

unsigned long translate_address(unsigned long virtual_addr,

page_entry_t *page_table) {

unsigned long page_num = virtual_addr >> PAGE_BITS;

unsigned long offset = virtual_addr & (PAGE_SIZE - 1);

page_entry_t *entry = &page_table[page_num];

if (!entry->valid) {

// Page fault - handle page loading

handle_page_fault(page_num);

return 0; // Will retry after page load

}

entry->accessed = 1; // Set access bit

return (entry->frame_number << PAGE_BITS) | offset;

}Page Replacement Algorithm Implementation

// LRU page replacement using counter method

typedef struct lru_entry {

unsigned int frame_number;

unsigned int counter;

struct lru_entry *next;

} lru_entry_t;

static unsigned int global_counter = 0;

int lru_replace_page(lru_entry_t *lru_list, int num_frames) {

lru_entry_t *current = lru_list;

lru_entry_t *victim = lru_list;

unsigned int min_counter = current->counter;

// Find page with minimum counter value

while (current != NULL) {

if (current->counter < min_counter) {

min_counter = current->counter;

victim = current;

}

current = current->next;

}

int victim_frame = victim->frame_number;

// Update counter for new page

victim->counter = ++global_counter;

return victim_frame;

}Common Challenges and Solutions

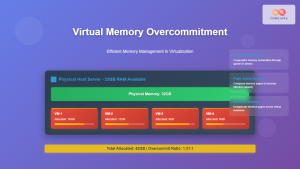

Thrashing Prevention

Thrashing occurs when excessive paging activity degrades system performance. Prevention strategies include:

- Working Set Model: Maintain pages frequently used by process

- Page Fault Frequency: Monitor and control fault rates

- Load Control: Reduce multiprogramming degree when thrashing detected

Memory Overhead Reduction

Large page tables consume significant memory. Solutions include:

- Inverted Page Tables: One entry per physical frame

- Hashed Page Tables: Hash function maps virtual pages

- Clustered Page Tables: Group related page table entries

Modern Implementations

64-bit Architecture Considerations

64-bit systems require sophisticated paging schemes due to massive address spaces:

x86-64 Paging Structure:

- 4-level page tables (PML4, PDPT, PD, PT)

- 48-bit virtual addresses (256 TB virtual space)

- Sign extension for higher 16 bits

- 2MB and 1GB huge pages supportNUMA-Aware Paging

Non-Uniform Memory Access systems require locality-aware page allocation:

- Local allocation: Prefer memory on same NUMA node

- Migration policies: Move pages closer to accessing threads

- Interleaving: Distribute pages across nodes for bandwidth

Paging remains fundamental to modern operating system design, enabling efficient memory utilization, process isolation, and virtual memory capabilities. Understanding these concepts is crucial for system programmers, kernel developers, and anyone working with memory-intensive applications.

- What is Paging in Operating Systems?

- Core Components of Paging System

- Page Table Structure and Implementation

- Memory Allocation Strategies

- Translation Lookaside Buffer (TLB)

- Advanced Paging Concepts

- Paging vs Other Memory Management Schemes

- Performance Optimization Techniques

- Implementation Examples

- Common Challenges and Solutions

- Modern Implementations