The Map-Reduce algorithm revolutionized big data processing by providing an elegant, scalable, and fault-tolerant approach to distributed computing. Originally popularized by Google, Map-Reduce underpins many modern data processing frameworks like Apache Hadoop and Apache Spark. This article offers an in-depth look into the Map-Reduce algorithm, its architecture, working principles, and practical examples with clear visual aids to foster deep understanding.

What is Map-Reduce?

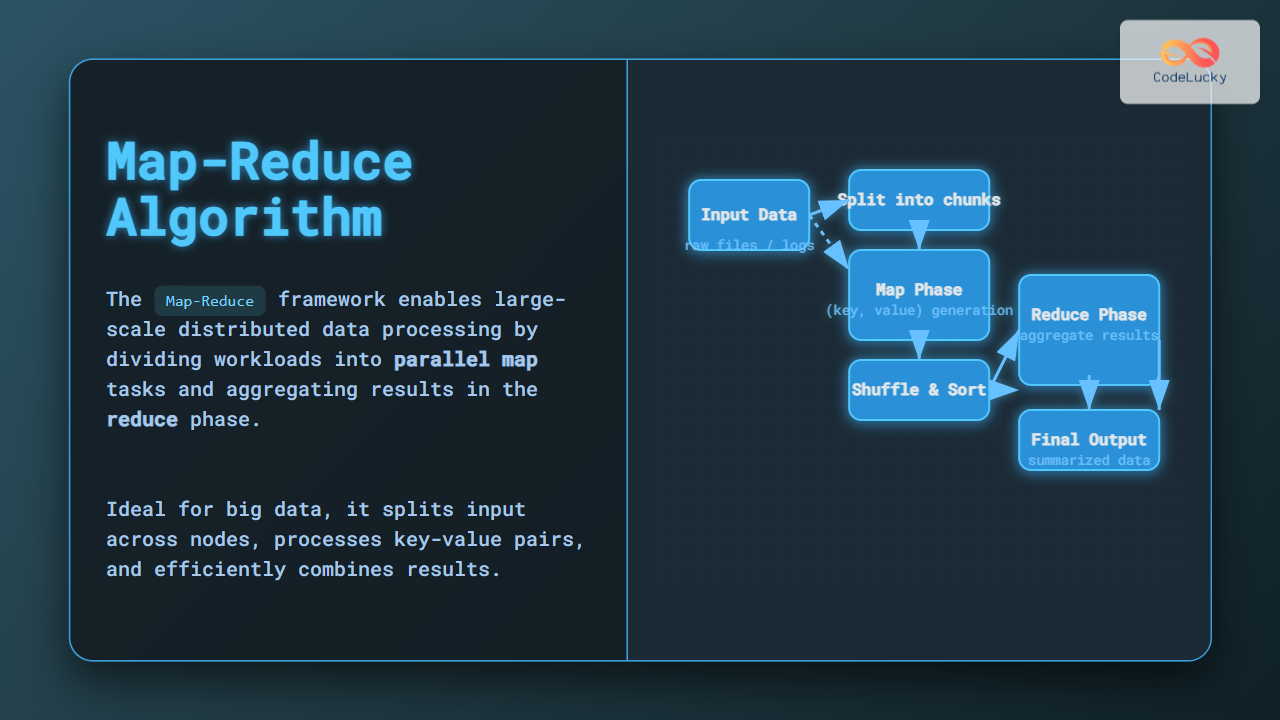

Map-Reduce is a programming model and associated implementation for processing and generating large data sets with a parallel, distributed algorithm on a cluster. It simplifies processing by decomposing the task into two primary functions: Map and Reduce. The Map function filters and sorts data, while the Reduce function aggregates the results.

Core Concepts of Map-Reduce

The fundamental flow of the Map-Reduce algorithm involves three stages:

- Map phase: Data is divided into smaller chunks and processed in parallel through the Map function that emits intermediate key-value pairs.

- Shuffle and Sort phase: Intermediate pairs are grouped by keys and distributed to reducers.

- Reduce phase: The Reduce function merges all values associated with the same key to produce a summarized output.

Map-Reduce Architecture

The architecture typically comprises three components:

- Job Tracker: Coordinates the job, assigns map and reduce tasks across nodes.

- Task Tracker (Workers): Executes individual Map and Reduce tasks.

- Distributed File System (DFS): For storing input data and intermediate/final outputs, e.g., HDFS.

This design ensures high fault tolerance and scalability, handling enormous data volumes by distributing the workload across many nodes.

Detailed Walkthrough of Map-Reduce Process

Suppose we want to count the frequency of words in a large collection of documents. The Map-Reduce algorithm processes as follows:

Map Function

Processes each document line or chunk, emitting (word, 1) for every word encountered.

function map(String document):

for each word in document:

emit(word, 1)

Shuffle and Sort Phase

Groups all the (word, 1) pairs across data splits into (word, list_of_ones) by key (word).

Reduce Function

Aggregates the counts for each word:

function reduce(String word, Iterable counts):

sum = 0

for count in counts:

sum += count

emit(word, sum)

Illustrative Example Output

Input paragraphs:

"apple banana apple" "banana orange apple"

Map outputs (key-value pairs):

- (apple, 1), (banana, 1), (apple, 1)

- (banana, 1), (orange, 1), (apple, 1)

After shuffle and sort:

- (apple, [1, 1, 1])

- (banana, [1, 1])

- (orange, [1])

Reduced output:

- (apple, 3)

- (banana, 2)

- (orange, 1)

Advantages of Map-Reduce

- Scalability: Easily processes petabytes of data across thousands of machines.

- Fault Tolerance: Automatic recovery from failures with task re-execution.

- Simplicity: Allows developers to focus on logic without managing parallelization intricacies.

- Cost Efficiency: Utilizes commodity hardware effectively.

Common Real-World Use Cases

- Log analysis and aggregation

- Indexing large document collections for search engines

- Data transformation and cleaning in ETL pipelines

- Recommendation systems and machine learning preprocessing

Interactive Visualization: Word Count Example

The following interactive example simulates the basic Map-Reduce word count process:

Summary

The Map-Reduce algorithm is a foundational paradigm enabling efficient processing of vast datasets by dividing computational work across distributed nodes. Its simplicity, scalability, and fault tolerance have made it a cornerstone of big data frameworks. Understanding the Map and Reduce phases along with data flow provides a practical grasp for designing distributed applications.

In contemporary computing, Map-Reduce remains a vital concept with adaptations and enhancements continuing in modern data processing ecosystems.