Introduction to Machine Learning Algorithms

Machine learning algorithms form the core of artificial intelligence (AI) and data science, enabling computers to learn from data and make informed decisions without being explicitly programmed for each task. These algorithms analyze patterns, make predictions, and uncover insights across many domains, from healthcare to finance and beyond. Understanding these foundational algorithms is essential for grasping how AI systems function and evolve.

This article offers a detailed exploration of popular machine learning algorithms, categorized by learning style, accompanied by examples and visual diagrams to illuminate their inner workings. Whether you are a data scientist, AI enthusiast, or software developer, this guide will enhance your grasp of these essential tools.

Types of Machine Learning Algorithms

Machine learning algorithms are broadly categorized into three learning paradigms:

- Supervised Learning: Learning from labeled data to predict outputs.

- Unsupervised Learning: Discovering hidden patterns or data groupings without labeled outputs.

- Reinforcement Learning: Learning optimal actions via trial and error to maximize rewards.

Supervised Learning Algorithms

Supervised learning models work with labeled datasets, meaning each training example has an associated output label. The goal is to learn a function that maps inputs to the desired outputs and generalizes to new, unseen data.

1. Linear Regression

A fundamental algorithm for predicting continuous numeric values, such as house prices or temperature forecasts. It fits a linear equation to the observed data.

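\[ y = \beta_0 + \beta_1 x_1 + \dots + \beta_n x_n + \varepsilon \]

where \(y\) is the target, \(x_1, \dots, x_n\) are the feature variables, \(\beta_0, \dots, \beta_n\) are the coefficients, and \(\varepsilon\) is the error term.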

Example: Predicting house prices based on area size.

Features (Area in sqft): [1000, 1500, 2000, 2500]

Prices (in $): [300000, 400000, 500000, 600000]

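Linear regression models the price as a linear function of the area. For this data the fit is exact: price = 100,000 + 200 × area, so each additional square foot adds $200 to the predicted price.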
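As a minimal sketch of how such a line can be fitted, the snippet below applies the closed-form least-squares formulas for a single feature to the four data points above. The function name `fit_line` is an illustrative choice, not something defined elsewhere in this article.

```python
# Ordinary least squares for one feature, using the closed-form formulas.
areas = [1000, 1500, 2000, 2500]            # sqft, from the example above
prices = [300000, 400000, 500000, 600000]   # dollars, from the example above


def fit_line(xs, ys):
    """Return (intercept, slope) of the line minimizing the squared error."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance(x, y) / variance(x)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


beta0, beta1 = fit_line(areas, prices)
print(beta0, beta1)          # 100000.0 200.0
print(beta0 + beta1 * 1800)  # 460000.0, predicted price for 1800 sqft
```

With more than one feature the same idea applies, but the coefficients are usually obtained with a linear algebra routine rather than these scalar formulas.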

2. Logistic Regression

Used for binary classification problems, such as spam detection or disease diagnosis. It outputs probabilities by applying the logistic function to a linear combination of inputs.

\[ p = \frac{1}{1 + e^{-z}}, \qquad z = \beta_0 + \beta_1 x_1 + \dots + \beta_n x_n \]

Class prediction is based on whether the probability exceeds a threshold (commonly 0.5).
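The prediction step can be sketched in a few lines of Python. The coefficient values below are hypothetical and chosen only for illustration; in practice they would be learned from training data. The sketch is not hardened against overflow for very large negative values of z.

```python
import math


def sigmoid(z):
    """Logistic function: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(features, intercept, coefs):
    """Probability of the positive class for one example."""
    z = intercept + sum(b * x for b, x in zip(coefs, features))
    return sigmoid(z)


def predict_class(features, intercept, coefs, threshold=0.5):
    """Return 1 if the probability exceeds the threshold, else 0."""
    return 1 if predict_proba(features, intercept, coefs) > threshold else 0


# Hypothetical coefficients (assumed, not fitted to any real data).
intercept, coefs = -1.0, [2.0, -0.5]
example = [1.5, 1.0]                              # z = -1 + 3 - 0.5 = 1.5
print(predict_proba(example, intercept, coefs))   # roughly 0.82
print(predict_class(example, intercept, coefs))   # 1
```

Raising the threshold above 0.5 makes the positive class harder to predict, which trades fewer false positives for more false negatives.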

3. Decision Trees

Decision trees split data based on feature conditions, producing a tree of decisions for classification or regression tasks. They are intuitive and interpretable.

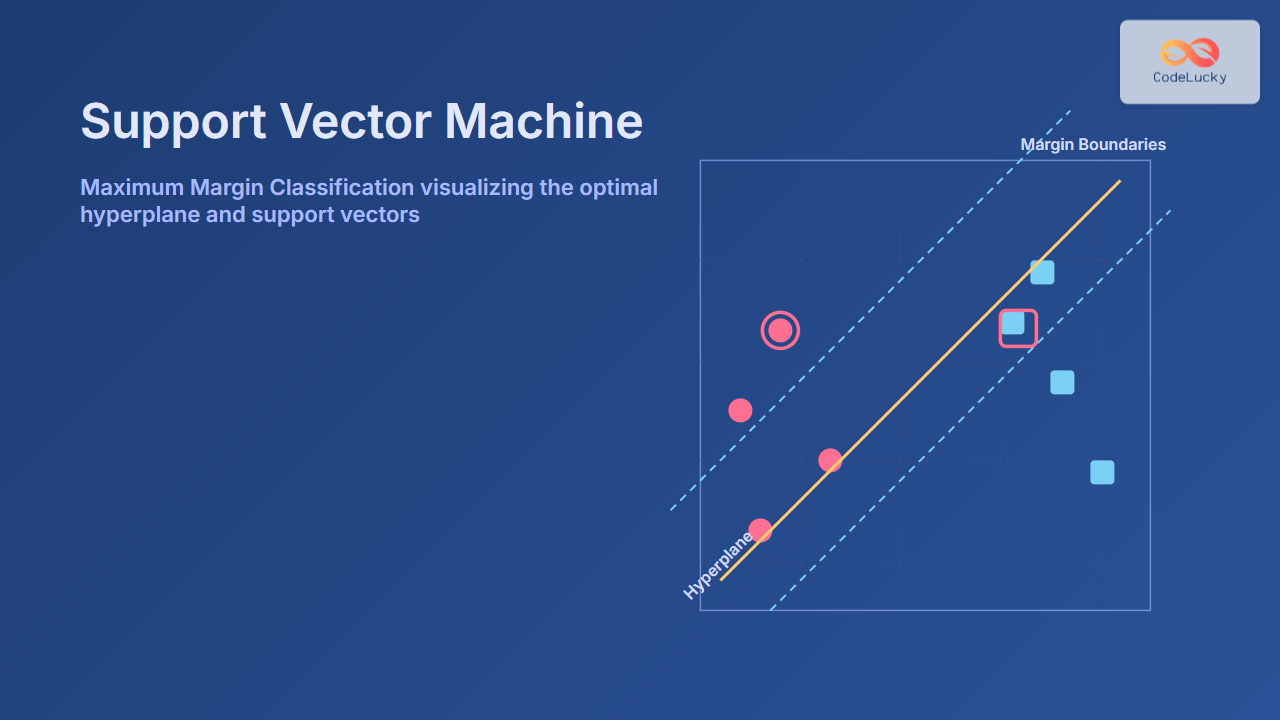
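To make the idea of a "feature condition" concrete, here is a sketch of how a single split threshold might be chosen for one numeric feature. It assumes Gini impurity as the split criterion, which is one common choice and not something this article specifies; the data and the names `gini` and `best_split` are illustrative.

```python
def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_split(values, labels):
    """Try midpoints between sorted distinct values.

    Returns (threshold, weighted impurity) of the best split found,
    or (None, impurity of the unsplit data) if no split improves on it.
    """
    best = (None, gini(labels))
    distinct = sorted(set(values))
    for lo, hi in zip(distinct, distinct[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(values, labels) if x <= t]
        right = [y for x, y in zip(values, labels) if x > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (t, score)
    return best


# Toy data (made up for illustration): one feature, two classes.
values = [1.4, 1.3, 1.5, 4.7, 4.5, 5.1]
labels = ["no", "no", "no", "yes", "yes", "yes"]
print(best_split(values, labels))  # (3.0, 0.0): the split separates the classes perfectly
```

A full tree repeats this search over all features and then recurses into the left and right subsets until a stopping rule is met.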

4. Support Vector Machines (SVM)

SVMs find the hyperplane in feature space that best separates the classes by maximizing the margin, that is, the distance from the hyperplane to the nearest training points of each class (the support vectors).
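The snippet below does not train an SVM. It only illustrates the margin criterion by comparing two hand-picked hyperplanes that both separate a toy dataset and measuring which one keeps the nearest points farther away. The points, the hyperplanes, and the function names are assumptions made for this illustration.

```python
import math


def decision_value(w, b, x):
    """Signed score w . x + b; its sign gives the predicted side."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def separates(w, b, points, labels):
    """True if every point lies strictly on the side matching its label (+1 or -1)."""
    return all(decision_value(w, b, x) * y > 0 for x, y in zip(points, labels))


def margin(w, b, points):
    """Distance from the hyperplane to the closest point."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    return min(abs(decision_value(w, b, x)) / norm for x in points)


# Toy 2D data: two points per class.
points = [(3, 3), (4, 3), (1, 1), (0, 1)]
labels = [1, 1, -1, -1]

plane_a = ((1, 1), -4)   # the line x + y = 4
plane_b = ((1, 0), -2)   # the line x = 2

for w, b in (plane_a, plane_b):
    print(separates(w, b, points, labels), margin(w, b, points))
# Both separate the data; plane_a has the larger margin (about 1.41 versus 1.0).
```

An SVM solver searches over all separating hyperplanes for the one with the largest such margin instead of comparing a fixed list of candidates.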

Unsupervised Learning Algorithms

Unsupervised learning deals with unlabeled data, where the model tries to identify structure or categories in the dataset without predefined outputs.

1. K-Means Clustering

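An iterative clustering algorithm that partitions data into K clusters based on feature similarity (usually Euclidean distance). The algorithm alternates between assigning each point to its nearest centroid and recomputing each centroid as the mean of its assigned points, until the centroids stop changing.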

Example: Segmenting customers into distinct groups based on purchasing behavior.
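A minimal sketch of the K-means loop in plain Python follows. The customer data (visits per month, average spend) is made up for illustration, and the initial centroids are passed in explicitly to keep the run deterministic; real implementations usually pick them randomly or with a seeding heuristic.

```python
def kmeans(points, centroids, max_iters=100):
    """Lloyd's algorithm: returns (final centroids, clusters of points)."""
    clusters = []
    for _ in range(max_iters):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            dists = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids]
            clusters[dists.index(min(dists))].append(p)
        # Update step: each centroid moves to the mean of its cluster.
        # An empty cluster keeps its previous centroid.
        new_centroids = [
            tuple(sum(dim) / len(cluster) for dim in zip(*cluster)) if cluster else c
            for cluster, c in zip(clusters, centroids)
        ]
        if new_centroids == centroids:  # converged: nothing moved
            break
        centroids = new_centroids
    return centroids, clusters


# Hypothetical customers: (visits per month, average spend in dollars).
customers = [(1, 20), (2, 25), (1, 22), (9, 90), (10, 95), (8, 88)]
centroids, clusters = kmeans(customers, centroids=[(1, 20), (9, 90)])
print(centroids)
print(clusters)
```

On this data the loop settles into one group of occasional low spenders and one group of frequent high spenders.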

2. Principal Component Analysis (PCA)

A dimensionality reduction technique that transforms the features into new orthogonal components, ordered so that each one captures as much of the remaining variance as possible. Keeping only the first few components is useful for visualization and noise reduction.
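The sketch below finds only the first principal component, under the assumption that power iteration on the covariance matrix is an acceptable stand-in for a full eigendecomposition. The toy data and function name are illustrative, and the method would fail if the starting vector happened to be orthogonal to the leading direction.

```python
import math


def first_principal_component(rows, iters=200):
    """Return (unit direction of maximum variance, projections of the centered rows)."""
    n, d = len(rows), len(rows[0])
    # Center each feature at zero mean.
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix (d x d).
    cov = [
        [sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
        for i in range(d)
    ]
    # Power iteration: repeatedly multiply by cov and renormalize.
    v = [1.0] * d
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Project each centered row onto the component.
    scores = [sum(c[j] * v[j] for j in range(d)) for c in centered]
    return v, scores


# Toy data lying exactly on the line y = 2x.
data = [(1, 2), (2, 4), (3, 6), (4, 8)]
direction, scores = first_principal_component(data)
print(direction)  # close to (1, 2) / sqrt(5), about [0.447, 0.894]
print(scores)     # one coordinate per point along that direction
```

Because the toy points lie on a single line, one component describes them completely, which is the extreme case of the variance concentration that PCA exploits.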

Reinforcement Learning Algorithms

Reinforcement learning (RL) enables an agent to learn optimal policies through interactions with an environment by receiving rewards or penalties.

1. Q-Learning

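A value-based, off-policy algorithm that learns the expected cumulative reward (the Q-value) of taking each action in each state. The Q-values guide the agent’s decision policy: in a given state, the agent typically prefers the action with the highest Q-value.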

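\[ Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right] \]

where \(\alpha\) is the learning rate, \(\gamma\) is the discount factor, \(r\) is the immediate reward, and \(s'\) is the state reached after taking action \(a\) in state \(s\).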
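A single application of this update rule can be sketched with a dictionary as the Q-table. The state names, action names, and parameter values below are hypothetical; unseen state-action pairs are assumed to start at zero.

```python
from collections import defaultdict


def q_update(Q, state, action, reward, next_state, actions, alpha=0.1, gamma=0.9):
    """Apply one Q-learning update to the table Q in place."""
    # Best value achievable from the next state, according to the current table.
    best_next = max(Q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    Q[(state, action)] += alpha * (td_target - Q[(state, action)])


Q = defaultdict(float)        # every Q-value starts at 0.0
actions = ["left", "right"]   # hypothetical action set

# The agent was in "s0", moved "right", received reward 1.0, and landed in "s1".
q_update(Q, "s0", "right", 1.0, "s1", actions)
print(Q[("s0", "right")])     # 0.1
```

The update uses the maximum over next actions regardless of which action the agent actually takes next, which is what makes the method off-policy.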

2. Markov Decision Process (MDP)

A mathematical framework modeling decision-making in states with probabilistic transitions and rewards, forming the theoretical base for RL algorithms.
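As an illustration of what an MDP specifies, the sketch below writes a tiny two-state example as a transition table and evaluates it. The states, actions, probabilities, and rewards are all invented for this example. The solver shown is value iteration, a standard dynamic-programming method that this article does not otherwise cover; it is used here only to show that the table fully determines the value of each state.

```python
# transitions[state][action] = list of (probability, next_state, reward)
# Hypothetical robot with a battery that is either "low" or "high".
transitions = {
    "low": {
        "wait":     [(1.0, "low", 0.0)],
        "recharge": [(1.0, "high", -1.0)],
    },
    "high": {
        "work": [(0.8, "high", 2.0), (0.2, "low", 2.0)],
        "wait": [(1.0, "high", 0.0)],
    },
}


def value_iteration(transitions, gamma=0.9, tol=1e-8):
    """Return the optimal value of each state under discount factor gamma."""
    V = {s: 0.0 for s in transitions}
    while True:
        delta = 0.0
        for s, acts in transitions.items():
            # Value of the best action: expected reward plus discounted next value.
            best = max(
                sum(p * (r + gamma * V[s2]) for p, s2, r in outcomes)
                for outcomes in acts.values()
            )
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:
            return V


V = value_iteration(transitions)
print(V)  # the "high" state is worth more than the "low" state
```

Q-learning targets the same kind of problem but estimates values from sampled experience, without needing the transition table.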

Practical Example: Predicting Iris Species with Logistic Regression

The Iris dataset is a classic multiclass classification problem where the goal is to classify iris flowers into three species based on petal and sepal measurements.

Logistic regression can be adapted using a one-vs-rest approach for the three classes.

This example demonstrates how supervised learning models map features to discrete categories, supporting diverse classification needs.
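One possible way to run this example is with scikit-learn, assuming it is installed. The train/test split ratio, random seed, and `max_iter` value below are arbitrary choices for this sketch, not settings prescribed by the article.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.multiclass import OneVsRestClassifier

# Four measurements per flower, three species as integer labels.
X, y = load_iris(return_X_y=True)

# Hold out 30% of the flowers for evaluation (assumed split, fixed seed).
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

# One binary logistic regression per species; the highest score wins.
model = OneVsRestClassifier(LogisticRegression(max_iter=1000))
model.fit(X_train, y_train)

accuracy = model.score(X_test, y_test)
print(f"test accuracy: {accuracy:.2f}")
print(len(model.estimators_))  # one fitted binary classifier per class
```

The wrapper makes the one-vs-rest strategy explicit: each underlying classifier answers "is it this species or not", and the final prediction is the class whose classifier is most confident.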

Summary and Key Takeaways

- Supervised Learning leverages labeled data for prediction and classification, with well-known algorithms such as linear regression, logistic regression, decision trees, and SVMs.

- Unsupervised Learning uncovers hidden data structures using clustering (K-means) and dimensionality reduction (PCA).

- Reinforcement Learning trains agents to make sequences of decisions to maximize long-term rewards.

- Choosing the right algorithm depends on the problem type, data availability, and the desired outcome: prediction, grouping, or decision-making.

Mastering these foundational machine learning algorithms enables practical AI development and insightful data science applications.