In modern web architectures, applications must handle large volumes of traffic smoothly. Load balancing is the core technique for distributing incoming network or application traffic across several servers, which optimizes resource use, maximizes throughput, minimizes response time, and avoids overloading any single resource. This article covers the types, benefits, and implementation methods of load balancing, with practical examples, to help you architect highly available and scalable systems.

What Is Load Balancing?

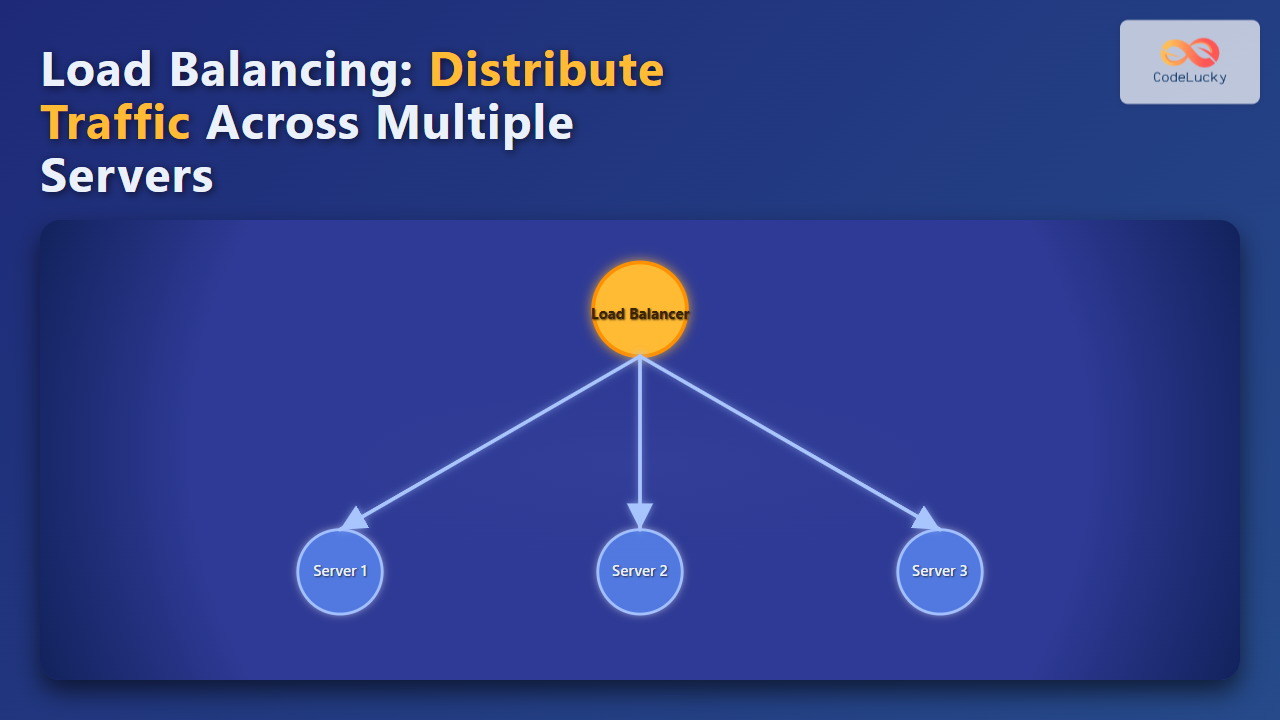

Load balancing is the process of distributing client requests or network load efficiently across multiple backend servers or resources. This helps servers share traffic load, prevents any single server from becoming a bottleneck, and improves overall client experience with higher availability and reliability.

In essence, a load balancer acts as a traffic manager or reverse proxy: it receives incoming requests and forwards each one to a server chosen by a predefined algorithm, typically considering only servers that currently pass health checks.

Benefits of Load Balancing

- Improved reliability and fault tolerance: If one server fails its health checks, the load balancer stops sending it traffic and routes requests to the remaining healthy servers, so clients see little or no downtime.

- Scalability: Easily add or remove servers as traffic demands grow or shrink.

- Optimal resource utilization: Distributes workload evenly, preventing some servers from being overwhelmed.

- Reduced latency and faster response time: Directs traffic to the least busy or nearest server.
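
The fault-tolerance benefit above can be illustrated with a minimal sketch. It assumes a hypothetical `Backend` record whose `healthy` flag would be set by periodic health checks in a real system; the class and method names are illustrative and do not come from any particular load balancer.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    address: str
    healthy: bool = True  # assumption: updated elsewhere by health checks


class HealthAwarePool:
    """Round-robin over backends, skipping any marked unhealthy."""

    def __init__(self, backends):
        self.backends = list(backends)
        self._next = 0  # rotation cursor

    def pick(self):
        # Try each backend at most once, starting from the rotation cursor.
        for offset in range(len(self.backends)):
            index = (self._next + offset) % len(self.backends)
            backend = self.backends[index]
            if backend.healthy:
                self._next = (index + 1) % len(self.backends)
                return backend
        raise RuntimeError("no healthy backends available")


pool = HealthAwarePool([
    Backend("192.168.1.101"),
    Backend("192.168.1.102"),
    Backend("192.168.1.103"),
])
pool.backends[1].healthy = False  # simulate a failed health check
picks = [pool.pick().address for _ in range(4)]
print(picks)
```

With the second backend marked unhealthy, requests alternate between the two remaining servers instead of failing.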

Types of Load Balancing Algorithms

Load balancers use different methods for distributing traffic. Common algorithms include:

- Round Robin: Requests are distributed sequentially to each server in the pool.

- Least Connections: Sends traffic to the server with the fewest active connections.

- IP Hash: Hashes the client’s IP address to choose the server, so the same client keeps reaching the same server (session persistence) as long as the server pool does not change.

- Weighted Round Robin / Weighted Least Connections: Assigns weights to servers based on their capacity.
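
The selection rules listed above can be sketched in a few lines each. This is a simplified illustration, not how any specific load balancer implements them: the function names are made up for this example, the connection counts are hypothetical bookkeeping, and the weighted variant uses a naive expansion where production balancers usually interleave servers more smoothly.

```python
import hashlib
from itertools import cycle

SERVERS = ["192.168.1.101", "192.168.1.102", "192.168.1.103"]


def round_robin(servers):
    """Return a picker that hands out servers in a repeating sequence."""
    rotation = cycle(servers)
    return lambda: next(rotation)


def least_connections(active):
    """Pick the server with the fewest active connections.

    `active` maps server -> current connection count.
    """
    return min(active, key=active.get)


def ip_hash(client_ip, servers):
    """Map a client IP to a server; the mapping is stable while the
    server list stays the same."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


def weighted_round_robin(weights):
    """Naive weighted rotation: each server appears `weight` times per cycle."""
    expanded = [s for s, weight in weights.items() for _ in range(weight)]
    rotation = cycle(expanded)
    return lambda: next(rotation)


pick = round_robin(SERVERS)
rr_order = [pick() for _ in range(4)]

least_loaded = least_connections(
    {"192.168.1.101": 12, "192.168.1.102": 3, "192.168.1.103": 7}
)

sticky = ip_hash("203.0.113.9", SERVERS)

weighted_pick = weighted_round_robin({"192.168.1.101": 2, "192.168.1.102": 1})
wrr_order = [weighted_pick() for _ in range(6)]
```

Here `rr_order` wraps back to the first server on the fourth request, `least_loaded` is the server with 3 connections, and `wrr_order` gives the weight-2 server twice as many requests as the weight-1 server.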

Load Balancer Types

Load balancers can be categorized based on where they operate in the network stack:

- Layer 4 (Transport Layer) Load Balancer: Balances traffic based on IP addresses and TCP or UDP ports, without inspecting the content of the request.

- Layer 7 (Application Layer) Load Balancer: Routes traffic based on content of the request (HTTP headers, cookies, URL path), providing advanced routing.
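
The difference between the two layers comes down to what information the routing decision can see. The sketch below is a toy model under stated assumptions: the pool addresses, path prefixes, and `Host` rule are hypothetical, and a real balancer would operate on actual connections and parsed HTTP requests instead of plain function arguments.

```python
import hashlib

# Hypothetical backend pools, for illustration only.
API_POOL = ["10.0.0.11", "10.0.0.12"]
STATIC_POOL = ["10.0.0.21"]
DEFAULT_POOL = ["10.0.0.31", "10.0.0.32"]


def stable_index(key, size):
    """Hash a string key to a stable index in range(size)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % size


def route_layer4(src_ip, src_port, pool):
    """Layer 4 style: decide from the connection's address and port only."""
    return pool[stable_index(f"{src_ip}:{src_port}", len(pool))]


def route_layer7(path, headers):
    """Layer 7 style: decide from the HTTP request (URL path, headers)."""
    if path.startswith("/api/") or headers.get("Host", "").startswith("api."):
        return API_POOL
    if path.startswith("/static/"):
        return STATIC_POOL
    return DEFAULT_POOL


l4_choice = route_layer4("203.0.113.9", 51234, DEFAULT_POOL)
l7_choice = route_layer7("/static/logo.png", {"Host": "www.example.com"})
```

`route_layer4` never looks at the request itself, while `route_layer7` can send API calls and static assets to different pools.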

Practical Example: Round Robin Load Balancing with Nginx

Nginx, a popular web server and reverse proxy, can be configured as a simple load balancer using the Round Robin algorithm. Here’s an example configuration distributing HTTP requests across three backend servers:

    http {
        upstream backend {
            server 192.168.1.101;
            server 192.168.1.102;
            server 192.168.1.103;
        }

        server {
            listen 80;

            location / {
                proxy_pass http://backend;
            }
        }
    }

This configuration directs all HTTP requests it receives to the “backend” group, balancing requests sequentially among the specified servers. The result is better traffic distribution and fault tolerance.

Interactive Concept: Simulating Load Balancing

To visualize how load balancing distributes connections, imagine three servers hosting the same application behind a load balancer. When several clients send requests, the load balancer uses Round Robin scheduling to assign each request in turn to a server, cycling through servers continuously.

    Request 1 -> Server 1
    Request 2 -> Server 2
    Request 3 -> Server 3
    Request 4 -> Server 1
    ...

This cycle repeats, ensuring no single server is overburdened. Session persistence or smarter algorithms could modify this behavior for stateful applications.
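
The same cycle can be reproduced with a short simulation. This is a minimal sketch that models servers as plain labels and assumes requests arrive one at a time; it says nothing about request cost or connection duration.

```python
from collections import Counter
from itertools import cycle

servers = ["Server 1", "Server 2", "Server 3"]
rotation = cycle(servers)  # endlessly repeats Server 1, 2, 3, 1, ...

# Assign seven incoming requests to servers in turn.
assignments = [(f"Request {n}", next(rotation)) for n in range(1, 8)]
for request, server in assignments:
    print(f"{request} -> {server}")

# Count how many requests each server received.
per_server = Counter(server for _, server in assignments)
print(dict(per_server))
```

After seven requests, Server 1 has handled three and the other two servers have handled two each, so the counts never differ by more than one.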

Common Load Balancing Scenarios

Load balancers are widely used in:

- Web Servers: To handle high visitor counts for websites and APIs.

- Database Queries: Distributing read requests across read replicas.

- Cloud Services: Auto-scaling groups for on-demand server capacity.
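
As an illustration of the database scenario, the sketch below sends reads to replicas in rotation and everything else to a primary. The host names are hypothetical, and treating any statement that starts with `SELECT` as a read is a deliberate simplification: real routers also have to account for transactions and replication lag.

```python
from itertools import cycle

PRIMARY = "db-primary"  # hypothetical host names
REPLICAS = ["db-replica-1", "db-replica-2"]
_replica_rotation = cycle(REPLICAS)


def choose_database(sql):
    """Route reads to replicas (round robin) and all other statements
    to the primary."""
    if sql.lstrip().upper().startswith("SELECT"):
        return next(_replica_rotation)
    return PRIMARY


queries = [
    "SELECT * FROM users",
    "UPDATE users SET name = 'a' WHERE id = 1",
    "select 1",
]
targets = [choose_database(q) for q in queries]
print(targets)
```

The two reads land on different replicas, while the update goes to the primary.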

Conclusion

Load balancing is an essential technique for maintaining high availability, performance, and scalability in distributed systems. Understanding algorithms and proper implementation methods empowers engineers to design robust infrastructure that gracefully handles traffic surges and server failures.

Whether using software solutions like Nginx or dedicated hardware appliances, well-implemented load balancing ensures seamless user experiences and efficient resource utilization.