Introduction to K-Nearest Neighbors (KNN) Algorithm

The K-Nearest Neighbors (KNN) algorithm is a fundamental instance-based learning method widely used in supervised machine learning tasks, especially in classification and regression problems. It works by finding the closest data points (neighbors) in the feature space to a given query point and making predictions based on these neighbors’ known labels or values. As a non-parametric, lazy learner, KNN does not build an explicit model but directly uses training data during prediction, offering simplicity and flexibility.

How KNN Works: Core Concept

KNN classifies or predicts based on the majority voting or averaging of nearest neighbors. The algorithm follows these steps:

- Choose the number of neighbors,

k. - Calculate the distance between the query point and all training points.

- Sort the distances and select the

knearest neighbors. - For classification, assign the class with the majority vote among neighbors; for regression, take the average value.

Distance metrics commonly include Euclidean, Manhattan, or Minkowski distances depending on data and context.

Choosing the Right Value of k

Selecting k is crucial: a small k may lead to noisy predictions (overfitting), while a large k can smooth out distinctions (underfitting). Cross-validation techniques help in choosing an optimal k.

Distance Metrics Explained

Different distance metrics affect neighbor selection:

- Euclidean distance: Straight-line distance in multidimensional space. Formula:

\[

d(p, q) = \sqrt{\sum_{i=1}^{n}(p_i – q_i)^2}

\] - Manhattan distance: Sum of absolute differences. Formula:

\[

d(p, q) = \sum_{i=1}^{n}|p_i – q_i|

\] - Minkowski distance: Generalized form that includes Euclidean and Manhattan as special cases:

\[

d(p, q) = \left(\sum_{i=1}^n |p_i – q_i|^m\right)^{1/m}

\]

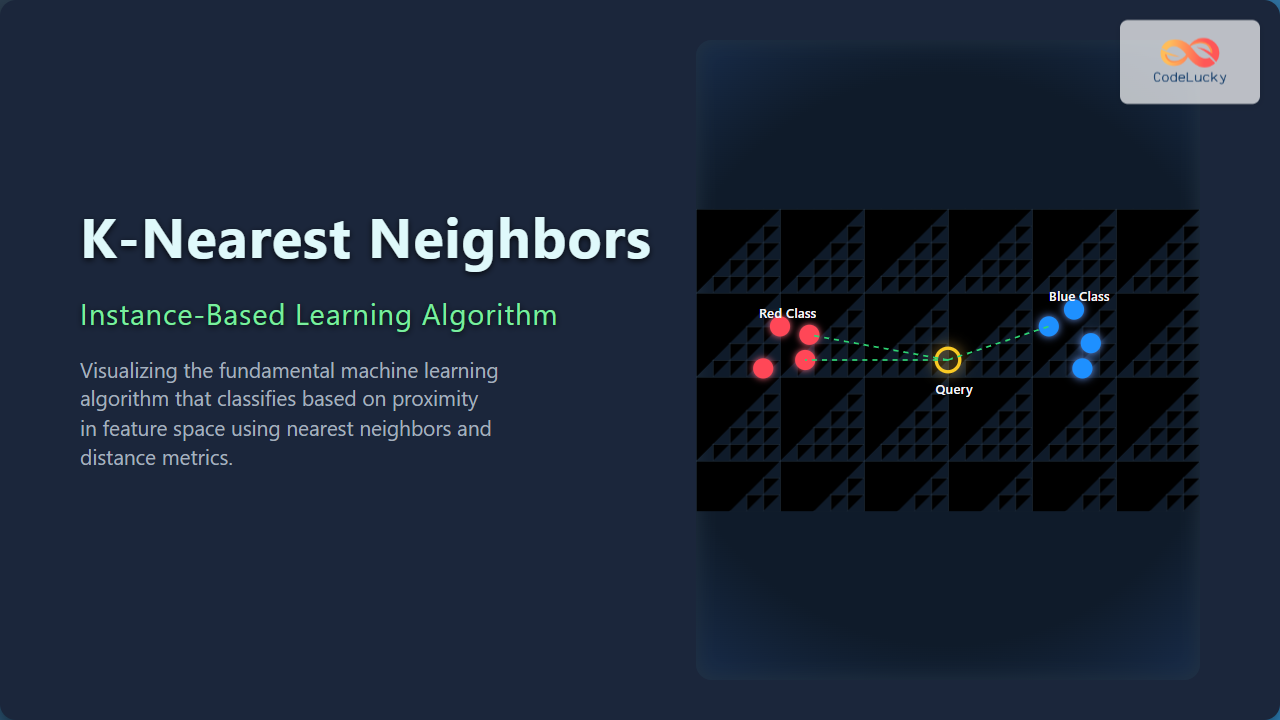

Example: KNN Classification with 2D Points

Consider a dataset with two classes, labeled Red and Blue. A new point (shown as Query) needs classification.

In this example with k=3, the nearest neighbors to the Query point are two red points and one blue point. With majority voting, the Query is classified as Red.

Interactive Example (Conceptual)

An interactive visualization would allow users to dynamically add points, adjust k, and see how classification changes, enhancing understanding. Though actual interactivity isn’t implemented here, the concept is vital for learning KNN.

Pros and Cons of KNN

| Advantages | Disadvantages |

|---|---|

|

|

Improving KNN Performance

- Feature Scaling: Normalize or standardize features to improve distance calculations.

- Dimensionality Reduction: Use PCA or other methods to reduce noise and dimensionality.

- Weighted Voting: Weight neighbors by inverse distance for refined prediction.

- Data Structure Optimization: Use KD-Trees or Ball Trees for faster nearest neighbor search.

Summary

K-Nearest Neighbors is a core instance-based learning algorithm offering a straightforward yet powerful approach to classification and regression problems. Its reliance on distance metrics and neighborhood voting makes it intuitive, while its lazy learning nature avoids heavy model training. Understanding and selecting appropriate parameters like k and distance functions, along with preprocessing, significantly impact KNN’s effectiveness.

This detailed guide on KNN provides foundational knowledge, practical examples with graphical explanation, and insights into best practices for utilization in real-world machine learning workflows.