Introduction to Page Replacement Algorithms

Page replacement algorithms are fundamental components of virtual memory systems in modern operating systems. When physical memory becomes full and a new page needs to be loaded, the operating system must decide which existing page to remove (or “replace”) to make room. The choice of which page to evict can significantly impact system performance, making the study of these algorithms crucial for system optimization.

Virtual memory allows programs to use more memory than physically available by storing some pages on disk and swapping them in and out as needed. When a page fault occurs and all physical memory frames are occupied, a page replacement algorithm determines the victim page to be replaced.

Understanding Page Faults and Memory Frames

Before diving into specific algorithms, let’s establish key concepts:

- Page Fault: Occurs when a program tries to access a page not currently in physical memory

- Memory Frame: A fixed-size block of physical memory that can hold one page

- Reference String: The sequence of page numbers accessed by a program

- Hit Ratio: Percentage of memory accesses that don’t cause page faults

- Page Fault Rate: Number of page faults per total memory accesses

FIFO (First-In-First-Out) Algorithm

The FIFO algorithm is the simplest page replacement strategy. It maintains the order in which pages were loaded into memory and always replaces the oldest page first. This algorithm treats memory frames like a queue – the first page loaded is the first to be replaced.

FIFO Algorithm Characteristics

- Implementation: Simple queue-based structure

- Time Complexity: O(1) for replacement decision

- Space Complexity: O(n) where n is the number of frames

- Advantage: Easy to implement and understand

- Disadvantage: Doesn’t consider page usage patterns

FIFO Example Walkthrough

Let’s trace through an example with 3 memory frames and the reference string: 1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5

Step-by-Step FIFO Execution

| Step | Page Reference | Frame 0 | Frame 1 | Frame 2 | Page Fault? |

|---|---|---|---|---|---|

| 1 | 1 | 1 | – | – | Yes |

| 2 | 2 | 1 | 2 | – | Yes |

| 3 | 3 | 1 | 2 | 3 | Yes |

| 4 | 4 | 4 | 2 | 3 | Yes |

| 5 | 1 | 4 | 1 | 3 | Yes |

| 6 | 2 | 4 | 1 | 2 | Yes |

Legend: Green = New page loaded | Red = Page replaced

Belady’s Anomaly in FIFO

FIFO suffers from a counterintuitive phenomenon called Belady’s Anomaly, where increasing the number of memory frames can actually increase the number of page faults. This occurs because FIFO doesn’t consider the future usage of pages.

Example of Belady’s Anomaly

Reference string: 1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5

- With 3 frames: 9 page faults

- With 4 frames: 10 page faults

LRU (Least Recently Used) Algorithm

The LRU algorithm is based on the principle of temporal locality – recently accessed pages are more likely to be accessed again soon. It replaces the page that has been unused for the longest period of time.

LRU Algorithm Characteristics

- Implementation: Requires tracking access times or maintaining access order

- Time Complexity: O(1) with proper data structures (doubly-linked list + hash map)

- Space Complexity: O(n) for tracking structures

- Advantage: Better performance than FIFO, respects temporal locality

- Disadvantage: More complex implementation, overhead for tracking

LRU Implementation Approaches

There are several ways to implement LRU:

- Counter Method: Associate a counter with each page, increment on access

- Stack Method: Maintain a stack of page numbers, move accessed page to top

- Doubly Linked List + Hash Map: Most efficient O(1) implementation

LRU Example Walkthrough

Using the same reference string: 1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5 with 3 frames:

| Step | Page Reference | Memory State | LRU Order | Page Fault? |

|---|---|---|---|---|

| 1 | 1 | [1, -, -] | 1 (most recent) | Yes |

| 2 | 2 | [1, 2, -] | 2, 1 | Yes |

| 3 | 3 | [1, 2, 3] | 3, 2, 1 | Yes |

| 4 | 4 | [4, 2, 3] | 4, 3, 2 (1 removed) | Yes |

| 5 | 1 | [4, 1, 3] | 1, 4, 3 (2 removed) | Yes |

| 6 | 2 | [4, 1, 2] | 2, 1, 4 (3 removed) | Yes |

Optimal Page Replacement Algorithm

The Optimal algorithm, also known as Farthest-in-Future or MIN, replaces the page that will be accessed farthest in the future. If a page will never be accessed again, it’s the perfect candidate for replacement.

Optimal Algorithm Characteristics

- Implementation: Requires knowledge of future page accesses

- Time Complexity: O(n×m) where n is reference string length, m is number of frames

- Practicality: Not implementable in real systems

- Purpose: Theoretical benchmark for comparison

- Advantage: Minimum possible page fault rate

- Disadvantage: Requires future knowledge (impossible in practice)

Optimal Algorithm Example

Using the reference string: 1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5 with 3 frames:

| Step | Page Reference | Memory State | Future Accesses | Page Fault? |

|---|---|---|---|---|

| 4 | 4 | [1, 2, 3] → [4, 2, 3] | 1→next at pos 5, 2→next at pos 6, 3→next at pos 10 | Yes (replace 3) |

| 7 | 5 | [4, 2, 1] → [5, 2, 1] | 4→next at pos 11, 2→next at pos 8, 1→next at pos 8 | Yes (replace 4) |

Comparison of Page Replacement Algorithms

| Algorithm | Page Faults (Example) | Implementation Complexity | Overhead | Belady’s Anomaly |

|---|---|---|---|---|

| FIFO | 9 | Very Low | Minimal | Yes |

| LRU | 8 | Medium | Moderate | No |

| Optimal | 6 | Impossible | N/A | No |

Advanced Page Replacement Techniques

Clock Algorithm (Second Chance)

The Clock algorithm is a practical approximation of LRU that uses a reference bit for each page. It’s also known as the Second Chance algorithm.

- Mechanism: Circular list with reference bits

- Operation: Give pages a “second chance” if referenced recently

- Advantage: Better than FIFO, simpler than LRU

- Use case: Many real operating systems

Enhanced Second Chance Algorithm

This algorithm considers both reference and modify bits, creating four categories:

- (0,0): Not recently used, not modified – best candidate

- (0,1): Not recently used, but modified – requires write to disk

- (1,0): Recently used, not modified – give second chance

- (1,1): Recently used and modified – give second chance

Performance Analysis and Metrics

Key Performance Indicators

Factors Affecting Performance

- Reference String Patterns: Sequential vs. random access

- Working Set Size: Number of pages actively used

- Memory Frame Count: More frames generally reduce page faults

- Page Size: Larger pages reduce fault frequency but waste space

- Temporal Locality: Recent pages likely to be accessed again

- Spatial Locality: Nearby pages likely to be accessed together

Implementation Considerations

Hardware Support Requirements

Different algorithms require varying levels of hardware support:

- FIFO: No special hardware needed

- LRU: Reference bits or counters for tracking access order

- Clock: Single reference bit per page

- Enhanced Second Chance: Reference and modify bits

Software Implementation Strategies

LRU Implementation with Doubly Linked List

struct PageNode {

int pageNumber;

struct PageNode* prev;

struct PageNode* next;

};

struct LRUCache {

int capacity;

int size;

struct PageNode* head; // Most recently used

struct PageNode* tail; // Least recently used

HashMap* pageMap; // Page number -> Node mapping

};

void accessPage(struct LRUCache* cache, int pageNumber) {

if (pageExists(cache, pageNumber)) {

// Move to front (most recently used)

moveToHead(cache, pageNumber);

} else {

if (cache->size >= cache->capacity) {

// Remove LRU page

removeTail(cache);

}

// Add new page to head

addToHead(cache, pageNumber);

}

}Real-World Applications

Operating System Implementations

- Linux: Uses Clock algorithm variants (2Q, ARC)

- Windows: Working Set Clock algorithm

- macOS: Modified Clock with aging

- Unix variants: Different Clock implementations

Database Buffer Management

Database systems also use page replacement algorithms for buffer pool management:

- LRU-K: Considers K previous references

- ARC (Adaptive Replacement Cache): Adapts between LRU and LFU

- 2Q: Two-queue strategy for better performance

Optimization Techniques

Working Set Model

The working set model defines the set of pages a process references during a time window. Understanding working sets helps optimize page replacement decisions.

Prefetching Strategies

- Sequential Prefetching: Load next pages in sequence

- Spatial Prefetching: Load nearby pages

- Temporal Prefetching: Load previously co-accessed pages

Adaptive Algorithms

Modern systems often use adaptive algorithms that adjust their behavior based on observed access patterns:

- ARC (Adaptive Replacement Cache): Balances between recency and frequency

- CAR (Clock with Adaptive Replacement): Low-overhead adaptive approach

- LIRS (Low Inter-Reference Recency Set): Distinguishes hot and cold pages

Performance Tuning Guidelines

Choosing the Right Algorithm

Decision Framework

- Use FIFO when: Simplicity is priority, minimal overhead required

- Use LRU when: Good performance needed, moderate overhead acceptable

- Use Clock when: Balance between performance and simplicity required

- Use Adaptive when: Workload patterns vary significantly

System Tuning Parameters

- Memory Pool Size: Balance between memory usage and performance

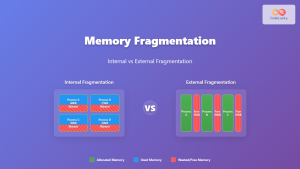

- Page Size: Consider internal vs. external fragmentation

- Replacement Frequency: Batch replacements for efficiency

- Prefetch Distance: How many pages to prefetch ahead

Conclusion

Page replacement algorithms are crucial for virtual memory system performance. While the Optimal algorithm provides the theoretical minimum page fault rate, practical systems rely on algorithms like LRU and Clock that approximate optimal behavior with reasonable overhead.

The choice between algorithms depends on:

- Performance requirements: How much overhead is acceptable

- Hardware capabilities: Available reference and modify bits

- Workload characteristics: Access patterns and temporal locality

- System constraints: Memory, CPU, and implementation complexity

FIFO remains valuable for its simplicity, LRU offers better performance for most workloads, and adaptive algorithms provide the best results for varying access patterns. Understanding these algorithms enables system administrators and developers to make informed decisions about memory management optimization.

Modern systems increasingly use hybrid approaches that combine multiple strategies, adjusting their behavior based on runtime analysis of access patterns. As memory hierarchies become more complex with technologies like persistent memory and storage-class memory, page replacement algorithms continue to evolve to meet new performance challenges.