In the era of big data and digital communication, efficient data compression is critical. The Huffman coding algorithm is an essential method for lossless data compression: it assigns variable-length codes according to symbol frequencies so that the average code length is as small as a symbol-by-symbol prefix code allows. This article covers the workings, construction, benefits, and applications of Huffman coding, with examples and diagrams to aid understanding.

What Is the Huffman Coding Algorithm?

Huffman Coding is a popular greedy algorithm invented by David A. Huffman in 1952, designed to compress data by generating optimal prefix codes. It assigns shorter codes to more frequent symbols and longer codes to less frequent ones, thereby reducing the overall bit-length required to encode data.

The key characteristic is that the codes are prefix-free, meaning no code is a prefix of another, ensuring unambiguous decoding.
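The prefix-free property can be checked mechanically. The following is a minimal sketch; the function name `is_prefix_free` and the two small code tables are illustrative assumptions, not part of any standard library.

```python
def is_prefix_free(codes):
    """Return True if no code word in {symbol: bitstring} is a prefix of another."""
    words = sorted(codes.values())
    # After lexicographic sorting, a word that is a prefix of any other word
    # is also a prefix of its immediate successor, so checking neighbours suffices.
    return all(not b.startswith(a) for a, b in zip(words, words[1:]))


# Hypothetical tables chosen only to show both outcomes.
print(is_prefix_free({"A": "0", "B": "10", "C": "11"}))  # prefix-free
print(is_prefix_free({"A": "0", "B": "01"}))             # "0" is a prefix of "01"
```

With the second table, a decoder reading `0` could not tell whether it has seen a complete A or the start of a B, which is exactly the ambiguity that prefix-free codes rule out.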

Why Is Huffman Coding Optimal?

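The algorithm produces a variable-length prefix code that minimizes the average code length for the given symbol frequencies, among all codes that assign a whole number of bits to each symbol. Its average length is always within one bit per symbol of the source entropy, the theoretical lower bound for lossless compression, and it matches the entropy exactly when every symbol probability is a power of 1/2.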

This optimality is proven mathematically and widely accepted in information theory for lossless compression.
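Stated as formulas, the relationship looks as follows. The notation is introduced here for illustration: p_i is the probability of symbol i, ℓ_i is the length in bits of its Huffman code, H is the source entropy, and L is the average code length.

```latex
H = -\sum_{i} p_i \log_2 p_i, \qquad
L = \sum_{i} p_i \,\ell_i, \qquad
H \le L < H + 1
```

The left inequality holds for every uniquely decodable code; the right one is the guarantee specific to Huffman codes.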

How Huffman Coding Works: Step-by-Step

The Huffman Coding Algorithm can be broken down into these steps:

- Count frequencies: Calculate the frequency of each symbol in the input dataset.

- Build a priority queue: Create a min-heap or priority queue where each element is a tree node containing a symbol and its frequency.

- Build the Huffman Tree: Repeat until only one node remains:

  - Remove the two nodes with the smallest frequencies from the queue.

  - Create a new internal node with these two nodes as children and frequency equal to their sum.

  - Insert the new node back into the queue.

- Generate codes: Traverse the tree from the root to assign binary codes: ‘0’ for the left branch and ‘1’ for the right branch.

- Encode data: Replace each symbol in the input with its corresponding code.

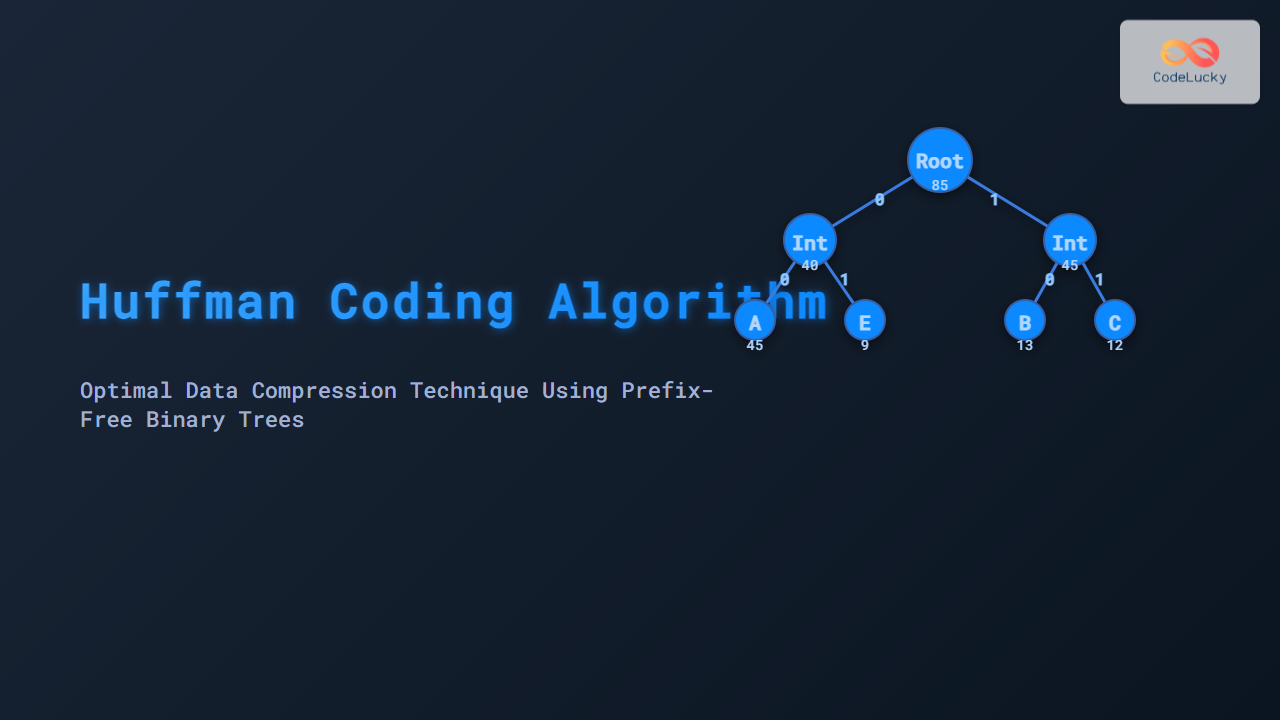
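The steps above can be sketched in Python with the standard `heapq` module. This is one possible implementation under stated assumptions rather than a reference version: the name `build_codes`, the tuple-based node layout, the tie-breaking counter, and the choice to give a single-symbol input the code `0` are all illustrative decisions.

```python
import heapq
from collections import Counter
from itertools import count


def build_codes(freqs):
    """Return {symbol: bitstring} for a {symbol: frequency} mapping."""
    tie = count()  # unique tie-breaker so the heap never compares tree nodes
    # Heap entries are (frequency, tie, node); a node is ("leaf", symbol)
    # or ("node", left_child, right_child).
    heap = [(f, next(tie), ("leaf", sym)) for sym, f in freqs.items()]
    heapq.heapify(heap)
    if not heap:
        return {}
    if len(heap) == 1:
        # Assumption: a lone symbol still needs one bit to be representable.
        return {heap[0][2][1]: "0"}

    # Repeatedly merge the two lowest-frequency nodes.
    while len(heap) > 1:
        f1, _, left = heapq.heappop(heap)
        f2, _, right = heapq.heappop(heap)
        heapq.heappush(heap, (f1 + f2, next(tie), ("node", left, right)))

    codes = {}

    def walk(node, prefix):
        if node[0] == "leaf":
            codes[node[1]] = prefix
        else:
            walk(node[1], prefix + "0")  # left edge
            walk(node[2], prefix + "1")  # right edge

    walk(heap[0][2], "")
    return codes


text = "abracadabra"
codes = build_codes(Counter(text))  # step 1: count frequencies
encoded = "".join(codes[ch] for ch in text)
print(codes)
print(len(encoded), "bits")
```

For this input the encoded length is 23 bits, compared with 33 bits for a fixed 3-bit code over the five distinct letters. The exact bit patterns depend on how ties between equal frequencies are broken, but the total length does not.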

Example of Huffman Coding

Consider the symbol set: A, B, C, D, E with the following frequencies:

- A: 45

- B: 13

- C: 12

- D: 16

- E: 9

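Stepwise, the two smallest frequencies, E(9) and C(12), combine to form a node of frequency 21. The two smallest remaining are then B(13) and D(16), which combine into a node of frequency 29. Next, the nodes 21 and 29 merge into a node of frequency 50, and finally A(45) joins that node to create the root, whose frequency is 95, the sum of all symbol frequencies.

By traversing the final tree, with the lower-frequency child placed on the left at each merge, we assign codes to each symbol:

- A: 0

- B: 110

- C: 101

- D: 111

- E: 100

The weighted average length is (45 × 1 + 50 × 3) / 95 ≈ 2.05 bits per symbol, compared with 3 bits per symbol for a fixed-length code over five symbols.

Constructing Huffman Codes: Visual Tree and Code Assignment

From the root, left edges assign ‘0’ and right edges ‘1’. Following the edges from the root to each leaf yields the codes above. Because every symbol sits at a leaf, no code can be a prefix of another.

Encoding and Decoding Example

Using the codes assigned, an input string like "ABACAB" would be encoded as:

A (0) B (110) A (0) C (101) A (0) B (110) => 0 110 0 101 0 110

This binary stream can be decoded uniquely by walking the Huffman tree from the root, following one edge per bit, emitting a symbol whenever a leaf is reached, and then restarting at the root.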
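Encoding and decoding can be sketched in a few lines. Note the swapped-in technique: instead of walking an explicit tree, this sketch accumulates bits until they match an entry in a reverse lookup table, which is equivalent for a prefix-free code. The function names and the three-symbol `toy_codes` table are assumptions made for illustration; the table is deliberately smaller than the five-symbol example in this article.

```python
def encode(text, codes):
    """Concatenate the code word of every symbol in text."""
    return "".join(codes[ch] for ch in text)


def decode(bits, codes):
    """Recover the symbols from a bitstring produced with a prefix-free table."""
    lookup = {code: sym for sym, code in codes.items()}
    out, buf = [], ""
    for bit in bits:
        buf += bit
        # Prefix-freeness means the first match is the only possible match.
        if buf in lookup:
            out.append(lookup[buf])
            buf = ""
    if buf:
        raise ValueError("trailing bits do not form a complete code word")
    return "".join(out)


# Hypothetical prefix-free table, not derived from the frequencies above.
toy_codes = {"A": "0", "B": "10", "C": "11"}
message = "BACCA"
bits = encode(message, toy_codes)
print(bits)
print(decode(bits, toy_codes) == message)
```

A production decoder would typically operate on packed bytes rather than a string of `0` and `1` characters and would need to know how many padding bits to ignore at the end of the stream.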

Advantages of Huffman Coding

- Optimality: Produces the lowest possible average code length among prefix codes that encode one symbol at a time, for a given symbol distribution.

- Lossless: No data loss during compression and decompression.

- Simple Implementation: Relatively easy to implement with min-heap data structures.

Limitations

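- Static Huffman coding requires the symbol frequencies before encoding starts, which usually means two passes over the data (one to count, one to encode). Adaptive variants work in a single pass but are more complex.

- Offers little or no gain when symbol frequencies are close to uniform, where fixed-length coding performs just as well.

- The decoder needs the Huffman tree or code table, and the overhead of storing it can outweigh the savings on very small data sets.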
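The point about uniform frequencies can be checked numerically. The sketch below computes the source entropy for two hypothetical four-symbol distributions; the helper name `entropy_bits` and both frequency tables are assumptions for illustration.

```python
from math import log2


def entropy_bits(freqs):
    """Shannon entropy in bits per symbol for a {symbol: frequency} mapping."""
    total = sum(freqs.values())
    return -sum(f / total * log2(f / total) for f in freqs.values() if f > 0)


uniform = {"A": 25, "B": 25, "C": 25, "D": 25}
skewed = {"A": 85, "B": 5, "C": 5, "D": 5}

print(entropy_bits(uniform))  # equals the 2 bits of a fixed-length code
print(entropy_bits(skewed))   # well below 2 bits, so there is room to compress
```

For the uniform table the entropy is exactly 2 bits per symbol, which a plain 2-bit fixed-length code already achieves, so a Huffman code cannot improve on it. The skewed table has much lower entropy, which is where variable-length codes pay off.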

Applications of Huffman Coding

Huffman coding is widely used in many compression standards and algorithms, including:

- JPEG image compression (for entropy coding).

- MP3 audio compression.

- DEFLATE algorithm (in ZIP, gzip).

- General purpose lossless data compression tools and file formats.

Conclusion

Huffman Coding remains a foundational data compression algorithm due to its simplicity, efficiency, and optimality in encoding symbols based on frequency. Understanding its construction, tree representation, and practical encoding helps in grasping how modern compression techniques achieve lossless data reduction. The algorithm’s versatility makes it a must-know for computer scientists, software engineers, and data enthusiasts.