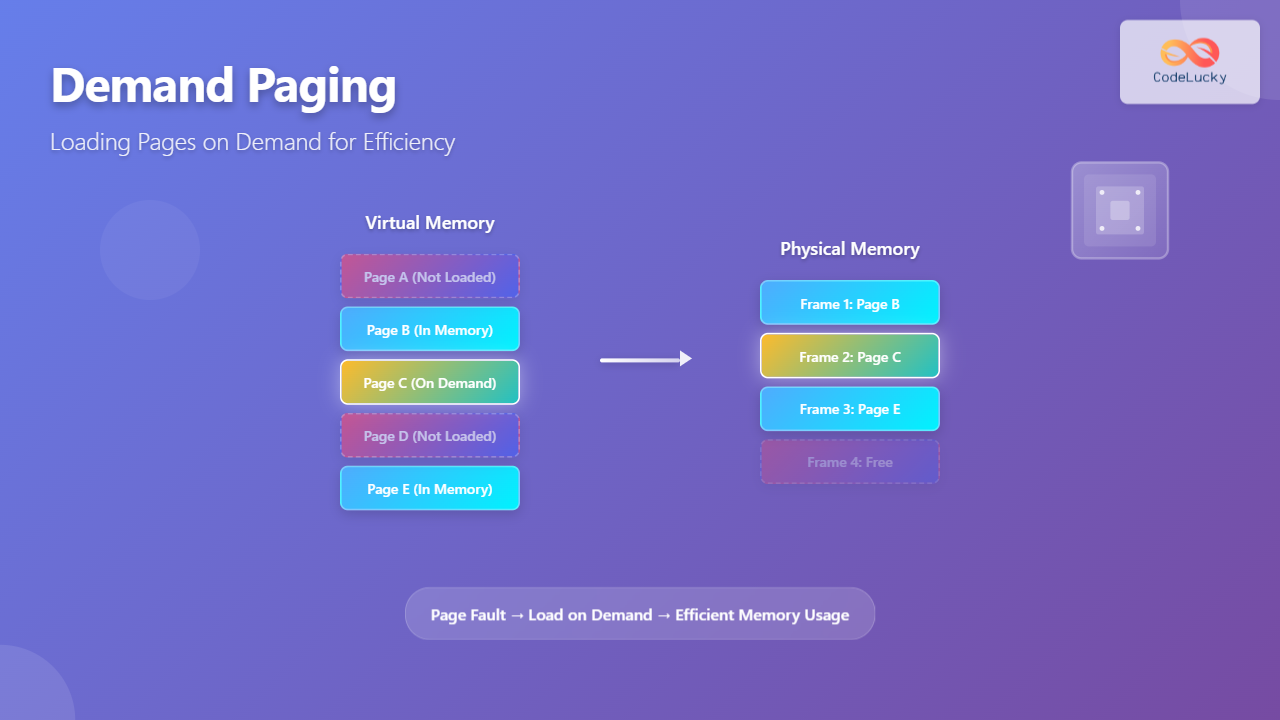

Introduction to Demand Paging

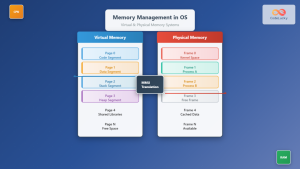

Demand paging is a sophisticated memory management technique used by modern operating systems to optimize memory usage and improve system performance. Instead of loading entire programs into physical memory at once, demand paging loads pages into memory only when they are actually needed during program execution.

This lazy loading approach allows systems to run programs that are larger than the available physical memory, creating the illusion of a memory space far larger than the installed RAM through virtual memory management. When a program references a page that isn’t currently in memory, the operating system handles the request transparently, loading the required page from storage.

How Demand Paging Works

The demand paging mechanism operates on the principle of locality of reference – the observation that programs typically access only a small portion of their total memory space at any given time. This behavior makes demand paging highly effective for memory optimization.

Key Components of Demand Paging

Several critical components work together to implement demand paging effectively:

- Page Table: Maps virtual addresses to physical addresses and tracks page status

- Valid/Invalid Bits: Indicate whether a page is currently in memory

- Page Fault Handler: Operating system routine that loads missing pages

- Secondary Storage: Backing store where pages are kept when not in memory

- Frame Allocation: Management of available physical memory frames
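As a rough illustration of how these components fit together, the following Python sketch models a page table, a backing store, and a fault handler. It is a toy model under simplifying assumptions: frames are never exhausted, a missing page-table entry stands in for an invalid bit, and every name in it (`DemandPagedMemory`, `backing_store`, and so on) is hypothetical rather than taken from any real kernel.

```python
# Toy model of demand paging: pages are loaded from a backing store on first access.
# All names are illustrative; physical memory is assumed to be unlimited.

class DemandPagedMemory:
    def __init__(self, backing_store):
        self.backing_store = backing_store  # page number -> contents (secondary storage)
        self.page_table = {}                # page number -> frame number (present pages only)
        self.frames = []                    # physical frames; the list index is the frame number
        self.fault_count = 0

    def _handle_page_fault(self, page_num):
        """Load the missing page into a fresh frame and record the mapping."""
        if page_num not in self.backing_store:
            raise MemoryError(f"invalid access to unmapped page {page_num}")
        self.fault_count += 1
        self.frames.append(self.backing_store[page_num])
        self.page_table[page_num] = len(self.frames) - 1

    def read(self, page_num):
        # A missing page-table entry plays the role of an invalid bit.
        if page_num not in self.page_table:
            self._handle_page_fault(page_num)
        return self.frames[self.page_table[page_num]]


store = {n: f"contents of page {n}" for n in range(8)}
memory = DemandPagedMemory(store)
for page in [0, 1, 0, 3, 1]:
    memory.read(page)

print(f"faults: {memory.fault_count}, resident pages: {sorted(memory.page_table)}")
```

With this access sequence only pages 0, 1 and 3 are ever loaded, so the model reports three faults even though the backing store holds eight pages.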

Page Fault Handling Process

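When a program attempts to access a page that isn’t in memory, a page fault occurs. The memory management unit raises this fault as a hardware exception, which transfers control to the operating system’s page fault handler. Once the handler has resolved the fault, the faulting instruction is restarted.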

Types of Page Faults

Page faults can be categorized into different types based on their cause:

- Hard Page Fault: Page must be loaded from secondary storage

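- Soft Page Fault: Page is already in physical memory (for example, in the page cache or shared with another process) but is not yet mapped in the faulting process’s page table, so no disk I/O is needed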

- Invalid Page Fault: Access to unmapped or protected memory region
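The distinction between the three categories can be sketched as a small decision function. This is a simplified model, not how any particular kernel is written: the sets `valid_pages` and `resident_pages` and the function `classify_fault` are assumptions made for illustration, and permission checks are left out.

```python
from enum import Enum


class FaultType(Enum):
    HARD = "hard"
    SOFT = "soft"
    INVALID = "invalid"


def classify_fault(page_num, valid_pages, resident_pages):
    """Classify a fault on page_num.

    valid_pages:    pages the process is allowed to access (hypothetical input)
    resident_pages: pages whose contents are already in physical memory
    """
    if page_num not in valid_pages:
        return FaultType.INVALID      # unmapped region: the access itself is illegal
    if page_num in resident_pages:
        return FaultType.SOFT         # only the page-table mapping is missing
    return FaultType.HARD             # contents must be read from secondary storage


valid = {0, 1, 2, 3}
resident = {1, 3}
for page in (1, 2, 7):
    print(page, classify_fault(page, valid, resident).value)
```

Here page 1 is classified as soft, page 2 as hard, and page 7 as invalid.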

Advantages of Demand Paging

Demand paging provides numerous benefits that make it essential for modern operating systems:

Memory Efficiency

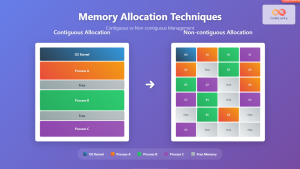

Reduced Memory Requirements: Programs use only the memory they actually need, allowing more programs to run simultaneously. A typical program might use only 10-20% of its total allocated memory space during execution.

Improved System Performance

By loading pages on demand, the system reduces:

- Initial program loading time

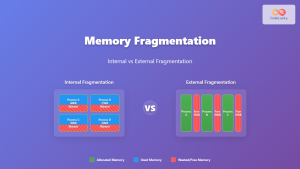

- Memory fragmentation

- Unnecessary I/O operations

- Overall system startup time

Support for Large Programs

Programs larger than physical memory can execute seamlessly, as only active portions need to be in memory simultaneously.

Implementation Example

Let’s examine a practical implementation of demand paging concepts:

// Simplified page table entry structure
struct page_table_entry {
    unsigned int present  : 1;   // Page in memory?
    unsigned int writable : 1;   // Write permission
    unsigned int user     : 1;   // User mode access
    unsigned int accessed : 1;   // Recently accessed
    unsigned int dirty    : 1;   // Modified since load
    unsigned int frame    : 20;  // Physical frame number
};

// Page fault handler (simplified)
// PAGE_SHIFT, page_table, allocate_frame(), load_page_from_disk() and
// flush_tlb_entry() are assumed to be provided by the surrounding kernel code.
void handle_page_fault(unsigned long virtual_addr) {
    struct page_table_entry *pte;
    unsigned long page_num;
    unsigned long frame_num;

    // Extract page number from virtual address
    page_num = virtual_addr >> PAGE_SHIFT;

    // Get page table entry
    pte = &page_table[page_num];

    // Handle only the not-present case; checks for unmapped or
    // protected addresses are omitted in this simplified version
    if (!pte->present) {
        // Allocate physical frame
        frame_num = allocate_frame();

        // Load page from storage
        load_page_from_disk(page_num, frame_num);

        // Update page table entry
        pte->frame = frame_num;
        pte->present = 1;
        pte->accessed = 1;

        // Flush TLB entry
        flush_tlb_entry(virtual_addr);
    }
}

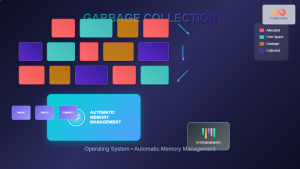

Page Replacement Algorithms

When physical memory becomes full, the operating system must decide which pages to evict to make room for new pages. Various algorithms handle this decision:

Least Recently Used (LRU) Algorithm

LRU is one of the most effective page replacement algorithms, based on the principle that recently used pages are more likely to be used again soon.

class LRUPageReplacer:
    def __init__(self, capacity):
        self.capacity = capacity
        self.pages = {}
        self.access_order = []

    def access_page(self, page_num):
        if page_num in self.pages:
            # Update access order
            self.access_order.remove(page_num)
            self.access_order.append(page_num)
            return "HIT"
        else:
            # Page fault occurred
            if len(self.pages) >= self.capacity:
                # Replace least recently used page
                lru_page = self.access_order.pop(0)
                del self.pages[lru_page]
                print(f"Evicted page {lru_page}")

            # Load new page
            self.pages[page_num] = True
            self.access_order.append(page_num)
            return "MISS"

# Example usage
replacer = LRUPageReplacer(3)
accesses = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]

for page in accesses:
    result = replacer.access_page(page)
    print(f"Page {page}: {result}")
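To put LRU next to another policy, the sketch below counts page faults for the same reference string under LRU and under first-in, first-out (FIFO) replacement. FIFO is brought in here purely for comparison and is not described in the text above; the function names `count_faults_fifo` and `count_faults_lru` are hypothetical.

```python
from collections import OrderedDict, deque


def count_faults_fifo(accesses, capacity):
    """Count faults when the page that was loaded earliest is evicted first."""
    resident = set()
    load_order = deque()
    faults = 0
    for page in accesses:
        if page in resident:
            continue                      # hit: FIFO ignores recency of use
        faults += 1
        if len(resident) >= capacity:
            resident.discard(load_order.popleft())
        resident.add(page)
        load_order.append(page)
    return faults


def count_faults_lru(accesses, capacity):
    """Count faults when the least recently used page is evicted first."""
    resident = OrderedDict()              # keys ordered from least to most recently used
    faults = 0
    for page in accesses:
        if page in resident:
            resident.move_to_end(page)    # hit: mark as most recently used
            continue
        faults += 1
        if len(resident) >= capacity:
            resident.popitem(last=False)  # evict the least recently used page
        resident[page] = True
    return faults


accesses = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
for frames in (3, 4):
    fifo = count_faults_fifo(accesses, frames)
    lru = count_faults_lru(accesses, frames)
    print(f"{frames} frames: FIFO={fifo} faults, LRU={lru} faults")
```

On this particular reference string FIFO incurs 9 faults with 3 frames but 10 with 4, an instance of Belady’s anomaly, while LRU drops from 10 faults to 8 when a fourth frame is added.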

Performance Considerations

The effectiveness of demand paging depends on several factors that impact system performance:

Page Fault Rate

The effective access time can be calculated using the formula:

Effective Access Time = (1 - p) × Memory Access Time + p × Page Fault Time

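Where p is the page fault rate, that is, the probability (between 0 and 1) that a memory access causes a page fault. Because servicing a fault from disk takes several orders of magnitude longer than an ordinary memory access, even a very small p dominates the effective access time, so the page fault rate should be kept as low as possible.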
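A short worked example may make the formula more concrete. The timings below (100 ns per memory access, 8 ms to service a page fault) are assumed values chosen for illustration, not measurements, and `effective_access_time` is a hypothetical helper name.

```python
def effective_access_time(p, memory_access_ns, page_fault_ns):
    """Effective Access Time = (1 - p) * memory access time + p * page fault time."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    return (1 - p) * memory_access_ns + p * page_fault_ns


MEMORY_ACCESS_NS = 100        # assumed: 100 ns per memory access
PAGE_FAULT_NS = 8_000_000     # assumed: 8 ms to service a page fault

for p in (0.0, 0.000001, 0.001):
    eat = effective_access_time(p, MEMORY_ACCESS_NS, PAGE_FAULT_NS)
    print(f"p = {p}: effective access time = {eat:.4f} ns")
```

Under these assumptions, one fault per thousand accesses (p = 0.001) raises the effective access time from 100 ns to about 8,100 ns, a slowdown of roughly 80 times.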

Working Set Size

The working set represents the set of pages a process actively uses during a time window. Proper working set management prevents thrashing – a condition where the system spends more time handling page faults than executing useful work.
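One common way to make the idea concrete is to define the working set at time t as the set of distinct pages touched in the last few references. The sketch below follows that definition; the window length, the reference string, and the function name `working_set` are assumptions chosen for illustration.

```python
def working_set(references, t, window):
    """Pages referenced in the `window` most recent references ending at index t."""
    start = max(0, t - window + 1)
    return set(references[start:t + 1])


# Hypothetical reference string: the process shifts from pages {1, 2, 3} to pages {7, 8}.
references = [1, 2, 1, 3, 1, 2, 7, 7, 7, 8, 7, 8]
WINDOW = 4

sizes = [len(working_set(references, t, WINDOW)) for t in range(len(references))]
print(sizes)
print(working_set(references, len(references) - 1, WINDOW))
```

The working set size peaks while the process moves from one locality to the next and then shrinks again. If the frames granted to a process stay below its working set size for long, the process faults repeatedly, which is the thrashing condition described above.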

Advanced Demand Paging Techniques

Prepaging

Prepaging attempts to predict which pages will be needed soon and loads them proactively. This technique reduces page faults but requires sophisticated prediction algorithms.
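The simplest prediction is that a fault on page n will soon be followed by accesses to the pages right after it. The sketch below simulates that sequential read-ahead policy with unlimited frames. It is one assumed form of prepaging, not the only one, and `simulate` and its `readahead` parameter are hypothetical names.

```python
def simulate(accesses, readahead=0):
    """Return (faults, unused_pages) when each fault also loads the next `readahead` pages."""
    resident = set()
    faults = 0
    for page in accesses:
        if page not in resident:
            faults += 1
            # Load the faulting page plus the pages that follow it.
            resident.update(range(page, page + readahead + 1))
    unused = len(resident - set(accesses))   # pages loaded but never referenced
    return faults, unused


sequential = list(range(16))
scattered = [0, 40, 8, 72, 16, 56, 24, 88]

for name, accesses in (("sequential", sequential), ("scattered", scattered)):
    for readahead in (0, 3):
        faults, unused = simulate(accesses, readahead)
        print(f"{name}, readahead={readahead}: {faults} faults, {unused} unused pages")
```

For the sequential pattern, a read-ahead of 3 cuts the faults from 16 to 4 with no wasted loads. For the scattered pattern it saves nothing and loads 24 pages that are never used, which is why the quality of the prediction matters.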

Copy-on-Write (COW)

COW optimization delays copying pages until they are actually modified, significantly improving the performance of process creation and memory sharing.

// Copy-on-Write page handling
void handle_cow_fault(struct vm_area *vma, unsigned long addr) {
    struct page *old_page, *new_page;

    // Get the current page
    old_page = get_page(addr);

    // Check if page is shared
    if (page_count(old_page) > 1) {
        // Allocate new page
        new_page = alloc_page();

        // Copy page contents
        copy_page_data(old_page, new_page);

        // Update page table to point to new page
        update_page_table(addr, new_page);

        // Mark as writable
        set_page_writable(addr);

        // Decrease reference count of old page
        put_page(old_page);
    } else {
        // Just mark as writable
        set_page_writable(addr);
    }
}
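The same reference-counting logic can be exercised in a small user-space model. The Python sketch below is only an analogy for the kernel code above: `Frame`, `AddressSpace`, and their methods are hypothetical names, and a `fork` here simply shares frames between two dictionaries.

```python
class Frame:
    """A physical frame with a count of the address spaces that map it."""

    def __init__(self, data):
        self.data = bytearray(data)
        self.ref_count = 1


class AddressSpace:
    def __init__(self):
        self.pages = {}                      # page number -> Frame

    def fork(self):
        """Create a child that shares every frame instead of copying it."""
        child = AddressSpace()
        for page_num, frame in self.pages.items():
            frame.ref_count += 1
            child.pages[page_num] = frame
        return child

    def write(self, page_num, offset, value):
        frame = self.pages[page_num]
        if frame.ref_count > 1:
            # Shared frame: copy it first, then drop our reference to the original.
            frame.ref_count -= 1
            frame = Frame(frame.data)
            self.pages[page_num] = frame
        frame.data[offset] = value


parent = AddressSpace()
parent.pages[0] = Frame(b"hello")
child = parent.fork()
shared_before_write = child.pages[0] is parent.pages[0]

child.write(0, 0, ord("j"))
print(shared_before_write, bytes(parent.pages[0].data), bytes(child.pages[0].data))
```

The frame is shared until the child writes to it. After the write the parent still sees `hello`, the child sees `jello`, and each frame has a reference count of 1.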

Real-World Applications

Demand paging is crucial in various computing scenarios:

Database Management Systems

Database systems use demand paging to manage large datasets efficiently, loading only the required data pages into memory buffers.

Virtual Machines

Hypervisors employ demand paging to allocate memory to virtual machines dynamically, enabling memory overcommitment.

Mobile Operating Systems

Mobile devices with limited memory rely heavily on demand paging to run multiple applications simultaneously.

Monitoring and Optimization

System administrators can monitor demand paging performance using various tools:

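# Monitor paging and swap activity on Linux (the si/so columns show swap-in/swap-out)
vmstat 1

# View detailed memory information
cat /proc/meminfo

# Check major and minor page fault counts per process
ps -eo pid,comm,maj_flt,min_flt

# Monitor swap usage (the older -s summary format is deprecated)
swapon --show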
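A process can also inspect its own fault counters. The sketch below uses the `resource` module from the Python standard library, which is available on Unix-like systems only. The buffer size is an arbitrary choice, and the exact counts depend on the operating system and allocator, so none are quoted here.

```python
import resource


def page_fault_counts():
    """Return (minor_faults, major_faults) accumulated by the current process."""
    usage = resource.getrusage(resource.RUSAGE_SELF)
    return usage.ru_minflt, usage.ru_majflt


minor_before, major_before = page_fault_counts()

# Allocate a buffer and touch one byte in every page so each page must be backed by a frame.
page_size = resource.getpagesize()
buffer = bytearray(16 * 1024 * 1024)
for offset in range(0, len(buffer), page_size):
    buffer[offset] = 1

minor_after, major_after = page_fault_counts()
print(f"minor faults: +{minor_after - minor_before}")
print(f"major faults: +{major_after - major_before}")
```

The minor and major counters correspond to the soft and hard faults described earlier, and to the `min_flt` and `maj_flt` columns printed by `ps`.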

Optimization Strategies

- Increase Physical Memory: More RAM reduces page fault frequency

- Optimize Page Size: Balance between internal fragmentation and I/O efficiency

- Improve Locality: Reorganize code and data structures for better access patterns

- Use Memory Mapping: Leverage memory-mapped files for efficient I/O
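The memory-mapping strategy can be tried directly from Python with the standard `mmap` module. The sketch below maps a temporary file and touches a single page of it. The file size and the offset are arbitrary choices for illustration, and whether untouched pages are really never read depends on the operating system’s read-ahead behaviour.

```python
import mmap
import os
import tempfile

PAGE = mmap.PAGESIZE

# Create a 64-page scratch file to map (size chosen only for illustration).
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"\0" * (PAGE * 64))
    path = tmp.name

try:
    with open(path, "r+b") as f, mmap.mmap(f.fileno(), 0) as mapped:
        # Indexing the mapping is an ordinary memory access; the OS pages data in on demand.
        first_byte_of_page_10 = mapped[PAGE * 10]

        # Writing dirties the page; flush() asks the OS to write changes back to the file.
        mapped[PAGE * 10:PAGE * 10 + 5] = b"hello"
        mapped.flush()

    # Read the file back through normal I/O to confirm the write reached it.
    with open(path, "rb") as f:
        f.seek(PAGE * 10)
        data = f.read(5)
finally:
    os.remove(path)

print(first_byte_of_page_10, data)
```

The program never issues an explicit read or write call for the mapped region. The file contents arrive through page faults, which is the same mechanism that loads program pages on demand.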

Future Developments

Demand paging continues to evolve with technological advances:

Non-Volatile Memory

Persistent-memory technologies such as Intel Optane (which Intel has since discontinued) blur the line between memory and storage, potentially changing how demand paging operates.

Machine Learning Integration

AI-driven page replacement algorithms can learn application patterns to make better eviction decisions.

Heterogeneous Memory Systems

Future systems may manage multiple memory types with different characteristics, requiring sophisticated demand paging strategies.

Conclusion

Demand paging represents a fundamental advancement in memory management, enabling efficient use of system resources while supporting complex, memory-intensive applications. By loading pages only when needed, operating systems can run more programs simultaneously and handle datasets larger than physical memory.

Understanding demand paging concepts is essential for system programmers, performance engineers, and anyone working with memory-intensive applications. As computing systems continue to evolve, demand paging will remain a critical component of efficient memory management, adapting to new hardware architectures and application requirements.

The success of demand paging lies in its ability to transparently manage memory complexity while providing applications with the illusion of a memory space far larger than physical RAM. This abstraction has enabled the development of sophisticated software systems that would be impractical without effective virtual memory management.