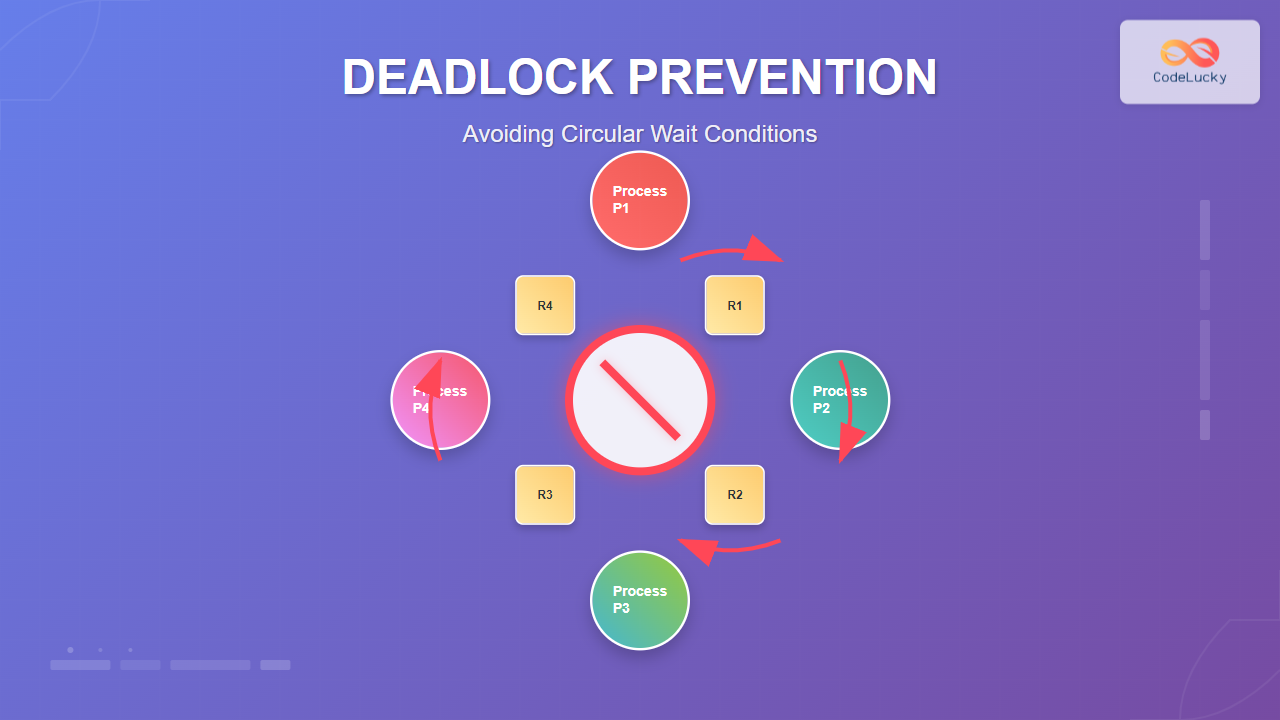

Understanding Deadlock and Circular Wait

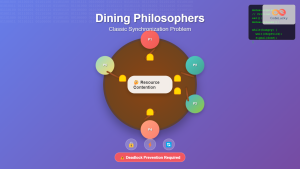

Deadlock is one of the most critical issues in operating systems where two or more processes become permanently blocked, each waiting for resources held by the other. Among the four necessary conditions for deadlock (mutual exclusion, hold and wait, no preemption, and circular wait), circular wait is often the most practical target for prevention strategies.

Circular wait occurs when processes form a chain where each process holds a resource needed by the next process in the chain, creating a closed loop. By preventing this condition, we can effectively eliminate the possibility of deadlock occurrence.

Core Strategies for Preventing Circular Wait

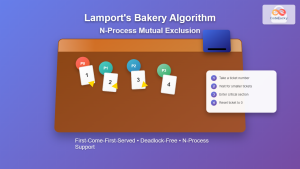

1. Resource Ordering (Linear Ordering)

The most widely implemented approach involves assigning a unique numerical order to all resources in the system. Processes must request resources in ascending order of their assigned numbers, preventing the formation of circular dependencies.

Implementation Example

// Resource ordering example

#define PRINTER 1

#define SCANNER 2

#define DISK 3

#define NETWORK 4

// Correct implementation - ascending order

void process_A() {

acquire_resource(PRINTER); // Resource 1

acquire_resource(DISK); // Resource 3

// ... perform operations

release_resource(DISK);

release_resource(PRINTER);

}

void process_B() {

acquire_resource(SCANNER); // Resource 2

acquire_resource(NETWORK); // Resource 4

// ... perform operations

release_resource(NETWORK);

release_resource(SCANNER);

}

2. Two-Phase Locking Protocol

This approach divides resource acquisition into two distinct phases: growing phase (acquire resources) and shrinking phase (release resources). Once a process begins releasing resources, it cannot acquire new ones.

Practical Implementation

class TwoPhaseLocking:

def __init__(self):

self.phase = "GROWING" # GROWING or SHRINKING

self.held_resources = []

self.lock = threading.Lock()

def acquire_resource(self, resource_id):

with self.lock:

if self.phase == "SHRINKING":

raise Exception("Cannot acquire resources in shrinking phase")

# Simulate resource acquisition

self.held_resources.append(resource_id)

print(f"Acquired resource {resource_id}")

return True

def release_resource(self, resource_id):

with self.lock:

self.phase = "SHRINKING" # Switch to shrinking phase

if resource_id in self.held_resources:

self.held_resources.remove(resource_id)

print(f"Released resource {resource_id}")

return True

3. Timestamp-Based Resource Allocation

Each process receives a unique timestamp when it starts. Resources are allocated based on these timestamps, ensuring a consistent ordering that prevents circular wait conditions.

public class TimestampBasedAllocation {

private static long globalTimestamp = 0;

private long processTimestamp;

private Map<Integer, Long> resourceOwners;

public TimestampBasedAllocation() {

this.processTimestamp = ++globalTimestamp;

this.resourceOwners = new ConcurrentHashMap<>();

}

public boolean requestResource(int resourceId) {

Long currentOwner = resourceOwners.get(resourceId);

// If resource is free or owned by newer process

if (currentOwner == null || currentOwner > this.processTimestamp) {

resourceOwners.put(resourceId, this.processTimestamp);

return true;

}

// Wait-die: if older process needs resource from newer, wait

// Otherwise, die (restart with same timestamp)

return false;

}

}

Advanced Prevention Techniques

Hierarchical Resource Allocation

This method organizes resources into a hierarchical structure where processes can only request resources from higher levels after completing operations on lower levels.

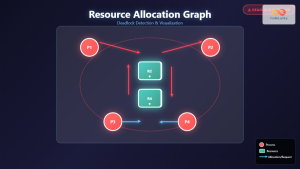

Resource Allocation Graph Analysis

Before granting any resource request, the system analyzes the resource allocation graph to ensure that granting the request won’t create a cycle.

class ResourceAllocationGraph:

def __init__(self):

self.processes = set()

self.resources = set()

self.allocation = {} # resource -> process

self.request = {} # process -> set of resources

def has_cycle(self):

"""Check if the graph contains a cycle using DFS"""

visited = set()

rec_stack = set()

def dfs(node):

visited.add(node)

rec_stack.add(node)

# Get neighbors based on graph structure

neighbors = self.get_neighbors(node)

for neighbor in neighbors:

if neighbor not in visited:

if dfs(neighbor):

return True

elif neighbor in rec_stack:

return True

rec_stack.remove(node)

return False

for node in self.processes.union(self.resources):

if node not in visited:

if dfs(node):

return True

return False

def safe_to_allocate(self, process, resource):

"""Check if allocation is safe before proceeding"""

# Temporarily make the allocation

old_allocation = self.allocation.get(resource)

self.allocation[resource] = process

# Check for cycles

safe = not self.has_cycle()

# Restore previous state

if old_allocation:

self.allocation[resource] = old_allocation

else:

del self.allocation[resource]

return safe

Real-World Implementation Examples

Database Transaction Management

Database systems commonly implement ordered locking to prevent deadlocks in concurrent transactions.

-- Example: Banking transaction with ordered locking

BEGIN TRANSACTION;

-- Always lock accounts in ascending order by account_id

DECLARE @account1 INT = CASE WHEN @from_account < @to_account

THEN @from_account ELSE @to_account END;

DECLARE @account2 INT = CASE WHEN @from_account > @to_account

THEN @from_account ELSE @to_account END;

-- Lock accounts in order

SELECT balance FROM accounts WHERE account_id = @account1 WITH (UPDLOCK);

SELECT balance FROM accounts WHERE account_id = @account2 WITH (UPDLOCK);

-- Perform transfer

UPDATE accounts SET balance = balance - @amount

WHERE account_id = @from_account;

UPDATE accounts SET balance = balance + @amount

WHERE account_id = @to_account;

COMMIT TRANSACTION;

Operating System Process Synchronization

// Linux kernel-style resource ordering

struct resource_manager {

spinlock_t lock;

struct list_head resources;

int max_resource_id;

};

int acquire_resources_ordered(int *resource_ids, int count) {

// Sort resource IDs to ensure consistent ordering

qsort(resource_ids, count, sizeof(int), compare_int);

for (int i = 0; i < count; i++) {

if (!try_acquire_resource(resource_ids[i])) {

// Release already acquired resources in reverse order

for (int j = i - 1; j >= 0; j--) {

release_resource(resource_ids[j]);

}

return -1; // Failed to acquire all resources

}

}

return 0; // Success

}

Performance Considerations and Trade-offs

Overhead Analysis

Resource ordering introduces minimal runtime overhead but may reduce system throughput due to artificial constraints on resource access patterns. The key trade-offs include:

- Memory overhead: Maintaining resource ordering information

- CPU overhead: Sorting resource requests and validation

- Throughput impact: Potentially longer waiting times for optimal resource combinations

- Fairness considerations: Some processes may consistently wait longer

Optimization Strategies

class OptimizedResourceManager {

private:

std::vector<std::mutex> resource_mutexes;

std::atomic<int> next_resource_id{0};

public:

// Batch acquisition with timeout

bool acquire_multiple_resources(

const std::vector<int>& resource_ids,

std::chrono::milliseconds timeout

) {

auto sorted_ids = resource_ids;

std::sort(sorted_ids.begin(), sorted_ids.end());

std::vector<std::unique_lock<std::mutex>> locks;

auto deadline = std::chrono::steady_clock::now() + timeout;

for (int id : sorted_ids) {

auto remaining_time = deadline - std::chrono::steady_clock::now();

if (remaining_time <= std::chrono::milliseconds(0)) {

return false; // Timeout

}

locks.emplace_back(

resource_mutexes[id],

std::defer_lock

);

if (!locks.back().try_lock_for(remaining_time)) {

return false; // Could not acquire within timeout

}

}

// All resources acquired successfully

return true;

}

};

Testing and Validation

Deadlock Detection Testing

import threading

import time

import random

class DeadlockTester:

def __init__(self, num_resources=5, num_processes=10):

self.resources = [threading.Lock() for _ in range(num_resources)]

self.resource_order = list(range(num_resources))

self.deadlock_detected = False

def ordered_acquisition_test(self, process_id):

"""Test ordered resource acquisition"""

# Randomly select resources to acquire

needed_resources = random.sample(

range(len(self.resources)),

random.randint(2, 4)

)

# Sort for ordered acquisition

needed_resources.sort()

acquired_locks = []

try:

for resource_id in needed_resources:

print(f"Process {process_id} acquiring resource {resource_id}")

self.resources[resource_id].acquire(timeout=5)

acquired_locks.append(resource_id)

# Simulate work

time.sleep(random.uniform(0.1, 0.5))

except Exception as e:

print(f"Process {process_id} failed: {e}")

self.deadlock_detected = True

finally:

# Release in reverse order

for resource_id in reversed(acquired_locks):

self.resources[resource_id].release()

print(f"Process {process_id} released resource {resource_id}")

def run_test(self, duration=30):

"""Run concurrent deadlock test"""

threads = []

start_time = time.time()

while time.time() - start_time < duration:

if len(threads) < 10: # Limit concurrent processes

t = threading.Thread(

target=self.ordered_acquisition_test,

args=(len(threads),)

)

threads.append(t)

t.start()

# Clean up completed threads

threads = [t for t in threads if t.is_alive()]

time.sleep(0.1)

# Wait for all threads to complete

for t in threads:

t.join(timeout=10)

return not self.deadlock_detected

Best Practices and Implementation Guidelines

Design Principles

- Establish consistent resource ordering early in system design

- Document resource hierarchy clearly for all developers

- Implement timeout mechanisms as a secondary safety measure

- Use automated testing to validate deadlock prevention

- Monitor resource contention patterns in production

Common Implementation Mistakes

- Inconsistent ordering: Different modules using different resource orders

- Dynamic resource creation: New resources not properly integrated into ordering scheme

- Exception handling gaps: Failing to release resources during error conditions

- Performance optimization bypasses: Circumventing ordering for performance gains

Conclusion

Preventing circular wait conditions is a highly effective approach to deadlock prevention that can be implemented with reasonable overhead. By establishing consistent resource ordering, implementing proper acquisition protocols, and maintaining rigorous testing practices, systems can achieve robust deadlock prevention.

The key to successful implementation lies in choosing the right strategy for your specific use case, whether it’s simple resource ordering for basic systems, timestamp-based allocation for distributed environments, or hierarchical approaches for complex resource dependencies. Remember that prevention is generally more efficient than detection and recovery, making these techniques valuable tools in system design.

Modern operating systems and database management systems successfully employ these techniques, demonstrating their practical viability in real-world applications. As systems become increasingly concurrent and distributed, mastering these deadlock prevention strategies becomes even more critical for building reliable software.