In modern computing, where applications handle thousands or even millions of tasks simultaneously, efficient task management becomes critical. Thread Pool algorithms provide an essential mechanism for managing concurrency by reusing a fixed set of worker threads to execute tasks, avoiding the overhead of creating and destroying threads repeatedly. This approach plays a vital role in high-performance systems, web servers, and large-scale parallel processing.

What is a Thread Pool?

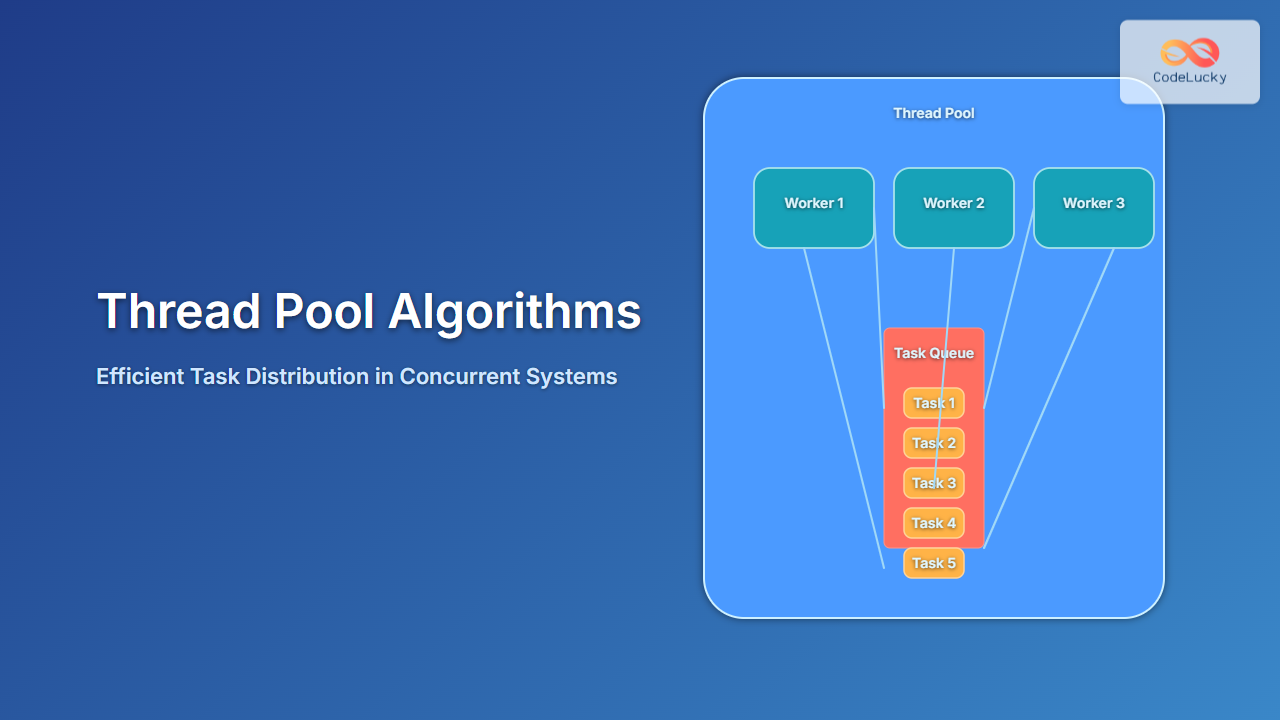

A thread pool is a collection of pre-instantiated, reusable worker threads maintained to perform tasks. Instead of creating a new thread for each incoming task, the system pushes tasks into a queue, and available threads take tasks from it for execution. Because the number of threads stays bounded, this limits memory consumption (every thread needs its own stack), avoids the CPU cost of repeatedly creating threads and of context-switching between too many of them, and improves throughput in scalable applications.

Why Use a Thread Pool?

- Performance: Eliminates the overhead of frequent thread creation and destruction.

- Resource Management: Limits the maximum number of simultaneous threads, preventing resource exhaustion.

- Task Scheduling: Allows task prioritization and batch processing.

- Scalability: Provides efficient distribution in large-scale systems.
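Prioritization is not automatic. It depends on the queue the pool is given. The sketch below assumes Java's `ThreadPoolExecutor` backed by a `PriorityBlockingQueue`. The `PrioritizedTask` class and its priority values are hypothetical. A single worker is kept busy first, so the remaining tasks pile up in the queue and are then drained highest-priority first.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.PriorityBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class PriorityPoolExample {

    // Hypothetical task type: a higher priority value runs first.
    static class PrioritizedTask implements Runnable, Comparable<PrioritizedTask> {
        final int priority;
        final String name;
        final List<String> log;

        PrioritizedTask(int priority, String name, List<String> log) {
            this.priority = priority;
            this.name = name;
            this.log = log;
        }

        @Override
        public void run() {
            log.add(name); // record execution order
        }

        @Override
        public int compareTo(PrioritizedTask other) {
            // Reverse order so the head of the queue is the highest priority.
            return Integer.compare(other.priority, this.priority);
        }
    }

    public static List<String> runDemo() throws InterruptedException {
        List<String> log = Collections.synchronizedList(new ArrayList<>());
        // One worker; tasks that cannot start immediately wait in a priority queue.
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0L, TimeUnit.MILLISECONDS, new PriorityBlockingQueue<Runnable>());

        CountDownLatch gate = new CountDownLatch(1);
        // The first task is handed directly to the new worker (it is not queued),
        // so it keeps the worker busy while the prioritized tasks are enqueued.
        pool.execute(() -> {
            try {
                gate.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        // Use execute(), not submit(): submit() wraps tasks in a FutureTask,
        // which is not Comparable and would break the priority queue.
        pool.execute(new PrioritizedTask(1, "low", log));
        pool.execute(new PrioritizedTask(10, "high", log));
        pool.execute(new PrioritizedTask(5, "medium", log));

        gate.countDown(); // release the worker
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);
        return log;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(runDemo()); // [high, medium, low]
    }
}
```

The tasks are submitted in the order low, high, medium, but they run in priority order. Batch-oriented or plain FIFO pools simply use a different queue type.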

How Thread Pool Algorithms Work

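Thread pool algorithms typically follow a simple but powerful workflow:

- Submission: A task is submitted to the pool.

- Assignment: If a worker thread is available, the task is assigned to it immediately.

- Queuing: Otherwise, the task waits in the queue until a thread becomes free.

- Reuse: When a worker finishes a task, it takes the next one from the queue instead of terminating.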
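The workflow described above can be sketched without any library pool. The `SimpleThreadPool` class below is a hypothetical, minimal illustration, not a production design: it has no rejection policy and no task results. A fixed set of worker threads loops, taking the next task from a shared `BlockingQueue`, and `submit` only enqueues. Shutdown uses a "poison pill" sentinel, one per worker, queued behind all pending tasks.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.atomic.AtomicInteger;

public class SimpleThreadPool {
    private final BlockingQueue<Runnable> queue = new LinkedBlockingQueue<>();
    private final List<Thread> workers = new ArrayList<>();
    // Sentinel task that tells a worker to exit its loop.
    private static final Runnable POISON_PILL = () -> { };

    public SimpleThreadPool(int size) {
        for (int i = 0; i < size; i++) {
            Thread worker = new Thread(this::workLoop, "simple-worker-" + i);
            workers.add(worker);
            worker.start();
        }
    }

    // Each worker blocks on the queue, runs the next task, and repeats.
    private void workLoop() {
        while (true) {
            Runnable task;
            try {
                task = queue.take();
            } catch (InterruptedException e) {
                return; // exit if interrupted while waiting
            }
            if (task == POISON_PILL) {
                return;
            }
            try {
                task.run();
            } catch (RuntimeException e) {
                // Keep the worker alive even if a task fails.
                System.err.println("Task failed: " + e);
            }
        }
    }

    // Submitting only enqueues; whichever worker is free picks the task up.
    public void submit(Runnable task) {
        queue.add(task);
    }

    // FIFO order means every pending task is taken before any pill.
    public void shutdownAndWait() throws InterruptedException {
        for (int i = 0; i < workers.size(); i++) {
            queue.add(POISON_PILL);
        }
        for (Thread worker : workers) {
            worker.join();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        SimpleThreadPool pool = new SimpleThreadPool(3);
        AtomicInteger completed = new AtomicInteger();
        for (int i = 0; i < 10; i++) {
            pool.submit(completed::incrementAndGet);
        }
        pool.shutdownAndWait();
        System.out.println("Completed tasks: " + completed.get()); // Completed tasks: 10
    }
}
```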

Types of Thread Pool Algorithms

Fixed Thread Pool

A fixed number of worker threads is allocated. If all of them are busy, new tasks wait in the queue until a worker becomes available.
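The queueing behaviour can be observed directly. The sketch below assumes Java's `ThreadPoolExecutor`, configured the way `Executors.newFixedThreadPool` configures it: core size equal to maximum size, with an unbounded `LinkedBlockingQueue`. Ten tasks that block on a latch are submitted to three threads. The pool never grows past three threads, and the remaining seven tasks sit in the queue.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class FixedPoolQueueing {
    // Returns {poolSize, queuedTasks} observed while every worker is busy.
    public static int[] observe(int threads, int tasks) throws InterruptedException {
        // Same shape as Executors.newFixedThreadPool(threads).
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                threads, threads, 0L, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<Runnable>());
        CountDownLatch release = new CountDownLatch(1);
        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> {
                try {
                    release.await(); // hold the worker until the snapshot is taken
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        int[] snapshot = {pool.getPoolSize(), pool.getQueue().size()};
        release.countDown();
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);
        return snapshot;
    }

    public static void main(String[] args) throws InterruptedException {
        int[] s = observe(3, 10);
        System.out.println("threads=" + s[0] + ", queued=" + s[1]); // threads=3, queued=7
    }
}
```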

Cached Thread Pool

Dynamically creates threads as needed but reuses previously created threads. Useful for many short-lived asynchronous tasks.
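The sketch below shows the "create as needed" half of this behaviour. It assumes the configuration that `Executors.newCachedThreadPool` uses: no core threads, an effectively unbounded maximum, a 60-second keep-alive, and a `SynchronousQueue` that stores no tasks. A `SynchronousQueue` can only hand a task to a thread that is already waiting for one. Five tasks that are all blocked at the same time therefore force five threads into existence. Once idle, those threads would linger for up to 60 seconds to be reused by later tasks.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class CachedPoolGrowth {
    // Returns how many threads the pool created for a burst of blocked tasks.
    public static int threadsForBurst(int burst) throws InterruptedException {
        // Same shape as Executors.newCachedThreadPool().
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                0, Integer.MAX_VALUE, 60L, TimeUnit.SECONDS, new SynchronousQueue<Runnable>());
        CountDownLatch release = new CountDownLatch(1);
        for (int i = 0; i < burst; i++) {
            pool.execute(() -> {
                try {
                    release.await(); // keep every thread busy so none is free for reuse
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        int created = pool.getPoolSize();
        release.countDown();
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);
        return created;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("threads for 5 blocked tasks: " + threadsForBurst(5)); // threads for 5 blocked tasks: 5
    }
}
```

The unbounded maximum is also the main risk of this pool type: a burst of long-running tasks creates one thread per task.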

Scheduled Thread Pool

Executes tasks after a given delay or periodically. Used for recurring background processes such as monitoring or periodic updates.
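A minimal sketch using Java's `ScheduledExecutorService` follows. One task runs once after a delay, and another runs at a fixed rate. The 100 ms delays and period, and the cutoff after three periodic runs, are arbitrary values chosen for the demonstration. A real monitoring job would normally keep running until the application shuts the scheduler down.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class ScheduledPoolExample {
    public static List<String> runDemo() throws InterruptedException {
        List<String> events = Collections.synchronizedList(new ArrayList<>());
        ScheduledExecutorService scheduler = Executors.newScheduledThreadPool(1);
        CountDownLatch done = new CountDownLatch(4); // 1 delayed run + 3 periodic runs
        AtomicInteger ticks = new AtomicInteger();

        // One-shot task: runs once, about 100 ms from now.
        scheduler.schedule(() -> {
            events.add("delayed");
            done.countDown();
        }, 100, TimeUnit.MILLISECONDS);

        // Periodic task: first run after 200 ms, then every 100 ms.
        scheduler.scheduleAtFixedRate(() -> {
            int n = ticks.incrementAndGet();
            if (n <= 3) { // only record the first three runs
                events.add("tick " + n);
                done.countDown();
            }
        }, 200, 100, TimeUnit.MILLISECONDS);

        done.await(5, TimeUnit.SECONDS);
        // By default, shutdown() cancels further runs of periodic tasks.
        scheduler.shutdown();
        scheduler.awaitTermination(5, TimeUnit.SECONDS);
        return events;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(runDemo()); // [delayed, tick 1, tick 2, tick 3]
    }
}
```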

ForkJoin Pool

Designed for recursive divide-and-conquer tasks. Suitable for parallel processing scenarios such as sorting and large-scale computations.
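The sketch below shows divide-and-conquer on Java's `ForkJoinPool`, using an array sum as the workload. The `THRESHOLD` of 10,000 elements is an arbitrary cutoff: below it, a task simply loops instead of splitting further. Each task forks one half and computes the other half itself. Idle workers in the pool can steal forked subtasks from busy workers' queues (work-stealing), which keeps all cores occupied.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

public class ForkJoinSum extends RecursiveTask<Long> {
    private static final int THRESHOLD = 10_000; // arbitrary split cutoff
    private final long[] data;
    private final int from; // inclusive
    private final int to;   // exclusive

    ForkJoinSum(long[] data, int from, int to) {
        this.data = data;
        this.from = from;
        this.to = to;
    }

    @Override
    protected Long compute() {
        if (to - from <= THRESHOLD) {
            // Small enough: sum sequentially.
            long sum = 0;
            for (int i = from; i < to; i++) {
                sum += data[i];
            }
            return sum;
        }
        int mid = (from + to) >>> 1;
        ForkJoinSum left = new ForkJoinSum(data, from, mid);
        ForkJoinSum right = new ForkJoinSum(data, mid, to);
        left.fork();                     // make the left half available to other workers
        long rightSum = right.compute(); // work on the right half in this thread
        return left.join() + rightSum;   // wait for the left half, or run it here if nobody stole it
    }

    public static long parallelSum(long[] data) {
        return ForkJoinPool.commonPool().invoke(new ForkJoinSum(data, 0, data.length));
    }

    public static void main(String[] args) {
        long[] data = new long[1_000_000];
        for (int i = 0; i < data.length; i++) {
            data[i] = i + 1;
        }
        System.out.println(parallelSum(data)); // 500000500000
    }
}
```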

Thread Pool in Practice

Below is a simple example in Java showcasing a fixed thread pool execution:

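```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

public class ThreadPoolExample {
    public static void main(String[] args) throws InterruptedException {
        // A pool with exactly three reusable worker threads.
        ExecutorService executor = Executors.newFixedThreadPool(3);

        // Six tasks: three start immediately, the other three wait in the queue.
        for (int i = 1; i <= 6; i++) {
            int taskNumber = i; // effectively final copy captured by the lambda
            executor.execute(() -> {
                System.out.println("Executing Task " + taskNumber + " by " + Thread.currentThread().getName());
                try {
                    Thread.sleep(1000); // simulate one second of work
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt(); // preserve the interrupt status
                }
            });
        }

        // Stop accepting new tasks, then wait for the queued ones to finish.
        executor.shutdown();
        executor.awaitTermination(1, TimeUnit.MINUTES);
    }
}
```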

Example output (one possible run):

```
Executing Task 1 by pool-1-thread-1
Executing Task 2 by pool-1-thread-2
Executing Task 3 by pool-1-thread-3
Executing Task 4 by pool-1-thread-1
Executing Task 5 by pool-1-thread-2
Executing Task 6 by pool-1-thread-3
```

This shows six tasks being distributed across only three reusable threads. The exact interleaving varies from run to run: the print order can differ, and tasks 4 to 6 go to whichever thread finishes first.

Advantages of Thread Pool Algorithms

- Reduces response time under high load, because tasks run on threads that already exist.

- Improves CPU utilization by keeping threads busy.

- Offers controlled concurrency instead of unbounded thread creation.

- Supports scheduling and recurring background jobs.

Challenges in Thread Pools

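- Deadlocks: Poor task design can block threads indefinitely, for example when a task waits for the result of another task queued in the same pool while every worker is busy (thread-starvation deadlock).

- Queue Saturation: If tasks arrive faster than they are processed, an unbounded queue grows until memory runs out, while a bounded queue fills up and new tasks must be rejected or throttled.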

- Fairness: Ensuring fair scheduling between long-running and short-running tasks can be tricky.
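Thread-starvation deadlock is easy to reproduce. The sketch below uses a single-thread pool in Java. The outer task occupies the only worker and then waits for an inner task that is stuck in the queue behind it. The one-second timeout exists only so the demonstration terminates: without it, the outer task would wait forever.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

public class StarvationDeadlockDemo {
    public static String run() throws InterruptedException, ExecutionException {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        Future<String> outer = pool.submit(() -> {
            // The inner task is queued behind the outer task, which holds the only worker.
            Future<String> inner = pool.submit(() -> "inner result");
            try {
                return inner.get(1, TimeUnit.SECONDS);
            } catch (TimeoutException e) {
                return "starved: inner task could not start while the outer task held the only thread";
            }
        });
        String result = outer.get();
        pool.shutdown(); // the inner task finally runs once the outer task is done
        return result;
    }

    public static void main(String[] args) throws InterruptedException, ExecutionException {
        System.out.println(run()); // starved: inner task could not start while the outer task held the only thread
    }
}
```

Typical remedies are to avoid blocking on results of tasks submitted to the same pool, or to run dependent stages in a separate pool.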

Best Practices for Using Thread Pool Algorithms

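- Choose the pool size based on CPU cores and workload: roughly one thread per core for CPU-bound tasks, and more for tasks that spend much of their time waiting on I/O.

- Use bounded queues, together with a rejection policy, to prevent unbounded memory growth.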

- Design task granularity properly to balance load.

- Monitor metrics such as queue length, thread utilization, and task completion time.
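The practices above can be combined in a single configuration. The sketch below assumes Java's `ThreadPoolExecutor` and has three parts:

- Pool size is derived from `Runtime.availableProcessors()` with the common rule of thumb cores × (1 + wait time / compute time). This is only a starting point to tune by measurement.
- The queue is a bounded `ArrayBlockingQueue`, and `AbortPolicy` rejects work when it is full.
- The pool's built-in counters are read for monitoring.

The class name and the deliberately tiny pool (one thread, queue capacity 2) are illustrative choices, picked so that saturation happens immediately.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class BoundedPoolExample {

    // Rule of thumb: cores * (1 + wait/compute). A ratio of 0 (pure CPU work) gives one thread per core.
    public static int suggestedPoolSize(double waitToComputeRatio) {
        int cores = Runtime.getRuntime().availableProcessors();
        return Math.max(1, (int) Math.round(cores * (1 + waitToComputeRatio)));
    }

    // Fixed-size pool with a bounded queue; AbortPolicy throws when workers and queue are full.
    public static ThreadPoolExecutor boundedPool(int threads, int queueCapacity) {
        return new ThreadPoolExecutor(
                threads, threads, 0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<Runnable>(queueCapacity),
                new ThreadPoolExecutor.AbortPolicy());
    }

    // Floods a tiny pool and returns {queued, rejected}.
    public static int[] floodDemo() throws InterruptedException {
        ThreadPoolExecutor pool = boundedPool(1, 2);
        CountDownLatch release = new CountDownLatch(1);
        Runnable blocked = () -> {
            try {
                release.await(); // keep the worker busy so the queue fills up
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        int rejected = 0;
        for (int i = 0; i < 5; i++) {
            try {
                pool.execute(blocked);
            } catch (RejectedExecutionException e) {
                rejected++; // saturation is reported to the caller instead of consuming memory
            }
        }
        // Metrics worth exporting: queue length, active threads (approximate), completed tasks.
        int queued = pool.getQueue().size();
        System.out.println("queued=" + queued + ", rejected=" + rejected
                + ", active (approx.)=" + pool.getActiveCount());
        release.countDown();
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);
        System.out.println("completed=" + pool.getCompletedTaskCount());
        return new int[] {queued, rejected};
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("suggested size for CPU-bound work: " + suggestedPoolSize(0.0));
        floodDemo();
    }
}
```

With one running task and two queued, the fourth and fifth submissions are rejected. `CallerRunsPolicy` is a common alternative to `AbortPolicy`: it slows producers down instead of throwing.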

Conclusion

Thread Pool Algorithms provide a scalable and efficient approach to concurrent task execution. By managing thread reuse, task scheduling, and resource constraints effectively, they enable high-performance computing systems, web servers, and parallel applications to operate smoothly. Whether implementing fixed, cached, or scheduled pools, understanding these algorithms is essential for building modern, efficient software systems.