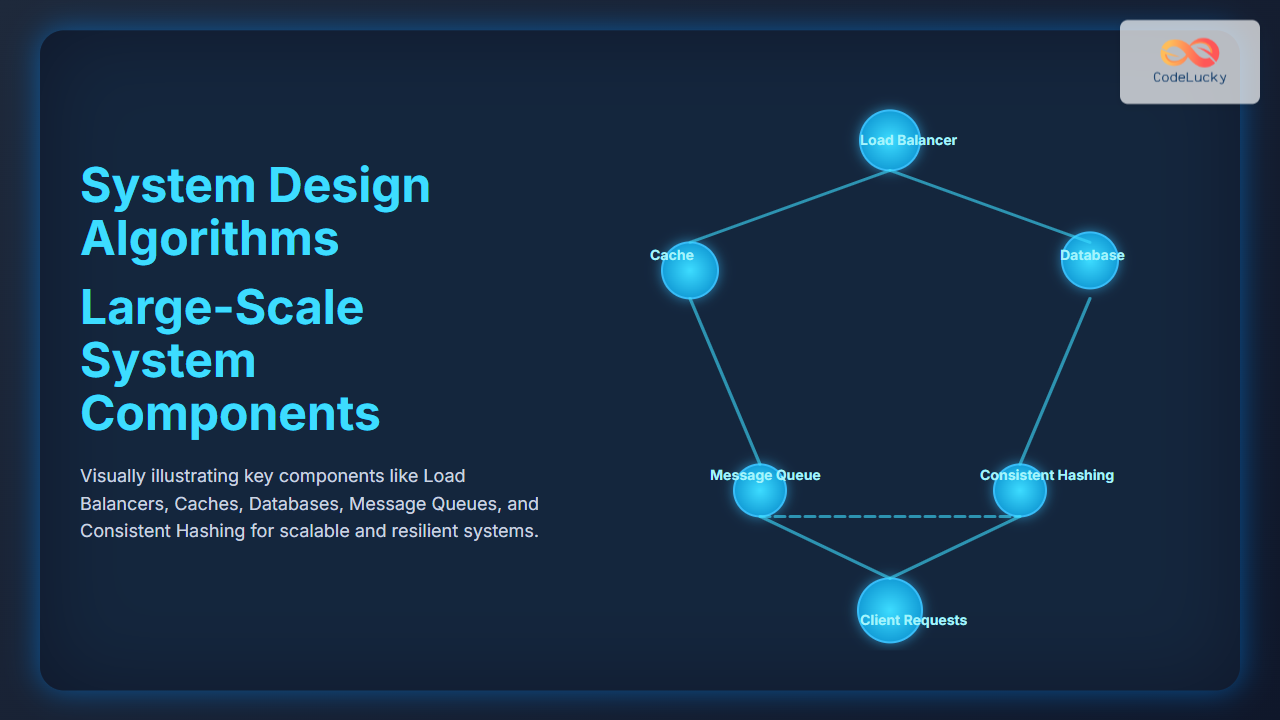

The architecture of large-scale systems involves multiple components working together to achieve high availability, scalability, and fault tolerance. This article explores the system design algorithms behind robust, performant distributed systems: load balancing, caching, database replication and partitioning, message queuing, and consistent hashing. Each topic is illustrated with a short example workflow.

Introduction to Large-Scale System Components

Large-scale systems (e.g., social networks, e-commerce platforms, search engines) must efficiently handle massive volumes of data and concurrent users. This demand necessitates careful design of components and algorithms that distribute load, minimize latency, and ensure data consistency. Key components include:

- Load Balancers – Distribute incoming requests evenly.

- Caching Systems – Reduce database load by storing frequent data.

- Databases – Store persistent, structured data with options for replication and partitioning.

- Message Queues – Facilitate asynchronous communication between services.

- Consistent Hashing – Efficiently distribute keys across multiple nodes.

Load Balancing Algorithms

Load balancing is critical for high availability and fault tolerance. It ensures client requests are efficiently routed to healthy servers.

Common load balancing algorithms:

- Round Robin: Requests are distributed sequentially across servers in a circular order.

- Least Connections: Directs traffic to the server with the fewest active connections.

- IP Hash: Client IP is hashed to consistently route requests to the same server, improving cache hit rates.
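The Least Connections and IP Hash rules from the list above can be sketched in a few lines. This is a minimal illustration, not a production balancer: the function names `pick_least_connections` and `pick_by_ip_hash`, the server labels, and the choice of SHA-256 as the hash are all assumptions made for the example.

```python
import hashlib


def pick_least_connections(active):
    """Return the server with the fewest active connections.

    `active` maps a server name to its current connection count
    (a hypothetical structure for this sketch). Ties go to the
    alphabetically first server so the result is deterministic.
    """
    return min(sorted(active), key=lambda server: active[server])


def pick_by_ip_hash(client_ip, servers):
    """Map a client IP to one server; the same IP always maps to the same server."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an integer, then reduce modulo
    # the server count.
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


connections = {"S1": 4, "S2": 1, "S3": 1}
least_loaded = pick_least_connections(connections)

servers = ["S1", "S2", "S3"]
first = pick_by_ip_hash("203.0.113.7", servers)
second = pick_by_ip_hash("203.0.113.7", servers)
```

Note that the IP Hash mapping here depends on the length of `servers`, so adding or removing a server reassigns many clients.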

Example: Round Robin Load Balancer

Assume three application servers (S1, S2, S3). Incoming client requests are assigned in order:

Request 1 → S1 | Request 2 → S2 | Request 3 → S3 | Request 4 → S1 ...
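The assignment sequence above can be reproduced with a small sketch. The class name `RoundRobinBalancer` and its `next_server` method are hypothetical names chosen for illustration; a real balancer would also track server health, which is omitted here.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed circular order."""

    def __init__(self, servers):
        servers = list(servers)
        if not servers:
            raise ValueError("at least one server is required")
        # cycle() repeats the sequence indefinitely: S1, S2, S3, S1, ...
        self._rotation = cycle(servers)

    def next_server(self):
        return next(self._rotation)


balancer = RoundRobinBalancer(["S1", "S2", "S3"])
assignments = [balancer.next_server() for _ in range(4)]
print(assignments)  # ['S1', 'S2', 'S3', 'S1']
```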

Caching Strategies and Algorithms

Cache layers improve system performance by storing frequently accessed data in fast storage.

Key cache eviction and write policies include:

- LRU (Least Recently Used): Evicts the least recently accessed item when the cache is full.

- LFU (Least Frequently Used): Removes items used least often.

- Write-Through Cache: Updates cache and database synchronously to ensure consistency.

- Write-Back Cache: Updates only cache initially, deferring database writes to reduce latency.
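The difference between the two write policies in the list above is easiest to see side by side. In this sketch a plain dictionary stands in for the database, and the class names `WriteThroughCache` and `WriteBackCache`, along with the explicit `flush` method, are assumptions for illustration only.

```python
class WriteThroughCache:
    """Every write updates the cache and the backing store together."""

    def __init__(self, db):
        self.db = db          # dict standing in for a database
        self.cache = {}

    def put(self, key, value):
        self.cache[key] = value
        self.db[key] = value  # synchronous write keeps both in step


class WriteBackCache:
    """Writes land in the cache first; the backing store is updated on flush."""

    def __init__(self, db):
        self.db = db
        self.cache = {}
        self._dirty = set()   # keys changed since the last flush

    def put(self, key, value):
        self.cache[key] = value
        self._dirty.add(key)  # defer the database write

    def flush(self):
        for key in self._dirty:
            self.db[key] = self.cache[key]
        self._dirty.clear()


through_db, back_db = {}, {}
WriteThroughCache(through_db).put("user:1", "Ada")

back = WriteBackCache(back_db)
back.put("user:1", "Ada")
db_before_flush = dict(back_db)   # still empty at this point
back.flush()
```

The trade-off shown here is the one named in the list: write-back answers faster, but anything still marked dirty is lost if the cache fails before `flush` runs.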

Example: LRU Cache Mechanism

Imagine a cache that holds 3 items. Whenever an item is accessed, it moves to the front. When a new item arrives and the cache is full, the item at the back (the least recently accessed one) is evicted to make room.
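A minimal sketch of this mechanism, using Python's `collections.OrderedDict` to keep items ordered from most to least recently used. The class name `LRUCache` and its `get`, `put`, and `keys` methods are illustrative choices, not a reference implementation.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache; the front holds the most recently used item."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items = OrderedDict()

    def get(self, key):
        if key not in self._items:
            return None
        self._items.move_to_end(key, last=False)  # accessed: move to front
        return self._items[key]

    def put(self, key, value):
        self._items[key] = value
        self._items.move_to_end(key, last=False)  # new or updated: move to front
        if len(self._items) > self.capacity:
            self._items.popitem(last=True)        # evict from the back

    def keys(self):
        """Keys ordered from most to least recently used."""
        return list(self._items)


cache = LRUCache(3)
for name in ("A", "B", "C"):
    cache.put(name, name.lower())
cache.get("A")       # A becomes the most recently used item
cache.put("D", "d")  # cache is full, so B (least recently used) is evicted
print(cache.keys())  # ['D', 'A', 'C']
```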

Databases: Partitioning & Replication

Databases in large-scale systems use algorithms to manage data distribution and ensure durability.

- Replication: Copies data to multiple servers for fault tolerance. Methods include master-slave (one node accepts writes and the others follow it) and master-master (several nodes accept writes).

- Sharding/Partitioning: Splits data horizontally across servers to scale storage.

Example: Hash-Based Sharding

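A hash function applied to the user ID determines which shard holds that user's data, so each request is routed to the shard responsible for that user. With a reasonably uniform hash function, data and load spread roughly evenly across shards.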
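As a rough sketch of this routing step, the function below hashes a user ID and reduces it modulo the shard count. The name `shard_for`, the use of SHA-256, the `user-<n>` ID format, and the shard count of 4 are assumptions for the example.

```python
import hashlib
from collections import Counter


def shard_for(user_id, num_shards):
    """Return the index of the shard responsible for this user ID."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # A stable hash is used instead of Python's built-in hash(), which is
    # randomized per process for strings.
    return int.from_bytes(digest[:8], "big") % num_shards


NUM_SHARDS = 4  # hypothetical cluster size
user_ids = [f"user-{n}" for n in range(1000)]
per_shard = Counter(shard_for(uid, NUM_SHARDS) for uid in user_ids)
print(sorted(per_shard.items()))  # counts per shard, roughly equal
```

One limitation of this modulo scheme is that changing `NUM_SHARDS` remaps most keys, which is the problem consistent hashing addresses.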

Message Queues and Event-Driven Systems

Message queues enable asynchronous processing and decouple services in a distributed architecture. Delivery is typically FIFO (First In, First Out), which preserves the order in which messages were sent. Priority queues instead deliver urgent messages first.

This setup allows producers to send messages quickly without waiting for consumers to process them, improving system throughput.
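The producer/consumer decoupling described above can be sketched in-process with Python's standard `queue` and `threading` modules. This only illustrates the queuing behaviour: a real deployment would use a separate message broker, and the function names `run_fifo_demo` and `drain_by_priority` are hypothetical.

```python
import queue
import threading


def run_fifo_demo(messages):
    """Producer enqueues without waiting; a consumer thread processes in FIFO order."""
    q = queue.Queue()
    processed = []

    def consumer():
        while True:
            message = q.get()
            if message is None:  # sentinel: no more messages
                break
            processed.append(message.upper())

    worker = threading.Thread(target=consumer)
    worker.start()
    for message in messages:
        q.put(message)  # does not wait for the consumer to finish processing
    q.put(None)
    worker.join()
    return processed


def drain_by_priority(items):
    """Deliver (priority, message) pairs lowest number first; ties keep arrival order."""
    pq = queue.PriorityQueue()
    for seq, (priority, message) in enumerate(items):
        pq.put((priority, seq, message))
    ordered = []
    while not pq.empty():
        ordered.append(pq.get()[2])
    return ordered


fifo_result = run_fifo_demo(["signup", "order", "refund"])
priority_result = drain_by_priority([(2, "report"), (0, "alert"), (1, "email")])
```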

Consistent Hashing for Distributed Caches and Databases

Consistent hashing minimizes data movement when nodes are added or removed, critical in scalable systems.

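Both keys and nodes are hashed onto points on a ring. Each key is assigned to the first node found moving clockwise from the key's position, so each node owns the arc between its predecessor and itself. When a node is added or removed, only the keys in the affected arc move, which keeps cache hit rates stable.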
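The ring can be sketched with a sorted list of positions and a binary search. This is an illustrative sketch under a few assumptions: the class name `HashRing`, SHA-256 as the hash, and the use of virtual nodes (several ring positions per physical node, a common refinement not described above) are choices made for the example. Each key goes to the first node position at or clockwise after the key's own position.

```python
import bisect
import hashlib


def ring_position(value):
    """Hash a string to a 64-bit position on the ring."""
    return int.from_bytes(hashlib.sha256(value.encode("utf-8")).digest()[:8], "big")


class HashRing:
    def __init__(self, nodes=(), vnodes=100):
        self.vnodes = vnodes  # ring positions per node (an assumption of this sketch)
        self._points = []     # sorted ring positions
        self._owner = {}      # ring position -> node name
        for node in nodes:
            self.add_node(node)

    def add_node(self, node):
        for i in range(self.vnodes):
            point = ring_position(f"{node}#{i}")
            if point in self._owner:
                continue      # skip the (very unlikely) position collision
            self._owner[point] = node
            bisect.insort(self._points, point)

    def remove_node(self, node):
        self._points = [p for p in self._points if self._owner[p] != node]
        self._owner = {p: n for p, n in self._owner.items() if n != node}

    def node_for(self, key):
        if not self._points:
            raise LookupError("ring has no nodes")
        # First position at or after the key, wrapping past the end to index 0.
        index = bisect.bisect_left(self._points, ring_position(key)) % len(self._points)
        return self._owner[self._points[index]]


ring = HashRing(["A", "B", "C"])
keys = [f"key-{i}" for i in range(1000)]
before = {k: ring.node_for(k) for k in keys}
ring.add_node("D")
after = {k: ring.node_for(k) for k in keys}
moved = [k for k in keys if before[k] != after[k]]
print(f"{len(moved)} of {len(keys)} keys moved after adding node D")
```

Every key that changes owner moves to the new node; keys owned by the other nodes stay where they were.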

Summary

Load balancing, caching strategies, database partitioning and replication, message queuing, and consistent hashing form the backbone of scalable, reliable large-scale systems. Combined thoughtfully, these components and algorithms let a system handle high traffic volumes efficiently while providing fault tolerance, low latency, and data integrity.