String algorithms are fundamental to computer science because most real-world data is text. From searching keywords in documents to DNA sequence alignment in bioinformatics, efficient string processing determines performance and scalability. In this article, we dive deep into string algorithms, specifically focusing on text processing and pattern matching, and illustrate them with Python examples and visual diagrams.

Importance of String Algorithms

- Search Engines: String matching helps locate user queries in billions of pages.

- Compilers: Lexical analysis relies on efficient text scanning.

- Bioinformatics: DNA sequence alignment uses string matching.

- Cybersecurity: Malware detection looks for known patterns in code/text.

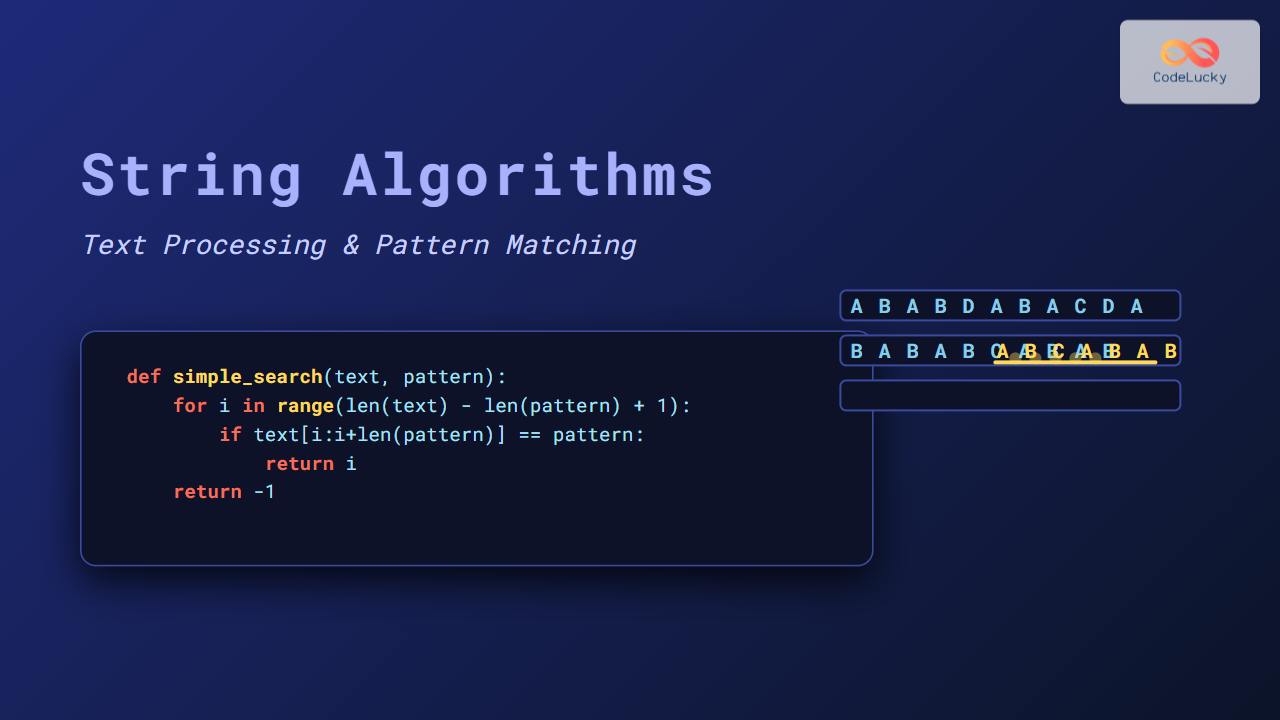

Naive String Matching Algorithm

The simplest approach is the naive method, which checks for the pattern starting at every position of the text. Though inefficient for long texts, it’s useful to understand first.

def naive_search(text, pattern):

positions = []

n, m = len(text), len(pattern)

for i in range(n - m + 1):

if text[i:i+m] == pattern:

positions.append(i)

return positions

# Example

text = "ABABDABACDABABCABAB"

pattern = "ABABCABAB"

print(naive_search(text, pattern)) # Output: [10]

Time Complexity: O(n * m), where n is text length and m is pattern length.

Knuth-Morris-Pratt (KMP) Algorithm

The KMP algorithm improves efficiency by avoiding re-checking of characters after mismatch, using a preprocessing step called LPS (Longest Prefix Suffix) array.

def compute_lps(pattern):

lps = [0] * len(pattern)

length = 0

i = 1

while i < len(pattern):

if pattern[i] == pattern[length]:

length += 1

lps[i] = length

i += 1

else:

if length != 0:

length = lps[length - 1]

else:

lps[i] = 0

i += 1

return lps

def kmp_search(text, pattern):

lps = compute_lps(pattern)

i = j = 0

positions = []

while i < len(text):

if text[i] == pattern[j]:

i += 1

j += 1

if j == len(pattern):

positions.append(i - j)

j = lps[j - 1]

elif i < len(text) and text[i] != pattern[j]:

if j != 0:

j = lps[j - 1]

else:

i += 1

return positions

# Example

print(kmp_search("ABABDABACDABABCABAB", "ABABCABAB")) # Output: [10]

Time Complexity: O(n + m), making it far superior to the naive approach for large text.

Rabin-Karp Algorithm (Rolling Hash)

The Rabin-Karp algorithm uses hashing to match the pattern quickly. It computes hash values for substrings of text and compares them with the hash of the pattern. A rolling hash makes this efficient.

def rabin_karp(text, pattern, prime=101):

n, m = len(text), len(pattern)

hpattern = 0

htext = 0

h = 1

d = 256

positions = []

for i in range(m - 1):

h = (h * d) % prime

for i in range(m):

hpattern = (d * hpattern + ord(pattern[i])) % prime

htext = (d * htext + ord(text[i])) % prime

for i in range(n - m + 1):

if hpattern == htext:

if text[i:i+m] == pattern:

positions.append(i)

if i < n - m:

htext = (d * (htext - ord(text[i]) * h) + ord(text[i+m])) % prime

if htext < 0:

htext += prime

return positions

# Example

print(rabin_karp("ABABDABACDABABCABAB", "ABABCABAB")) # Output: [10]

Time Complexity: Average O(n + m), worst-case O(nm) if hash collisions occur.

Boyer-Moore Algorithm

The Boyer-Moore algorithm is considered one of the fastest for practical use. It preprocesses the pattern to build two heuristic tables: the bad character rule and good suffix rule. It skips large sections of text, making it efficient in real-world usage.

def boyer_moore_search(text, pattern):

m, n = len(pattern), len(text)

skip = {c: m - i - 1 for i, c in enumerate(pattern[:-1])}

positions = []

i = 0

while i <= n - m:

j = m - 1

while j >= 0 and text[i + j] == pattern[j]:

j -= 1

if j < 0:

positions.append(i)

i += m

else:

i += skip.get(text[i + m - 1], m)

return positions

# Example

print(boyer_moore_search("HERE IS A SIMPLE EXAMPLE", "EXAMPLE")) # Output: [17]

Time Complexity: Best case O(n/m), average O(n), worst case O(nm).

Applications of String Pattern Matching

- Search Engines: Keyword search optimization.

- Spell Checkers: Detecting misspelled words.

- Fraud Detection: Matching transaction patterns.

- Bioinformatics: DNA and protein sequence alignment.

Conclusion

String algorithms are at the heart of modern computing applications. From the naive method to KMP, Rabin-Karp, and Boyer-Moore, each has unique strengths and trade-offs. Mastering these algorithms allows developers to process text at scale efficiently.

At CodeLucky.com, we recommend starting from naive matching, then experimenting with efficient algorithms like KMP and Rabin-Karp, and finally diving into Boyer-Moore for performance-critical applications.