Streaming algorithms are specialized algorithms that process and analyze massive data streams in real time or near real time while using minimal memory and computation. They are critical in big data scenarios where the data is too large to store or to process with traditional methods. Streaming algorithms enable applications such as network traffic analysis, financial transaction monitoring, sensor data processing, and clickstream analysis by providing approximate but timely insights without random access or multiple passes over the data.

This article explores the core concepts behind streaming algorithms, their challenges, common algorithmic patterns, and practical examples with clear visualizations for easy understanding.

Why Streaming Algorithms?

Traditional algorithms often assume that the entire dataset fits into memory and can be accessed multiple times. Modern data sources, however, generate continuous, high-volume streams, and these assumptions no longer hold.

- Scalability: Data streams can be unbounded in length, making it impossible to store all of the data in memory.

- Time Sensitivity: Decisions often need to happen instantly or with minimal latency.

- Memory Constraints: Only limited memory is available for storage and processing.

Streaming algorithms operate under these constraints: they process each data element as it arrives, update concise summaries or statistics, and then discard the raw data.

Key Challenges in Streaming Algorithms

- One-Pass Processing: The algorithm typically makes only one pass over the data stream.

- Limited Memory: Memory use must be sublinear in the input size, and ideally polylogarithmic.

- Approximate Results: Exact answers are often impossible, so probabilistic approximations with error guarantees are used.

- Concept Drift: Data streams may evolve over time, necessitating adaptive algorithms.

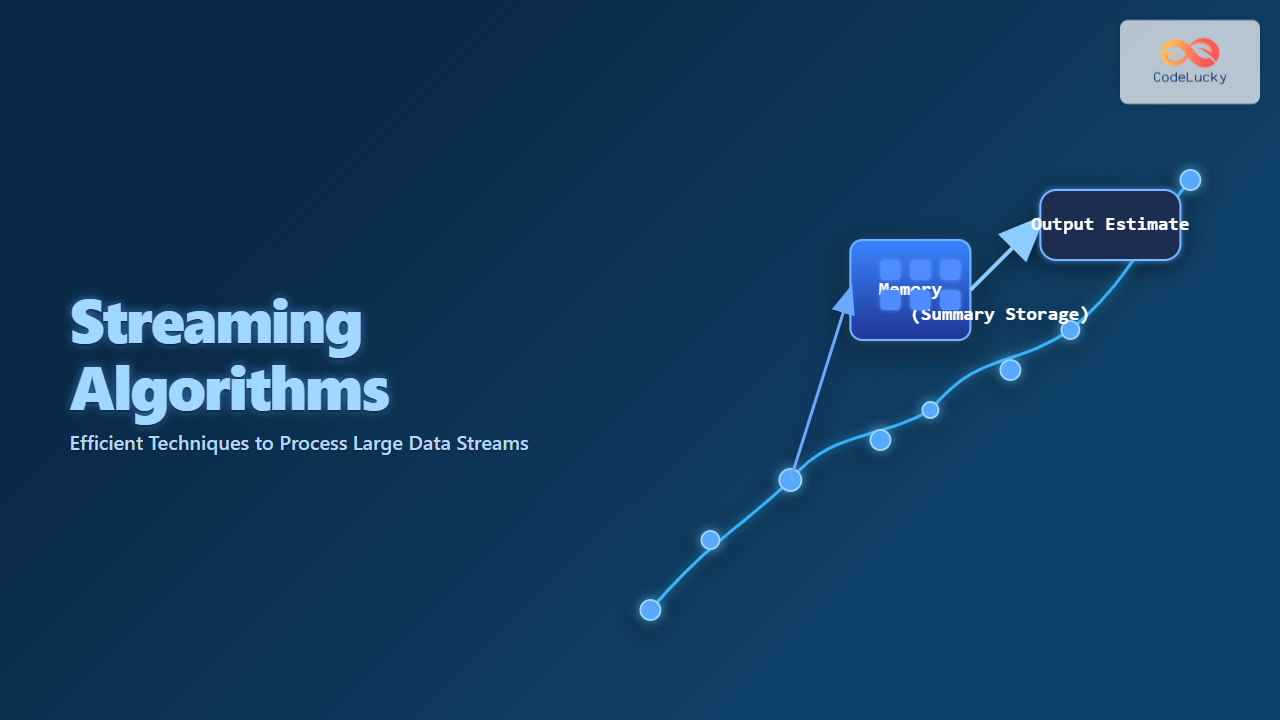

Basic Model of Streaming Algorithms

A typical streaming algorithm processes a stream of data items \(x_1, x_2, \ldots, x_n\) that arrive sequentially. It maintains a summary state \(S\), updated once for each new item, such that \(S\) is much smaller than the entire input yet still answers the queries of interest.
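As a minimal sketch of this model, the Python class below keeps a summary state \(S\) of four numbers (count, sum, minimum, maximum) and updates it once per item, so memory stays constant no matter how long the stream grows. The class and function names are illustrative, not from any library. These particular statistics happen to be exactly computable in one pass; queries such as distinct counts or per-item frequencies need the approximate techniques described next.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class RunningSummary:
    """Summary state S: O(1) memory regardless of stream length (illustrative)."""
    count: int = 0
    total: float = 0.0
    minimum: Optional[float] = None
    maximum: Optional[float] = None

    def update(self, x: float) -> None:
        # Fold one item into the state; the item itself is not kept.
        self.count += 1
        self.total += x
        self.minimum = x if self.minimum is None else min(self.minimum, x)
        self.maximum = x if self.maximum is None else max(self.maximum, x)

    def mean(self) -> float:
        return self.total / self.count if self.count else float("nan")


def summarize(stream: Iterable[float]) -> RunningSummary:
    s = RunningSummary()
    for x in stream:  # a single pass, no random access
        s.update(x)
    return s


s = summarize(iter([4.0, 8.0, 15.0, 16.0, 23.0, 42.0]))
print(s.count, s.mean(), s.minimum, s.maximum)  # → 6 18.0 4.0 42.0
```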

Fundamental Techniques in Streaming Algorithms

1. Sampling

Sampling selects a representative subset of the stream to approximate statistics. A common example is reservoir sampling, which maintains \(k\) items from a stream of unknown length such that every item seen so far is equally likely to be in the sample.

2. Hashing & Sketches

Sketches are compact, hash-based summaries that answer specific queries approximately. Examples include Count-Min Sketch, used for frequency estimation, and HyperLogLog, used for counting distinct elements.

3. Sliding Window Models

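These restrict attention to the most recent portion of the stream, defined either by time (for example, the last five minutes) or by count (for example, the last 1,000 items), and update their summaries as old items expire.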
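A count-based window can be sketched in Python as follows, assuming the query of interest is the mean of the last `size` items; `SlidingWindowMean` is a hypothetical name chosen for illustration. Each update adds the new item and, once the window is full, expires the oldest one, so the running total always covers exactly the current window.

```python
from collections import deque


class SlidingWindowMean:
    """Mean of the most recent `size` items (count-based window, illustrative)."""

    def __init__(self, size: int) -> None:
        if size <= 0:
            raise ValueError("size must be positive")
        self.size = size
        self.window = deque()  # items currently inside the window
        self.total = 0.0       # sum of the items in the window

    def update(self, x: float) -> None:
        self.window.append(x)
        self.total += x
        if len(self.window) > self.size:
            # Expire the oldest item and remove its contribution.
            self.total -= self.window.popleft()

    def mean(self) -> float:
        return self.total / len(self.window) if self.window else float("nan")


w = SlidingWindowMean(size=3)
for x in [1, 2, 3, 4, 5]:
    w.update(x)
print(w.mean())  # → 4.0 (mean of 3, 4, 5)
```

This version stores the whole window, so its memory grows with the window size; for very large windows, approximate window summaries avoid keeping every item.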

Example: Counting Distinct Elements with HyperLogLog

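One classical problem is estimating the number of distinct items in a stream. HyperLogLog hashes each element and uses the first \(p\) bits of the hash to select one of \(m = 2^p\) registers. In the remaining bits it finds the position of the leftmost 1-bit (the number of leading zeros plus one), and each register keeps the largest such position it has seen. Long runs of leading zeros are rare, so large register values indicate many distinct elements; the registers are combined with a bias-corrected harmonic mean to produce the estimate.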

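Memory is fixed by the number of registers rather than by the stream length, and the relative standard error is about \(1.04/\sqrt{m}\); for example, \(m = 2^{14} = 16384\) registers give an error of roughly 0.8%.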
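The following Python sketch illustrates the HyperLogLog idea under a few stated assumptions: items are strings; each is hashed to 64 bits by taking the first 8 bytes of a SHA-1 digest (chosen for portability, not speed); the first \(p\) bits select one of \(m = 2^p\) registers; and each register keeps the largest leftmost-1-bit position seen in the remaining bits. The estimate uses the bias constant \(\alpha_m = 0.7213/(1 + 1.079/m)\) from the original HyperLogLog paper (valid for \(m \ge 128\)) together with that paper's linear-counting correction for small cardinalities. The class name is hypothetical, and this is a teaching sketch rather than a production implementation.

```python
import hashlib
import math


class HyperLogLog:
    """Illustrative HyperLogLog with m = 2**p registers and a 64-bit hash."""

    def __init__(self, p: int = 12) -> None:
        if not 7 <= p <= 16:
            raise ValueError("this sketch supports 7 <= p <= 16")
        self.p = p
        self.m = 1 << p             # number of registers
        self.registers = [0] * self.m

    @staticmethod
    def _hash64(item: str) -> int:
        # Assumption: first 8 bytes of SHA-1 as a 64-bit hash.
        digest = hashlib.sha1(item.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big")

    def add(self, item: str) -> None:
        h = self._hash64(item)
        bits = 64 - self.p
        idx = h >> bits               # first p bits select a register
        rest = h & ((1 << bits) - 1)  # remaining 64 - p bits
        # Position of the leftmost 1-bit in `rest` (leading zeros + 1).
        rho = bits - rest.bit_length() + 1
        self.registers[idx] = max(self.registers[idx], rho)

    def estimate(self) -> float:
        m = self.m
        alpha = 0.7213 / (1 + 1.079 / m)  # bias constant for m >= 128
        z = sum(2.0 ** -r for r in self.registers)
        e = alpha * m * m / z             # bias-corrected harmonic mean
        zeros = self.registers.count(0)
        if e <= 2.5 * m and zeros > 0:
            # Small-range correction: linear counting on empty registers.
            e = m * math.log(m / zeros)
        return e


hll = HyperLogLog(p=12)  # 4096 registers, expected error around 1.6%
for i in range(100_000):
    hll.add(f"user-{i % 50_000}")  # 50,000 distinct values, each added twice
print(round(hll.estimate()))  # close to 50,000; the exact value depends on the hash
```

Adding an item that has already been seen leaves every register unchanged, which is why duplicates do not inflate the count. With a 64-bit hash, the large-range correction that the original paper applies to 32-bit hashes is usually omitted.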

Example: Reservoir Sampling (Sample 3 Items)

Suppose a stream of unknown length arrives. Reservoir sampling maintains a uniform random sample of size 3 at every point in the stream.

    Initialize reservoir R[1..3] with the first 3 stream items
    for each later item stream[i], i = 4, 5, 6, ...:
        j = random integer between 1 and i, inclusive
        if j ≤ 3:
            R[j] = stream[i]

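This ensures that, after \(n\) items have been processed, every item is in the reservoir with the same probability \(3/n\). An item at position \(i > 3\) enters with probability \(3/i\); at each later step \(t\) it is evicted only if \(j\) selects its slot, which happens with probability \(1/t\), so it survives to the end with probability \(\prod_{t=i+1}^{n} (t-1)/t = i/n\), giving \((3/i) \cdot (i/n) = 3/n\). The first three items start in the reservoir and survive with probability \(\prod_{t=4}^{n} (t-1)/t = 3/n\).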
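The pseudocode above translates directly into Python. This sketch implements the same procedure (commonly called Algorithm R) generalized to any reservoir size `k`; the function name and the optional `rng` parameter, which allows seeding for reproducible runs, are illustrative choices rather than part of the original.

```python
import random
from typing import Iterable, List, Optional, TypeVar

T = TypeVar("T")


def reservoir_sample(stream: Iterable[T], k: int,
                     rng: Optional[random.Random] = None) -> List[T]:
    """Uniform sample of k items from a stream of unknown length (Algorithm R)."""
    rng = rng or random.Random()
    reservoir: List[T] = []
    for i, item in enumerate(stream, start=1):  # i is the 1-based position
        if i <= k:
            reservoir.append(item)       # fill with the first k items
        else:
            j = rng.randint(1, i)        # uniform integer in 1..i, inclusive
            if j <= k:
                reservoir[j - 1] = item  # replace slot j (0-based index j - 1)
    return reservoir


print(reservoir_sample(range(100_000), 3, random.Random(42)))  # three items from the stream
```

Only `k` items are ever stored and the input is consumed once through iteration, so a generator whose length is unknown in advance works just as well as a `range`.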

Example: Count-Min Sketch for Frequency Estimation

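Count-Min Sketch (CMS) maintains a two-dimensional array of counters with \(d\) rows and \(w\) columns, plus one hash function per row. Each arriving element increments one counter in every row, chosen by that row's hash function, and a frequency query returns the minimum of the element's \(d\) counters. Because hash collisions can only add to a counter, the estimate never undercounts when all updates are non-negative, so it is an upper bound on the true frequency. With \(w = \lceil e/\varepsilon \rceil\) and \(d = \lceil \ln(1/\delta) \rceil\), the estimate exceeds the true count by at most \(\varepsilon N\) with probability at least \(1 - \delta\), where \(N\) is the total count of all items processed.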
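Below is a minimal Python sketch of a Count-Min Sketch, assuming string items and non-negative counts. It sizes the table from an error parameter `epsilon` and a failure probability `delta` using \(w = \lceil e/\varepsilon \rceil\) and \(d = \lceil \ln(1/\delta) \rceil\), and derives one hash function per row by salting BLAKE2b with the row number. The class name, parameter defaults, and hashing scheme are illustrative assumptions; the formal guarantee assumes pairwise-independent hash families, for which salted BLAKE2b is a practical stand-in rather than a proven substitute.

```python
import hashlib
import math


class CountMinSketch:
    """Illustrative Count-Min Sketch for non-negative updates."""

    def __init__(self, epsilon: float = 0.01, delta: float = 0.01) -> None:
        # Overestimate is at most epsilon * N with probability >= 1 - delta.
        self.width = math.ceil(math.e / epsilon)
        self.depth = math.ceil(math.log(1 / delta))
        self.table = [[0] * self.width for _ in range(self.depth)]

    def _index(self, item: str, row: int) -> int:
        # One hash function per row: BLAKE2b salted with the row number.
        digest = hashlib.blake2b(item.encode("utf-8"), digest_size=8,
                                 salt=row.to_bytes(16, "little")).digest()
        return int.from_bytes(digest, "little") % self.width

    def add(self, item: str, count: int = 1) -> None:
        if count < 0:
            raise ValueError("this sketch assumes non-negative updates")
        for row in range(self.depth):
            self.table[row][self._index(item, row)] += count

    def estimate(self, item: str) -> int:
        # Each of the item's counters is >= its true count; take the smallest.
        return min(self.table[row][self._index(item, row)]
                   for row in range(self.depth))


cms = CountMinSketch(epsilon=0.01, delta=0.01)  # 5 rows x 272 columns
for word in ["apple"] * 50 + ["banana"] * 30 + ["cherry"] * 5:
    cms.add(word)
print(cms.estimate("apple"), cms.estimate("cherry"))  # never below 50 and 5
```

Taking the minimum across rows is the key design choice: a collision in any single row can only inflate that row's counter, so the least-inflated row gives the tightest upper bound.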

Applications of Streaming Algorithms

- Network traffic monitoring for detecting heavy hitters or anomalies.

- Real-time analytics on social media feeds or clickstreams.

- Sensor networks with continuous data collection.

- Financial tick data monitoring.

Summary

Streaming algorithms make it possible to process big data streams effectively by approximating answers under strict memory and time limits. Techniques such as sampling and hash-based sketches, together with sliding-window models, provide practical tools for classical problems like distinct counting and frequency estimation in this setting. Working through concrete examples of each method helps clarify its strengths, its error guarantees, and where it applies.