In the world of database management, performance is king. As your database grows and your applications become more complex, the efficiency of your SQL queries becomes increasingly crucial. This article delves deep into the art and science of SQL performance tuning, providing you with practical techniques to optimize your database queries and supercharge your applications.

Understanding SQL Query Performance

Before we dive into optimization techniques, it's essential to understand what affects SQL query performance. Several factors come into play:

🔍 Query complexity: Queries with many joins, subqueries, or sorts generally take longer to execute.

🔢 Data volume: Larger datasets generally require more processing time.

🏗️ Database schema: How your tables are structured and related affects query efficiency.

📊 Indexing: Proper indexing can dramatically speed up data retrieval.

🖥️ Hardware resources: CPU, memory, and disk I/O all impact query performance.

Analyzing Query Performance

The first step in optimization is identifying which queries need improvement. Most database management systems provide tools for query analysis.

Using EXPLAIN

The EXPLAIN command is your best friend when it comes to query analysis. It shows the execution plan the optimizer has chosen: which indexes it intends to use, how it will read each table, and roughly how many rows it expects to examine.

Let's look at an example:

EXPLAIN SELECT * FROM customers WHERE city = 'New York';

In MySQL, this might produce output like:

+----+-------------+-----------+------------+------+---------------+------+---------+------+------+----------+-------------+

| id | select_type | table | partitions | type | possible_keys | key | key_len | ref | rows | filtered | Extra |

+----+-------------+-----------+------------+------+---------------+------+---------+------+------+----------+-------------+

| 1 | SIMPLE | customers | NULL | ALL | NULL | NULL | NULL | NULL | 1000 | 10.00 | Using where |

+----+-------------+-----------+------------+------+---------------+------+---------+------+------+----------+-------------+

This output tells us that the query is doing a full table scan (type: ALL) on the customers table, examining 1000 rows. This isn't efficient, especially if the table is large.

Query Optimization Techniques

Now that we've identified slow queries, let's explore techniques to optimize them.

1. Use Appropriate Indexes

Indexes are one of the most powerful tools for query optimization. They allow the database to find data quickly without scanning the entire table.

For our previous example, an index on the city column lets the database jump straight to the matching rows:

CREATE INDEX idx_city ON customers(city);

After creating this index, let's run EXPLAIN again:

EXPLAIN SELECT * FROM customers WHERE city = 'New York';

Now we might see:

+----+-------------+-----------+------------+------+---------------+----------+---------+-------+------+----------+-------+

| id | select_type | table | partitions | type | possible_keys | key | key_len | ref | rows | filtered | Extra |

+----+-------------+-----------+------------+------+---------------+----------+---------+-------+------+----------+-------+

| 1 | SIMPLE | customers | NULL | ref | idx_city | idx_city | 152 | const | 50 | 100.00 | NULL |

+----+-------------+-----------+------------+------+---------------+----------+---------+-------+------+----------+-------+

The query now uses the index (type: ref), examining only 50 rows instead of 1000. This is a significant improvement!
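To try the before-and-after comparison without a MySQL server, here is a minimal sketch that uses SQLite through Python's built-in sqlite3 module as a stand-in. The table contents and the query_plan helper are made up for illustration, and SQLite's EXPLAIN QUERY PLAN wording differs from MySQL's EXPLAIN, but the switch from a full scan to an index lookup is the same idea.

```python
import sqlite3

def query_plan(conn, sql):
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[3] for row in rows)

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers ("
    "customer_id INTEGER PRIMARY KEY, customer_name TEXT, city TEXT)"
)
# Illustrative data: 1000 customers spread over four cities.
cities = ["New York", "Boston", "Chicago", "Denver"]
conn.executemany(
    "INSERT INTO customers (customer_name, city) VALUES (?, ?)",
    [(f"customer {i}", cities[i % len(cities)]) for i in range(1000)],
)

sql = "SELECT * FROM customers WHERE city = 'New York'"
plan_before = query_plan(conn, sql)   # full table scan
conn.execute("CREATE INDEX idx_city ON customers(city)")
plan_after = query_plan(conn, sql)    # lookup through idx_city

print("before:", plan_before)
print("after: ", plan_after)
```

The exact plan text varies between SQLite versions, so treat the printed strings as something to read rather than something to parse.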

2. Avoid SELECT *

While SELECT * is convenient, it often retrieves more data than necessary. Instead, select only the columns you need:

-- Instead of this

SELECT * FROM orders WHERE order_date > '2023-01-01';

-- Do this

SELECT order_id, customer_id, total_amount

FROM orders

WHERE order_date > '2023-01-01';

This reduces the amount of data transferred and processed, improving query performance.
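One concrete way a narrower column list can pay off is a covering index: if every requested column lives in an index, the database may answer the query from the index alone. The sketch below illustrates this with SQLite via Python's sqlite3 module, not MySQL; the notes column and the idx_orders_report index are hypothetical additions for the demo.

```python
import sqlite3

def query_plan(conn, sql):
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[3] for row in rows)

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE orders (
        order_id     INTEGER PRIMARY KEY,
        customer_id  INTEGER,
        order_date   TEXT,
        total_amount REAL,
        notes        TEXT   -- hypothetical wide column the report never needs
    )
""")
# Hypothetical index holding every column the narrow query asks for.
conn.execute(
    "CREATE INDEX idx_orders_report "
    "ON orders(order_date, customer_id, total_amount)"
)

wide_plan = query_plan(
    conn, "SELECT * FROM orders WHERE order_date > '2023-01-01'"
)
narrow_plan = query_plan(
    conn,
    "SELECT order_id, customer_id, total_amount "
    "FROM orders WHERE order_date > '2023-01-01'",
)

print("SELECT *     :", wide_plan)
print("named columns:", narrow_plan)
```

With SELECT *, the notes column forces a visit to the table for every matching row; the named-column version can be served from the index by itself.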

3. Use JOINs Wisely

JOINs are powerful but can be performance-intensive. Use them judiciously and ensure you're joining on indexed columns.

Consider this query:

SELECT c.customer_name, o.order_date, o.total_amount

FROM customers c

JOIN orders o ON c.customer_id = o.customer_id

WHERE o.order_date > '2023-01-01';

Ensure that customer_id is indexed in both tables for optimal performance. An index on orders.order_date can also help the database apply the WHERE filter before joining.

4. Utilize LIMIT for Large Result Sets

If you're dealing with large result sets but only need a subset, use LIMIT:

SELECT product_name, unit_price

FROM products

ORDER BY unit_price DESC

LIMIT 10;

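This query returns only the 10 most expensive products. Keep in mind that LIMIT mainly cuts down the rows returned: without an index on unit_price, the database still has to read and rank every row before it can pick the top 10.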
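How much work an ORDER BY ... LIMIT query does depends on whether an index can supply the rows already sorted. The following sketch uses SQLite through Python's sqlite3 module as a stand-in for MySQL; the data and the idx_products_price index name are assumptions for the demo.

```python
import sqlite3

def query_plan(conn, sql):
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[3] for row in rows)

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE products ("
    "product_id INTEGER PRIMARY KEY, product_name TEXT, unit_price REAL)"
)
# Illustrative data: 500 products with distinct prices 0..499.
conn.executemany(
    "INSERT INTO products (product_name, unit_price) VALUES (?, ?)",
    [(f"product {i}", i) for i in range(500)],
)

sql = (
    "SELECT product_name, unit_price FROM products "
    "ORDER BY unit_price DESC LIMIT 10"
)
plan_before = query_plan(conn, sql)          # needs a separate sort step
top_before = conn.execute(sql).fetchall()

conn.execute("CREATE INDEX idx_products_price ON products(unit_price)")
plan_after = query_plan(conn, sql)           # walks the index in price order
top_after = conn.execute(sql).fetchall()

print("before:", plan_before)
print("after: ", plan_after)
```

Both runs return the same ten rows; only the amount of sorting work behind them changes.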

5. Avoid Functions in WHERE Clauses

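Wrapping an indexed column in a function inside the WHERE clause usually prevents the database from using the index on that column, because the index stores the raw values rather than the function's results. For example: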

-- Instead of this

SELECT * FROM employees WHERE YEAR(hire_date) = 2023;

-- Do this

SELECT * FROM employees WHERE hire_date >= '2023-01-01' AND hire_date < '2024-01-01';

The second query allows the use of an index on the hire_date column, if one exists.
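The effect is easy to observe in a query plan. This sketch again uses SQLite via Python's sqlite3 module rather than MySQL, so strftime('%Y', ...) stands in for YEAR(); the table contents and index name are made up for the demo.

```python
import sqlite3
from datetime import date, timedelta

def query_plan(conn, sql):
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[3] for row in rows)

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE employees ("
    "employee_id INTEGER PRIMARY KEY, name TEXT, hire_date TEXT)"
)
# Illustrative data: one hire per week starting 2021-01-01.
hire_dates = [date(2021, 1, 1) + timedelta(weeks=i) for i in range(200)]
conn.executemany(
    "INSERT INTO employees (name, hire_date) VALUES (?, ?)",
    [(f"employee {i}", d.isoformat()) for i, d in enumerate(hire_dates)],
)
conn.execute("CREATE INDEX idx_hire_date ON employees(hire_date)")

function_sql = "SELECT * FROM employees WHERE strftime('%Y', hire_date) = '2023'"
range_sql = (
    "SELECT * FROM employees "
    "WHERE hire_date >= '2023-01-01' AND hire_date < '2024-01-01'"
)

plan_function = query_plan(conn, function_sql)   # function hides the column
plan_range = query_plan(conn, range_sql)         # plain range can use the index
rows_function = conn.execute(function_sql).fetchall()
rows_range = conn.execute(range_sql).fetchall()

print("function:", plan_function)
print("range:   ", plan_range)
```

Both queries return the same employees, but only the range form can seek into the index.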

6. Use EXPLAIN ANALYZE for Deeper Insights

While EXPLAIN is useful, EXPLAIN ANALYZE provides even more detailed performance information:

EXPLAIN ANALYZE SELECT * FROM orders WHERE total_amount > 1000;

In MySQL 8.0.18 and later, EXPLAIN ANALYZE actually runs the query and prints the plan in tree format (not the tabular layout of plain EXPLAIN), annotated with measured timings. The output might look like:

-> Filter: (orders.total_amount > 1000.0) (cost=51.25 rows=500) (actual time=0.136..0.897 rows=487 loops=1)

    -> Index range scan on orders using total_amount (cost=51.25 rows=500) (actual time=0.132..0.816 rows=487 loops=1)

Each node shows the optimizer's estimate (cost and rows) next to what actually happened (actual time in milliseconds, rows, and loops). Comparing the two helps you understand query performance in real-world conditions and spot estimates that are far off.

7. Optimize Subqueries

Subqueries can be performance bottlenecks. Often, they can be rewritten as JOINs for better performance:

-- Instead of this

SELECT customer_name

FROM customers

WHERE customer_id IN (SELECT customer_id FROM orders WHERE total_amount > 1000);

-- Do this

SELECT DISTINCT c.customer_name

FROM customers c

JOIN orders o ON c.customer_id = o.customer_id

WHERE o.total_amount > 1000;

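The JOIN version often performs better on older optimizers, especially with proper indexing. Many modern optimizers already rewrite the IN form as a semi-join internally, so compare the two plans with EXPLAIN before committing to a rewrite.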
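One thing to check when making this rewrite is that the two forms really return the same rows. The sketch below, run on SQLite through Python's sqlite3 module with made-up data, shows a case where they differ: two different customers share a name, and DISTINCT on customer_name merges them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, customer_name TEXT);
    CREATE TABLE orders (
        order_id INTEGER PRIMARY KEY, customer_id INTEGER, total_amount REAL
    );
    -- Two distinct customers happen to share a name.
    INSERT INTO customers VALUES (1, 'Alex Kim'), (2, 'Alex Kim'), (3, 'Sam Lee');
    INSERT INTO orders VALUES (1, 1, 1500), (2, 1, 2000), (3, 2, 1200), (4, 3, 300);
""")

in_rows = conn.execute("""
    SELECT customer_name FROM customers
    WHERE customer_id IN (SELECT customer_id FROM orders WHERE total_amount > 1000)
""").fetchall()

join_rows = conn.execute("""
    SELECT DISTINCT c.customer_name
    FROM customers c JOIN orders o ON c.customer_id = o.customer_id
    WHERE o.total_amount > 1000
""").fetchall()

# Including the key in the DISTINCT list keeps same-named customers apart.
join_by_id = conn.execute("""
    SELECT DISTINCT c.customer_id, c.customer_name
    FROM customers c JOIN orders o ON c.customer_id = o.customer_id
    WHERE o.total_amount > 1000
""").fetchall()

print(len(in_rows), len(join_rows), len(join_by_id))
```

If names are not guaranteed unique, selecting the key alongside the name keeps the JOIN version equivalent to the IN version.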

8. Use Appropriate Data Types

Choosing the right data types can significantly impact performance. For example, storing numeric IDs or true/false flags as VARCHAR wastes space and makes comparisons slower than they would be with native numeric or boolean types.

-- Instead of this

CREATE TABLE products (

product_id VARCHAR(20),

price DECIMAL(10,2),

in_stock VARCHAR(5)

);

-- Do this

CREATE TABLE products (

product_id INT,

price DECIMAL(10,2),

in_stock BOOLEAN

);

The second version uses more appropriate data types, improving storage efficiency and query performance.

9. Partition Large Tables

For very large tables, consider partitioning. This divides a table into smaller, more manageable pieces:

CREATE TABLE sales (

id INT,

sale_date DATE,

amount DECIMAL(10,2)

)

PARTITION BY RANGE (YEAR(sale_date)) (

PARTITION p0 VALUES LESS THAN (2020),

PARTITION p1 VALUES LESS THAN (2021),

PARTITION p2 VALUES LESS THAN (2022),

PARTITION p3 VALUES LESS THAN (2023),

PARTITION p4 VALUES LESS THAN MAXVALUE

);

Queries that filter on the partitioning column (sale_date here) can skip partitions that cannot contain matching rows, a behavior known as partition pruning. This can significantly improve performance on large datasets.
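To make the routing concrete, here is a small Python model of the RANGE layout defined above. It is only an illustration of the idea, not how any database implements partitioning; the function names are invented for this sketch.

```python
from bisect import bisect_right
from datetime import date

# Upper bounds from the VALUES LESS THAN clauses; p4 is the MAXVALUE catch-all.
BOUNDS = [2020, 2021, 2022, 2023]
PARTITIONS = ["p0", "p1", "p2", "p3", "p4"]

def partition_for(sale_date):
    """Name of the partition that would store a row with this sale_date."""
    return PARTITIONS[bisect_right(BOUNDS, sale_date.year)]

def partitions_for_range(start, end):
    """Partitions a filter 'sale_date BETWEEN start AND end' would need to read."""
    first = bisect_right(BOUNDS, start.year)
    last = bisect_right(BOUNDS, end.year)
    return PARTITIONS[first:last + 1]

print(partition_for(date(2019, 12, 31)))
print(partition_for(date(2020, 1, 1)))
print(partitions_for_range(date(2021, 3, 1), date(2022, 6, 30)))
```

A query restricted to a date range only has to touch the partitions returned by partitions_for_range; everything else is skipped without being read.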

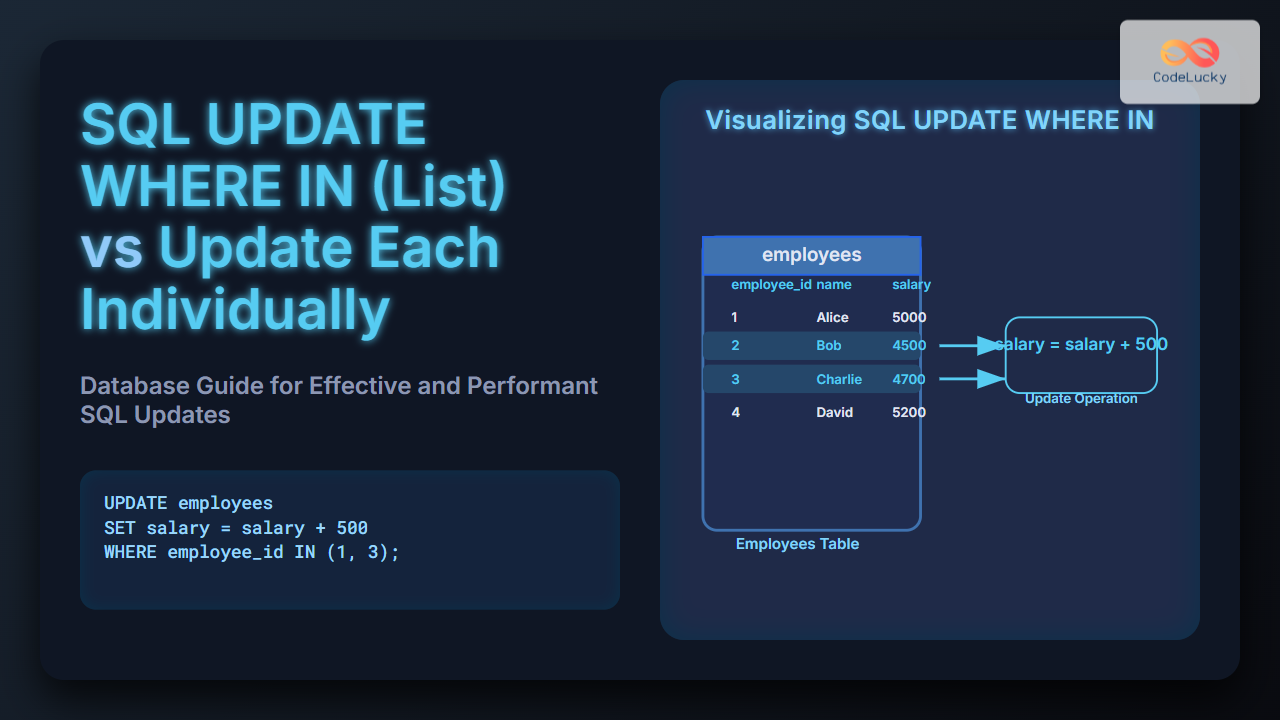

10. Use Query Caching

For queries that are run frequently and don't change often, consider using query caching. While the implementation varies by database system, the concept involves storing the results of expensive queries for quick retrieval.

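In MySQL 5.7 and earlier, for example, you might enable the built-in query cache like this (the feature was deprecated in 5.7.20 and removed in MySQL 8.0, so on current versions caching has to happen in the application or a separate cache layer):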

SET GLOBAL query_cache_type = 1;

SET GLOBAL query_cache_size = 10485760; -- 10MB cache

Then, queries can be marked as cacheable:

SELECT SQL_CACHE c.customer_name, SUM(o.total_amount)

FROM customers c

JOIN orders o ON c.customer_id = o.customer_id

GROUP BY c.customer_id;

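MySQL's built-in query cache drops cached results automatically whenever an underlying table is modified. If you cache results yourself, in the application or an external store, remember to invalidate them when the underlying data changes.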
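When the cache lives in the application, invalidation is your responsibility. The following is a deliberately simple sketch of that idea using Python's sqlite3 module: the CachedQueries class is hypothetical, and it clears the whole cache on every write, which is coarse but easy to reason about.

```python
import sqlite3

class CachedQueries:
    """Tiny read-through cache: results are keyed by SQL text and parameters."""

    def __init__(self, conn):
        self.conn = conn
        self.cache = {}
        self.misses = 0   # how many reads actually hit the database

    def read(self, sql, params=()):
        key = (sql, params)
        if key not in self.cache:
            self.misses += 1
            self.cache[key] = self.conn.execute(sql, params).fetchall()
        return self.cache[key]

    def write(self, sql, params=()):
        self.conn.execute(sql, params)
        self.cache.clear()   # coarse invalidation: any write drops every result

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, customer_name TEXT);
    CREATE TABLE orders (
        order_id INTEGER PRIMARY KEY, customer_id INTEGER, total_amount REAL
    );
    INSERT INTO customers VALUES (1, 'Ada');
    INSERT INTO orders VALUES (1, 1, 100.0), (2, 1, 250.0);
""")

report_sql = """
    SELECT c.customer_name, SUM(o.total_amount)
    FROM customers c JOIN orders o ON c.customer_id = o.customer_id
    GROUP BY c.customer_id
"""
cached = CachedQueries(conn)
first = cached.read(report_sql)
second = cached.read(report_sql)             # served from the cache
misses_after_two_reads = cached.misses

cached.write("INSERT INTO orders VALUES (?, ?, ?)", (3, 1, 50.0))
third = cached.read(report_sql)              # recomputed after the write
```

A production cache would usually invalidate per table or per key and add expiry, but the rule is the same: a write must never leave a stale result behind.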

Advanced Optimization Techniques

As we delve deeper into SQL performance tuning, let's explore some advanced techniques that can take your query optimization skills to the next level.

11. Use Common Table Expressions (CTEs)

CTEs can improve query readability and sometimes performance, especially for complex queries:

WITH high_value_orders AS (

SELECT customer_id, COUNT(*) as order_count

FROM orders

WHERE total_amount > 1000

GROUP BY customer_id

HAVING COUNT(*) > 5

)

SELECT c.customer_name, hvo.order_count

FROM customers c

JOIN high_value_orders hvo ON c.customer_id = hvo.customer_id;

This query efficiently identifies customers with more than 5 high-value orders.

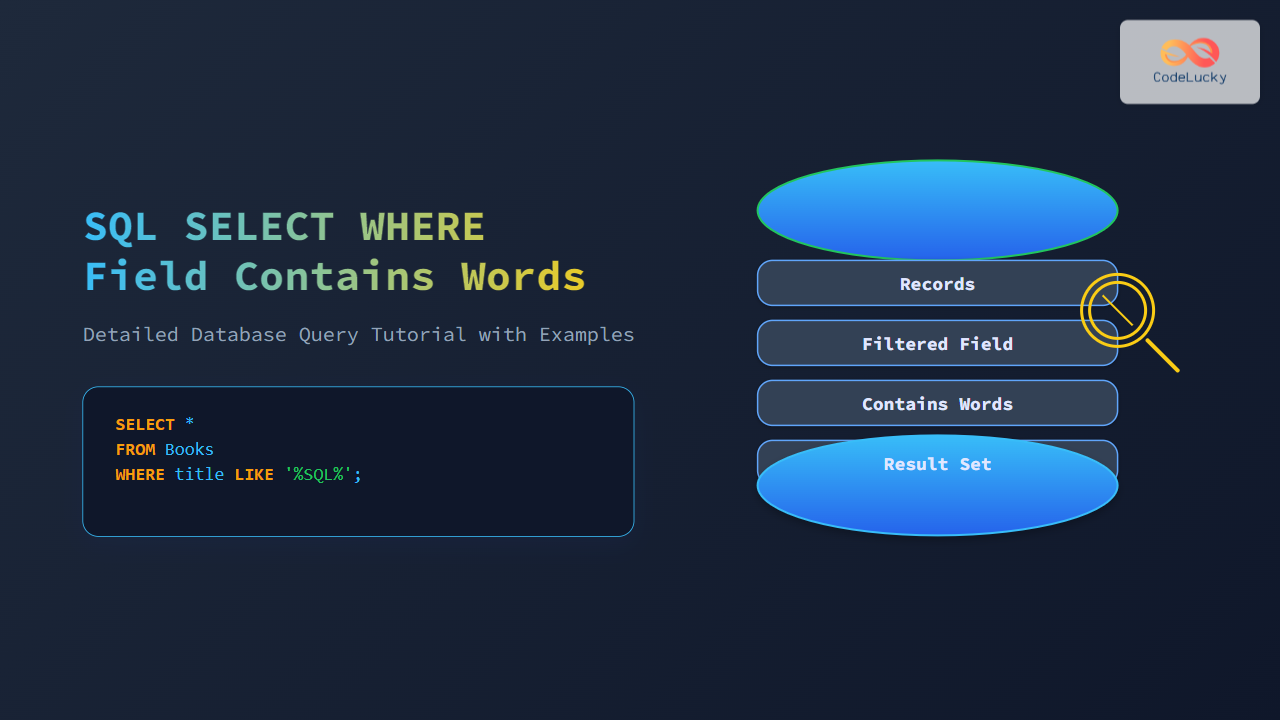

12. Optimize LIKE Queries

LIKE queries with leading wildcards can be slow. When possible, avoid them:

-- Instead of this

SELECT * FROM products WHERE product_name LIKE '%widget%';

-- Do this if possible

SELECT * FROM products WHERE product_name LIKE 'widget%';

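Keep in mind that the two patterns are not equivalent: 'widget%' matches only names that start with "widget". If you must match text anywhere in the column, consider full-text indexing for better performance.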

13. Use EXISTS Instead of IN for Subqueries

In many cases, EXISTS can be more efficient than IN for subqueries:

-- Instead of this

SELECT * FROM customers

WHERE customer_id IN (SELECT customer_id FROM orders WHERE total_amount > 1000);

-- Do this

SELECT * FROM customers c

WHERE EXISTS (SELECT 1 FROM orders o

WHERE o.customer_id = c.customer_id

AND o.total_amount > 1000);

EXISTS can stop searching as soon as it finds a match, potentially improving performance.

14. Denormalize When Necessary

While normalization is generally good for data integrity, sometimes denormalization can improve query performance:

-- Instead of joining every time

SELECT c.customer_name, o.order_date, o.total_amount

FROM customers c

JOIN orders o ON c.customer_id = o.customer_id;

-- Consider adding customer_name to the orders table

SELECT customer_name, order_date, total_amount

FROM orders;

Be cautious with this approach, as it can lead to data inconsistencies if not managed properly.
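One common way to manage that risk is to let the database keep the copied column in sync. Here is a minimal sketch using a trigger, run on SQLite through Python's sqlite3 module; the trigger name and sample data are made up, and trigger syntax differs slightly in MySQL and other systems.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, customer_name TEXT);
    CREATE TABLE orders (
        order_id      INTEGER PRIMARY KEY,
        customer_id   INTEGER,
        customer_name TEXT,   -- denormalized copy of customers.customer_name
        order_date    TEXT,
        total_amount  REAL
    );
    -- Propagate renames so the copy cannot silently drift from the source.
    CREATE TRIGGER sync_customer_name
    AFTER UPDATE OF customer_name ON customers
    BEGIN
        UPDATE orders
        SET customer_name = NEW.customer_name
        WHERE customer_id = NEW.customer_id;
    END;
    INSERT INTO customers VALUES (1, 'Acme Ltd');
    INSERT INTO orders VALUES (10, 1, 'Acme Ltd', '2023-02-01', 99.0);
""")

conn.execute("UPDATE customers SET customer_name = 'Acme Corp' WHERE customer_id = 1")
names = conn.execute("SELECT customer_name FROM orders").fetchall()
print(names)
```

The trigger makes every rename a little more expensive, which is the usual trade: faster reads in exchange for more work on writes.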

15. Use Table Partitioning for Very Large Tables

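For tables with millions of rows, the partitioning approach from technique 9 pays off the most. Naming each partition after the year it holds keeps the layout self-documenting: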

CREATE TABLE sales (

id INT,

sale_date DATE,

amount DECIMAL(10,2)

)

PARTITION BY RANGE (YEAR(sale_date)) (

PARTITION p2020 VALUES LESS THAN (2021),

PARTITION p2021 VALUES LESS THAN (2022),

PARTITION p2022 VALUES LESS THAN (2023),

PARTITION p2023 VALUES LESS THAN MAXVALUE

);

Queries that include the partitioning key (in this case, sale_date) can now access only relevant partitions, significantly improving performance for large datasets.

Monitoring and Maintaining Performance

Optimizing your queries is not a one-time task. It's an ongoing process that requires regular monitoring and maintenance.

Use Performance Monitoring Tools

Most database systems come with built-in performance monitoring tools. For example, MySQL has the Performance Schema and sys schema, which provide detailed insights into query performance.

-- Find the top 10 queries by average execution time

SELECT DIGEST_TEXT, COUNT_STAR, AVG_TIMER_WAIT

FROM performance_schema.events_statements_summary_by_digest

ORDER BY AVG_TIMER_WAIT DESC

LIMIT 10;

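This query helps you identify the slowest queries in your system, allowing you to focus your optimization efforts where they'll have the most impact. Note that AVG_TIMER_WAIT is reported in picoseconds, so divide it by 1,000,000,000 to get milliseconds.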

Regularly Update Statistics

Database statistics help the query optimizer make good decisions. Regularly updating these statistics ensures optimal query plans:

-- In MySQL

ANALYZE TABLE customers, orders, products;

-- In PostgreSQL

ANALYZE customers, orders, products;

-- In SQL Server (one table per statement)

UPDATE STATISTICS customers;

UPDATE STATISTICS orders;

UPDATE STATISTICS products;
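If you are curious what such statistics look like, SQLite makes them easy to inspect. This sketch uses Python's sqlite3 module with made-up data; SQLite stores its statistics in a table called sqlite_stat1, while MySQL, PostgreSQL, and SQL Server each keep theirs in their own internal structures.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, city TEXT)")
conn.execute("CREATE INDEX idx_city ON customers(city)")
# Illustrative data: 100 customers spread evenly over four cities.
cities = ["New York", "Boston", "Chicago", "Denver"]
conn.executemany(
    "INSERT INTO customers (city) VALUES (?)",
    [(cities[i % len(cities)],) for i in range(100)],
)

# Gather statistics, then read back what the planner will see for each index.
conn.execute("ANALYZE")
stats = dict(
    conn.execute(
        "SELECT idx, stat FROM sqlite_stat1 WHERE tbl = 'customers'"
    ).fetchall()
)
print(stats)
```

The first number in each stat string is the row count at the time ANALYZE ran, which is why statistics go stale as the table grows and need refreshing.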

Implement Query Timeouts

To prevent long-running queries from bogging down your system, implement query timeouts:

-- In MySQL

SET max_execution_time = 1000; -- 1 second timeout

SELECT * FROM large_table WHERE complex_condition;

-- In PostgreSQL

SET statement_timeout = '1s';

SELECT * FROM large_table WHERE complex_condition;

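In MySQL, max_execution_time is measured in milliseconds and applies only to read-only SELECT statements, while PostgreSQL's statement_timeout applies to any statement. Either way, a timeout keeps a single runaway query from monopolizing system resources.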
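Timeouts can also be enforced from the client side. As a rough sketch, Python's sqlite3 module lets you register a progress handler that aborts a statement once a deadline passes; the run_with_timeout helper below is hypothetical, and other drivers expose timeouts through their own settings.

```python
import sqlite3
import time

def run_with_timeout(conn, sql, timeout_s):
    """Run sql, aborting it once timeout_s seconds of wall-clock time have passed."""
    deadline = time.monotonic() + timeout_s
    # SQLite calls the handler every 10,000 virtual-machine instructions;
    # a truthy return value aborts the running statement.
    conn.set_progress_handler(lambda: time.monotonic() > deadline, 10_000)
    try:
        return conn.execute(sql).fetchall()
    finally:
        conn.set_progress_handler(None, 0)   # remove the handler again

conn = sqlite3.connect(":memory:")

# A deliberately slow query: count to fifty million with a recursive CTE.
slow_sql = """
    WITH RECURSIVE counter(n) AS (
        SELECT 1 UNION ALL SELECT n + 1 FROM counter WHERE n < 50000000
    )
    SELECT COUNT(*) FROM counter
"""

try:
    run_with_timeout(conn, slow_sql, 0.05)
    timed_out = False
except sqlite3.DatabaseError:
    timed_out = True

quick_result = run_with_timeout(conn, "SELECT 1", 1.0)
print(timed_out, quick_result)
```

Client-side limits complement server-side ones: they protect the application even when you cannot change the database configuration.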

Conclusion

SQL performance tuning is a complex but crucial skill for any database professional. By understanding how queries are executed, using appropriate indexing strategies, writing efficient queries, and regularly monitoring performance, you can ensure your database operates at peak efficiency.

Remember, optimization is an iterative process. What works well today may need adjustment as your data grows or your usage patterns change. Stay vigilant, keep learning, and your databases will thank you with lightning-fast performance!

🚀 Happy optimizing!