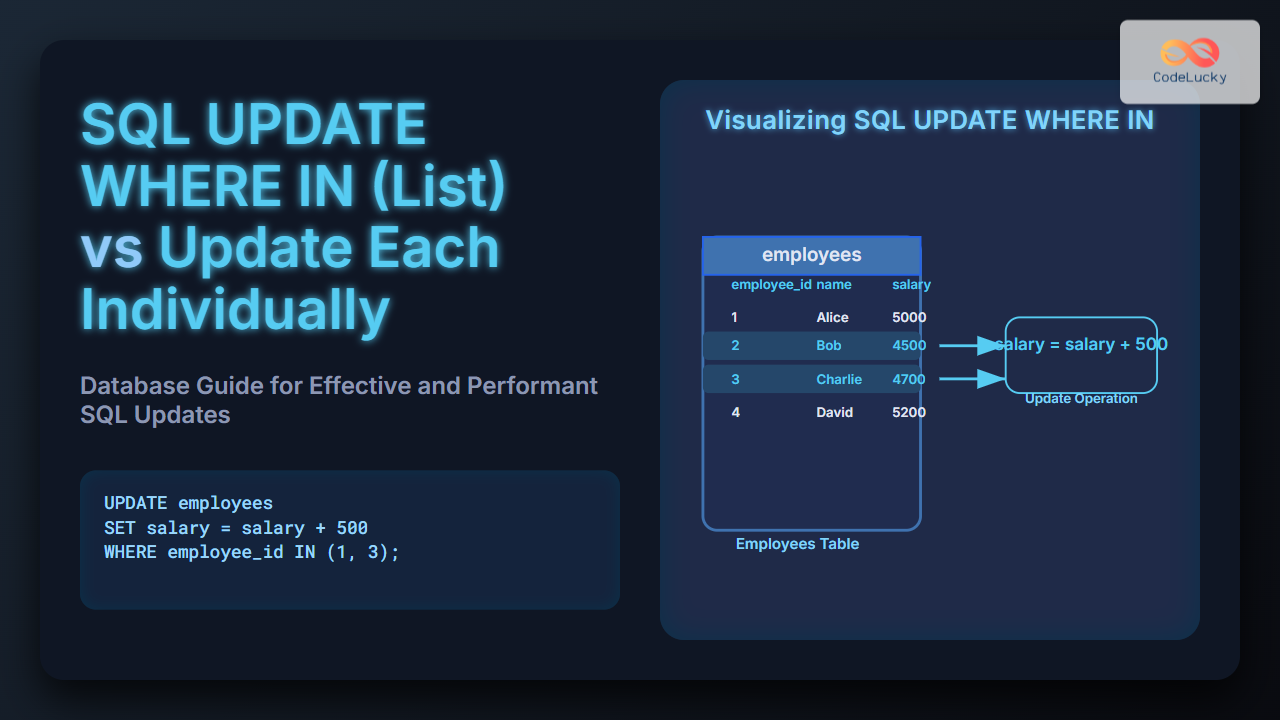

In today's data-driven world, the ability to work with diverse data formats is crucial for any database professional. While structured data has long been the cornerstone of relational databases, the rise of semi-structured data, particularly JSON (JavaScript Object Notation), has revolutionized how we store and query information. This article delves into the fascinating intersection of SQL and JSON, exploring how modern database systems have evolved to handle this flexible data format.

Understanding JSON in the Context of SQL

JSON has become ubiquitous in web applications, APIs, and NoSQL databases. Its popularity stems from its human-readable format and ability to represent complex, nested data structures. But how does this fit into the world of SQL, traditionally known for its rigid, tabular data model?

🔍 Key Insight: Modern SQL databases have adapted to support JSON, allowing developers to combine the best of both worlds – the flexibility of semi-structured data with the power of relational querying.

Let's start with a simple JSON object representing a customer:

{

"id": 1001,

"name": "John Doe",

"email": "[email protected]",

"orders": [

{

"orderId": "A001",

"product": "Laptop",

"quantity": 1

},

{

"orderId": "A002",

"product": "Mouse",

"quantity": 2

}

]

}

This JSON object contains nested data (the orders array) that would be challenging to represent in a traditional relational model without normalization.

Storing JSON in SQL Databases

Most modern relational database management systems (RDBMS) now offer native support for JSON data types. Let's explore how we can store this data in a SQL table:

CREATE TABLE customers (

id INT PRIMARY KEY,

data JSON

);

INSERT INTO customers (id, data)

VALUES (1, '{"id": 1001, "name": "John Doe", "email": "[email protected]", "orders": [{"orderId": "A001", "product": "Laptop", "quantity": 1}, {"orderId": "A002", "product": "Mouse", "quantity": 2}]}');

This creates a table with an integer ID and a JSON column to store our customer data. The entire JSON object is inserted as a string into the data column.

Querying JSON Data in SQL

Now that we have JSON data in our SQL database, let's explore various ways to query and manipulate this data.

1. Extracting Scalar Values

To extract simple values from our JSON data, we can use JSON path expressions. The exact syntax may vary slightly between different database systems, but the concept remains the same.

SELECT

id,

data->>'name' AS customer_name,

data->>'email' AS email

FROM customers;

This query extracts the name and email fields from our JSON data. The result would look like this:

| id | customer_name | |

|---|---|---|

| 1 | John Doe | [email protected] |

🔑 Pro Tip: The -> operator typically returns a JSON object, while ->> returns text. Use ->> when you want to extract a value as a string.

2. Working with Nested Arrays

One of JSON's strengths is its ability to represent nested data structures. Let's query the orders array in our customer data:

SELECT

id,

json_array_elements(data->'orders') AS order

FROM customers;

This query uses the json_array_elements function to unnest the orders array. The result might look like:

| id | order |

|---|---|

| 1 | {"orderId": "A001", "product": "Laptop", "quantity": 1} |

| 1 | {"orderId": "A002", "product": "Mouse", "quantity": 2} |

3. Filtering Based on JSON Properties

We can also use JSON properties in our WHERE clauses to filter data:

SELECT id

FROM customers

WHERE data->>'name' = 'John Doe';

This query finds all customers named "John Doe".

4. Updating JSON Data

Updating JSON data in SQL requires careful consideration. Here's an example of how to update a nested value:

UPDATE customers

SET data = jsonb_set(data::jsonb, '{email}', '"[email protected]"'::jsonb)

WHERE id = 1;

This query updates John's email address. Note the use of jsonb_set, which is specific to PostgreSQL. Other databases may have different functions for updating JSON data.

Advanced JSON Operations in SQL

As we dive deeper into JSON and SQL integration, let's explore some more advanced operations that showcase the power of this combination.

5. Indexing JSON Fields

To improve query performance on frequently accessed JSON fields, we can create indexes:

CREATE INDEX idx_customer_name ON customers ((data->>'name'));

This creates an index on the name field of our JSON data, which can significantly speed up queries that filter or sort by customer name.

6. Aggregating JSON Data

JSON data can also be aggregated using SQL functions. Let's calculate the total quantity of items ordered by each customer:

SELECT

id,

data->>'name' AS customer_name,

SUM((json_array_elements(data->'orders')->>'quantity')::int) AS total_quantity

FROM customers

GROUP BY id, data->>'name';

This query sums up the quantity field from all orders for each customer. The result might look like:

| id | customer_name | total_quantity |

|---|---|---|

| 1 | John Doe | 3 |

7. Combining JSON with Regular SQL Columns

In real-world scenarios, you might have a mix of regular SQL columns and JSON data. Let's create a more complex table structure:

CREATE TABLE orders (

order_id VARCHAR(10) PRIMARY KEY,

customer_id INT,

order_date DATE,

order_details JSON

);

INSERT INTO orders (order_id, customer_id, order_date, order_details)

VALUES

('A001', 1001, '2023-01-15', '{"product": "Laptop", "quantity": 1, "price": 999.99, "specs": {"brand": "TechPro", "model": "X1"}}'),

('A002', 1001, '2023-01-16', '{"product": "Mouse", "quantity": 2, "price": 24.99, "specs": {"brand": "ClickMaster", "model": "M2"}}');

Now we can combine regular SQL columns with JSON data in our queries:

SELECT

o.order_id,

o.order_date,

o.order_details->>'product' AS product,

(o.order_details->>'quantity')::int AS quantity,

(o.order_details->>'price')::numeric AS unit_price,

((o.order_details->>'quantity')::int * (o.order_details->>'price')::numeric) AS total_price,

o.order_details->'specs'->>'brand' AS brand

FROM orders o

WHERE o.customer_id = 1001

ORDER BY o.order_date;

This query combines regular SQL columns (order_id, order_date) with JSON data, performing calculations and extracting nested information. The result would be:

| order_id | order_date | product | quantity | unit_price | total_price | brand |

|---|---|---|---|---|---|---|

| A001 | 2023-01-15 | Laptop | 1 | 999.99 | 999.99 | TechPro |

| A002 | 2023-01-16 | Mouse | 2 | 24.99 | 49.98 | ClickMaster |

JSON Validation and Constraints

When working with JSON in SQL, it's important to ensure data integrity. While JSON is flexible, we often want to enforce some structure.

8. JSON Schema Validation

Some databases support JSON schema validation. For example, in PostgreSQL, you can use the jsonb_typeof function to check the type of a JSON field:

ALTER TABLE customers

ADD CONSTRAINT check_customer_data

CHECK (

jsonb_typeof(data->'id') = 'number' AND

jsonb_typeof(data->'name') = 'string' AND

jsonb_typeof(data->'email') = 'string' AND

jsonb_typeof(data->'orders') = 'array'

);

This constraint ensures that our customer data adheres to a specific structure.

9. Handling NULL Values in JSON

When working with JSON, you might encounter NULL values. It's important to handle these correctly:

SELECT

id,

COALESCE(data->>'name', 'Unknown') AS customer_name,

COALESCE(data->>'email', 'No email provided') AS email

FROM customers;

This query uses COALESCE to provide default values if the JSON fields are NULL.

Performance Considerations

While JSON in SQL offers great flexibility, it's important to consider performance implications:

🚀 Performance Tip: Extracting data from JSON fields can be slower than querying regular columns. For frequently accessed fields, consider duplicating the data in regular columns or creating computed columns.

ALTER TABLE customers

ADD COLUMN extracted_name VARCHAR(100) GENERATED ALWAYS AS (data->>'name') STORED;

This creates a computed column that extracts the name from the JSON data, which can then be indexed and queried more efficiently.

Conclusion

The integration of JSON with SQL represents a significant evolution in database technology, bridging the gap between structured and semi-structured data. By supporting JSON, SQL databases have become more versatile, capable of handling complex, nested data structures while retaining the powerful querying and transaction capabilities that make SQL indispensable.

As we've seen through various examples, from basic JSON extraction to complex queries combining JSON and regular SQL data, the possibilities are vast. This flexibility allows developers and data analysts to work with diverse data formats within a familiar SQL environment.

However, it's crucial to approach JSON in SQL with careful consideration of data integrity, performance, and query optimization. Proper indexing, constraints, and thoughtful data modeling are key to leveraging the full potential of JSON in SQL databases.

As data continues to grow in volume and complexity, the ability to work effectively with both structured and semi-structured data becomes increasingly valuable. Mastering the use of JSON in SQL equips you with a powerful tool to handle a wide range of modern data challenges, making you a more versatile and effective database professional.

Remember, the specific syntax and functions for working with JSON may vary between different database systems, so always consult your database's documentation for the most accurate and up-to-date information.

Happy querying, and may your data always be both flexible and queryable! 🎉📊