In today’s web architecture, delivering fast, scalable user experiences is paramount, and server-side caching is one of the fundamental strategies for achieving it. Basic caching principles are widely known. Advanced server-side caching techniques go further, cutting latency and backend load in ways that matter most for modern high-demand applications.

This guide covers advanced server-side caching strategies, with methods, best practices, and practical examples for improving backend performance. Whether you operate a REST API, a dynamic web app, or microservices, these techniques can help you reduce latency, lower database load, and improve user experience.

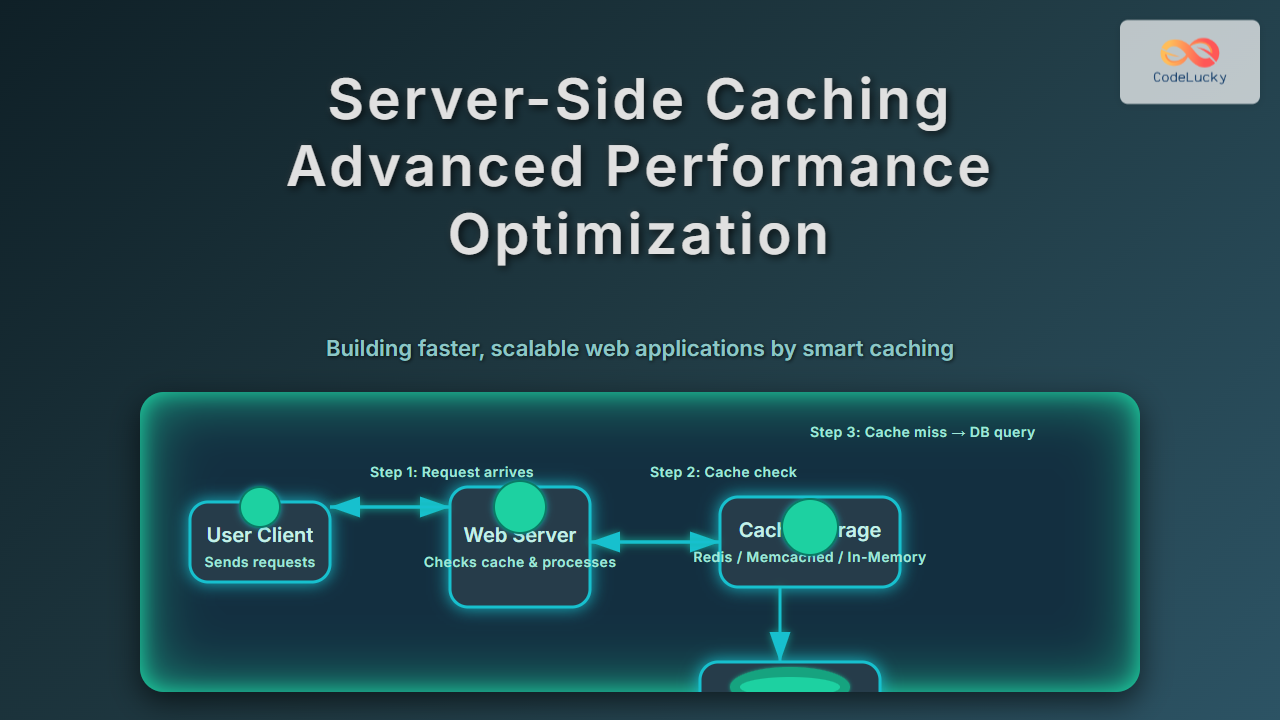

What Is Server-Side Caching?

Server-side caching refers to temporarily storing data or computation results on the server, so subsequent requests for the same data can be served faster without reprocessing or querying the database. This is crucial in scenarios where data retrieval or processing is resource-intensive or slow.

Common cacheable data includes:

- API responses

- Database query results

- Rendered HTML pages or fragments

- Sessions and authentication tokens

- Configuration settings and frequently used business logic outputs

Key Benefits of Server-Side Caching

- Reduced Latency: Faster responses, since cached data is served without recomputation or a database round trip.

- Reduced Server Load: Minimizes processing and database queries, freeing resources.

- Improved Scalability: Supports higher concurrency with less resource contention.

- Cost Efficiency: Lower compute and database load can mean smaller, cheaper infrastructure.

Advanced Server-Side Caching Strategies

1. Cache Invalidation and Expiry Policies

One of the trickiest parts of caching is cache invalidation — ensuring cached data stays consistent with the source of truth. Common strategies include:

- Time-based Expiry (TTL): Cached data expires after a defined duration.

- Event-based Invalidation: Invalidate cache when underlying data changes, triggered by events or hooks.

- Cache Stampede Protection: When a popular entry expires, many requests can miss at once and all hit the database (a “thundering herd”). Locking or request coalescing lets one request recompute the value while the others wait for its result.
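The three strategies above can be combined in one small in-process sketch. It assumes a single Node.js process: entries expire after a TTL, a hypothetical `user:updated` event evicts a key when the underlying record changes, and concurrent misses for the same key share one pending load (request coalescing), so an expired hot key triggers only one query. `CoalescingCache`, the loader, and the event name are illustrative, not part of any library. Across several servers, coalescing would need a distributed lock instead.

```javascript
const { EventEmitter } = require('events');

// Illustrative in-process cache combining TTL expiry, explicit invalidation,
// and request coalescing. `loader` is a hypothetical async function that
// reads from the source of truth (for example, a database query).
class CoalescingCache {
  constructor(loader, ttlMs = 60000) {
    this.loader = loader;
    this.ttlMs = ttlMs;
    this.entries = new Map();  // key -> { value, expiresAt }
    this.inFlight = new Map(); // key -> promise for a load already in progress
  }

  get(key) {
    const entry = this.entries.get(key);
    if (entry && entry.expiresAt > Date.now()) {
      return Promise.resolve(entry.value); // fresh hit (TTL not yet reached)
    }
    // Request coalescing: later callers share the load that is already running.
    if (this.inFlight.has(key)) return this.inFlight.get(key);

    const pending = Promise.resolve()
      .then(() => this.loader(key)) // deferred so inFlight is registered first
      .then((value) => {
        this.entries.set(key, { value, expiresAt: Date.now() + this.ttlMs });
        return value;
      })
      .finally(() => this.inFlight.delete(key)); // also cleans up after errors
    this.inFlight.set(key, pending);
    return pending;
  }

  invalidate(key) {
    this.entries.delete(key);
  }
}

// Event-based invalidation: code that writes to the database emits a
// (hypothetical) 'user:updated' event, and the cache drops the stale entry.
const events = new EventEmitter();
let dbQueries = 0;
const userCache = new CoalescingCache(async (key) => {
  dbQueries += 1;
  await new Promise((resolve) => setTimeout(resolve, 50)); // simulated query latency
  return { key, loadedAt: Date.now() };
});
events.on('user:updated', (key) => userCache.invalidate(key));

(async () => {
  // Ten concurrent misses for the same cold key trigger a single load.
  await Promise.all(Array.from({ length: 10 }, () => userCache.get('user:42')));
  console.log('loads after burst:', dbQueries); // loads after burst: 1
  events.emit('user:updated', 'user:42');
  await userCache.get('user:42');
  console.log('loads after update:', dbQueries); // loads after update: 2
})();
```

One limitation of this sketch: if an invalidation arrives while a load is still in flight, that load can repopulate the cache with the value it read before the update. A per-key version counter is one common way to guard against this.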

2. Hierarchical Caching

Implement multiple cache layers to optimize different parts of the system:

- In-process memory cache: Ultra-fast caches held in the application’s own RAM (e.g., a Map or an LRU cache) for the hottest data; each server keeps its own copy.

- Distributed caches: In-memory stores such as Redis or Memcached, shared across multiple servers for scalability.

- Database-level caching: Database query caches or materialized views.

- Application layer caches: Cache rendered templates or partial HTML.
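A minimal sketch of how these layers can chain together on a read, assuming three tiers: an in-process Map, a shared cache, and the database. The shared cache (simulated here with a second Map) and `loadFromDb` are hypothetical placeholders for real clients.

```javascript
// Illustrative two-level lookup: a short-lived in-process Map (L1) in front of
// a shared cache (L2) and the database. `sharedCache` and `loadFromDb` are
// hypothetical stand-ins; in production L2 would typically be Redis or Memcached.
const localCache = new Map();  // per-process: key -> { value, expiresAt }
const sharedCache = new Map(); // simulates a network cache shared by all servers
const LOCAL_TTL_MS = 5000;     // short L1 TTL bounds how stale one process can get

let dbReads = 0;
async function loadFromDb(key) {
  dbReads += 1;
  return `value-for-${key}`;
}

async function tieredGet(key) {
  // 1. Fastest: this process's own memory, no network hop.
  const local = localCache.get(key);
  if (local && local.expiresAt > Date.now()) return { value: local.value, tier: 'L1' };

  // 2. Shared cache (with a real Redis client this would be an awaited GET).
  let tier = 'L2';
  let value = sharedCache.get(key);

  // 3. Source of truth, then populate L2 so other servers benefit too.
  if (value === undefined) {
    tier = 'DB';
    value = await loadFromDb(key);
    sharedCache.set(key, value);
  }

  localCache.set(key, { value, expiresAt: Date.now() + LOCAL_TTL_MS }); // refill L1
  return { value, tier };
}

(async () => {
  console.log((await tieredGet('config')).tier); // DB
  console.log((await tieredGet('config')).tier); // L1
  localCache.delete('config'); // simulate another server, or an L1 expiry
  console.log((await tieredGet('config')).tier); // L2
})();
```

One reasonable design choice is to keep the L1 TTL much shorter than the L2 TTL: each process may serve slightly stale data, but only for a few seconds, while the shared tier absorbs most of the database traffic.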

3. Cache-Aside Pattern

In the cache-aside (lazy loading) pattern, the application checks the cache before querying the database. On a cache miss, it fetches the data from the database, stores it in the cache, and returns the result. Because the cache holds only data that has actually been requested, the pattern is flexible and widely used.

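```javascript
// Cache Aside Example in Node.js with Redis
// Assumptions: `redisClient` is an ioredis client (its set() accepts 'EX', seconds)
// and `db` is a mysql2/promise pool (its query() resolves to [rows, fields]).
async function getUser(userId) {
  const cacheKey = `user:${userId}`;

  const cached = await redisClient.get(cacheKey);
  if (cached) {
    console.log('Cache hit');
    return JSON.parse(cached);
  }

  console.log('Cache miss, querying DB');
  const [rows] = await db.query('SELECT * FROM users WHERE id = ?', [userId]);
  const userData = rows[0] ?? null; // a single user row, or null if not found

  if (userData) {
    // Cache for 1 hour; nothing is cached when the user does not exist
    await redisClient.set(cacheKey, JSON.stringify(userData), 'EX', 3600);
  }
  return userData;
}
```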

Expected output: the first call for a given user logs “Cache miss, querying DB”; later calls for that user within the one-hour TTL log “Cache hit” and skip the database query entirely.

4. Write-Through and Write-Back Caches

Unlike cache-aside, which populates the cache only on reads, these patterns route writes through the cache:

- Write-Through Cache: Each write goes to the cache and the persistent store as part of the same operation, keeping the two consistent at the cost of slower writes.

- Write-Back Cache: Writes go to the cache first and are persisted asynchronously later, improving write speed but risking data loss if the cache fails before pending writes are flushed.
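The difference between the two write paths can be sketched side by side. This is a minimal illustration, not a production design: `SlowStore` is a hypothetical persistent store (a Map with simulated write latency), and the write-back variant flushes dirty keys on a timer and on `close()`.

```javascript
// Illustrative contrast of write-through and write-back. `SlowStore` is a
// hypothetical persistent store (a Map with simulated write latency).
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

class SlowStore {
  constructor() { this.data = new Map(); }
  async set(key, value) { await sleep(20); this.data.set(key, value); }
}

class WriteThroughCache {
  constructor(store) { this.store = store; this.cache = new Map(); }
  async set(key, value) {
    await this.store.set(key, value); // persist first; the caller waits for the store
    this.cache.set(key, value);       // cache only ever holds data the store accepted
  }
  get(key) { return this.cache.get(key); }
}

class WriteBackCache {
  constructor(store, flushIntervalMs = 1000) {
    this.store = store;
    this.cache = new Map();
    this.dirty = new Set(); // keys written to the cache but not yet persisted
    this.timer = setInterval(() => this.flush(), flushIntervalMs);
  }
  set(key, value) {
    this.cache.set(key, value); // returns immediately; persistence is deferred
    this.dirty.add(key);
  }
  get(key) { return this.cache.get(key); }
  async flush() {
    const keys = [...this.dirty];
    this.dirty.clear();
    // Writes made since the last completed flush are lost if the process dies here.
    await Promise.all(keys.map((key) => this.store.set(key, this.cache.get(key))));
  }
  async close() { clearInterval(this.timer); await this.flush(); }
}

(async () => {
  const wb = new WriteBackCache(new SlowStore(), 100);
  wb.set('x', 1);
  console.log(wb.store.data.has('x')); // false: only in the cache so far
  await wb.close();
  console.log(wb.store.data.get('x')); // 1
})();
```

The write-through `set` is only as fast as the store, while the write-back `set` returns at memory speed; the price is the window between a write and the next flush.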

5. Partial and Fragment Caching

Cache pieces of a response instead of the entire output, e.g., rendered widgets or HTML fragments. This suits highly dynamic pages: expensive, slowly changing fragments are reused across requests while the personalized parts are still rendered fresh each time.
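A minimal sketch of fragment caching, assuming string-based HTML rendering: a sidebar shared by all users is cached with a TTL, while the per-user greeting is rendered on every request. `cachedFragment` and `renderSidebar` are hypothetical names standing in for a real template engine’s fragment cache and an expensive render.

```javascript
// Illustrative fragment cache: an expensive, rarely changing sidebar is cached
// as an HTML string, while the per-user greeting is rendered on every request.
// `renderSidebar` is a hypothetical stand-in for an expensive template render.
const fragmentCache = new Map(); // fragment key -> { html, expiresAt }

function cachedFragment(key, ttlMs, render) {
  const hit = fragmentCache.get(key);
  if (hit && hit.expiresAt > Date.now()) return hit.html;
  const html = render();
  fragmentCache.set(key, { html, expiresAt: Date.now() + ttlMs });
  return html;
}

// Escape user-supplied text before inserting it into HTML
const escapeHtml = (s) =>
  String(s).replace(/[&<>"']/g, (c) =>
    ({ '&': '&amp;', '<': '&lt;', '>': '&gt;', '"': '&quot;', "'": '&#39;' })[c]);

let sidebarRenders = 0;
function renderSidebar() {
  sidebarRenders += 1;
  return '<aside><ul><li>Popular post A</li><li>Popular post B</li></ul></aside>';
}

function renderPage(user) {
  const sidebar = cachedFragment('sidebar:v1', 60000, renderSidebar); // shared by all users
  const greeting = `<h1>Hello, ${escapeHtml(user.name)}</h1>`;        // dynamic, never cached
  return `<html><body>${greeting}${sidebar}</body></html>`;
}

renderPage({ name: 'Ada' });
renderPage({ name: 'Lin' });
console.log(sidebarRenders); // 1
```

Versioning the fragment key (`sidebar:v1`) is one simple way to invalidate every cached copy when the template itself changes.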

Measuring Cache Effectiveness

Important metrics to monitor include:

- Cache Hit Ratio: Percentage of requests served from cache.

- Latency Reduction: Time saved per request versus no cache.

- Eviction Rate: Frequency of cached data removal due to capacity limits.
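A minimal sketch of collecting the hit ratio and eviction count in process. It assumes a size-bounded LRU cache built on the insertion order of JavaScript’s Map; `InstrumentedLruCache` is a hypothetical name. Dedicated cache servers report comparable counters themselves (Redis, for example, exposes hits, misses, and evicted keys in its INFO output). Latency reduction is usually measured separately, by timing requests with and without the cache.

```javascript
// Illustrative size-bounded LRU cache that counts hits, misses, and evictions.
// Map iterates in insertion order, so the first key is the least recently used.
class InstrumentedLruCache {
  constructor(maxEntries) {
    this.maxEntries = maxEntries;
    this.map = new Map();
    this.stats = { hits: 0, misses: 0, evictions: 0 };
  }

  get(key) {
    if (!this.map.has(key)) {
      this.stats.misses += 1;
      return undefined;
    }
    const value = this.map.get(key);
    this.map.delete(key); // re-insert to mark as most recently used
    this.map.set(key, value);
    this.stats.hits += 1;
    return value;
  }

  set(key, value) {
    if (this.map.has(key)) this.map.delete(key);
    this.map.set(key, value);
    if (this.map.size > this.maxEntries) {
      const oldest = this.map.keys().next().value; // least recently used key
      this.map.delete(oldest);
      this.stats.evictions += 1; // capacity-driven removal
    }
  }

  hitRatio() {
    const total = this.stats.hits + this.stats.misses;
    return total === 0 ? 0 : this.stats.hits / total;
  }
}

const lru = new InstrumentedLruCache(2);
lru.set('a', 1);
lru.set('b', 2);
lru.get('a');    // hit
lru.set('c', 3); // evicts 'b', the least recently used
lru.get('b');    // miss
console.log(lru.hitRatio(), lru.stats.evictions); // 0.5 1
```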

Practical Example: In-Memory Cache Simulation

Here’s a simple JavaScript script that simulates cache hits, misses, and TTL expiration:

```javascript
class SimpleCache {
  constructor(ttl = 5000) {
    this.ttl = ttl; // time-to-live in milliseconds
    this.cache = new Map();
  }

  set(key, value) {
    const expiry = Date.now() + this.ttl;
    this.cache.set(key, { value, expiry });
    console.log(`Cached key: ${key}`);
  }

  get(key) {
    const cached = this.cache.get(key);
    if (!cached) {
      console.log(`Cache miss for key: ${key}`);
      return null;
    }
    if (cached.expiry <= Date.now()) {
      // Entry is stale: evict it and treat the lookup as a miss
      this.cache.delete(key);
      console.log(`Cache expired for key: ${key}`);
      return null;
    }
    console.log(`Cache hit for key: ${key}`);
    return cached.value;
  }
}

const cache = new SimpleCache(3000); // 3-second TTL

cache.set('foo', 'bar');
setTimeout(() => console.log('Get foo:', cache.get('foo')), 1000); // Cache hit
setTimeout(() => console.log('Get foo:', cache.get('foo')), 4000); // Expired
```

Summary

Advanced server-side caching is a critical lever for performance optimization in modern web apps. By employing appropriate cache patterns, invalidation strategies, and multi-layer caching, backend systems can achieve faster response times, lower cost, and better scalability.

Implement caching thoughtfully, monitor effectiveness, and combine multiple techniques tailored to your application’s requirements to unlock maximum performance gains.