Random Forest is one of the most powerful and widely used ensemble learning algorithms in machine learning. As the name suggests, it builds a forest of decision trees and combines their predictions to achieve higher accuracy and robustness compared to a single decision tree. This article explores the idea of Random Forest in detail, providing clear explanations, visual diagrams, and examples to help you understand how it works and why it is so effective.

What is Random Forest?

Random Forest is an ensemble machine learning algorithm that constructs multiple decision trees during training and outputs the mode of the trees' predicted classes (classification) or the mean of their predictions (regression). It belongs to the bagging family of ensemble methods, where multiple models are trained on different bootstrap samples of the data and their results are aggregated.

Key Concepts of Random Forest

- Decision Trees: The base learners in random forest are decision trees, each built independently using a random subset of training data.

- Bagging (Bootstrap Aggregating): Each tree is trained on a random sample (with replacement) of the dataset to increase diversity.

- Feature Randomness: At each split in a tree, a random subset of input features is considered, which reduces correlation between trees.

- Majority Voting / Averaging: For classification tasks, the final decision is made by majority voting among trees. For regression, the average value of predictions is used.
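The four concepts above can be combined by hand in a few lines. The following is only a minimal sketch, assuming scikit-learn and NumPy are installed; the Iris data, the choice of 25 trees, and names such as `trees` and `forest_pred` are illustrative assumptions, and a real `RandomForestClassifier` differs in its details.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42
)

rng = np.random.default_rng(0)
n_trees = 25                      # illustrative value, not a recommendation
n_classes = len(np.unique(y))
trees = []

for _ in range(n_trees):
    # Bagging: draw a bootstrap sample (same size as the training set, with replacement)
    idx = rng.integers(0, len(X_train), size=len(X_train))
    # Feature randomness: each split considers only sqrt(n_features) candidate features
    tree = DecisionTreeClassifier(
        max_features="sqrt", random_state=int(rng.integers(1_000_000))
    )
    tree.fit(X_train[idx], y_train[idx])
    trees.append(tree)

# Majority voting: every tree predicts, and the most common label wins
all_preds = np.stack([t.predict(X_test) for t in trees])  # shape (n_trees, n_test)
forest_pred = np.array(
    [np.bincount(col, minlength=n_classes).argmax() for col in all_preds.T]
)
manual_accuracy = (forest_pred == y_test).mean()
print("Manual forest accuracy:", manual_accuracy)
```

Each tree sees a different bootstrap sample and a different random subset of features at every split, which is what makes the individual votes differ enough to be worth combining.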

Why Does Random Forest Work So Well?

Random Forest reduces variance and limits overfitting by averaging the results of many weakly correlated decision trees. Even if individual trees overfit, their errors partly cancel when the predictions are combined, so the ensemble generalizes better. This leads to improved performance and stability across different datasets.
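The variance argument can be made precise under a simplifying assumption that is not part of the article itself: suppose each of the B trees' predictions T_b(x) has the same variance σ² and every pair of trees has the same correlation ρ. The variance of the averaged prediction is then:

```latex
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B} T_b(x)\right)
  = \rho\,\sigma^{2} + \frac{1-\rho}{B}\,\sigma^{2}
```

Adding more trees shrinks only the second term. The first term depends on how correlated the trees are, which is why Random Forest adds feature randomness on top of bagging: lowering ρ lowers the floor that averaging alone cannot get below.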

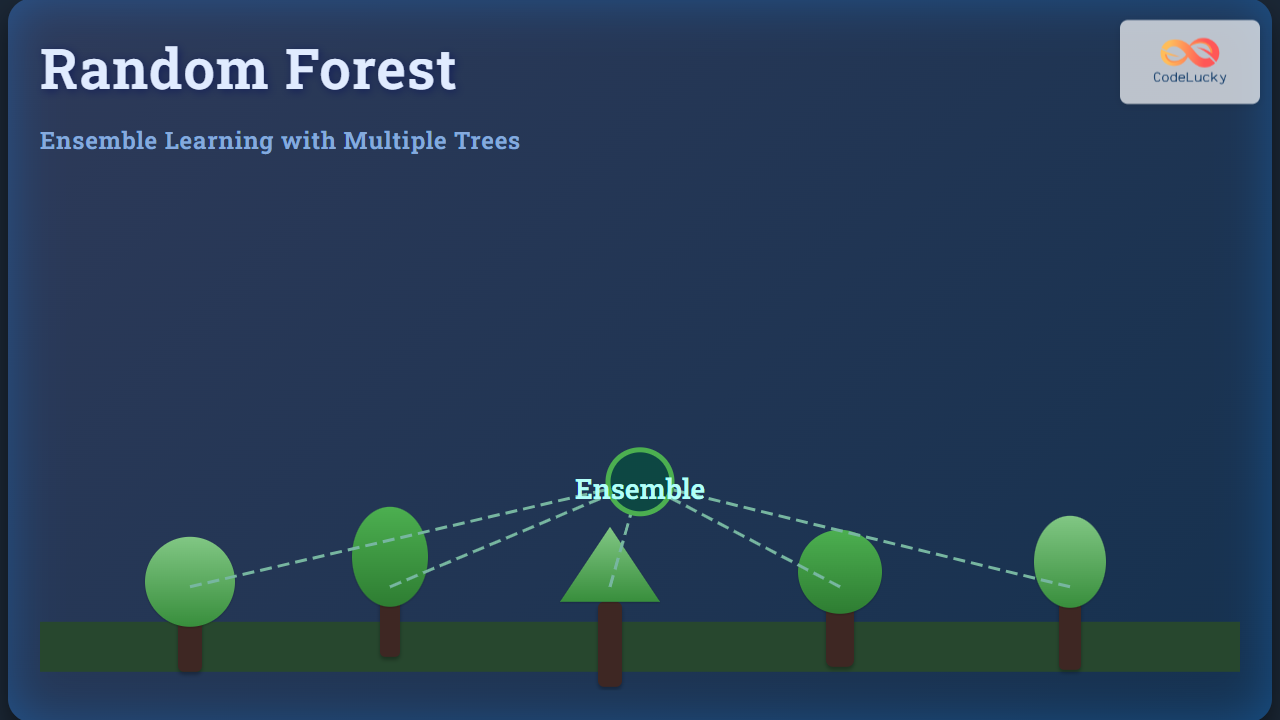

Diagram: Random Forest builds multiple decision trees on different bootstrapped samples and aggregates their predictions.

Example of Random Forest in Classification

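Imagine a dataset where we want to classify animals as cat or dog based on features like weight, ear shape, and whether they bark. A single decision tree may misclassify some animals, but a Random Forest with 100 trees will consider multiple perspectives and vote for the most likely category. The code that follows applies the same idea to the Iris dataset bundled with scikit-learn, which has three flower species and four numeric features.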

    from sklearn.datasets import load_iris
    from sklearn.model_selection import train_test_split
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.metrics import accuracy_score

    # Load dataset
    iris = load_iris()
    X_train, X_test, y_train, y_test = train_test_split(
        iris.data, iris.target, test_size=0.3, random_state=42
    )

    # Random Forest Model
    clf = RandomForestClassifier(n_estimators=100, random_state=42)
    clf.fit(X_train, y_train)

    y_pred = clf.predict(X_test)
    print("Accuracy:", accuracy_score(y_test, y_pred))

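The output will typically show high accuracy (often above 95%). Iris is a small, well-separated dataset, so a single decision tree can also score well on it; the advantage of ensemble learning tends to show more clearly on larger or noisier datasets.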
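To check the comparison with a single decision tree directly, the sketch below scores both models with 5-fold cross-validation. The breast cancer dataset bundled with scikit-learn is used here as an assumption of this example, not something the article prescribes, and the variable names are illustrative.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

models = {
    "Single decision tree": DecisionTreeClassifier(random_state=42),
    "Random forest (100 trees)": RandomForestClassifier(
        n_estimators=100, random_state=42
    ),
}

cv_means = {}
for name, model in models.items():
    # cross_val_score refits a fresh clone of the model on each of the 5 folds
    scores = cross_val_score(model, X, y, cv=5)
    cv_means[name] = scores.mean()
    print(f"{name}: {scores.mean():.3f} +/- {scores.std():.3f}")
```

Cross-validation gives a steadier comparison than a single train/test split, where one lucky or unlucky split can hide the difference between the two models.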

Advantages of Random Forest

- High accuracy and reliable performance on most datasets.

- Works for both classification and regression tasks.

- Tolerates noisy data well. Missing-value handling depends on the implementation, and many require imputation before training.

- Less prone to overfitting compared to single decision trees.

- Provides feature importance, helping identify key input variables.
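Since the list above notes that the same algorithm covers regression, here is a minimal sketch of that case. The diabetes dataset bundled with scikit-learn and the variable names are assumptions made for illustration. The last lines check that the forest's prediction is the mean of the individual trees' predictions.

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split

X, y = load_diabetes(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42
)

reg = RandomForestRegressor(n_estimators=100, random_state=42)
reg.fit(X_train, y_train)

forest_pred = reg.predict(X_test)
test_r2 = r2_score(y_test, forest_pred)
print("R^2 on the test split:", test_r2)

# Averaging: collect each tree's prediction and compare the mean with the forest output
per_tree = np.stack([tree.predict(X_test) for tree in reg.estimators_])
matches_mean = np.allclose(forest_pred, per_tree.mean(axis=0))
print("Forest prediction equals mean of trees:", matches_mean)
```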

Limitations of Random Forest

- Can be computationally expensive with very large datasets and many trees.

- Harder to interpret compared to decision trees.

- Prediction can be slower than with a single tree, because every tree in the forest must be evaluated.

Visualizing Random Forest Decision Making

Multiple trees vote for a class, and the class with majority votes becomes the final prediction.
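The voting can be inspected directly by asking each fitted tree for its own prediction. This is a sketch assuming the same Iris setup as the classification example; `tree_votes` and `vote_counts` are illustrative names. Note that scikit-learn's `RandomForestClassifier` combines trees by averaging their predicted class probabilities rather than counting hard votes, so on close calls the tally below could in principle differ from `clf.predict`.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.3, random_state=42
)
clf = RandomForestClassifier(n_estimators=100, random_state=42)
clf.fit(X_train, y_train)

sample = X_test[:1]  # one flower, kept 2-D because predict() expects a 2-D array

# Each fitted tree returns a class index (as a float) for the sample
tree_votes = np.array([int(tree.predict(sample)[0]) for tree in clf.estimators_])
vote_counts = np.bincount(tree_votes, minlength=len(clf.classes_))

for name, count in zip(iris.target_names, vote_counts):
    print(f"{name}: {count} votes")
print("Forest prediction:", iris.target_names[clf.predict(sample)[0]])
```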

Random Forest for Feature Importance

One of the biggest advantages of Random Forest is its ability to measure feature importance, which helps in understanding which input features contribute most to predictions.

    import pandas as pd

    # Feature importance (reuses clf and iris from the classification example)
    importance = clf.feature_importances_
    feature_names = iris.feature_names

    importance_df = pd.DataFrame({
        "Feature": feature_names,
        "Importance": importance
    }).sort_values("Importance", ascending=False)

    print(importance_df)

This output shows which features matter most in classification. For example, in the Iris dataset, petal length and petal width are usually the most important predictors.

Interactive Thought Experiment

To intuitively understand Random Forest, consider 10 students trying to guess the weight of a watermelon. If you rely on a single student's guess, the error can be large. But if all 10 students guess independently, the average of their guesses is usually much closer to the true weight, because individual overestimates and underestimates partly cancel. Random Forest uses the same wisdom-of-the-crowd principle to make better predictions.
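The thought experiment can be simulated in a few lines. This is a rough sketch with made-up numbers: the true weight of 5 kg and the 1 kg spread of each student's error are hypothetical, and the guesses are assumed to be unbiased and independent.

```python
import numpy as np

rng = np.random.default_rng(0)
true_weight = 5.0   # kg, hypothetical
guess_noise = 1.0   # standard deviation of one student's error in kg, hypothetical
n_students = 10
n_trials = 100_000  # repeat the experiment many times to estimate typical errors

# Each row is one repetition of the experiment, each column one student's guess
guesses = true_weight + rng.normal(0.0, guess_noise, size=(n_trials, n_students))

single_error = np.abs(guesses[:, 0] - true_weight).mean()
crowd_error = np.abs(guesses.mean(axis=1) - true_weight).mean()

print(f"Mean error of one student: {single_error:.3f} kg")
print(f"Mean error of the 10-student average: {crowd_error:.3f} kg")
```

The improvement relies on the guesses being independent. If every student copied the same neighbor, averaging would not help, which mirrors why Random Forest works to keep its trees from being too similar to one another.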

Conclusion

Random Forest is a versatile and robust machine learning algorithm that works well for both classification and regression tasks. By combining multiple decision trees through bootstrap sampling and feature randomness, it achieves high accuracy, reduces overfitting, and provides insights into feature importance. If you are looking for a reliable default algorithm for your dataset, Random Forest is often a strong starting point.

At CodeLucky.com, we emphasize clarity and depth in explaining algorithms. Hopefully, this article has made the concept of Random Forest clear with examples, diagrams, and practical code snippets you can try yourself.