Python multithreading is a powerful technique that allows developers to execute multiple threads concurrently within a single program. This approach can significantly enhance the performance of I/O-bound and CPU-bound tasks, making it an essential tool in a Python programmer's toolkit. In this comprehensive guide, we'll dive deep into the world of Python multithreading, exploring its concepts, implementation, and best practices.

Understanding Threads in Python

Before we delve into multithreading, it's crucial to understand what threads are and how they work in Python.

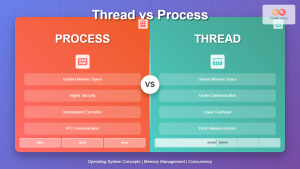

🧵 A thread is the smallest unit of execution within a process. It's a lightweight, independent sequence of instructions that can be scheduled and executed by the operating system.

In Python, threads are managed by the Global Interpreter Lock (GIL), which allows only one thread to execute Python bytecode at a time. This might seem counterintuitive for concurrent execution, but Python's threading is still highly effective for I/O-bound tasks and can provide significant performance improvements in many scenarios.

The threading Module

Python's threading module is the cornerstone of multithreading in the language. It provides a high-level interface for working with threads, making it easier to create and manage concurrent execution.

Let's start with a simple example to illustrate how to create and start a thread:

import threading

import time

def print_numbers():

for i in range(1, 6):

print(f"Thread {threading.current_thread().name}: {i}")

time.sleep(1)

# Create a thread

thread = threading.Thread(target=print_numbers, name="NumberPrinter")

# Start the thread

thread.start()

# Wait for the thread to complete

thread.join()

print("Main thread: All done!")

In this example, we define a function print_numbers() that prints numbers from 1 to 5 with a 1-second delay between each print. We then create a thread with this function as its target and start it. The join() method is called to wait for the thread to complete before the main thread continues.

When you run this script, you'll see the numbers printed by the thread, followed by the "All done!" message from the main thread.

Creating Multiple Threads

One of the key advantages of multithreading is the ability to run multiple tasks concurrently. Let's expand our previous example to create multiple threads:

import threading

import time

def worker(worker_id):

print(f"Worker {worker_id} starting")

time.sleep(2)

print(f"Worker {worker_id} finished")

# Create a list to hold our threads

threads = []

# Create and start 5 worker threads

for i in range(5):

thread = threading.Thread(target=worker, args=(i,))

threads.append(thread)

thread.start()

# Wait for all threads to complete

for thread in threads:

thread.join()

print("All workers have finished their tasks")

In this example, we create five worker threads, each with a unique ID. The threads execute concurrently, sleeping for 2 seconds to simulate some work. The main thread waits for all worker threads to complete before printing the final message.

Thread Synchronization

When working with multiple threads that access shared resources, it's crucial to implement proper synchronization to avoid race conditions and ensure data integrity. Python's threading module provides several synchronization primitives, including locks, semaphores, and events.

Using Locks

Locks (also known as mutexes) are the most basic synchronization primitive. They ensure that only one thread can access a shared resource at a time.

Here's an example demonstrating the use of a lock:

import threading

import time

class BankAccount:

def __init__(self):

self.balance = 1000

self.lock = threading.Lock()

def withdraw(self, amount):

with self.lock:

if self.balance >= amount:

time.sleep(0.1) # Simulate some processing time

self.balance -= amount

print(f"Withdrew {amount}. New balance: {self.balance}")

else:

print("Insufficient funds!")

def perform_withdrawals(account):

for _ in range(5):

account.withdraw(100)

account = BankAccount()

# Create two threads that will withdraw money concurrently

thread1 = threading.Thread(target=perform_withdrawals, args=(account,))

thread2 = threading.Thread(target=perform_withdrawals, args=(account,))

thread1.start()

thread2.start()

thread1.join()

thread2.join()

print(f"Final balance: {account.balance}")

In this example, we have a BankAccount class with a withdraw method. We use a lock to ensure that only one thread can modify the balance at a time. This prevents race conditions where both threads might try to withdraw money simultaneously, potentially leading to incorrect balance calculations.

Using Semaphores

Semaphores are another synchronization primitive that can be used to limit the number of threads that can access a resource simultaneously.

Here's an example using a semaphore to limit concurrent access to a resource:

import threading

import time

import random

# Semaphore limiting to 3 concurrent accesses

semaphore = threading.Semaphore(3)

def access_resource(thread_num):

print(f"Thread {thread_num} is trying to access the resource")

with semaphore:

print(f"Thread {thread_num} has accessed the resource")

time.sleep(random.uniform(1, 3))

print(f"Thread {thread_num} has released the resource")

# Create and start 10 threads

threads = []

for i in range(10):

thread = threading.Thread(target=access_resource, args=(i,))

threads.append(thread)

thread.start()

# Wait for all threads to complete

for thread in threads:

thread.join()

print("All threads have finished")

In this example, we use a semaphore to limit access to a resource to a maximum of three threads at a time. This can be useful in scenarios where you want to limit the load on a particular resource, such as a database connection pool or a network service.

Thread Communication

Threads often need to communicate with each other or signal certain events. Python's threading module provides the Event class for this purpose.

Here's an example demonstrating thread communication using events:

import threading

import time

def waiter(event, timeout):

print(f"Waiter: Waiting for the event (timeout: {timeout}s)")

event_set = event.wait(timeout)

if event_set:

print("Waiter: Event was set!")

else:

print("Waiter: Timed out waiting for the event")

def setter(event, delay):

print(f"Setter: Waiting {delay}s before setting the event")

time.sleep(delay)

event.set()

print("Setter: Event has been set")

# Create an event object

event = threading.Event()

# Create and start the waiter and setter threads

waiter_thread = threading.Thread(target=waiter, args=(event, 5))

setter_thread = threading.Thread(target=setter, args=(event, 3))

waiter_thread.start()

setter_thread.start()

waiter_thread.join()

setter_thread.join()

print("Main thread: All done!")

In this example, we have two threads: a waiter and a setter. The waiter thread waits for an event to be set, with a timeout of 5 seconds. The setter thread waits for 3 seconds before setting the event. This demonstrates how threads can communicate and synchronize their actions using events.

Thread Pools

When you need to execute many similar tasks concurrently, creating a new thread for each task can be inefficient. Thread pools solve this problem by maintaining a pool of worker threads that can be reused for multiple tasks.

Python's concurrent.futures module provides a high-level interface for working with thread pools. Here's an example:

import concurrent.futures

import time

def task(name):

print(f"Task {name} starting")

time.sleep(1)

return f"Task {name} completed"

# Create a thread pool with 3 worker threads

with concurrent.futures.ThreadPoolExecutor(max_workers=3) as executor:

# Submit 5 tasks to the pool

futures = [executor.submit(task, f"Task-{i}") for i in range(5)]

# Wait for all tasks to complete and get their results

for future in concurrent.futures.as_completed(futures):

result = future.result()

print(result)

print("All tasks have been completed")

In this example, we create a thread pool with three worker threads. We then submit five tasks to the pool, which are executed concurrently by the available worker threads. The as_completed() function allows us to iterate over the futures as they complete, regardless of the order in which they were submitted.

Best Practices and Considerations

When working with multithreading in Python, keep these best practices and considerations in mind:

-

🔒 Use appropriate synchronization: Always use locks, semaphores, or other synchronization primitives when accessing shared resources to prevent race conditions.

-

🧠 Be aware of the GIL: Remember that Python's Global Interpreter Lock (GIL) prevents true parallel execution of threads for CPU-bound tasks. For CPU-intensive operations, consider using multiprocessing instead.

-

🏃♂️ Avoid creating too many threads: Creating a large number of threads can lead to increased overhead and diminishing returns. Use thread pools for better resource management.

-

🚫 Prevent deadlocks: Be careful when using multiple locks to avoid deadlock situations. Always acquire locks in a consistent order across all threads.

-

📊 Monitor and profile: Use tools like the

threading.enumerate()function and thetracemallocmodule to monitor and profile your multithreaded applications. -

🧪 Test thoroughly: Multithreaded code can be prone to race conditions and other concurrency issues. Implement comprehensive testing, including stress tests, to ensure reliability.

Conclusion

Python multithreading is a powerful tool for improving the performance and responsiveness of your applications, especially for I/O-bound tasks. By leveraging the threading module and following best practices, you can create efficient, concurrent programs that make the most of modern multi-core processors.

Remember that while multithreading can bring significant benefits, it also introduces complexity. Always carefully consider whether the performance gains justify the added complexity in your specific use case. With practice and experience, you'll become proficient at identifying opportunities for multithreading and implementing it effectively in your Python projects.