Python generators are a powerful and efficient way to create iterators. They allow you to generate a sequence of values over time, rather than computing them all at once and storing them in memory. This makes generators particularly useful when working with large datasets or infinite sequences.

In this comprehensive guide, we'll explore Python generators in depth, covering their syntax, benefits, and various use cases. We'll also dive into advanced concepts and best practices to help you leverage the full potential of generators in your Python projects.

Understanding Python Generators

A generator function is a special kind of function that returns a generator, which is a type of iterator. Unlike a regular function, which computes a value and returns it once, a generator yields a series of values one at a time. This "lazy evaluation" approach can save substantial memory and, when not every value is needed, computation as well.

Basic Syntax of Generators

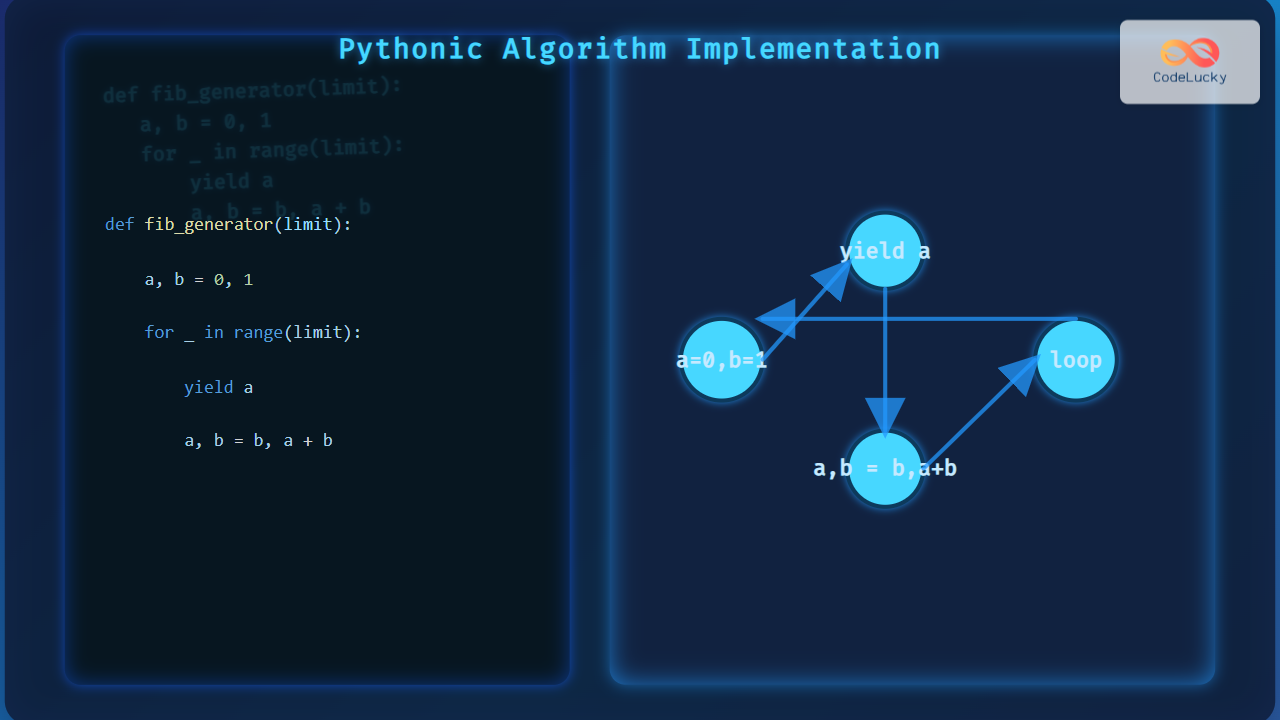

To create a generator, you use the yield keyword instead of return. Here's a simple example:

```python
def count_up_to(n):
    i = 1
    while i <= n:
        yield i
        i += 1

# Using the generator
for num in count_up_to(5):
    print(num)
```

Output:

```
1
2
3
4
5
```

In this example, count_up_to is a generator function. Each time execution reaches yield, the function pauses and hands the current value of i to the caller. Its local state is preserved, so it resumes right after the yield when the next value is requested.
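To make the pause-and-resume behavior visible, here's a minimal sketch that drives the same generator by hand with next() instead of a for loop. The variable names first, second, and exhausted are only for illustration.

```python
def count_up_to(n):
    """Yield the integers 1..n, one per request."""
    i = 1
    while i <= n:
        yield i
        i += 1

gen = count_up_to(2)   # Calling the function runs none of its body yet
first = next(gen)      # Runs until the first yield
second = next(gen)     # Resumes after the yield, with i preserved

try:
    next(gen)          # The loop ends, so the generator is exhausted
    exhausted = False
except StopIteration:
    exhausted = True

print(first, second, exhausted)  # 1 2 True
```

A for loop does the same thing behind the scenes: it calls next() repeatedly and stops quietly when StopIteration is raised.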

Generator Expressions

Generator expressions provide a concise way to create generators. They're similar to list comprehensions but use parentheses instead of square brackets:

```python
# Generator expression
squares_gen = (x**2 for x in range(1, 6))

# Using the generator
for square in squares_gen:
    print(square)
```

Output:

```
1
4
9
16
25
```

This generator expression creates a sequence of squared numbers without storing all of them in memory at once.
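As a rough illustration of the difference, the sketch below compares the size of a list comprehension with that of the equivalent generator expression. Note that sys.getsizeof reports only the size of the container object itself, not the integers it refers to, so treat the numbers as indicative rather than a full memory measurement. The value of n is arbitrary.

```python
import sys

n = 100_000
squares_list = [x**2 for x in range(n)]   # Every value exists in memory now
squares_gen = (x**2 for x in range(n))    # Values are produced on demand

list_size = sys.getsizeof(squares_list)   # Size of the list object (not its items)
gen_size = sys.getsizeof(squares_gen)     # Small, and independent of n

print(f"list: {list_size} bytes, generator: {gen_size} bytes")

# A generator expression can be passed directly to a consuming function.
total = sum(x**2 for x in range(1, 6))
print(total)  # 55
```

When a generator expression is the only argument to a function, as in the sum() call, the extra pair of parentheses can be omitted.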

Benefits of Using Generators

Generators offer several advantages over traditional methods of creating iterables:

- 🚀 Memory Efficiency: Generators compute values on-the-fly, which is ideal for working with large datasets that don't fit into memory.
- ⏱️ Performance: For scenarios where you don't need all values at once, generators can be faster as they avoid unnecessary computations.
- 🧠 Simplicity: Generators can make your code more readable and easier to understand, especially for complex iterations.
- 🔄 Infinite Sequences: Generators can represent infinite sequences, which is impossible with regular lists or tuples.

Let's explore these benefits with more detailed examples.
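As a first illustration, the infinite-sequence point can be sketched as follows. The naturals function is a hypothetical helper written for this example, and itertools.islice from the standard library is used to take a finite slice of the endless stream.

```python
from itertools import islice

def naturals(start=1):
    """Yield start, start + 1, start + 2, ... without end."""
    n = start
    while True:
        yield n
        n += 1

# islice stops requesting values after five items, so the loop never runs away.
first_five = list(islice(naturals(), 5))
print(first_five)  # [1, 2, 3, 4, 5]
```

Calling list(naturals()) directly would never finish, which is why an infinite generator should always be consumed through something that stops, such as islice or a loop with a break.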

Memory Efficiency Example

Consider a scenario where you need to process a large file line by line:

```python
def read_large_file(file_path):
    with open(file_path, 'r') as file:
        for line in file:
            yield line.strip()

# Using the generator to process a large file
for line in read_large_file('very_large_file.txt'):
    # Process each line
    print(f"Processing: {line[:50]}...")  # Print first 50 characters of each line
```

This generator allows you to process the file line by line without loading the entire file into memory, which is crucial for very large files.
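For a version you can run without a large file on hand, here's a small sketch that writes a temporary stand-in file and counts matching lines through the same generator. The file contents and the "ERROR" prefix are made up for the example.

```python
import os
import tempfile

def read_large_file(file_path):
    """Yield stripped lines from file_path one at a time."""
    with open(file_path, 'r') as file:
        for line in file:
            yield line.strip()

# Create a small stand-in file (hypothetical data) so the sketch runs anywhere.
with tempfile.NamedTemporaryFile('w', suffix='.txt', delete=False) as tmp:
    tmp.write("INFO start\nERROR disk full\nINFO retry\nERROR timeout\n")
    path = tmp.name

try:
    # Only one line is held in memory at a time while counting.
    error_count = sum(
        1 for line in read_large_file(path) if line.startswith("ERROR")
    )
finally:
    os.remove(path)

print(error_count)  # 2
```

The same pattern works for any aggregate (counts, sums, maximums) because the consuming function pulls one line at a time and discards it before asking for the next.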

Performance Example

Let's compare the performance of a generator versus a list for calculating prime numbers:

```python
import time

def is_prime(n):
    if n < 2:
        return False
    for i in range(2, int(n**0.5) + 1):
        if n % i == 0:
            return False
    return True

def prime_generator(limit):
    n = 2
    while n < limit:
        if is_prime(n):
            yield n
        n += 1

def prime_list(limit):
    return [n for n in range(2, limit) if is_prime(n)]

# Test performance
limit = 100000

# perf_counter() is a high-resolution clock intended for timing code
start_time = time.perf_counter()
gen_primes = list(prime_generator(limit))
gen_time = time.perf_counter() - start_time

start_time = time.perf_counter()
list_primes = prime_list(limit)
list_time = time.perf_counter() - start_time

print(f"Generator time: {gen_time:.4f} seconds")
print(f"List time: {list_time:.4f} seconds")
```

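The exact timings depend on your machine and Python version, but expect the two to be close. Because list(prime_generator(limit)) consumes the entire generator, both versions run the same primality checks and build the same list; the list comprehension is often slightly faster because it avoids the overhead of resuming a generator for every item. A generator pays off when you stop early or process values one at a time, since values you never request are never computed.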
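A generator saves the most work when the consumer stops early. As a minimal sketch, the version below records every number that is_prime tests (the checked list is an addition for this illustration) and then asks for only the first ten primes.

```python
from itertools import islice

checked = []  # Records every number that is_prime actually tests

def is_prime(n):
    checked.append(n)
    if n < 2:
        return False
    for i in range(2, int(n**0.5) + 1):
        if n % i == 0:
            return False
    return True

def prime_generator(limit):
    n = 2
    while n < limit:
        if is_prime(n):
            yield n
        n += 1

# Ask for ten primes only; the generator is never resumed after the tenth.
first_ten = list(islice(prime_generator(100000), 10))
print(first_ten)     # [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]
print(len(checked))  # 28
```

Only the numbers 2 through 29 are ever tested, whereas a list-building function with the same limit would test every number below 100000 before returning anything.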

Advanced Generator Concepts

Now that we've covered the basics, let's dive into some more advanced concepts and techniques related to generators.

Sending Values to Generators

Generators can not only yield values but also receive them using the send() method. This creates a two-way communication channel:

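```python
def echo_generator():
    reply = None
    while True:
        # yield hands back the previous reply and evaluates to the sent value
        received = yield reply
        reply = f"You said: {received}"

# Using the echo generator
echo = echo_generator()
next(echo)  # Prime the generator: run it up to the first yield
print(echo.send("Hello"))
print(echo.send("Python"))
```

Output:

```
You said: Hello
You said: Python
```

In this example, each send() call resumes the generator at the paused yield, which evaluates to the sent value; the generator then loops around and yields the reply. The initial next() call is needed because a value can only be sent to a generator that is already paused at a yield.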
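A slightly more practical use of send() is a generator that keeps running state between calls. The sketch below is a hypothetical running_average helper, not part of any library: each value sent in updates the total, and the updated average comes back as the result of send().

```python
def running_average():
    """Receive numbers via send() and yield the average so far."""
    total = 0.0
    count = 0
    average = None
    while True:
        value = yield average   # Hand back the latest average, wait for input
        total += value
        count += 1
        average = total / count

avg = running_average()
next(avg)  # Prime: advance to the first yield
results = [avg.send(10), avg.send(20), avg.send(30)]
print(results)  # [10.0, 15.0, 20.0]
```

Using a single yield expression that both returns the previous result and receives the next input keeps every send() call paired with exactly one reply.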

Generator Pipelines

Generators can be chained together to create data pipelines, where the output of one generator feeds into another:

```python
def numbers():
    for i in range(1, 11):
        yield i

def square(nums):
    for num in nums:
        yield num ** 2

def add_one(nums):
    for num in nums:
        yield num + 1

# Creating a pipeline
pipeline = add_one(square(numbers()))

# Using the pipeline
for result in pipeline:
    print(result)
```

Output:

```
2
5
10
17
26
37
50
65
82
101
```

This pipeline squares each number from 1 to 10 and then adds 1 to the result.
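One detail worth seeing in action is that a pipeline processes items one at a time, all the way through, rather than finishing one stage before starting the next. The sketch below adds a log list (an addition for illustration) to record the order in which stages run, and writes the last stage as a generator expression.

```python
from itertools import islice

log = []  # Records the order in which stages run

def numbers():
    for i in range(1, 11):
        log.append(f"produce {i}")
        yield i

def square(nums):
    for num in nums:
        log.append(f"square {num}")
        yield num ** 2

# Equivalent of add_one, written as a generator expression.
pipeline = (n + 1 for n in square(numbers()))

first_two = list(islice(pipeline, 2))
print(first_two)  # [2, 5]
print(log)        # ['produce 1', 'square 1', 'produce 2', 'square 2']
```

Because only two results were requested, the numbers 3 through 10 are never produced or squared.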

Error Handling in Generators

You can raise an exception inside a paused generator with the throw() method, and you can shut a generator down, running any cleanup code in its finally blocks, with the close() method:

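```python
def error_prone_generator():
    try:
        yield 1
        yield 2
        yield 3
    except ValueError:
        print("ValueError caught!")
    finally:
        print("Generator is closing")

gen = error_prone_generator()
print(next(gen))
print(next(gen))

try:
    # Raise ValueError inside the generator at the paused yield
    gen.throw(ValueError("Oops!"))
except StopIteration:
    # The generator handled the error and then finished without yielding again
    pass

gen.close()  # No effect here: the generator has already finished
```

Output:

```
1
2
ValueError caught!
Generator is closing
```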

This example demonstrates how to handle errors and perform cleanup operations in generators.
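To isolate what close() does on its own, here's a minimal sketch with a generator that is still paused when it is closed. The resource_user function and the events list are hypothetical names used only to record what happens.

```python
events = []

def resource_user():
    events.append("acquire")
    try:
        while True:
            yield "working"
    finally:
        # Runs when close() raises GeneratorExit at the paused yield
        events.append("release")

gen = resource_user()
next(gen)    # Start the generator so it is paused inside the try block
gen.close()  # Triggers the finally block
gen.close()  # Closing an already-closed generator does nothing

print(events)  # ['acquire', 'release']
```

The same mechanism is what closes the file in a generator that wraps a with block: closing the generator unwinds the with statement at the point where it was paused.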

Best Practices and Tips

To make the most of generators in your Python code, consider these best practices:

- 🎯 Use generators for large datasets: When dealing with large amounts of data, generators can significantly reduce memory usage.
- 🔄 Prefer generator expressions for simple cases: For straightforward transformations, generator expressions are more concise than full generator functions.
- 🚫 Avoid modifying the underlying data: Generators often iterate over mutable data structures. Modifying the data while iterating can lead to unexpected results.
- 📊 Profile your code: Use profiling tools to compare the performance of generators versus other approaches in your specific use case.
- 📚 Document generator behavior: Clearly document the expected output and any side effects of your generator functions.
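The warning about mutable data is easy to demonstrate. In this small sketch (all names are illustrative), a list is changed after a generator expression is created but before it is consumed, and the change shows up in the results. Taking a snapshot with tuple() first is one way to avoid that.

```python
data = [1, 2, 3]
doubled = (x * 2 for x in data)
data.append(4)              # Mutation happens before the generator is consumed
surprising = list(doubled)
print(surprising)           # [2, 4, 6, 8]

data = [1, 2, 3]
snapshot = tuple(data)      # Freeze the current contents
doubled_safe = (x * 2 for x in snapshot)
data.append(4)              # No longer affects the generator
stable = list(doubled_safe)
print(stable)               # [2, 4, 6]
```

The snapshot costs memory proportional to the data, so it trades away some of the generator's memory benefit in exchange for predictable results.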

Here's an example incorporating some of these best practices:

```python
import sys

def process_log_file(file_path):
    """
    Generator that yields processed log entries from a file.

    Args:
        file_path (str): Path to the log file

    Yields:
        dict: Processed log entry with 'timestamp' and 'message' keys
    """
    with open(file_path, 'r') as file:
        for line in file:
            # Simple processing: split timestamp and message
            parts = line.strip().split(' ', 1)
            if len(parts) != 2:
                continue  # Skip blank or malformed lines
            timestamp, message = parts
            yield {'timestamp': timestamp, 'message': message}

# Using the generator
log_processor = process_log_file('app.log')

# Process first 5 log entries
for i, entry in enumerate(log_processor):
    if i >= 5:
        break
    print(f"Entry {i+1}: {entry}")

# Size of the generator object itself; it stays small however large the file is
print(f"Generator size: {sys.getsizeof(log_processor)} bytes")
```

This example demonstrates a memory-efficient way to process a potentially large log file, yielding processed entries one at a time.
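If you only want the first few entries, itertools.islice expresses that more directly than a counter with break. The sketch below assumes a hypothetical parse_entries helper that takes any iterable of lines, with made-up sample lines standing in for the contents of app.log.

```python
from itertools import islice

def parse_entries(lines):
    """Yield {'timestamp', 'message'} dicts from an iterable of log lines."""
    for line in lines:
        timestamp, message = line.strip().split(' ', 1)
        yield {'timestamp': timestamp, 'message': message}

# Hypothetical log lines standing in for the contents of app.log.
sample_lines = [f"2024-01-01T00:00:0{i} event {i}\n" for i in range(8)]

entries = parse_entries(sample_lines)
first_five = list(islice(entries, 5))  # Pulls exactly five entries
entries.close()                        # Release the generator explicitly

print(len(first_five))           # 5
print(first_five[0]['message'])  # event 0
```

Calling close() matters most when the generator holds a resource such as an open file, because a generator that is abandoned mid-iteration otherwise keeps that resource until it is garbage collected.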

Conclusion

Python generators are a powerful tool for creating efficient, memory-friendly iterators. They excel in scenarios involving large datasets, infinite sequences, or when you want to improve the performance and readability of your code.

By leveraging generators, you can write more elegant and efficient Python code, handling complex iterations with ease. Whether you're working on data processing pipelines, implementing custom iterators, or optimizing memory usage, generators offer a flexible and powerful solution.

As you continue to work with generators, experiment with different use cases and always consider their potential benefits in your projects. With practice, you'll find that generators become an indispensable part of your Python toolkit, enabling you to write more efficient and scalable code.