In the world of distributed systems, understanding the CAP theorem is crucial for building resilient applications. Among its three pillars—Consistency, Availability, and Partition Tolerance—partition tolerance often presents the most complex implementation challenges. This comprehensive guide explores partition tolerance in depth, providing practical examples and implementation strategies.

What is Partition Tolerance?

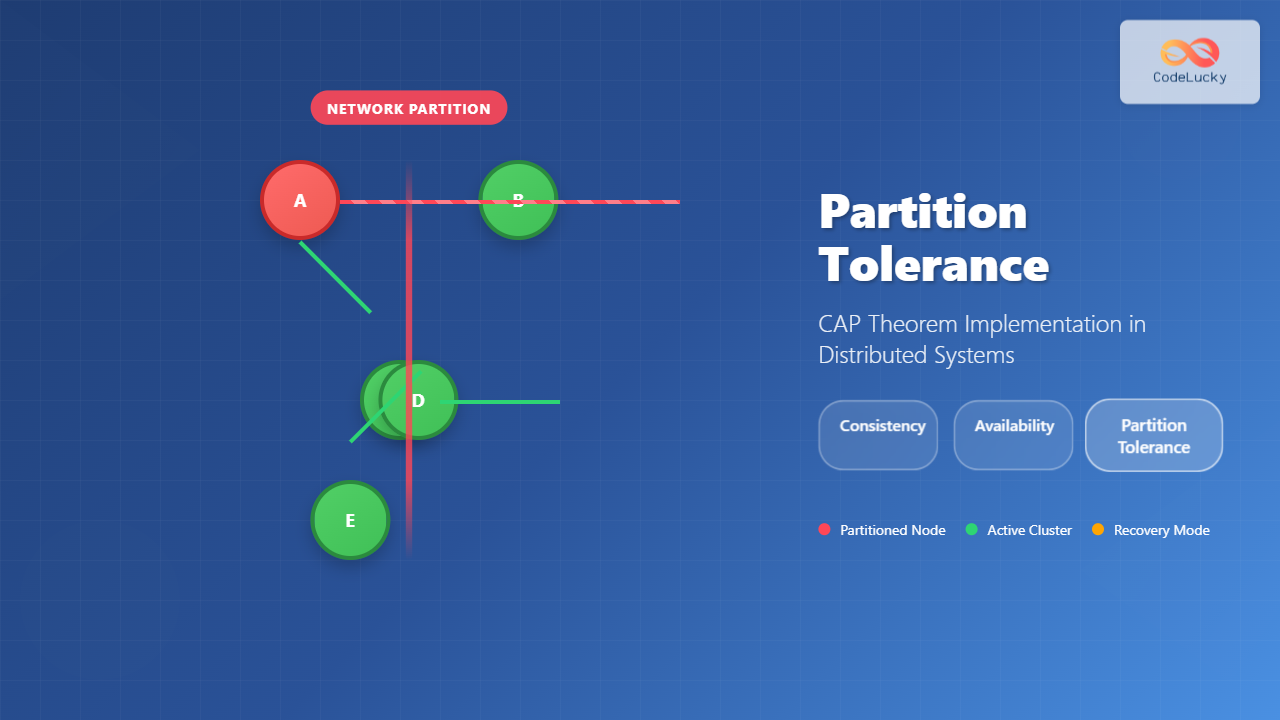

Partition tolerance refers to a distributed system’s ability to continue operating despite network partitions—scenarios where network failures prevent some nodes from communicating with others. When a partition occurs, the system must decide how to handle the split-brain situation while maintaining its core functionality.

Key Characteristics of Network Partitions

- Temporary isolation: Nodes become unreachable but continue running

- Split-brain scenarios: Multiple groups of nodes operate independently

- Data inconsistency risks: Conflicting updates in different partitions

- Service degradation: Reduced functionality during partition events

CAP Theorem Fundamentals

The CAP theorem, formulated by Eric Brewer, states that any distributed system can provide at most two of the following three guarantees simultaneously:

Consistency (C)

All nodes see the same data simultaneously. Every read receives the most recent write or an error.

Availability (A)

The system remains operational and responsive, guaranteeing that every request receives a response.

Partition Tolerance (P)

The system continues to operate despite arbitrary message loss or failure of part of the system.

Implementing Partition Tolerance Strategies

1. Quorum-Based Systems

Quorum systems ensure that operations proceed only when a majority of nodes are available and can communicate. This approach maintains consistency while handling partitions gracefully.

class QuorumSystem:

def __init__(self, total_nodes):

self.total_nodes = total_nodes

self.quorum_size = (total_nodes // 2) + 1

self.available_nodes = set(range(total_nodes))

def can_perform_operation(self):

return len(self.available_nodes) >= self.quorum_size

def simulate_partition(self, failed_nodes):

self.available_nodes -= set(failed_nodes)

if self.can_perform_operation():

return f"Operation allowed with {len(self.available_nodes)} nodes"

else:

return f"Operation blocked - insufficient nodes for quorum"

# Example usage

system = QuorumSystem(5)

print(system.simulate_partition([0, 1])) # 3 nodes remain - quorum maintained

print(system.simulate_partition([2])) # 2 nodes remain - no quorum

Output:

Operation allowed with 3 nodes

Operation blocked - insufficient nodes for quorum

2. Vector Clocks for Conflict Resolution

Vector clocks help track causality relationships between events in distributed systems, enabling conflict detection and resolution when partitions heal.

class VectorClock {

constructor(nodeId, nodes) {

this.nodeId = nodeId;

this.clock = {};

nodes.forEach(node => this.clock[node] = 0);

}

tick() {

this.clock[this.nodeId]++;

return this.getClock();

}

update(remoteClock) {

Object.keys(this.clock).forEach(node => {

this.clock[node] = Math.max(this.clock[node], remoteClock[node] || 0);

});

this.clock[this.nodeId]++;

}

compare(otherClock) {

let less = false, greater = false;

Object.keys(this.clock).forEach(node => {

const mine = this.clock[node];

const other = otherClock[node] || 0;

if (mine < other) less = true;

if (mine > other) greater = true;

});

if (less && greater) return 'concurrent';

if (less) return 'before';

if (greater) return 'after';

return 'equal';

}

getClock() {

return {...this.clock};

}

}

// Simulation of partition healing

const nodeA = new VectorClock('A', ['A', 'B', 'C']);

const nodeB = new VectorClock('B', ['A', 'B', 'C']);

// Operations during partition

nodeA.tick(); // A: {A:1, B:0, C:0}

nodeB.tick(); // B: {A:0, B:1, C:0}

// Partition heals - detect conflicts

console.log(nodeA.compare(nodeB.getClock())); // Output: concurrent

3. Eventual Consistency Model

Systems choosing availability over consistency implement eventual consistency, where all nodes will eventually converge to the same state once partitions heal.

Real-World Implementation Examples

Apache Cassandra: AP System

Cassandra prioritizes availability and partition tolerance over strong consistency. It uses tunable consistency levels and handles partitions through replica placement strategies.

-- Cassandra CQL example with tunable consistency

CREATE KEYSPACE ecommerce

WITH REPLICATION = {

'class': 'NetworkTopologyStrategy',

'datacenter1': 3,

'datacenter2': 3

};

-- Write with consistency level

INSERT INTO users (id, name, email)

VALUES (1, 'John Doe', '[email protected]')

USING CONSISTENCY QUORUM;

-- Read with eventual consistency

SELECT * FROM users WHERE id = 1

USING CONSISTENCY ONE;

MongoDB: CP System

MongoDB chooses consistency and partition tolerance, becoming unavailable for writes when it cannot maintain a majority.

// MongoDB replica set configuration

rs.initiate({

_id: "myReplicaSet",

members: [

{ _id: 0, host: "mongo1:27017", priority: 2 },

{ _id: 1, host: "mongo2:27017", priority: 1 },

{ _id: 2, host: "mongo3:27017", priority: 1 }

]

});

// During partition, only majority partition accepts writes

db.users.insertOne(

{ name: "Alice", status: "active" },

{ writeConcern: { w: "majority" } }

);

Partition Detection and Recovery Strategies

Heartbeat Mechanism

Implementing robust failure detection is crucial for partition tolerance. Here’s a simple heartbeat system:

import time

import threading

from datetime import datetime, timedelta

class HeartbeatMonitor:

def __init__(self, timeout_seconds=30):

self.nodes = {}

self.timeout = timeout_seconds

self.running = True

def register_node(self, node_id):

self.nodes[node_id] = datetime.now()

def heartbeat(self, node_id):

self.nodes[node_id] = datetime.now()

def check_partitions(self):

current_time = datetime.now()

partitioned_nodes = []

for node_id, last_heartbeat in self.nodes.items():

if current_time - last_heartbeat > timedelta(seconds=self.timeout):

partitioned_nodes.append(node_id)

return partitioned_nodes

def monitor(self):

while self.running:

partitioned = self.check_partitions()

if partitioned:

print(f"Partition detected: nodes {partitioned} are unreachable")

time.sleep(5)

# Usage example

monitor = HeartbeatMonitor(timeout_seconds=10)

monitor.register_node("node_1")

monitor.register_node("node_2")

# Simulate node activity

monitor.heartbeat("node_1")

# node_2 doesn't send heartbeat - will be detected as partitioned

Partition Recovery Process

Testing Partition Tolerance

Chaos Engineering Approach

Testing partition tolerance requires controlled chaos to simulate real-world failures:

#!/bin/bash

# Network partition simulation script

# Block communication between node1 and node2

sudo iptables -A INPUT -s 192.168.1.10 -j DROP

sudo iptables -A OUTPUT -d 192.168.1.10 -j DROP

echo "Partition created - monitoring system behavior..."

sleep 30

# Heal partition

sudo iptables -D INPUT -s 192.168.1.10 -j DROP

sudo iptables -D OUTPUT -d 192.168.1.10 -j DROP

echo "Partition healed - checking data consistency..."

Jepsen Testing Framework

Jepsen is a powerful tool for testing distributed systems under partition scenarios:

(deftest partition-tolerance-test

(let [test (assoc base-test

:name "partition-tolerance"

:nemesis (nemesis/partition-random-halves)

:generator (gen/phases

(gen/sleep 5)

(gen/clients

(gen/mix [read-gen write-gen]))

(gen/sleep 5)

(nemesis/partition-random-halves)

(gen/sleep 10)

(nemesis/heal-all-partitions)))]

(is (analysis/valid? (jepsen/run! test)))))

Performance Implications

Latency Trade-offs

Partition tolerance mechanisms introduce performance overhead:

| Strategy | Read Latency | Write Latency | Partition Recovery Time |

|---|---|---|---|

| Quorum-based | Medium | High | Fast |

| Eventual Consistency | Low | Low | Slow |

| Strong Consistency | High | High | Medium |

Throughput Considerations

# Performance monitoring during partitions

class PartitionPerformanceMonitor:

def __init__(self):

self.metrics = {

'successful_ops': 0,

'failed_ops': 0,

'avg_latency': 0,

'partition_events': 0

}

def record_operation(self, success, latency):

if success:

self.metrics['successful_ops'] += 1

else:

self.metrics['failed_ops'] += 1

# Update rolling average latency

total_ops = self.metrics['successful_ops'] + self.metrics['failed_ops']

self.metrics['avg_latency'] = (

(self.metrics['avg_latency'] * (total_ops - 1) + latency) / total_ops

)

def get_availability_percentage(self):

total = self.metrics['successful_ops'] + self.metrics['failed_ops']

if total == 0:

return 100.0

return (self.metrics['successful_ops'] / total) * 100

Best Practices for Partition Tolerance

Design Principles

- Embrace eventual consistency: Design applications that can handle temporary inconsistencies

- Implement idempotent operations: Ensure operations can be safely retried

- Use circuit breakers: Prevent cascade failures during partitions

- Design for graceful degradation: Maintain core functionality even with reduced capabilities

Monitoring and Alerting

class PartitionAlert:

def __init__(self, alert_threshold=0.3):

self.alert_threshold = alert_threshold

def check_partition_risk(self, total_nodes, available_nodes):

availability_ratio = available_nodes / total_nodes

if availability_ratio < self.alert_threshold:

return {

'severity': 'CRITICAL',

'message': f'Only {available_nodes}/{total_nodes} nodes available',

'action': 'Immediate attention required'

}

elif availability_ratio < 0.6:

return {

'severity': 'WARNING',

'message': f'Reduced capacity: {available_nodes}/{total_nodes} nodes',

'action': 'Monitor closely'

}

return {'severity': 'OK', 'message': 'System healthy'}

Conclusion

Partition tolerance is a fundamental requirement for modern distributed systems. While implementing it involves complex trade-offs between consistency and availability, understanding these concepts enables architects to build resilient systems that gracefully handle network failures.

The key to successful partition tolerance implementation lies in:

- Choosing the appropriate consistency model for your use case

- Implementing robust failure detection and recovery mechanisms

- Designing applications that can operate with temporary inconsistencies

- Continuously testing your system’s behavior under partition scenarios

By applying these principles and strategies, you can build distributed systems that maintain functionality even when faced with the inevitable challenges of network partitions in production environments.