Particle Swarm Optimization (PSO) is one of the best-known swarm intelligence algorithms. It solves optimization problems by simulating the social behavior of flocking birds or schooling fish. Introduced by Kennedy and Eberhart in 1995, PSO is widely used in engineering, computer science, and machine learning because it is simple, effective, and easy to implement.

What Is Particle Swarm Optimization?

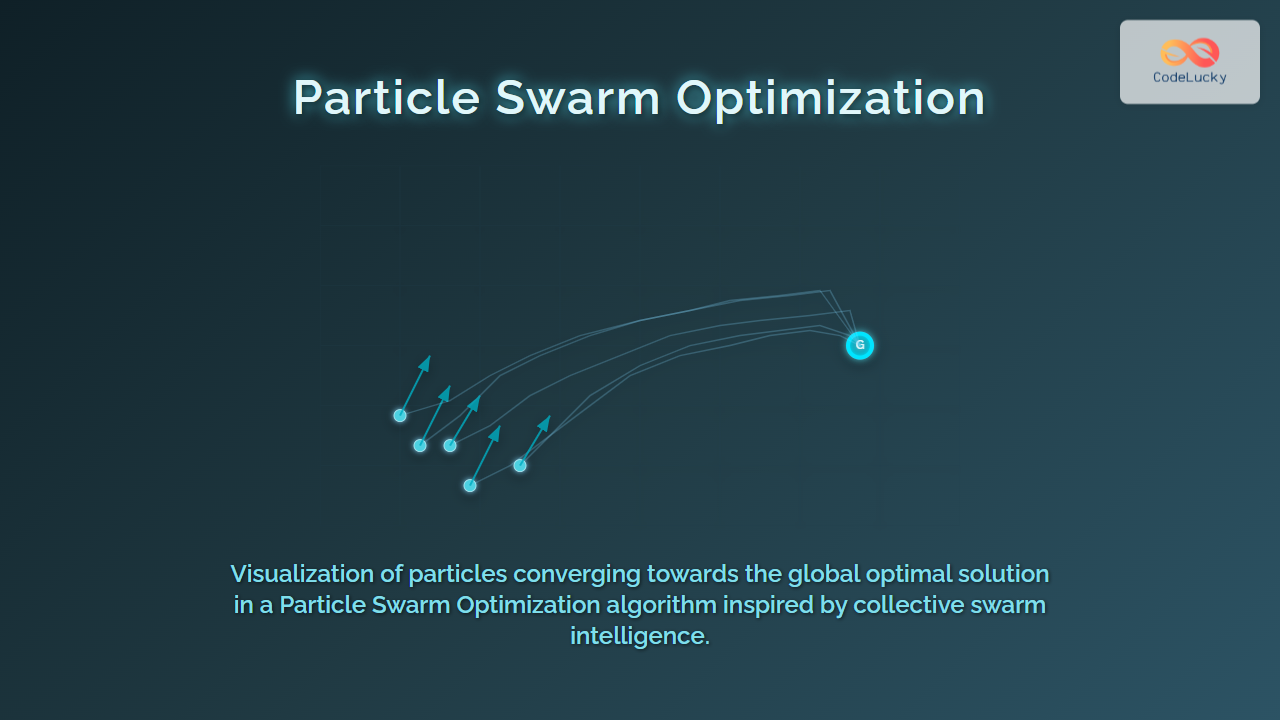

PSO is a population-based stochastic optimization technique inspired by the collective behavior of decentralized, self-organized systems found in nature. It optimizes a problem by iteratively improving candidate solutions called “particles” with respect to a given measure of quality, or fitness function.

Each particle adjusts its trajectory based on two things: its own best position so far and the best position found so far by the entire swarm. This combination balances exploration of new regions of the search space with exploitation of regions already known to be good.

Mathematical Model and Algorithm

In PSO, each particle \( i \) is represented by its position vector \( \mathbf{x}_i = (x_{i1}, x_{i2}, \dots, x_{id}) \) and velocity vector \( \mathbf{v}_i = (v_{i1}, v_{i2}, \dots, v_{id}) \) in a \( d \)-dimensional solution space.

The velocity and position of each particle are updated as follows:

\( \mathbf{v}_i(t+1) = w \mathbf{v}_i(t) + c_1 r_1 (\mathbf{p}_i - \mathbf{x}_i(t)) + c_2 r_2 (\mathbf{g} - \mathbf{x}_i(t)) \)

\( \mathbf{x}_i(t+1) = \mathbf{x}_i(t) + \mathbf{v}_i(t+1) \)

Where:

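- \( w \) = inertia weight, which scales the previous velocity; larger values favor exploration, smaller values favor exploitation

- \( c_1, c_2 \) = cognitive and social acceleration coefficients, which weight the pull toward the personal best and the global best respectively

- \( r_1, r_2 \) = random numbers drawn uniformly between 0 and 1, resampled at every update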

- \( \mathbf{p}_i \) = personal best position of particle \( i \)

- \( \mathbf{g} \) = global best position found by the swarm
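
The two update equations above can be sketched for a single particle as follows. This is a minimal illustration, not a reference implementation: the function name `update_particle` and the default values `w=0.7`, `c1=1.5`, `c2=1.5` are assumptions chosen for the example, and `r1`, `r2` are drawn once per update as scalars, as the formula is written.

```python
import random

def update_particle(x, v, p_best, g_best, w=0.7, c1=1.5, c2=1.5, rng=random):
    """Apply one velocity and position update to a single particle.

    x, v, p_best and g_best are lists of equal length (one entry per dimension).
    The defaults for w, c1 and c2 are illustrative assumptions.
    """
    r1 = rng.random()  # uniform random factor for the cognitive (personal best) term
    r2 = rng.random()  # uniform random factor for the social (global best) term
    new_v = [
        w * v_j + c1 * r1 * (p_j - x_j) + c2 * r2 * (g_j - x_j)
        for x_j, v_j, p_j, g_j in zip(x, v, p_best, g_best)
    ]
    # The new position is the old position plus the new velocity.
    new_x = [x_j + v_j for x_j, v_j in zip(x, new_v)]
    return new_x, new_v
```

A particle that already sits on both its personal best and the global best with zero velocity does not move, since all three terms of the velocity update vanish.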

Step-by-Step PSO Algorithm

- Initialization: Generate a swarm of particles with random positions and velocities in the solution space.

- Fitness Evaluation: Calculate the fitness value for each particle based on the problem’s objective function.

- Update Bests: For each particle, update its personal best if the current position is better; update global best if any particle surpasses the global best fitness.

- Velocity & Position Update: Calculate new velocities and positions based on the PSO formula.

- Repeat: Iterate the evaluation and update steps until the maximum number of iterations is reached or the error is acceptably small.
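
The steps above can be put together in a short Python sketch. Everything named here is an assumption made for illustration: the function `pso_minimize`, the test objective `sphere`, the swarm size, the coefficient values, and the stopping rule `g_val <= tol`, which only makes sense when the optimal fitness is known to be 0. The bounds are used for initialization only; positions are not clamped afterwards.

```python
import random

def pso_minimize(f, bounds, n_particles=30, max_iter=200, tol=1e-8,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize f over a box; returns (best_position, best_value)."""
    rng = random.Random(seed)
    dim = len(bounds)

    # Initialization: random positions in the box, small random velocities.
    xs = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vs = [[0.1 * rng.uniform(-(hi - lo), hi - lo) for lo, hi in bounds]
          for _ in range(n_particles)]

    # Fitness evaluation and initial personal/global bests.
    p_best = [x[:] for x in xs]
    p_val = [f(x) for x in xs]
    best_i = min(range(n_particles), key=lambda i: p_val[i])
    g_best, g_val = p_best[best_i][:], p_val[best_i]

    for _ in range(max_iter):
        if g_val <= tol:  # acceptable error (assumes the optimum value is 0)
            break
        for i in range(n_particles):
            # Velocity and position update.
            r1, r2 = rng.random(), rng.random()
            for j in range(dim):
                vs[i][j] = (w * vs[i][j]
                            + c1 * r1 * (p_best[i][j] - xs[i][j])
                            + c2 * r2 * (g_best[j] - xs[i][j]))
                xs[i][j] += vs[i][j]
            # Fitness evaluation and update of the bests.
            val = f(xs[i])
            if val < p_val[i]:
                p_best[i], p_val[i] = xs[i][:], val
                if val < g_val:
                    g_best, g_val = xs[i][:], val
    return g_best, g_val


def sphere(x):
    """Hypothetical test objective: sum of squares, minimum 0 at the origin."""
    return sum(x_j * x_j for x_j in x)


best, value = pso_minimize(sphere, bounds=[(-5.0, 5.0), (-5.0, 5.0)])
print(best, value)
```

In this sketch the global best is refreshed as soon as any particle improves on it, so later particles in the same iteration already see the new value. Updating it only once per iteration is an equally common choice.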

Simple PSO Example: Minimize \( f(x) = x^2 \) on the Real Line

Consider a 1-D optimization problem: find the minimum of \( f(x) = x^2 \), which lies at \( x = 0 \). Particles start at random points along the x-axis and, over successive iterations, move toward the positions with the lowest \( f(x) \) found so far.
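
To make a single move concrete, here is one update worked by hand. All numbers are assumed for illustration: a particle at \( x(t) = 4 \) with velocity \( v(t) = -1 \), personal best \( p = 3 \), global best \( g = 1 \), parameters \( w = 0.5 \), \( c_1 = c_2 = 2 \), and random draws \( r_1 = r_2 = 0.5 \).

\( v(t+1) = 0.5 \cdot (-1) + 2 \cdot 0.5 \cdot (3 - 4) + 2 \cdot 0.5 \cdot (1 - 4) = -0.5 - 1 - 3 = -4.5 \)

\( x(t+1) = 4 + (-4.5) = -0.5 \)

The fitness drops from \( f(4) = 16 \) to \( f(-0.5) = 0.25 \). Since this is lower than both \( f(p) = 9 \) and \( f(g) = 1 \), the particle's personal best and the global best would both move to \( -0.5 \). The particle has overshot the minimum at \( x = 0 \), which is typical: particles tend to oscillate around the best-known positions rather than approach them monotonically.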

Python-style pseudocode for the PSO iteration:

    initialize particles x_i, velocities v_i randomly
    for t in 1 to max_iterations:
        for each particle i:
            evaluate fitness f(x_i)
            update personal best p_i
            update global best g
            update velocity v_i = w*v_i + c1*r1*(p_i - x_i) + c2*r2*(g - x_i)
            update position x_i = x_i + v_i
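
A runnable translation of this pseudocode for \( f(x) = x^2 \) might look like the sketch below. The function name `pso_1d`, the swarm size, the starting interval \([-10, 10]\), the coefficient values, and the `history` list are all assumptions added for illustration. The loop keeps the same order as the pseudocode: evaluate, update the bests, then move.

```python
import random

def f(x):
    """Objective from the example: f(x) = x^2."""
    return x * x

def pso_1d(n_particles=20, max_iterations=100, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Run 1-D PSO on f; returns (global_best, best_fitness_per_iteration)."""
    rng = random.Random(seed)
    # Initialize particles x_i and velocities v_i randomly.
    x = [rng.uniform(-10.0, 10.0) for _ in range(n_particles)]
    v = [rng.uniform(-1.0, 1.0) for _ in range(n_particles)]
    p = x[:]           # personal best positions
    g = min(x, key=f)  # global best position
    history = []       # f(g) after each iteration

    for _ in range(max_iterations):
        for i in range(n_particles):
            # Evaluate fitness and update the personal and global bests.
            if f(x[i]) < f(p[i]):
                p[i] = x[i]
            if f(p[i]) < f(g):
                g = p[i]
            # Update velocity, then position.
            r1, r2 = rng.random(), rng.random()
            v[i] = w * v[i] + c1 * r1 * (p[i] - x[i]) + c2 * r2 * (g - x[i])
            x[i] = x[i] + v[i]
        history.append(f(g))
    return g, history

g, history = pso_1d()
print("best x:", g, "f(best x):", f(g))
```

Because `g` is replaced only when a strictly better position is found, the values in `history` never increase from one iteration to the next.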

Interactive Visualization Suggestion

An interactive plot in which particles move through the search space toward the global minimum would make this behavior visible. Libraries such as D3.js or Plotly (if integrated in an interactive article environment on CodeLucky.com) would let readers observe:

- Initial particle spread

- Particle velocity vectors

- Trajectories converging to the optimal point

Applications of PSO

PSO has been successfully applied in various domains including:

- Function optimization in engineering design

- Training neural networks

- Scheduling and resource allocation

- Image processing and computer vision

- Robotics path planning

Advantages and Limitations

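| Advantages | Limitations |
|---|---|
| Simple to implement, with only a few parameters (\( w \), \( c_1 \), \( c_2 \)) | Can converge prematurely to a local optimum |
| Needs only fitness values, not gradients of the objective function | Results are sensitive to the choice of \( w \), \( c_1 \), and \( c_2 \) |
| Applies to a wide range of problem domains | Stochastic, so it offers no guarantee of finding the global optimum |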

Conclusion

Particle Swarm Optimization offers a powerful, nature-inspired method for tackling optimization problems by harnessing the collective intelligence of a swarm. Its balance between simplicity and effectiveness makes it an essential tool in the algorithm designer’s toolkit. When combined with visual and interactive components, learners and practitioners alike gain a deeper intuitive understanding of how swarm intelligence optimizes solutions efficiently.