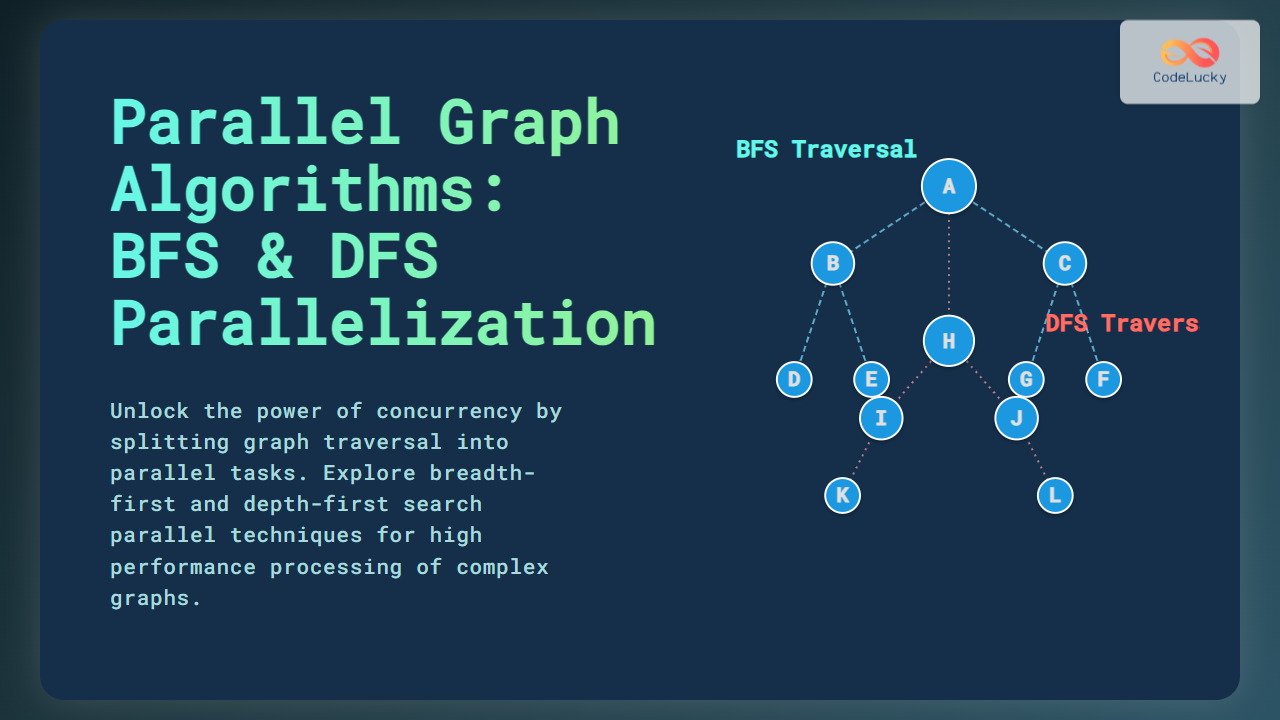

Parallel graph algorithms are crucial for processing large-scale graphs efficiently, especially as data grows in size and complexity. Among all such algorithms, Breadth-First Search (BFS) and Depth-First Search (DFS) are fundamental tools in fields like network analysis, AI, and databases. This article covers advanced techniques to parallelize BFS and DFS, includes explanatory mermaid diagrams, and provides interactive code samples for hands-on learning.

Why Parallelize Graph Traversal?

Real-world graphs—like social networks and citation graphs—often contain millions or billions of nodes and edges, making sequential traversal infeasible. Parallelization leverages multiple cores or distributed machines to reduce traversal time, enabling real-time analytics and AI applications even for immense graphs.

Core Challenges in Parallel Graph Algorithms

- Irregular Data Access: Graphs lead to unpredictable memory patterns.

- Load Balancing: Some graph regions are denser, causing thread starvation or overload.

- Synchronization Overheads: Threads competing to mark visited nodes can cause contention.

- Communication Costs: In distributed settings, data exchange across nodes can bottleneck performance.

Parallel Breadth-First Search (BFS)

Parallel BFS divides nodes at the same “frontier” among multiple threads or processes. This approach scales well on shared-memory systems and can utilize SIMD/vectorization techniques.

Step-by-Step Overview:

- Initialize the queue with the source node.

- Assign workers to expand the current frontier in parallel.

- Each thread collects unvisited neighbors and marks them visited.

- Frontier updates are combined for the next level.

- Repeat until the queue is empty.

Parallel BFS Example (Python with Multithreading):

import threading

from queue import Queue

def parallel_bfs(graph, start):

visited = set()

q = Queue()

q.put(start)

visited.add(start)

def worker():

while not q.empty():

node = q.get()

for neighbor in graph[node]:

if neighbor not in visited:

visited.add(neighbor)

q.put(neighbor)

q.task_done()

threads = []

for _ in range(4): # 4 threads for illustration

t = threading.Thread(target=worker)

t.start()

threads.append(t)

q.join()

for t in threads:

t.join()

return visited

# Example usage:

graph = {0:[1,2], 1:[0,3], 2:[0,4], 3:[1], 4:[2]}

visited = parallel_bfs(graph, 0)

print(visited)

Try experimenting by adjusting the number of threads or the graph structure!

Parallel BFS in Distributed Systems

In large clusters, BFS is distributed by partitioning the graph and exchanging frontier information via message passing. Libraries like Apache Giraph and GraphX use this paradigm for web-scale data.

- Each machine processes its local subgraph.

- After each BFS level, frontiers are exchanged across partitions.

- Synchronization points at end of each frontier level.

Parallel Depth-First Search (DFS)

Unlike BFS, parallelizing DFS is harder due to its inherently sequential, stack-based nature. However, techniques such as task stealing and speculative execution enable effective parallel DFS, especially for applications like path finding and cycle detection.

Work Stealing DFS (Pseudocode):

from concurrent.futures import ThreadPoolExecutor

def parallel_dfs(graph, start):

visited = set()

stack = [start]

def dfs(node):

if node in visited:

return

visited.add(node)

for neighbor in graph[node]:

dfs(neighbor)

with ThreadPoolExecutor(max_workers=4) as executor:

futures = [executor.submit(dfs, neighbor) for neighbor in graph[start]]

for f in futures: f.result()

return visited

# Example usage:

graph = {0:[1,2], 1:[0,3], 2:[0,4], 3:[1], 4:[2]}

visited = parallel_dfs(graph, 0)

print(visited)

Work stealing allows idle threads to “steal” stack frames from busier threads, improving load balance for large, disconnected graphs or trees.

DFS Parallelization: Task Decomposition Diagram

This diagram illustrates how DFS can split work at branches, with each thread processing a subtree independently.

Benefits and Limitations of Parallel DFS and BFS

| Technique | Benefits | Limitations |

|---|---|---|

| Parallel BFS | High throughput on wide frontiers Easier load balance on uniform graphs |

Memory contention for large queues Synchronization costs per level |

| Parallel DFS | Effective for wide trees or acyclic graphs Suited for search and enumeration tasks |

Stack dependencies limit speedup Harder to balance irregular graphs |

Interactive BFS Frontier Visualization

Step through the BFS frontier evolution below.

Example: Click any node to list its children at the next level.

Best Practices for Parallelizing BFS and DFS

- Choose BFS for: Layered analysis (shortest paths, minimum cost, graph diameter) and dense graphs.

- Choose DFS for: Topological sorting, cycle detection, exhaustive search, trees/subgraphs.

- Use high-level libraries (OpenMP for C/C++, ThreadPoolExecutor for Python) to manage threads and memory.

- Optimize for cache locality—partition graph if possible.

- Avoid fine-grained locks; prefer atomic operations or lock-free structures for visited sets.

Conclusion: Future of Parallel Graph Search

Parallel BFS and DFS form the backbone of big data analysis and scalable AI pipelines. Modern frameworks enable seamless deployment on multicore, GPU, and distributed systems. As real-world graphs continue to grow, mastery of parallel traversal will be an essential computer science skill for practitioners and researchers alike.