Efficient memory management is a cornerstone of modern computing systems. Among the algorithms that govern it, online paging algorithms control how pages are replaced in memory at runtime. This article explains what online paging is, walks through a worked LRU example, and summarizes implementation costs and common applications for software engineers, students, and computer science enthusiasts.

What is Online Paging?

Online Paging is a class of page replacement algorithms designed to manage the contents of a limited-size memory cache without knowledge of future requests. Unlike offline algorithms, which know the full request sequence in advance, online algorithms make decisions on the fly based only on past and current information. The fundamental challenge is deciding which page to evict when the cache is full and a new page must be loaded.
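To make the online/offline distinction concrete, here is a minimal sketch of an offline policy, assuming the whole request list is available up front. It uses the farthest-in-future rule (commonly called Belady's optimal algorithm), which this article does not otherwise cover; the function name `offline_faults` is hypothetical. An online algorithm cannot implement this rule, because it cannot see `requests[i + 1:]`.

```python
def offline_faults(requests, capacity):
    """Count faults for an offline policy that evicts the page needed farthest in the future.

    Assumes capacity >= 1 and that the full request list is known in advance.
    """
    cache = set()
    faults = 0
    for i, page in enumerate(requests):
        if page in cache:
            continue                      # hit: nothing to do
        faults += 1
        if len(cache) == capacity:
            future = requests[i + 1:]     # knowledge an online algorithm lacks

            def next_use(p):
                # Pages never requested again sort last, so they are evicted first.
                return future.index(p) if p in future else len(future)

            cache.remove(max(cache, key=next_use))
        cache.add(page)
    return faults
```

On a cyclic pattern such as `[1, 2, 3, 4, 1, 2, 3, 4]` with three frames, this offline rule incurs 5 faults, whereas a recency-based online policy faults on every request.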

Online Paging algorithms are essential in operating systems for virtual memory management, browser cache optimization, and embedded systems where memory is limited.

Key Concepts and Terminology

- Page: A fixed-length contiguous block of virtual memory.

- Cache Frame: A slot in the physical memory that can hold a page.

- Page Fault: Occurs when a requested page is not in memory and must be loaded from disk.

- Hit: When the requested page is found in memory.

- Cache Miss: When the requested page is not in cache, resulting in a page fault.

Popular Online Paging Algorithms

The choice of an online paging algorithm greatly impacts system performance. Some of the most widely used online algorithms include:

- Least Recently Used (LRU): Evicts the page that has not been used for the longest time.

- First In First Out (FIFO): Removes the earliest loaded page regardless of usage frequency.

- Random Replacement: Evicts a random page from memory.
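The FIFO and random policies from the list above can be sketched as small fault counters. This is an illustrative sketch, not a production implementation: the function names, the `seed` parameter, and the assumption that `capacity` is at least 1 are all choices made for the example.

```python
import random
from collections import deque


def simulate_fifo(requests, capacity):
    """Count page faults under FIFO replacement (assumes capacity >= 1)."""
    frames = deque()      # oldest loaded page sits at the left
    resident = set()      # fast membership test
    faults = 0
    for page in requests:
        if page in resident:
            continue      # hit: FIFO does not reorder pages on access
        faults += 1
        if len(frames) == capacity:
            resident.discard(frames.popleft())  # evict the earliest loaded page
        frames.append(page)
        resident.add(page)
    return faults


def simulate_random(requests, capacity, seed=0):
    """Count page faults under random replacement (seeded so runs repeat)."""
    rng = random.Random(seed)
    frames = []
    faults = 0
    for page in requests:
        if page in frames:
            continue      # hit
        faults += 1
        if len(frames) == capacity:
            frames[rng.randrange(capacity)] = page  # overwrite a random victim
        else:
            frames.append(page)
    return faults
```

For the sample sequence `[7, 0, 1, 2, 0, 3, 0, 4]` with three frames, `simulate_fifo` reports 7 faults: FIFO evicts page 0 while it is still in active use, so the next request for 0 faults again. The random policy's count depends on the seed.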

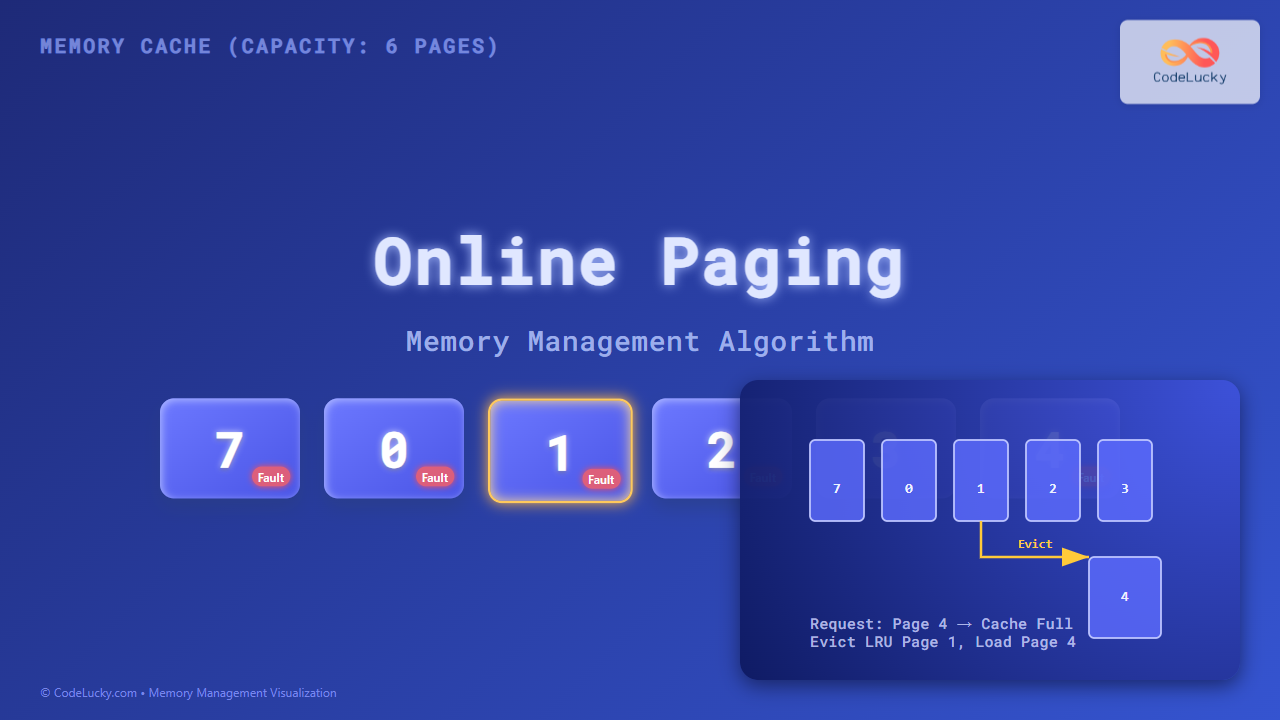

How Online Paging Works: Step-by-Step Example

Consider a memory cache that can hold 3 pages. Pages are requested in the following order:

7, 0, 1, 2, 0, 3, 0, 4

Let’s explore how the LRU algorithm handles this sequence.

Tracing the sequence with LRU produces six page faults (on the first requests for 7, 0, 1, 2, 3, and 4) and two hits (both repeat requests for page 0, which is still in the cache each time).

LRU Example Trace

The table below traces each page request and the resulting cache contents under LRU:

| Page Request | Cache Content (Most Recent on Left) | Page Fault? |
|---|---|---|
| 7 | 7 | Yes (cache miss) |
| 0 | 0, 7 | Yes |
| 1 | 1, 0, 7 | Yes |
| 2 | 2, 1, 0 | Yes (evicted 7) |
| 0 | 0, 2, 1 | No (cache hit) |
| 3 | 3, 0, 2 | Yes (evicted 1) |
| 0 | 0, 3, 2 | No (cache hit) |
| 4 | 4, 0, 3 | Yes (evicted 2) |
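The trace can be reproduced with a short simulation. The sketch below keeps the cache as a plain Python list with the most recently used page at index 0, matching the table's column order; `lru_trace` is a hypothetical helper name, and the list-based approach is chosen for readability rather than speed.

```python
def lru_trace(requests, capacity):
    """Return one (page, cache, fault, evicted) tuple per request under LRU."""
    cache = []            # most recently used page at index 0
    rows = []
    for page in requests:
        evicted = None
        if page in cache:
            cache.remove(page)          # hit: pull the page out to re-insert at the front
            fault = False
        else:
            fault = True
            if len(cache) == capacity:
                evicted = cache.pop()   # last element is the least recently used
        cache.insert(0, page)
        rows.append((page, list(cache), fault, evicted))
    return rows


for page, cache, fault, evicted in lru_trace([7, 0, 1, 2, 0, 3, 0, 4], 3):
    note = "fault" if fault else "hit"
    if evicted is not None:
        note += f", evicted {evicted}"
    print(page, cache, note)
```

Each printed line corresponds to one table row: the requested page, the cache contents after the request, and whether the request faulted.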

Algorithmic Complexity

Implementation efficiency is critical:

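- Naive LRU: Keeps pages in a plain array or linked list ordered by recency; finding and moving a page on each access costs O(n), where n is the number of cache frames.

- Optimized LRU: Combines a hash map (page to list node) with a doubly linked list (recency order) to achieve O(1) lookups, recency updates, and evictions.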
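A minimal sketch of the hash map plus doubly linked list design follows. The class and method names (`LRUCache`, `access`, `contents`) are hypothetical, and the sentinel head and tail nodes are one common way to avoid edge cases, not the only one.

```python
class _Node:
    """Doubly linked list node holding one page number."""
    __slots__ = ("page", "prev", "next")

    def __init__(self, page=None):
        self.page = page
        self.prev = None
        self.next = None


class LRUCache:
    """O(1) LRU page cache: dict for lookup, linked list for recency order."""

    def __init__(self, capacity):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self.nodes = {}            # page -> node
        self.head = _Node()        # sentinel on the most recently used side
        self.tail = _Node()        # sentinel on the least recently used side
        self.head.next = self.tail
        self.tail.prev = self.head

    def _unlink(self, node):
        node.prev.next = node.next
        node.next.prev = node.prev

    def _push_front(self, node):
        node.next = self.head.next
        node.prev = self.head
        self.head.next.prev = node
        self.head.next = node

    def access(self, page):
        """Touch a page; return True on a hit, False on a page fault."""
        node = self.nodes.get(page)
        if node is not None:
            self._unlink(node)         # move the page to the front in O(1)
            self._push_front(node)
            return True
        if len(self.nodes) == self.capacity:
            victim = self.tail.prev    # least recently used page
            self._unlink(victim)
            del self.nodes[victim.page]
        node = _Node(page)
        self.nodes[page] = node
        self._push_front(node)
        return False

    def contents(self):
        """Pages from most to least recently used (O(n), for inspection only)."""
        out = []
        node = self.head.next
        while node is not self.tail:
            out.append(node.page)
            node = node.next
        return out
```

Calling `access` for each page of a request sequence returns the hit/fault outcome per request. In everyday Python code, `collections.OrderedDict` with `move_to_end` and `popitem(last=False)` gives the same behavior with less code; the explicit version here is only meant to show where the O(1) bound comes from.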

Applications and Practical Use Cases

- Operating Systems: Virtual memory management to reduce page faults.

- Web Browsers: Caching web pages and scripts efficiently.

- Databases: Buffer pool management for quick-access data pages.

- Embedded Systems: Managing limited RAM for fast cache access.

Conclusion

Online Paging algorithms such as LRU, FIFO, and Random Replacement provide practical strategies for memory management when future page requests are unknown. LRU in particular adapts to changing access patterns by evicting the page that has gone unused the longest, and it can be implemented with O(1) cost per access. Working through these algorithms with examples and tables builds a solid foundation for optimizing memory management in software applications and systems.