The Master Theorem is an essential tool in computer science for analyzing the time complexity of divide and conquer algorithms. When an algorithm breaks a problem into smaller subproblems, solves them recursively, and combines their results, the Master Theorem provides a straightforward formula to determine the overall efficiency.

This article explains the theorem's statement and its three cases, shows why it works using a recursion-tree view, and applies it to several classic algorithms.

Understanding Divide and Conquer Recurrence

Divide and conquer algorithms typically follow a recurrence relation of the form:

T(n) = aT(n/b) + f(n)

- a: Number of subproblems the problem is divided into.

- n/b: Size of each subproblem (divide the original size n by b).

- f(n): Cost of dividing the problem and combining the results (non-recursive work).
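One way to get a feel for a recurrence before applying any theorem is to evaluate it numerically. The sketch below assumes a base case T(1) = 1 and that n is an exact power of b. The function name `evaluate_recurrence` is hypothetical and for illustration only.

```python
from typing import Callable


def evaluate_recurrence(a: int, b: int, f: Callable[[int], float], n: int) -> float:
    """Evaluate T(n) = a*T(n/b) + f(n), assuming T(1) = 1 and n a power of b."""
    if n <= 1:
        return 1  # assumed base case T(1) = 1
    # a identical subproblems of size n / b, plus f(n) non-recursive work
    return a * evaluate_recurrence(a, b, f, n // b) + f(n)


# Merge-sort-like recurrence T(n) = 2T(n/2) + n
print(evaluate_recurrence(2, 2, lambda m: m, 1024))  # 11264 = 1024 * 10 + 1024
```

Because all a subproblems have the same size, the sketch computes T(n/b) once and multiplies it by a instead of recursing a times.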

Analyzing this recurrence directly can be complicated, but the Master Theorem simplifies it for many cases by comparing f(n) to n^{log_b a}.

Master Theorem Statement

For the recurrence T(n) = aT(n/b) + f(n), where a ≥ 1 and b > 1, let c = log_b a. Then:

| Case | Condition | Time Complexity |
|---|---|---|
| 1 | f(n) = O(n^{c − ε}) for some constant ε > 0 | T(n) = Θ(n^c) |
| 2 | f(n) = Θ(n^c log^k n) for some constant k ≥ 0 | T(n) = Θ(n^c log^{k+1} n) |
| 3 | f(n) = Ω(n^{c + ε}) for some constant ε > 0, and a·f(n/b) ≤ δ·f(n) for some constant δ < 1 and all sufficiently large n | T(n) = Θ(f(n)) |
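The three cases can be turned into a small classifier. This is a sketch under a stated restriction: it only handles driving functions of the form f(n) = Θ(n^d log^k n) with k ≥ 0, where d and k are parameters introduced here. The name `master_theorem` is hypothetical. For this family of f(n), the case 3 regularity condition holds automatically, so the sketch does not check it separately.

```python
import math
from typing import Tuple


def master_theorem(a: int, b: float, d: float, k: float = 0.0) -> Tuple[int, float, float]:
    """Solve T(n) = a*T(n/b) + Θ(n^d log^k n) with the three-case Master Theorem.

    Returns (case, p, q), meaning T(n) = Θ(n^p log^q n).
    Only driving functions n^d log^k n with k >= 0 are handled.
    """
    if a < 1 or b <= 1:
        raise ValueError("the theorem requires a >= 1 and b > 1")
    if k < 0:
        raise ValueError("this sketch only handles k >= 0")

    c = math.log(a, b)  # critical exponent c = log_b a

    if math.isclose(d, c, abs_tol=1e-9):
        # Case 2: levels cost about the same, adding one log factor.
        return 2, c, k + 1
    if d < c:
        # Case 1: f(n) is polynomially smaller, so the leaves dominate.
        return 1, c, 0.0
    # Case 3: f(n) is polynomially larger. For f(n) = n^d log^k n with d > c,
    # a*f(n/b) <= (a / b^d) * f(n) and a / b^d < 1, so regularity holds.
    return 3, d, k


for name, params in [("merge sort", (2, 2, 1)),
                     ("binary search", (1, 2, 0)),
                     ("Strassen", (7, 2, 2))]:
    case, p, q = master_theorem(*params)
    print(f"{name}: case {case}, Θ(n^{p:.3g} log^{q:g} n)")
```

The tolerance in `math.isclose` guards against floating-point error in log_b a, which may not come out as an exact integer even when it mathematically is one.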

Explanation of the Cases

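- Case 1: The work at the leaves dominates. The tree has n^c leaves and the per-level cost grows toward the bottom, so the recursion itself accounts for most of the running time.

- Case 2: The work is spread roughly evenly across the log_b n levels, which contributes an extra logarithmic factor.

- Case 3: The non-recursive work at the root dominates, so the cost of dividing and combining, f(n), determines the total time.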
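A short calculation shows where the three cases come from. As a simplifying assumption, take f(n) = n^d exactly (d is a symbol introduced here) and let n be a power of b. Level k of the recursion has a^k subproblems of size n/b^k, and there are n^c leaves, so:

```latex
T(n) = \sum_{k=0}^{\log_b n - 1} a^k \left(\frac{n}{b^k}\right)^{d} + \Theta\!\left(n^{c}\right)
     = n^{d} \sum_{k=0}^{\log_b n - 1} r^{k} + \Theta\!\left(n^{c}\right),
\qquad r = \frac{a}{b^{d}}, \quad c = \log_b a
```

Since r > 1 exactly when d < c, the deepest levels dominate and T(n) = Θ(n^c) (case 1). When r = 1, every level contributes n^d, giving Θ(n^c log n) (case 2 with k = 0). When r < 1, the geometric sum is dominated by the root, giving Θ(n^d) = Θ(f(n)) (case 3).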

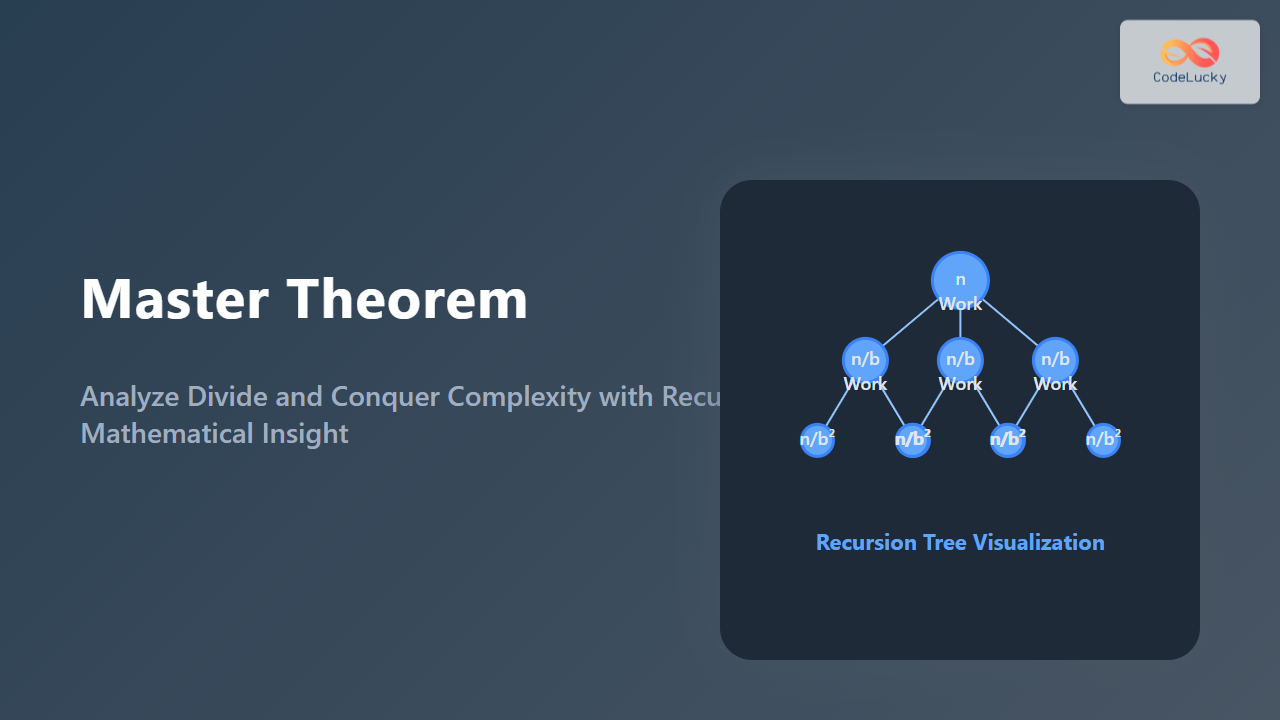

Visualizing the Recursion Tree

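A recursion tree makes the recurrence concrete: each node is one recursive call, labeled with the non-recursive work it performs. Level k contains a^k subproblems of size n/b^k, so the total work at that level is: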

Total work at level k = a^k × f(n/b^k)

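The tree has depth log_b n and a^{log_b n} = n^{log_b a} leaves. Summing the level costs gives T(n) = Θ(n^{log_b a}) + Σ_{k=0}^{log_b n − 1} a^k f(n/b^k), and whichever part of this sum dominates decides the case.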
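The per-level costs can be listed directly to see which case a recurrence falls into. This sketch assumes n is an exact power of b. The name `level_costs` is hypothetical. It returns the work of the internal levels only. The leaves are left out.

```python
from typing import Callable, List


def level_costs(a: int, b: int, f: Callable[[int], float], n: int) -> List[float]:
    """Total non-recursive work a^k * f(n / b^k) at each internal level k.

    Assumes n is a power of b, so there are log_b n internal levels
    above the a^(log_b n) = n^(log_b a) leaves.
    """
    costs = []
    k, size = 0, n
    while size > 1:
        costs.append(a**k * f(size))  # a^k nodes, each doing f(size) work
        size //= b
        k += 1
    return costs


print("merge sort :", level_costs(2, 2, lambda m: m, 16))      # equal levels (case 2)
print("Strassen   :", level_costs(7, 2, lambda m: m * m, 16))  # growing levels (case 1)
print("2T(n/2)+n^2:", level_costs(2, 2, lambda m: m * m, 16))  # shrinking levels (case 3)
```

Equal, growing, and shrinking level costs correspond to cases 2, 1, and 3 respectively.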

Examples with Analysis

Example 1: Merge Sort

Merge sort splits the array into two halves, recursively sorts each half, and merges the sorted halves.

- a = 2 (two subproblems)

- b = 2 (each subproblem is half the size)

- f(n) = Θ(n) (the merging step)

Here c = log_b a = log_2 2 = 1, and f(n) = Θ(n) = Θ(n^c), so case 2 applies with k = 0:

T(n) = Θ(n log n)
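A minimal Python sketch of merge sort, written for illustration rather than taken from any library. The comments map each step onto a, b, and f(n):

```python
from typing import List


def merge_sort(xs: List[int]) -> List[int]:
    """Return a sorted copy of xs; the running time satisfies T(n) = 2T(n/2) + Θ(n)."""
    if len(xs) <= 1:
        return xs[:]  # base case: constant work
    mid = len(xs) // 2
    # Divide: a = 2 subproblems of about n/2 elements each (b = 2).
    # Slicing copies Θ(n) elements, which still fits within f(n) = Θ(n).
    left = merge_sort(xs[:mid])
    right = merge_sort(xs[mid:])
    # Combine: merging looks at each element once, so this step is Θ(n).
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


print(merge_sort([5, 2, 9, 1, 5, 6]))  # [1, 2, 5, 5, 6, 9]
```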

Example 2: Binary Search

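Binary search compares the target with the middle element of a sorted range and continues in only one half.

- a = 1 (only one half is searched)

- b = 2 (the search range halves)

- f(n) = Θ(1) (one comparison with the middle element)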

Here, c = log_2 1 = 0. Since f(n) = Θ(1) = Θ(n^0), case 2 applies with k=0:

T(n) = Θ(log n)
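A recursive sketch of binary search. This is an illustrative implementation, not a library API. It makes the single recursive call (a = 1) and the constant non-recursive work explicit:

```python
from typing import List, Optional


def binary_search(xs: List[int], target: int, lo: int = 0, hi: Optional[int] = None) -> int:
    """Return an index of target in sorted xs[lo:hi], or -1 if it is absent.

    Recurrence: T(n) = T(n/2) + Θ(1), so a = 1, b = 2, f(n) = Θ(1).
    """
    if hi is None:
        hi = len(xs)
    if lo >= hi:
        return -1  # empty range: constant work
    mid = (lo + hi) // 2
    if xs[mid] == target:  # one comparison: the Θ(1) non-recursive work
        return mid
    if xs[mid] < target:
        return binary_search(xs, target, mid + 1, hi)  # a = 1: recurse on one half only
    return binary_search(xs, target, lo, mid)


data = [1, 3, 5, 7, 9, 11]
print(binary_search(data, 7))  # 3
print(binary_search(data, 4))  # -1
```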

Example 3: Strassen’s Matrix Multiplication

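- a = 7 (seven recursive multiplications of n/2 × n/2 matrices)

- b = 2 (the matrix dimension halves)

- f(n) = Θ(n^2) (the matrix additions and subtractions used to form the subproblems and combine their results)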

Here c = log_2 7 ≈ 2.81. Since f(n) = Θ(n^2) = O(n^{c − ε}) for, say, ε = 0.8, case 1 applies:

T(n) = Θ(n^{log_2 7}) ≈ Θ(n^{2.81})
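As a numerical sanity check of case 1, the sketch below evaluates the cost recurrence T(n) = 7T(n/2) + n^2. It assumes T(1) = 1, exactly n^2 combine work, and n a power of 2. It does not implement Strassen's algorithm itself, and the name `strassen_cost` is hypothetical.

```python
import math


def strassen_cost(n: int) -> int:
    """T(n) = 7T(n/2) + n^2 with T(1) = 1, for n a power of 2 (assumed cost model)."""
    if n == 1:
        return 1
    return 7 * strassen_cost(n // 2) + n * n


c = math.log2(7)  # critical exponent log_2 7 ≈ 2.807
for m in range(2, 13, 2):
    n = 2**m
    ratio = strassen_cost(n) / n**c
    print(f"n = {n:5d}   T(n) / n^(log2 7) = {ratio:.4f}")
```

Under these assumptions, unrolling the recurrence gives T(n) = (7·n^{log_2 7} − 4n^2)/3. The printed ratio therefore climbs toward 7/3 ≈ 2.333, which is consistent with T(n) = Θ(n^{log_2 7}).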

Interactive Visualization Idea

An interactive recurrence visualizer, in which users enter a, b, and f(n) and see the resulting complexity, can deepen practical understanding by drawing the recursion tree and comparing growth rates live.

Common Pitfalls and Tips

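- Ensure a and b are constants that correctly describe the subdivision. The theorem as stated assumes that all a subproblems have the same size n/b.

- Identify f(n) accurately. It must include all of the non-recursive work, both dividing and combining.

- The theorem does not cover every recurrence. If f(n) differs from n^{log_b a} by less than a polynomial factor without matching the case 2 form (for example, f(n) = n/log n with a = b = 2), or if the regularity condition of case 3 fails, none of the cases applies. In that situation, use a recursion tree, the substitution method, or the Akra-Bazzi method instead.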
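As an illustration of a recurrence that falls between the cases as stated in the table, take T(n) = 2T(n/2) + n/lg n, where lg denotes log_2. Assume n = 2^m and T(1) = 1. Here c = 1, and n/lg n is smaller than n, but not by any polynomial factor n^ε. It is also not of the form Θ(n log^k n) with k ≥ 0. A recursion-tree argument still works: dividing by n turns the recurrence into a harmonic sum.

```latex
\frac{T(2^m)}{2^m} = \frac{T(2^{m-1})}{2^{m-1}} + \frac{1}{m}
\;\Longrightarrow\;
\frac{T(2^m)}{2^m} = 1 + \sum_{j=1}^{m} \frac{1}{j} = 1 + H_m,
\qquad
T(n) = n\,\bigl(1 + H_{\lg n}\bigr) = \Theta(n \log \log n)
```

Here H_m is the m-th harmonic number, which grows as Θ(log m).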

Conclusion

The Master Theorem greatly simplifies the time complexity analysis of divide and conquer algorithms. Knowing its three cases gives a quick way to determine the efficiency of classic algorithms like merge sort and binary search, as well as more intricate ones like Strassen’s matrix multiplication.

Combined with recursion trees, and with other methods for recurrences it does not cover, the theorem is a dependable tool for both analyzing and designing recursive algorithms.