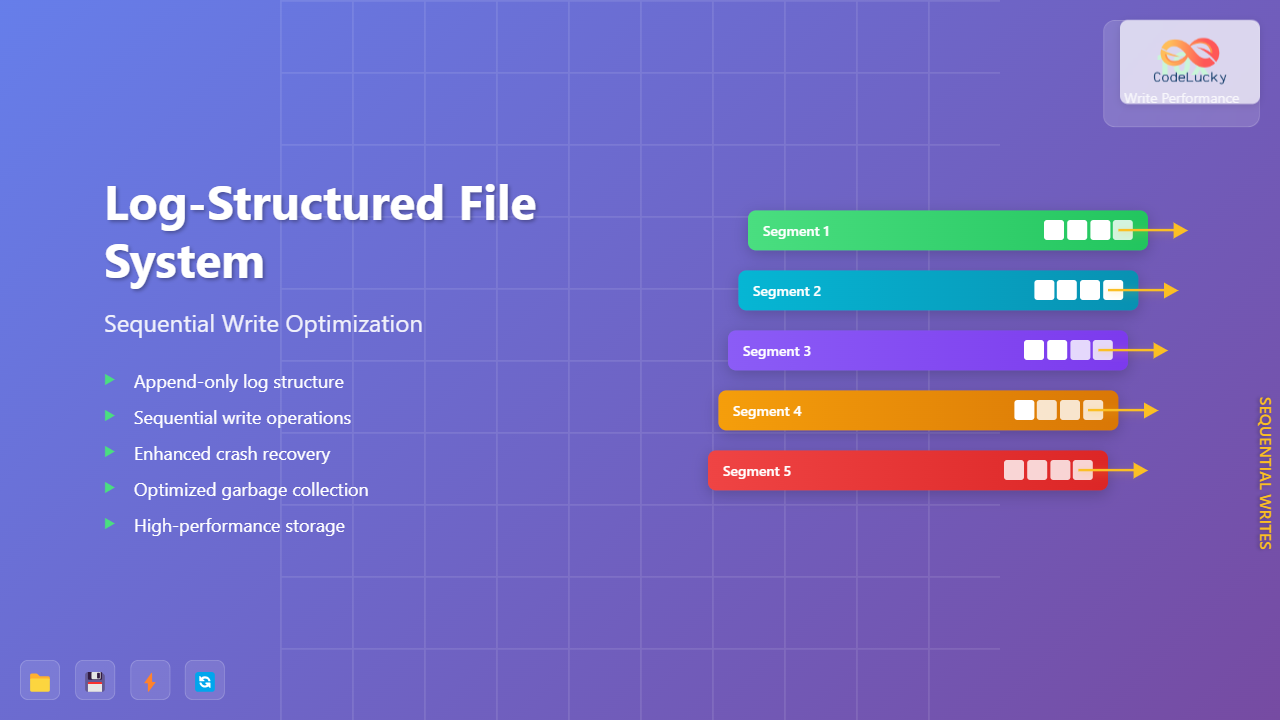

Log-structured File Systems (LFS) represent a revolutionary approach to data storage that fundamentally changes how operating systems handle file operations. Unlike traditional file systems that perform random writes across the disk, LFS treats the entire storage device as a sequential log, optimizing write performance and enabling better handling of modern workloads.

Understanding Log-structured File Systems

A log-structured file system organizes all data and metadata as a continuous sequence of log entries written to storage. This approach transforms the chaotic pattern of random disk writes into an ordered, sequential stream that maximizes write throughput and minimizes disk seek times.

The core principle behind LFS is simple yet powerful: all writes are sequential. When an application modifies a file, instead of updating the existing location on disk, the file system writes the new data to the end of the log and updates its internal indexes to point to the new location.
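A minimal sketch of this principle, assuming an in-memory `bytearray` stands in for the storage device and a plain dictionary for the index. The names `AppendOnlyLog`, `write`, and `read` are illustrative and do not come from any real file system:

```python
class AppendOnlyLog:
    """Toy log-structured store: every write appends, nothing is overwritten."""

    def __init__(self):
        self.log = bytearray()   # stands in for the storage device
        self.index = {}          # file name -> (address, length) of latest version

    def write(self, name, data):
        address = len(self.log)                   # current end of the log
        self.log.extend(data)                     # sequential append
        self.index[name] = (address, len(data))   # repoint the index
        return address

    def read(self, name):
        address, length = self.index[name]
        return bytes(self.log[address:address + length])


store = AppendOnlyLog()
store.write("a.txt", b"version 1")
store.write("a.txt", b"version 2")   # the old bytes stay in the log as garbage
print(store.read("a.txt"))           # b'version 2'
print(len(store.log))                # 18
```

Note that the second write leaves the first version in place. Reclaiming that dead space is the job of garbage collection.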

Sequential Write Optimization Benefits

Sequential write optimization in LFS provides several significant advantages over traditional file systems:

Improved Write Performance

Sequential writes largely avoid disk head movement on traditional hard drives, so seek time and rotational latency are paid once per large write rather than once per block. On Solid State Drives (SSDs), large sequential writes align better with flash erase blocks, which helps reduce write amplification inside the device and extend its lifespan.

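    # Performance comparison example (figures are rough and hardware-dependent)

    # Traditional file system random write: one 4K block per call, each at a random offset
    $ for i in $(seq 1000); do dd if=/dev/zero of=random_file bs=4K count=1 seek=$RANDOM conv=notrunc oflag=direct status=none; done
    # Illustrative, on a hard disk: 50-100 IOPS

    # Log-structured sequential write: the same 1000 blocks written back to back
    $ dd if=/dev/zero of=log_file bs=4K count=1000 oflag=direct
    # Illustrative, on a hard disk: 500-1000+ IOPS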

Enhanced Crash Recovery

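The append-only nature of LFS creates an inherent versioning system. Each write operation creates a new version of data while preserving previous versions until garbage collection occurs. Because a crash can only interrupt the write at the tail of the log, earlier data is never left half-overwritten, and recovery needs to examine only the portion of the log written since the last checkpoint instead of scanning the whole disk.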

Better Handling of Small Writes

LFS excels at handling workloads with frequent small writes by batching them into larger sequential writes, reducing the overhead associated with numerous small disk operations.

LFS Architecture Components

Log Segments

The storage device is divided into fixed-size segments, typically ranging from 512KB to several megabytes. Each segment contains a sequence of log entries representing file data and metadata changes.
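Because segments are fixed-size, converting between a flat log address and a (segment, offset) pair is plain integer arithmetic. The sketch below assumes 1 MB segments; `SEGMENT_SIZE`, `locate`, and `segment_start` are hypothetical names chosen for illustration:

```python
SEGMENT_SIZE = 1024 * 1024  # assumed 1 MB segments


def locate(log_address):
    """Split a flat log address into (segment number, offset in segment)."""
    return divmod(log_address, SEGMENT_SIZE)


def segment_start(segment_number):
    """Return the flat log address at which a segment begins."""
    return segment_number * SEGMENT_SIZE


print(locate(3 * SEGMENT_SIZE + 4096))  # (3, 4096)
print(segment_start(3))                 # 3145728
```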

Inode Map

The inode map serves as the primary index structure, mapping file identifiers to their current locations in the log. This indirection layer enables efficient file lookups while supporting the dynamic nature of log-structured storage.

    // Simplified inode map structure
    #include <stdint.h>

    typedef struct {
        uint32_t file_id;
        uint64_t log_address;  // Current location in log
        uint32_t version;      // For consistency checking
        uint64_t timestamp;    // Last modification time
    } inode_map_entry_t;

Checkpoint Regions

Checkpoint regions store critical file system metadata, including the current state of the inode map and segment usage information. These regions are updated periodically to ensure file system consistency.
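One possible way to make checkpoint updates safe is to alternate between two regions and validate each with a checksum, so that a crash during a checkpoint write never destroys the previous good copy. The sketch below makes several assumptions that are not stated in the text above: two regions, JSON encoding, a CRC32 checksum, and the hypothetical names `CheckpointStore`, `write`, and `load_latest`.

```python
import json
import zlib


class CheckpointStore:
    """Two alternating checkpoint regions; the newest valid one wins."""

    def __init__(self):
        self.regions = [None, None]   # stand-ins for two fixed disk locations
        self.sequence = 0

    def write(self, inode_map, segment_usage, log_tail):
        self.sequence += 1
        payload = json.dumps({
            "sequence": self.sequence,
            "inode_map": inode_map,
            "segment_usage": segment_usage,
            "log_tail": log_tail,
        }, sort_keys=True).encode()
        checksum = zlib.crc32(payload)
        # Alternate regions so the older checkpoint survives a torn write.
        self.regions[self.sequence % 2] = (payload, checksum)

    def load_latest(self):
        valid = []
        for region in self.regions:
            if region is None:
                continue
            payload, checksum = region
            if zlib.crc32(payload) == checksum:   # skip torn or corrupt regions
                valid.append(json.loads(payload))
        if not valid:
            return None
        return max(valid, key=lambda cp: cp["sequence"])


store = CheckpointStore()
store.write({"7": 0}, {"0": 4096}, log_tail=4096)
store.write({"7": 8192}, {"0": 0, "1": 4096}, log_tail=12288)
print(store.load_latest()["inode_map"])  # {'7': 8192}
```

The keys are strings because JSON object keys must be strings; a real implementation would use a compact binary layout instead.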

Write Process in Detail

The write process in LFS follows a carefully orchestrated sequence that ensures data integrity while maximizing performance:

Write Buffering

LFS maintains an in-memory write buffer that accumulates multiple write operations before committing them to storage as a single sequential write. This batching mechanism significantly improves efficiency for workloads with many small writes.

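    # Conceptual write buffering implementation
    import time

    log = bytearray()  # in-memory stand-in for the on-disk log
    inode_map = {}     # (file_id, offset) -> address in the log

    def write_sequential(data):
        """Append data to the end of the log and return its start address."""
        log_address = len(log)
        log.extend(data)
        return log_address

    class WriteBuffer:
        def __init__(self, max_size=1024*1024):  # 1MB buffer
            self.buffer = bytearray()
            self.max_size = max_size
            self.entries = []

        def add_write(self, file_id, offset, data):
            entry = {
                'file_id': file_id,
                'offset': offset,
                'data': data,
                'buffer_pos': len(self.buffer),  # position within the batch
                'timestamp': time.time()
            }
            self.entries.append(entry)
            self.buffer.extend(data)
            if len(self.buffer) >= self.max_size:
                self.flush()

        def flush(self):
            if self.buffer:
                # One sequential write covers every buffered entry
                log_address = write_sequential(self.buffer)
                self.update_inode_map(self.entries, log_address)
                self.buffer.clear()
                self.entries.clear()

        def update_inode_map(self, entries, log_address):
            # Each entry lands at the batch start plus its own position
            for entry in entries:
                key = (entry['file_id'], entry['offset'])
                inode_map[key] = log_address + entry['buffer_pos']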

Garbage Collection Mechanism

One of the most critical aspects of LFS is garbage collection, which reclaims space from segments containing obsolete data. This process is essential for maintaining storage efficiency in a log-structured environment.

Segment Cleaning Process

The garbage collector identifies segments with low utilization, reads valid data from these segments, writes it to new segments, and marks the old segments as free for reuse.
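The steps above can be sketched as follows. This is a simplified model under stated assumptions: each segment is a list of `(key, data)` blocks, the inode map records which copy of a key is live, and only one victim segment is cleaned at a time (real cleaners usually compact several victims together). `clean_segment` and its parameters are hypothetical names.

```python
def clean_segment(victim_id, segments, inode_map, free_segments):
    """Copy live blocks out of one segment, then mark it free.

    segments:      dict of segment id -> list of (key, data) blocks
    inode_map:     dict of key -> (segment id, slot) for the live copy
    free_segments: list of segment ids available for reuse
    """
    target_id = free_segments.pop(0)
    segments[target_id] = []
    for slot, (key, data) in enumerate(segments[victim_id]):
        # A block is live only if the inode map still points at this copy.
        if inode_map.get(key) == (victim_id, slot):
            segments[target_id].append((key, data))
            inode_map[key] = (target_id, len(segments[target_id]) - 1)
    segments[victim_id] = []
    free_segments.append(victim_id)
    return target_id


segments = {0: [("a", b"old"), ("b", b"keep"), ("a", b"new")], 1: []}
inode_map = {"a": (0, 2), "b": (0, 1)}
free_segments = [1]
clean_segment(0, segments, inode_map, free_segments)
print(segments[1])    # [('b', b'keep'), ('a', b'new')]
print(inode_map)      # {'a': (1, 1), 'b': (1, 0)}
print(free_segments)  # [0]
```

The stale copy of `a` is dropped because the inode map no longer references it, which is how the cleaner tells live data from garbage in this model.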

Cleaning Policy

Effective garbage collection requires intelligent segment selection policies. Common approaches include:

- Greedy Policy: Always clean the segment with the least valid data

- Cost-Benefit Policy: Consider both space reclaimed and cleaning cost

- Age-Based Policy: Prioritize older segments that are less likely to be modified

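    // Cost-benefit calculation for segment cleaning
    #include <stdint.h>

    typedef struct {
        uint64_t valid_bytes;    // Live data still in the segment
        uint64_t total_bytes;    // Segment capacity
        uint64_t last_modified;  // Time of the newest data, in seconds
    } segment_t;

    double calculate_cleaning_benefit(const segment_t *seg, uint64_t current_time) {
        double utilization = (double)seg->valid_bytes / seg->total_bytes;
        double age = (double)(current_time - seg->last_modified);
        // Benefit: space freed (1 - utilization), weighted by age.
        // Cost: read the whole segment (1) and rewrite its live data (utilization).
        return (1.0 - utilization) * age / (1.0 + utilization);
    }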
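To see how the policies can disagree, the sketch below selects a victim under the greedy and cost-benefit policies. The score divides by (1 + u), the cost of reading the segment and rewriting its live data, which is the form used in the original LFS design. The `Segment` class, the function names, and the sample segments are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    name: str
    valid_bytes: int
    total_bytes: int
    age: float  # seconds since the segment was last written

    @property
    def utilization(self):
        return self.valid_bytes / self.total_bytes


def pick_greedy(segments):
    """Greedy policy: the segment with the least valid data."""
    return min(segments, key=lambda s: s.valid_bytes)


def pick_cost_benefit(segments):
    """Cost-benefit policy: maximise (1 - u) * age / (1 + u)."""
    def score(s):
        u = s.utilization
        return (1.0 - u) * s.age / (1.0 + u)
    return max(segments, key=score)


segments = [
    Segment("hot", valid_bytes=300, total_bytes=1000, age=10),
    Segment("cold", valid_bytes=600, total_bytes=1000, age=5000),
]
print(pick_greedy(segments).name)        # hot
print(pick_cost_benefit(segments).name)  # cold
```

Greedy picks the emptier segment even though its remaining data is likely to be overwritten soon anyway. Cost-benefit prefers the older, stable segment, where reclaimed space stays reclaimed for longer.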

Performance Characteristics

Write Performance

LFS demonstrates exceptional write performance, particularly for workloads characterized by:

- High write-to-read ratios

- Frequent file creation and deletion

- Applications requiring high write throughput

Read Performance Considerations

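While LFS optimizes write performance, read operations may experience some overhead due to indirection through the inode map. In addition, blocks of a file that were updated at different times end up scattered across the log, so a logically sequential read can turn into random I/O. Modern implementations mitigate this through aggressive caching and optimized index structures.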

Space Utilization

The effectiveness of garbage collection directly impacts space utilization. Well-tuned systems typically maintain 70-80% space utilization under steady-state conditions.

Real-world Implementations

JFFS2 (Journalling Flash File System version 2)

JFFS2 implements log-structured principles for raw flash memory (NOR and NAND) in embedded systems. It provides wear leveling and, on NAND, bad block handling while maintaining the sequential write benefits of LFS.

F2FS (Flash-Friendly File System)

F2FS, developed by Samsung, adapts log-structured concepts for modern SSDs. It incorporates multi-head logging and sophisticated garbage collection algorithms to maximize SSD performance and lifespan.

NILFS (New Implementation of Log-structured File System)

NILFS brings log-structured file system capabilities to Linux, providing continuous snapshotting and efficient backup capabilities through its append-only design.

Implementation Challenges and Solutions

Write Amplification

Garbage collection can cause write amplification, where the same data is written multiple times. Modern LFS implementations address this through:

- Intelligent cleaning policies that minimize data movement

- Hot/cold data separation to reduce cleaning frequency

- Adaptive algorithms that adjust cleaning parameters based on workload
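The size of the effect can be estimated with a simple model, assuming every cleaned segment has the same utilization u. To free a segment, the cleaner reads it in full (1), rewrites the live data (u), and gains room for (1 - u) of new data, so the total I/O per unit of new data is 2 / (1 - u). The function name `write_cost` is an illustrative choice:

```python
def write_cost(utilization):
    """Total I/O per byte of new data when cleaning segments at utilization u.

    Cleaning reads a whole segment (1), rewrites its live data (u), and
    frees room for (1 - u) of new data: (1 + u + (1 - u)) / (1 - u).
    """
    if not 0.0 <= utilization < 1.0:
        raise ValueError("utilization must be in [0, 1)")
    if utilization == 0.0:
        return 1.0  # an empty segment need not be read at all
    return 2.0 / (1.0 - utilization)


for u in (0.0, 0.2, 0.5, 0.8):
    print(f"u={u:.1f}  write cost={write_cost(u):.1f}")
# u=0.0  write cost=1.0
# u=0.2  write cost=2.5
# u=0.5  write cost=4.0
# u=0.8  write cost=10.0
```

The steep rise toward high utilization is why separating hot and cold data helps: it tends to leave some segments nearly empty, and those are cheap to clean.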

Crash Consistency

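Ensuring file system consistency after unexpected shutdowns requires careful design of checkpoint mechanisms and recovery procedures. Recovery typically starts from the most recent valid checkpoint and then rolls forward through any log entries written after it, discarding a final entry that was only partially written.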
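A minimal sketch of checkpoint-plus-roll-forward recovery, under simplifying assumptions: the checkpoint is a dictionary holding the inode map and the log tail at checkpoint time, and each log record carries a flag saying whether it was written completely (a real system would verify a checksum instead). `recover` and the record layout are hypothetical.

```python
def recover(checkpoint, log_records):
    """Rebuild the inode map: start from the checkpoint, then roll forward.

    checkpoint:  {"inode_map": {...}, "log_tail": int} from the last checkpoint
    log_records: list of (address, file_id, complete) in log order, where
                 `complete` is False for a record torn by the crash
    """
    inode_map = dict(checkpoint["inode_map"])
    for address, file_id, complete in log_records:
        if address < checkpoint["log_tail"]:
            continue          # already reflected in the checkpoint
        if not complete:
            break             # stop at the first partial write
        inode_map[file_id] = address
    return inode_map


checkpoint = {"inode_map": {1: 0, 2: 100}, "log_tail": 200}
log_records = [
    (0, 1, True), (100, 2, True),     # covered by the checkpoint
    (200, 1, True), (300, 3, True),   # written after it
    (400, 2, False),                  # torn by the crash
]
print(recover(checkpoint, log_records))  # {1: 200, 2: 100, 3: 300}
```

File 2 keeps its checkpointed address because its newer version never reached the log intact.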

Memory Requirements

LFS typically requires more memory than traditional file systems due to write buffering and inode map caching. Modern implementations use techniques like lazy loading and LRU caching to manage memory efficiently.
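As an illustration of combining lazy loading with LRU eviction, the sketch below keeps only the most recently used inode map entries in memory and fetches the rest on demand. `InodeMapCache`, `load_entry`, and the sample addresses are assumptions made for this example, not part of any particular implementation.

```python
from collections import OrderedDict


class InodeMapCache:
    """LRU cache in front of a slower inode map lookup."""

    def __init__(self, load_entry, capacity=1024):
        self.load_entry = load_entry   # called on a miss (lazy loading)
        self.capacity = capacity
        self.entries = OrderedDict()
        self.misses = 0

    def lookup(self, file_id):
        if file_id in self.entries:
            self.entries.move_to_end(file_id)   # mark as most recently used
            return self.entries[file_id]
        self.misses += 1
        address = self.load_entry(file_id)
        self.entries[file_id] = address
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)    # evict least recently used
        return address


on_disk_map = {1: 4096, 2: 8192, 3: 12288}
cache = InodeMapCache(on_disk_map.__getitem__, capacity=2)
for file_id in (1, 2, 1, 3, 2):
    cache.lookup(file_id)
print(cache.misses)          # 4
print(list(cache.entries))   # [3, 2]
```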

Performance Tuning Guidelines

Segment Size Optimization

Choosing the appropriate segment size involves balancing several factors:

- Large segments: Reduce metadata overhead but may increase garbage collection cost

- Small segments: Enable fine-grained garbage collection but increase management overhead

Buffer Management

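Effective write buffer management is crucial for optimal performance. The sysfs paths below are illustrative only: Linux has no generic /sys/fs/lfs directory, and real log-structured file systems expose their own tunables (F2FS, for example, under /sys/fs/f2fs/<device>/).

    # Example configuration for LFS parameters (hypothetical paths)
    echo 2048 > /sys/fs/lfs/write_buffer_size_kb   # 2MB write buffer
    echo 30 > /sys/fs/lfs/gc_threshold_percent     # Start GC at 30% utilization
    echo 10 > /sys/fs/lfs/checkpoint_interval_sec  # Checkpoint every 10 seconds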

Future Developments

Log-structured file systems continue to evolve with advances in storage technology:

- NVMe Integration: Optimized implementations for high-speed NVMe SSDs

- Persistent Memory Support: Adaptations for byte-addressable persistent memory

- Machine Learning: AI-driven garbage collection and caching policies

- Distributed LFS: Scaling log-structured concepts to distributed storage systems

Log-structured file systems represent a paradigm shift in storage system design, prioritizing write performance through sequential operations while providing robust data protection and efficient space management. As storage technologies continue to evolve, LFS principles remain highly relevant for applications requiring high write throughput and strong consistency guarantees.

Understanding LFS concepts is essential for system administrators, developers, and anyone working with high-performance storage systems. The sequential write optimization at the heart of LFS provides a foundation for building efficient, scalable storage solutions that can handle the demanding requirements of modern computing environments.