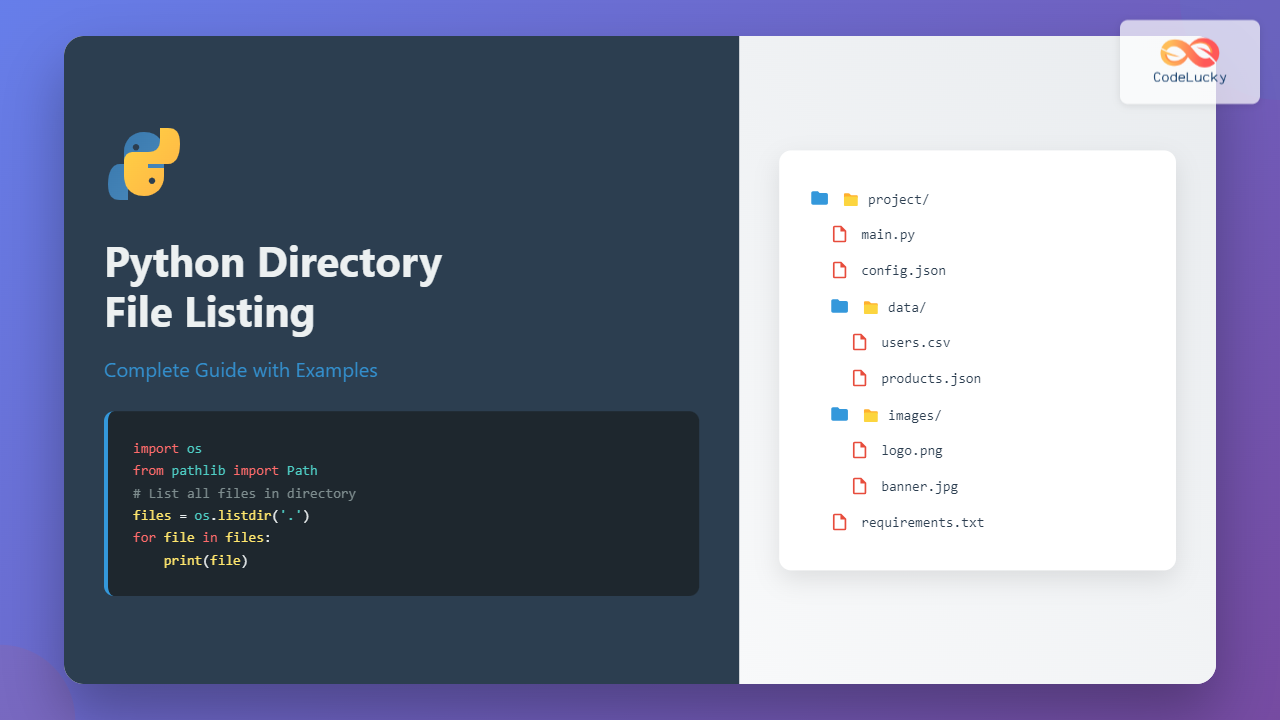

Working with files and directories is a fundamental task in Python programming. Whether you’re building a file manager, organizing data, or automating file operations, knowing how to list files in a directory is essential. This comprehensive guide will show you multiple methods to accomplish this task efficiently.

Why List Files in Directories?

Before diving into the implementation, let’s understand common scenarios where you might need to list directory contents:

- File processing automation – Batch processing multiple files

- Data analysis – Working with datasets stored in multiple files

- Backup systems – Creating file inventories

- Web development – Managing static assets

- Log file analysis – Processing application logs

Method 1: Using os.listdir()

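The most straightforward method uses the os.listdir() function, which returns a list of the names of all entries (files and subdirectories) in a specified directory. The names come back in arbitrary order and do not include the directory path.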

Basic Usage

    import os

    # List all items in current directory
    files = os.listdir('.')

    print("Files in current directory:")
    for file in files:
        print(file)

Expected Output:

    Files in current directory:
    script.py
    data.txt
    images
    logs
    config.json

Filtering Only Files (Excluding Directories)

    import os

    def list_files_only(directory_path):
        all_items = os.listdir(directory_path)
        files_only = []

        for item in all_items:
            item_path = os.path.join(directory_path, item)
            if os.path.isfile(item_path):
                files_only.append(item)

        return files_only

    # Usage example
    current_files = list_files_only('.')
    print("Files only:")
    for file in current_files:
        print(f"📄 {file}")

Expected Output:

    Files only:
    📄 script.py
    📄 data.txt
    📄 config.json

Method 2: Using os.walk() for Recursive Listing

When you need to list files in all subdirectories, os.walk() is your best friend. It recursively traverses directory trees.

    import os

    def list_all_files_recursive(directory_path):
        all_files = []

        for root, directories, files in os.walk(directory_path):
            for file in files:
                file_path = os.path.join(root, file)
                all_files.append(file_path)

        return all_files

    # Usage example
    recursive_files = list_all_files_recursive('.')
    print("All files recursively:")
    for file in recursive_files[:10]:  # Show first 10 files
        print(f"📁 {file}")

Expected Output:

    All files recursively:
    📁 ./script.py
    📁 ./data.txt
    📁 ./config.json
    📁 ./images/photo1.jpg
    📁 ./images/photo2.png
    📁 ./logs/app.log
    📁 ./logs/error.log
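os.walk() also lets you prune the traversal. With the default top-down order, removing names from the directories list in place stops the walk from descending into them. The following is a minimal sketch of that idea; the function name list_files_skipping and the skip_dirs parameter are illustrative assumptions, not part of the examples above.

```python
import os

def list_files_skipping(directory_path, skip_dirs=("__pycache__", ".git")):
    """Recursively list file paths without descending into skip_dirs.

    skip_dirs is a hypothetical parameter chosen for this sketch.
    """
    all_files = []
    for root, directories, files in os.walk(directory_path):
        # Slice assignment mutates the list that os.walk() holds, so the
        # removed directories are never visited. This only works with
        # topdown=True, which is the default.
        directories[:] = [d for d in directories if d not in skip_dirs]
        for file in files:
            all_files.append(os.path.join(root, file))
    return all_files
```

Calling list_files_skipping('.') returns the same kind of path list as list_all_files_recursive('.'), minus anything under the skipped directories.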

Method 3: Using pathlib (Modern Approach)

The pathlib module, added in Python 3.4, provides an object-oriented approach to file system operations. Paths are objects with methods such as iterdir(), is_file(), and glob(), which many developers find more readable than combining os and os.path calls.

Basic File Listing

    from pathlib import Path

    def list_files_pathlib(directory_path):
        path = Path(directory_path)
        files = []

        for item in path.iterdir():
            if item.is_file():
                files.append(item.name)

        return files

    # Usage example
    files = list_files_pathlib('.')
    print("Files using pathlib:")
    for file in files:
        print(f"🐍 {file}")

Advanced pathlib Features

    from pathlib import Path

    def analyze_directory(directory_path):
        path = Path(directory_path)

        print(f"📂 Analyzing directory: {path.absolute()}")
        print("-" * 50)

        files = list(path.glob('*'))

        for item in files:
            if item.is_file():
                size = item.stat().st_size
                print(f"📄 {item.name} ({size} bytes)")
            elif item.is_dir():
                file_count = len(list(item.glob('*')))
                print(f"📁 {item.name}/ ({file_count} items)")

    # Usage example
    analyze_directory('.')

Expected Output:

    📂 Analyzing directory: /home/user/project
    --------------------------------------------------
    📄 script.py (1024 bytes)
    📄 data.txt (2048 bytes)
    📁 images/ (5 items)
    📁 logs/ (3 items)
    📄 config.json (512 bytes)

Method 4: Using glob for Pattern Matching

The glob module is perfect when you need to find files matching specific patterns, similar to shell wildcards.

Basic Pattern Matching

    import glob

    # Find all Python files
    python_files = glob.glob('*.py')
    print("Python files:")
    for file in python_files:
        print(f"🐍 {file}")

    # Find all image files
    image_extensions = ['*.jpg', '*.png', '*.gif', '*.bmp']
    image_files = []
    for extension in image_extensions:
        image_files.extend(glob.glob(extension))

    print("\nImage files:")
    for file in image_files:
        print(f"🖼️ {file}")

Recursive Pattern Matching

    import glob

    def find_files_by_pattern(pattern, recursive=True):
        if recursive:
            files = glob.glob(pattern, recursive=True)
        else:
            files = glob.glob(pattern)
        return files

    # Find all .txt files recursively
    txt_files = find_files_by_pattern('**/*.txt')
    print("Text files (recursive):")
    for file in txt_files:
        print(f"📝 {file}")

    # Find all log files in logs directory
    log_files = find_files_by_pattern('logs/*.log')
    print("\nLog files:")
    for file in log_files:
        print(f"📋 {file}")
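Calling glob.glob() once per extension works, but it lists the directory once for each pattern, and on platforms where matching is case-sensitive (typically Linux) it misses names such as PHOTO.JPG. As an alternative sketch, you can scan the directory once and compare each file's suffix against a set. The names IMAGE_SUFFIXES and find_by_suffix are hypothetical, chosen only for this example.

```python
from pathlib import Path

# Illustrative set of extensions; adjust to your own needs.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".gif", ".bmp"}

def find_by_suffix(directory_path, suffixes=IMAGE_SUFFIXES):
    """Return sorted names of files whose extension is in suffixes, ignoring case."""
    wanted = {s.lower() for s in suffixes}
    return sorted(
        item.name
        for item in Path(directory_path).iterdir()
        if item.is_file() and item.suffix.lower() in wanted
    )
```

This version is not recursive; swapping iterdir() for rglob('*') would extend it to subdirectories.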

Method 5: Advanced File Information Gathering

Sometimes you need more than just file names. Here’s how to get detailed file information:

    import time
    from pathlib import Path

    def get_detailed_file_info(directory_path):
        path = Path(directory_path)
        file_info = []

        for item in path.iterdir():
            if item.is_file():
                stat = item.stat()
                info = {
                    'name': item.name,
                    'size': stat.st_size,
                    'modified': time.ctime(stat.st_mtime),
                    'extension': item.suffix,
                    'full_path': str(item.absolute())
                }
                file_info.append(info)

        return file_info

    # Usage example
    files_info = get_detailed_file_info('.')
    print("Detailed file information:")
    print(f"{'Name':<20} {'Size':<10} {'Extension':<12} {'Modified':<25}")
    print("-" * 70)
    for info in files_info:
        print(f"{info['name']:<20} {info['size']:<10} {info['extension']:<12} {info['modified']:<25}")

Expected Output:

    Detailed file information:
    Name                 Size       Extension    Modified
    ----------------------------------------------------------------------
    script.py            1024       .py          Fri Aug 29 14:30:25 2025
    data.txt             2048       .txt         Fri Aug 29 12:15:10 2025
    config.json          512        .json        Fri Aug 29 16:45:30 2025

Practical Examples and Use Cases

Example 1: File Organization Script

    from collections import defaultdict
    from pathlib import Path

    def organize_files_by_extension(directory_path):
        path = Path(directory_path)
        files_by_extension = defaultdict(list)

        for file in path.iterdir():
            if file.is_file():
                # Files without a suffix are grouped under 'no_extension'
                extension = file.suffix.lower() or 'no_extension'
                files_by_extension[extension].append(file.name)

        return dict(files_by_extension)

    # Usage
    organized = organize_files_by_extension('.')
    for extension, files in organized.items():
        print(f"\n{extension.upper()} files:")
        for file in files:
            print(f" • {file}")

Example 2: Large File Finder

    from pathlib import Path

    def find_large_files(directory_path, size_limit_mb=10):
        path = Path(directory_path)
        large_files = []
        size_limit_bytes = size_limit_mb * 1024 * 1024

        for file in path.rglob('*'):
            if file.is_file():
                size_bytes = file.stat().st_size
                if size_bytes > size_limit_bytes:
                    size_mb = size_bytes / (1024 * 1024)
                    large_files.append({
                        'name': file.name,
                        'path': str(file),
                        'size_mb': round(size_mb, 2)
                    })

        return large_files

    # Find files larger than 5MB
    large_files = find_large_files('.', 5)
    if large_files:
        print("Large files found:")
        for file in large_files:
            print(f"📦 {file['name']} - {file['size_mb']} MB")
    else:
        print("No large files found.")

Performance Considerations

When working with large directories or file systems, performance becomes important. The benchmark below times three of the approaches covered so far, so you can compare them on your own machine.

Comparison of Methods

    import glob
    import os
    import time
    from pathlib import Path

    def benchmark_methods(directory_path, iterations=100):
        results = {}

        # Method 1: os.listdir
        # time.perf_counter() is a monotonic, high-resolution clock meant for timing
        start_time = time.perf_counter()
        for _ in range(iterations):
            files = os.listdir(directory_path)
        results['os.listdir'] = time.perf_counter() - start_time

        # Method 2: pathlib (also filters out directories, which adds work)
        start_time = time.perf_counter()
        for _ in range(iterations):
            path = Path(directory_path)
            files = [f.name for f in path.iterdir() if f.is_file()]
        results['pathlib'] = time.perf_counter() - start_time

        # Method 3: glob
        start_time = time.perf_counter()
        for _ in range(iterations):
            files = glob.glob(f"{directory_path}/*")
        results['glob'] = time.perf_counter() - start_time

        return results

    # Run benchmark
    print("Performance comparison (100 iterations):")
    benchmark_results = benchmark_methods('.')
    for method, time_taken in benchmark_results.items():
        print(f"{method:<12}: {time_taken:.4f} seconds")
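One method missing from the benchmark is os.scandir(), which os.walk() itself has used internally since Python 3.5. It yields DirEntry objects that usually carry the file type from the directory scan, so checks like is_file() often need no extra system call. Here is a minimal sketch, with list_files_scandir as an illustrative name:

```python
import os

def list_files_scandir(directory_path):
    """Return (name, size_in_bytes) pairs for regular files in directory_path."""
    results = []
    # The context manager closes the underlying directory handle promptly.
    with os.scandir(directory_path) as entries:
        for entry in entries:
            # is_file() can usually be answered from information gathered
            # during the directory scan itself.
            if entry.is_file():
                results.append((entry.name, entry.stat().st_size))
    return results
```

How much this helps depends on the platform and on how many entries the directory holds, so it is worth adding to the benchmark rather than assuming a speedup.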

Error Handling and Best Practices

Always implement proper error handling when working with file operations:

    import logging
    import os  # needed for os.access() below
    from pathlib import Path

    def safe_list_files(directory_path):
        try:
            path = Path(directory_path)

            if not path.exists():
                raise FileNotFoundError(f"Directory {directory_path} does not exist")

            if not path.is_dir():
                raise NotADirectoryError(f"{directory_path} is not a directory")

            files = []
            for item in path.iterdir():
                try:
                    if item.is_file():
                        files.append({
                            'name': item.name,
                            'size': item.stat().st_size,
                            'readable': os.access(item, os.R_OK)
                        })
                except PermissionError:
                    logging.warning(f"Permission denied: {item}")
                    continue

            return files

        except Exception as e:
            logging.error(f"Error listing files: {e}")
            return []

    # Usage with error handling
    files = safe_list_files('/some/directory')
    if files:
        print("Files found:")
        for file in files:
            access = "✅" if file['readable'] else "❌"
            print(f"{access} {file['name']} ({file['size']} bytes)")
    else:
        print("No files found or error occurred.")
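Error handling matters for recursive listings too. By default, os.walk() ignores errors from directories it cannot read, so a result can be silently incomplete. Its onerror parameter accepts a callback that receives the OSError. The sketch below collects those errors instead of dropping them; walk_with_errors is a hypothetical helper name.

```python
import os

def walk_with_errors(directory_path):
    """Return (files, errors): file paths found and OSErrors hit while scanning."""
    files, errors = [], []
    # os.walk() calls onerror with the OSError instance for each directory
    # it fails to list; without a callback these errors are ignored.
    for root, _dirs, names in os.walk(directory_path, onerror=errors.append):
        files.extend(os.path.join(root, name) for name in names)
    return files, errors
```

A caller can then log each entry in errors or decide that a partial listing is not acceptable.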

Interactive File Explorer Example

Here’s a complete interactive file explorer that combines all the methods we’ve discussed:

    from datetime import datetime
    from pathlib import Path

    class FileExplorer:
        def __init__(self, start_directory='.'):
            self.current_directory = Path(start_directory).resolve()

        def list_contents(self, show_hidden=False):
            """List all contents in current directory"""
            contents = []

            try:
                for item in self.current_directory.iterdir():
                    if not show_hidden and item.name.startswith('.'):
                        continue

                    stat_info = item.stat()
                    contents.append({
                        'name': item.name,
                        'type': 'dir' if item.is_dir() else 'file',
                        'size': stat_info.st_size if item.is_file() else '-',
                        'modified': datetime.fromtimestamp(stat_info.st_mtime).strftime('%Y-%m-%d %H:%M'),
                        'path': str(item)
                    })

                # Directories sort before files because 'dir' < 'file'
                return sorted(contents, key=lambda x: (x['type'], x['name'].lower()))

            except PermissionError:
                print(f"❌ Permission denied accessing {self.current_directory}")
                return []

        def display_contents(self, show_hidden=False):
            """Display directory contents in a formatted table"""
            contents = self.list_contents(show_hidden)

            if not contents:
                print("📂 Directory is empty or inaccessible")
                return

            print(f"\n📂 Contents of: {self.current_directory}")
            print("=" * 80)
            print(f"{'Type':<6} {'Name':<30} {'Size':<12} {'Modified':<20}")
            print("-" * 80)

            for item in contents:
                icon = "📁" if item['type'] == 'dir' else "📄"
                size_str = f"{item['size']} bytes" if item['size'] != '-' else item['size']
                print(f"{icon:<6} {item['name']:<30} {size_str:<12} {item['modified']:<20}")

        def find_files_by_extension(self, extension):
            """Find files with specific extension recursively"""
            files = list(self.current_directory.rglob(f"*.{extension}"))
            return [str(f.relative_to(self.current_directory)) for f in files if f.is_file()]

        def get_directory_stats(self):
            """Get statistics about current directory"""
            total_files = 0
            total_dirs = 0
            total_size = 0

            for item in self.current_directory.rglob('*'):
                if item.is_file():
                    total_files += 1
                    total_size += item.stat().st_size
                elif item.is_dir():
                    total_dirs += 1

            return {
                'files': total_files,
                'directories': total_dirs,
                'total_size': total_size,
                'size_mb': round(total_size / (1024 * 1024), 2)
            }

    # Usage example
    explorer = FileExplorer('.')
    explorer.display_contents()

    # Show statistics
    stats = explorer.get_directory_stats()
    print("\n📊 Directory Statistics:")
    print(f"Files: {stats['files']}")
    print(f"Directories: {stats['directories']}")
    print(f"Total Size: {stats['size_mb']} MB")

    # Find specific files
    python_files = explorer.find_files_by_extension('py')
    if python_files:
        print("\n🐍 Python files found:")
        for file in python_files[:5]:  # Show first 5
            print(f" • {file}")

Conclusion

Mastering file and directory operations in Python opens up endless possibilities for automation and data processing. Here’s a quick summary of when to use each method:

- os.listdir() – Simple, fast listing for basic needs

- os.walk() – Recursive directory traversal

- pathlib – Modern, object-oriented approach (recommended)

- glob – Pattern matching and wildcards

- Custom functions – Complex filtering and processing

Remember to always implement proper error handling and consider performance implications when working with large file systems. The pathlib module is generally recommended for new projects due to its clean API and powerful features.

Whether you’re building a file manager, processing datasets, or automating file operations, these techniques will serve as a solid foundation for your Python file handling needs.