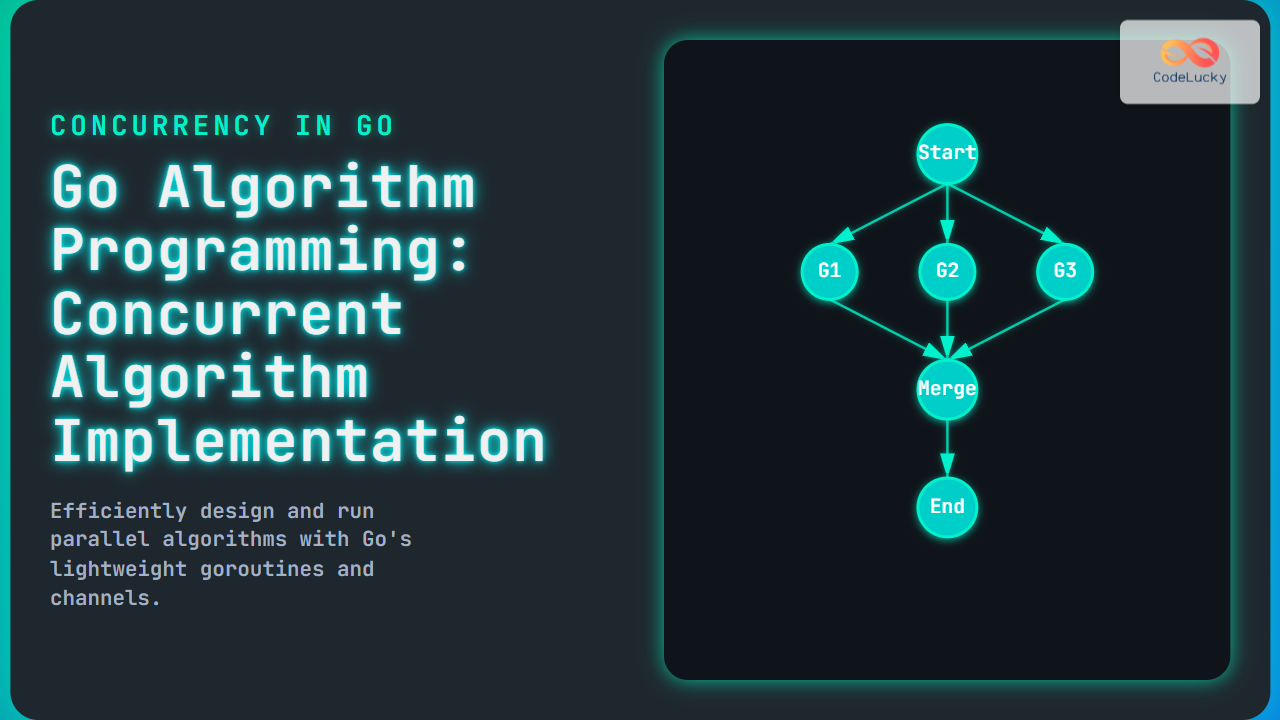

Concurrency has become one of the most important aspects of modern computing, where multiple tasks must be executed efficiently without blocking each other. In algorithm design, concurrent implementations improve responsiveness, throughput, and resource utilization. Go (also called Golang) is one of the most powerful languages for writing concurrent algorithms, thanks to its goroutines and channels, which provide lightweight and highly efficient concurrency primitives.

This article explores concurrent algorithm implementation in Go, detailing concepts, examples, and best practices to achieve safe and efficient execution of parallel tasks.

What is Concurrency in Algorithms?

Concurrency refers to the ability of an algorithm or system to handle multiple tasks simultaneously. In algorithms, concurrency helps in optimizing performance when working with I/O-bound or CPU-intensive processes. Unlike parallelism, which strictly means executing multiple operations at the same time, concurrency focuses more on managing multiple tasks efficiently to give the illusion of simultaneous execution.

Why Use Go for Concurrent Algorithms?

Go provides an elegant solution for concurrency through goroutines and channels:

- Goroutines: Lightweight threads managed by the Go runtime. Starting a goroutine takes minimal memory overhead compared to traditional OS threads.

- Channels: Safe communication mechanisms that allow goroutines to exchange data without explicit locks.

- Built-in Synchronization: Go provides tools like

sync.Mutexandsync.WaitGroupfor safely managing shared resources.

Basic Example: Concurrent Sum of an Array

Let’s start with a simple algorithm that computes the sum of an array using multiple goroutines to speed up computation.

package main

import (

"fmt"

"sync"

)

func partialSum(nums []int, wg *sync.WaitGroup, resultChan chan int) {

defer wg.Done()

sum := 0

for _, n := range nums {

sum += n

}

resultChan <- sum

}

func main() {

numbers := []int{1, 2, 3, 4, 5, 6, 7, 8, 9, 10}

mid := len(numbers) / 2

var wg sync.WaitGroup

resultChan := make(chan int, 2)

wg.Add(2)

go partialSum(numbers[:mid], &wg, resultChan)

go partialSum(numbers[mid:], &wg, resultChan)

wg.Wait()

close(resultChan)

total := 0

for r := range resultChan {

total += r

}

fmt.Println("Total Sum:", total)

}

Visual Output:

Array: [1 2 3 4 5 6 7 8 9 10] Left Half Sum: 15 Right Half Sum: 40 Total Sum: 55

Concurrent Sorting: Parallel Merge Sort

Merge Sort, a divide-and-conquer algorithm, benefits greatly from concurrency. Go allows us to assign recursive sorts to different goroutines, making sorting faster on multicore systems.

package main

import (

"fmt"

"sync"

)

func merge(left, right []int) []int {

result := []int{}

i, j := 0, 0

for i < len(left) && j < len(right) {

if left[i] < right[j] {

result = append(result, left[i])

i++

} else {

result = append(result, right[j])

j++

}

}

result = append(result, left[i:]...)

result = append(result, right[j:]...)

return result

}

func parallelMergeSort(arr []int, wg *sync.WaitGroup) []int {

defer wg.Done()

if len(arr) <= 1 {

return arr

}

mid := len(arr) / 2

var left, right []int

var wgl sync.WaitGroup

wgl.Add(2)

go func() {

left = parallelMergeSort(arr[:mid], &wgl)

}()

go func() {

right = parallelMergeSort(arr[mid:], &wgl)

}()

wgl.Wait()

return merge(left, right)

}

func main() {

arr := []int{9, 3, 7, 5, 6, 2, 8, 1, 4}

var wg sync.WaitGroup

wg.Add(1)

sorted := parallelMergeSort(arr, &wg)

wg.Wait()

fmt.Println("Sorted Array:", sorted)

}

Example Output:

Original: [9 3 7 5 6 2 8 1 4] Sorted Array: [1 2 3 4 5 6 7 8 9]

Synchronization Challenges in Concurrent Algorithms

Concurrent algorithms in Go face common challenges:

- Race Conditions: Multiple goroutines writing or reading shared variables simultaneously.

- Deadlocks: Goroutines waiting indefinitely on resources locked by each other.

- Starvation: Some tasks never get CPU time due to scheduling issues.

Go provides sync.Mutex, sync.RWMutex, and sync.WaitGroup as tools to mitigate these problems, alongside channels for safe communication.

Interactive Concurrency Demo

You can try running the following Go code in an online Go playground to see concurrent workers process tasks:

package main

import (

"fmt"

"math/rand"

"sync"

"time"

)

func worker(id int, jobs <-chan int, wg *sync.WaitGroup) {

defer wg.Done()

for j := range jobs {

fmt.Printf("Worker %d processing job %d\n", id, j)

time.Sleep(time.Duration(rand.Intn(500)) * time.Millisecond)

}

}

func main() {

jobs := make(chan int, 5)

var wg sync.WaitGroup

for w := 1; w <= 3; w++ {

wg.Add(1)

go worker(w, jobs, &wg)

}

for j := 1; j <= 5; j++ {

jobs <- j

}

close(jobs)

wg.Wait()

fmt.Println("All jobs completed!")

}

Possible Output:

Worker 1 processing job 1 Worker 2 processing job 2 Worker 3 processing job 3 Worker 2 processing job 4 Worker 1 processing job 5 All jobs completed!

Best Practices for Concurrent Algorithms in Go

- Use

channelsfor communication instead of shared memory wherever possible. - Minimize the use of

Mutexto avoid complex deadlock scenarios. - Test with Go’s built-in race detector:

go run -race yourprogram.go. - Benchmark performance improvements versus sequential execution.

- Keep goroutines lightweight and avoid spawning unbounded numbers of them.

Conclusion

Concurrent algorithm implementation in Go is streamlined by its goroutines and channels, enabling developers to write highly scalable, efficient, and safe concurrency-powered algorithms. From simple sum calculations to advanced algorithms like parallel sorting, Go provides elegant primitives for real-world algorithm design challenges. By following best practices and leveraging Go’s runtime features, developers can avoid common concurrency pitfalls and build highly performant systems.