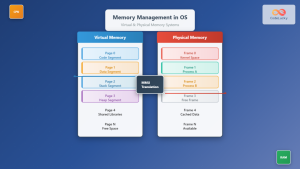

File system caching is a fundamental performance optimization technique in modern operating systems that dramatically reduces disk I/O operations by storing frequently accessed data in memory. This comprehensive guide explores the two primary caching mechanisms: buffer cache and page cache, their differences, implementation details, and real-world applications.

Understanding File System Cache Fundamentals

File system cache acts as an intermediary layer between applications and storage devices, leveraging the principle of locality of reference. When applications request data, the operating system first checks if the data exists in cache memory before accessing slower storage devices.

Key Benefits of File System Caching

- Reduced Latency: Memory access (nanoseconds) vs disk access (milliseconds)

- Improved Throughput: Multiple requests served from cache simultaneously

- Lower CPU Utilization: Fewer context switches for I/O operations

- Enhanced User Experience: Faster application response times

Buffer Cache: Block-Level Caching Mechanism

Buffer cache operates at the block level, managing fixed-size blocks of data typically ranging from 512 bytes to 8KB. This caching layer sits between the file system and block device drivers, providing efficient storage and retrieval of disk blocks.

Buffer Cache Architecture

Buffer Cache Implementation Example

// Simplified buffer cache structure in C

struct buffer_head {

unsigned long b_blocknr; // Block number

char *b_data; // Pointer to data

struct buffer_head *b_next; // Hash chain pointer

struct buffer_head *b_prev; // LRU chain pointer

int b_count; // Reference count

int b_state; // Buffer state flags

};

// Buffer states

#define BH_Uptodate 0 // Buffer contains valid data

#define BH_Dirty 1 // Buffer needs writing to disk

#define BH_Lock 2 // Buffer is locked

#define BH_Req 3 // Buffer has pending request

Buffer Cache Operations

The buffer cache implements several key operations for efficient data management:

1. Buffer Allocation and Lookup

// Example of buffer lookup function

struct buffer_head *getblk(dev_t device, int block, int size) {

struct buffer_head *bh;

// Hash lookup for existing buffer

bh = get_hash_table(device, block, size);

if (bh) {

bh->b_count++; // Increment reference count

return bh;

}

// Allocate new buffer if not found

bh = get_unused_buffer_head();

bh->b_blocknr = block;

bh->b_size = size;

bh->b_dev = device;

// Add to hash table

insert_into_hash_queue(bh);

return bh;

}

2. Write-Back Strategy

Buffer cache implements write-back caching where dirty buffers are written to disk asynchronously:

// Periodic sync operation

void sync_buffers(void) {

struct buffer_head *bh;

for (bh = lru_list; bh; bh = bh->b_next_lru) {

if (buffer_dirty(bh) && !buffer_locked(bh)) {

ll_rw_block(WRITE, 1, &bh);

}

}

}

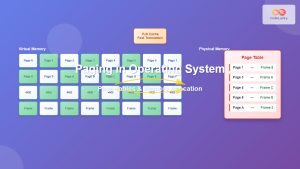

Page Cache: File-Level Caching System

Page cache operates at the file level, managing entire pages of file data (typically 4KB on most systems). It’s more sophisticated than buffer cache, supporting memory-mapped files and providing better integration with virtual memory systems.

Page Cache Data Structures

// Linux page cache structures

struct address_space {

struct inode *host; // Associated inode

struct radix_tree_root page_tree; // Pages indexed by offset

spinlock_t tree_lock; // Protects page_tree

unsigned int i_mmap_writable; // VM_SHARED mappings

struct rb_root i_mmap; // VM areas mapped to this file

atomic_t i_mmap_writable; // Number of writable mappings

unsigned long nrpages; // Number of pages

};

struct page {

unsigned long flags; // Page flags (dirty, locked, etc.)

atomic_t _count; // Reference count

struct address_space *mapping; // Associated address space

pgoff_t index; // Page offset in file

struct list_head lru; // LRU list linkage

void *private; // Filesystem private data

};

Page Cache Lookup and Allocation

// Find or create a page in the page cache

struct page *find_or_create_page(struct address_space *mapping,

pgoff_t index, gfp_t gfp_mask) {

struct page *page;

// Look for existing page

page = find_get_page(mapping, index);

if (page)

return page;

// Allocate new page

page = __page_cache_alloc(gfp_mask);

if (!page)

return NULL;

// Add to page cache

if (add_to_page_cache_lru(page, mapping, index, gfp_mask)) {

put_page(page);

return NULL;

}

return page;

}

Key Differences Between Buffer Cache and Page Cache

| Aspect | Buffer Cache | Page Cache |

|---|---|---|

| Granularity | Block-level (512B – 8KB) | Page-level (4KB typical) |

| Primary Use | Metadata and raw device access | File content caching |

| Integration | Block layer integration | Virtual memory integration |

| Memory Mapping | Not supported | Fully supported |

| Indexing | Hash tables | Radix trees |

Modern Unified Cache Implementation

Contemporary operating systems like Linux have largely unified buffer cache and page cache into a single coherent system. This unified approach eliminates redundancy and provides better memory utilization.

Unified Cache Benefits

- Memory Efficiency: No duplicate caching of the same data

- Coherency: Single source of truth for cached data

- Simplified Management: Unified replacement policies

- Better Performance: Reduced memory fragmentation

Example: Linux Unified Cache Operation

// Reading from a file through unified cache

ssize_t generic_file_read_iter(struct kiocb *iocb, struct iov_iter *iter) {

struct file *file = iocb->ki_filp;

struct address_space *mapping = file->f_mapping;

struct page *page;

loff_t pos = iocb->ki_pos;

while (iov_iter_count(iter)) {

pgoff_t index = pos >> PAGE_SHIFT;

// Find page in cache

page = find_get_page(mapping, index);

if (!page) {

// Page fault - read from storage

page = page_cache_read(file, index);

}

// Copy data to user space

copy_page_to_iter(page, offset, bytes, iter);

put_page(page);

pos += bytes;

}

return bytes_copied;

}

Cache Replacement Algorithms

Both buffer cache and page cache implement sophisticated replacement algorithms to manage limited memory resources efficiently.

LRU (Least Recently Used) Implementation

// Simplified LRU implementation for page cache

struct lru_cache {

struct list_head active_list; // Recently accessed pages

struct list_head inactive_list; // Less recently accessed pages

spinlock_t lru_lock; // Protects LRU lists

unsigned long nr_active; // Count of active pages

unsigned long nr_inactive; // Count of inactive pages

};

// Move page to front of active list

void mark_page_accessed(struct page *page) {

if (PageActive(page)) {

// Already active, move to front

list_move(&page->lru, &active_list);

} else if (PageReferenced(page)) {

// Promote from inactive to active

SetPageActive(page);

ClearPageReferenced(page);

list_move(&page->lru, &active_list);

nr_active++;

nr_inactive--;

} else {

// First access, mark as referenced

SetPageReferenced(page);

}

}

Performance Monitoring and Optimization

Understanding cache performance is crucial for system optimization. Here are key metrics and monitoring techniques:

Cache Performance Metrics

# Monitor cache hit rates and memory usage

cat /proc/meminfo | grep -E "(Cached|Buffers|Dirty|Writeback)"

Buffers: 1048576 kB

Cached: 8388608 kB

SwapCached: 0 kB

Dirty: 12345 kB

Writeback: 0 kB

# Monitor I/O statistics

iostat -x 1

Device r/s w/s rkB/s wkB/s await %util

sda 10.2 5.1 512.4 204.8 2.1 1.2

Optimization Techniques

- Prefetching: Anticipatory loading of data

- Write Coalescing: Combining multiple small writes

- Readahead: Sequential read optimization

- Memory Pressure Management: Dynamic cache size adjustment

Example: Implementing Read-ahead

// Simplified readahead implementation

void page_cache_readahead(struct address_space *mapping,

struct file_ra_state *ra,

struct file *file,

pgoff_t offset,

unsigned long size) {

unsigned long max_ahead = ra->ra_pages;

pgoff_t start = offset;

pgoff_t end = offset + size;

// Determine readahead window

if (ra->prev_pos + 1 == offset) {

// Sequential access - increase readahead

ra->size = min(ra->size * 2, max_ahead);

} else {

// Random access - reset readahead

ra->size = get_init_ra_size(size, max_ahead);

}

// Trigger asynchronous reads

for (pgoff_t page_offset = start;

page_offset < end;

page_offset++) {

if (!find_get_page(mapping, page_offset)) {

__do_page_cache_readahead(mapping, file,

page_offset, 1, 0);

}

}

ra->prev_pos = offset;

}

Real-World Applications and Use Cases

File system caching plays critical roles in various scenarios:

Database Systems

Database management systems rely heavily on caching for performance:

// Database buffer pool management

struct buffer_pool {

struct hash_table *page_hash; // Quick page lookup

struct lru_list *replacement; // Replacement policy

struct page_frame *frames; // Physical page frames

size_t pool_size; // Total buffer pool size

atomic_t hit_count; // Cache hit statistics

atomic_t miss_count; // Cache miss statistics

};

// Buffer pool hit ratio calculation

double get_hit_ratio(struct buffer_pool *pool) {

long hits = atomic_read(&pool->hit_count);

long misses = atomic_read(&pool->miss_count);

return (double)hits / (hits + misses) * 100.0;

}

Web Servers

Web servers benefit from caching static content:

// Web server file caching example

struct file_cache_entry {

char *filename;

char *content;

size_t content_length;

time_t last_modified;

time_t cached_time;

int reference_count;

};

// Serve file with caching

int serve_cached_file(const char *filename, int client_socket) {

struct file_cache_entry *entry = find_cached_file(filename);

if (entry && is_cache_valid(entry)) {

// Cache hit - serve from memory

send(client_socket, entry->content,

entry->content_length, 0);

entry->reference_count++;

return 0;

}

// Cache miss - read from disk and cache

entry = load_and_cache_file(filename);

if (entry) {

send(client_socket, entry->content,

entry->content_length, 0);

return 0;

}

return -1; // File not found

}

Advanced Cache Synchronization

Maintaining cache coherency across multiple processes and systems requires sophisticated synchronization mechanisms:

// Cache coherency protocol implementation

enum cache_state {

CACHE_INVALID = 0,

CACHE_SHARED = 1,

CACHE_MODIFIED = 2,

CACHE_EXCLUSIVE = 3

};

struct cache_line {

void *data;

size_t size;

enum cache_state state;

atomic_t reference_count;

spinlock_t state_lock;

};

// Invalidate cache entry across all caches

void invalidate_cache_entry(struct cache_line *line) {

spin_lock(&line->state_lock);

if (line->state == CACHE_MODIFIED) {

// Write back dirty data

write_back_to_storage(line->data, line->size);

}

line->state = CACHE_INVALID;

spin_unlock(&line->state_lock);

// Notify other cache instances

broadcast_invalidation(line);

}

Troubleshooting Common Cache Issues

Understanding and resolving cache-related problems is essential for system administrators:

Cache Thrashing

# Detect cache thrashing patterns

vmstat 1 10 | awk '

/procs/ { print; next }

/r b/ { print; next }

{

if ($9 > 1000) { # High wait I/O

print $0 " <- High I/O Wait"

} else {

print

}

}'

# Monitor page fault rates

sar -B 1 5

02:30:01 PM pgpgin/s pgpgout/s fault/s majflt/s pgfree/s

02:30:02 PM 245.50 12.87 1234.65 45.21 987.34

Memory Pressure Debugging

# Check memory allocation failures

dmesg | grep -i "out of memory\|killed process"

# Monitor slab allocator for cache objects

cat /proc/slabinfo | head -20

slabinfo - version: 2.1

# name

buffer_head 15234 15240 104 39

dentry 45678 45720 192 21

inode_cache 12345 12360 608 6

Future Trends in File System Caching

Emerging technologies and trends shaping the future of file system caching include:

- NVMe and Persistent Memory: Blurring lines between storage and memory

- Machine Learning: Intelligent prefetching and replacement policies

- Distributed Caching: Cache coherency across network-attached storage

- Container-Aware Caching: Optimizations for containerized workloads

Conclusion

File system caching through buffer cache and page cache mechanisms represents one of the most critical performance optimizations in modern operating systems. Understanding these systems enables developers and system administrators to build more efficient applications and optimize system performance effectively.

The evolution from separate buffer and page caches to unified caching systems demonstrates the continuous refinement of operating system design. As storage technologies advance and workload patterns evolve, caching strategies will continue to adapt, ensuring optimal balance between performance, resource utilization, and data consistency.

Mastering these concepts provides a solid foundation for tackling complex system performance challenges and designing scalable applications that effectively leverage system resources.