Dynamic Programming (DP) is one of the most powerful techniques in computer science and competitive programming. It enables solving complex problems by breaking them down into smaller overlapping subproblems and storing their results to avoid redundant calculations. With its applications spanning from shortest path algorithms to sequence alignment and resource optimization, DP is a must-learn strategy for effective problem-solving.

What is Dynamic Programming?

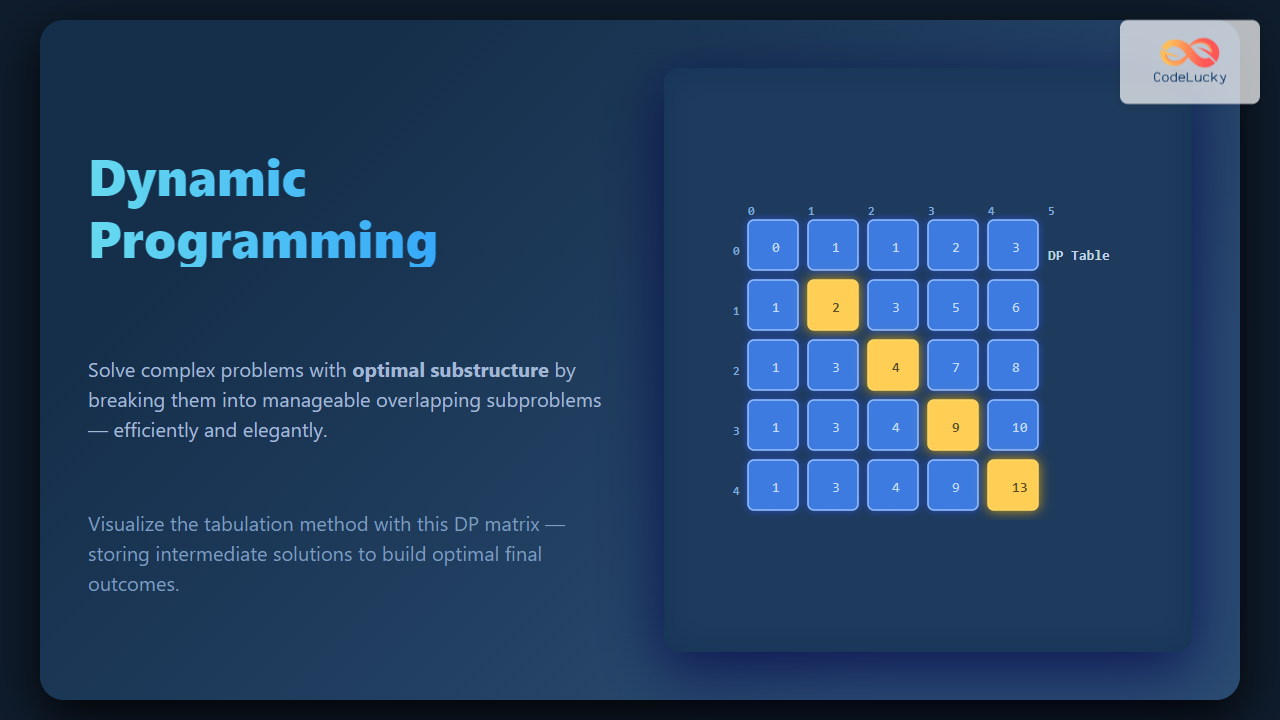

Dynamic Programming is an algorithmic paradigm that optimizes recursive solutions. Instead of recomputing the results of subproblems every time they are needed, DP stores them (via memoization or tabulation) so that each distinct subproblem is solved only once.

Two key properties make a problem suitable for Dynamic Programming:

- Optimal Substructure: The optimal solution to a problem can be built from optimal solutions of its subproblems.

- Overlapping Subproblems: The same subproblems recur multiple times during the computation.

Dynamic Programming Approaches

There are two main ways to implement a DP solution:

- Top-Down (Memoization): Start with the original problem and recursively solve subproblems. Store each result in a memo (cache) the first time it is computed, and reuse it on later calls.

- Bottom-Up (Tabulation): Iteratively build solutions of smaller subproblems first, then use them to construct solutions to larger problems.
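
To make the contrast concrete, here is a minimal sketch that solves one small problem both ways. The problem (counting right/down paths across a grid) and the function names `grid_paths_top_down` and `grid_paths_bottom_up` are illustrative assumptions, not part of the original article; both versions assume `rows` and `cols` are at least 1.

```python
from functools import lru_cache


def grid_paths_top_down(rows, cols):
    """Count right/down paths across a rows x cols grid via memoized recursion."""

    @lru_cache(maxsize=None)  # the memo: each (r, c) is computed once
    def paths(r, c):
        # Only one way to reach any cell in the first row or first column.
        if r == 0 or c == 0:
            return 1
        # A cell is reached from the cell above or the cell to the left.
        return paths(r - 1, c) + paths(r, c - 1)

    return paths(rows - 1, cols - 1)


def grid_paths_bottom_up(rows, cols):
    """Same recurrence, filled iteratively from the smallest subproblems."""
    dp = [[1] * cols for _ in range(rows)]
    for r in range(1, rows):
        for c in range(1, cols):
            dp[r][c] = dp[r - 1][c] + dp[r][c - 1]
    return dp[rows - 1][cols - 1]


print(grid_paths_top_down(3, 3))   # 6
print(grid_paths_bottom_up(3, 3))  # 6
```

Both functions use the same recurrence. The top-down version only visits the subproblems the recursion actually reaches, while the bottom-up version avoids recursion depth limits and makes the fill order explicit.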

Classic Example: Fibonacci Sequence

The Fibonacci sequence is one of the simplest examples that highlights overlapping subproblems and optimization with DP.

    # Fibonacci using Dynamic Programming (Tabulation)
    def fibonacci(n):
        if n < 2:  # base cases; also avoids indexing dp[1] when n == 0
            return n
        dp = [0] * (n + 1)
        dp[0], dp[1] = 0, 1
        for i in range(2, n + 1):
            dp[i] = dp[i - 1] + dp[i - 2]
        return dp[n]

    print(fibonacci(10))  # Output: 55

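Without DP, the naive recursive Fibonacci takes exponential time because it recomputes the same values again and again. With DP, each value from 0 to n is computed once, so the running time drops to O(n).

The recursion tree of the naive version shows the redundancy: a naive recursive call for n = 5 evaluates the n = 3 case twice and the n = 2 case three times. DP eliminates these redundancies.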
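
One way to see the redundancy is to count function calls. The sketch below is an illustration only; the helper name `count_calls` and its inner functions are hypothetical and do not appear in the original article.

```python
def count_calls(n):
    """Return (naive_calls, memoized_calls) for computing the n-th Fibonacci number."""
    calls = {"naive": 0, "memo": 0}

    def fib_naive(k):
        calls["naive"] += 1
        if k < 2:
            return k
        return fib_naive(k - 1) + fib_naive(k - 2)

    memo = {}

    def fib_memo(k):
        calls["memo"] += 1
        if k < 2:
            return k
        if k not in memo:  # compute each value only the first time it is needed
            memo[k] = fib_memo(k - 1) + fib_memo(k - 2)
        return memo[k]

    # Both versions must agree on the result.
    assert fib_naive(n) == fib_memo(n)
    return calls["naive"], calls["memo"]


print(count_calls(10))  # (177, 19)
```

The naive call count grows exponentially with n, while the memoized count grows linearly.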

Real-World DP Problem: Knapsack

The 0/1 Knapsack Problem asks: given items with weights and values, what is the maximum value you can carry in a bag of limited capacity?

    def knapsack(weights, values, capacity):
        n = len(weights)
        # dp[i][w] = best value using the first i items with capacity w
        dp = [[0] * (capacity + 1) for _ in range(n + 1)]
        for i in range(1, n + 1):
            for w in range(1, capacity + 1):
                if weights[i - 1] <= w:
                    # Either take item i-1 or skip it, whichever is better
                    dp[i][w] = max(values[i - 1] + dp[i - 1][w - weights[i - 1]], dp[i - 1][w])
                else:
                    # Item i-1 does not fit in capacity w
                    dp[i][w] = dp[i - 1][w]
        return dp[n][capacity]

    weights = [1, 3, 4, 5]
    values = [1, 4, 5, 7]
    capacity = 7
    print(knapsack(weights, values, capacity))  # Output: 9
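
The table holds more than the final value: walking it backwards reveals which items were chosen. The following is a sketch under the same recurrence; the function name `knapsack_with_items` is an assumption introduced here for illustration.

```python
def knapsack_with_items(weights, values, capacity):
    """Return (best_value, chosen_item_indices) for the 0/1 knapsack problem."""
    n = len(weights)
    dp = [[0] * (capacity + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        for w in range(capacity + 1):
            dp[i][w] = dp[i - 1][w]  # option 1: skip item i-1
            if weights[i - 1] <= w:  # option 2: take it, if it fits
                dp[i][w] = max(dp[i][w], values[i - 1] + dp[i - 1][w - weights[i - 1]])

    # Backtrack: if the value changed when item i-1 became available, it was taken.
    chosen = []
    w = capacity
    for i in range(n, 0, -1):
        if dp[i][w] != dp[i - 1][w]:
            chosen.append(i - 1)
            w -= weights[i - 1]
    chosen.reverse()
    return dp[n][capacity], chosen


print(knapsack_with_items([1, 3, 4, 5], [1, 4, 5, 7], 7))  # (9, [1, 2])
```

For the sample input, the optimal load is the items with weights 3 and 4 (indices 1 and 2), which exactly fill the capacity of 7.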

Longest Common Subsequence (LCS)

The LCS problem finds the longest subsequence common to two sequences. It has applications in file comparison tools, DNA sequence analysis, and version control systems.

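    def lcs(X, Y):
        m, n = len(X), len(Y)
        # dp[i][j] = length of the LCS of X[:i] and Y[:j]
        dp = [[0] * (n + 1) for _ in range(m + 1)]
        for i in range(1, m + 1):
            for j in range(1, n + 1):
                if X[i - 1] == Y[j - 1]:
                    # Matching characters extend the LCS of both prefixes
                    dp[i][j] = 1 + dp[i - 1][j - 1]
                else:
                    # Otherwise drop one character from X or from Y
                    dp[i][j] = max(dp[i - 1][j], dp[i][j - 1])
        return dp[m][n]

    print(lcs("ABCBDAB", "BDCAB"))  # Output: 4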
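
Applications such as file comparison usually need the subsequence itself, not just its length. A possible sketch, assuming the same table as above and a hypothetical function name `lcs_string`, backtracks from the bottom-right corner:

```python
def lcs_string(X, Y):
    """Return one longest common subsequence of X and Y as a string."""
    m, n = len(X), len(Y)
    dp = [[0] * (n + 1) for _ in range(m + 1)]
    for i in range(1, m + 1):
        for j in range(1, n + 1):
            if X[i - 1] == Y[j - 1]:
                dp[i][j] = 1 + dp[i - 1][j - 1]
            else:
                dp[i][j] = max(dp[i - 1][j], dp[i][j - 1])

    # Walk back from dp[m][n], collecting matched characters in reverse order.
    out = []
    i, j = m, n
    while i > 0 and j > 0:
        if X[i - 1] == Y[j - 1]:
            out.append(X[i - 1])
            i -= 1
            j -= 1
        elif dp[i - 1][j] >= dp[i][j - 1]:
            i -= 1
        else:
            j -= 1
    return "".join(reversed(out))


result = lcs_string("ABCBDAB", "BDCAB")
print(len(result))  # 4
```

Several subsequences of the same maximal length may exist; which one is returned depends on how ties are broken in the backtracking step.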

DP Optimization Techniques

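- Space Optimization: Reduce storage from a 2D table to a 1D array when each row depends only on the previous row.

- Bitmask DP: Encode a subset as the bits of an integer so that subsets can serve as DP states. Useful in subset problems (e.g., traveling salesman).

- Divide & Conquer DP: Speeds up transitions that minimize over a split point when the optimal split point is monotonic, typically reducing each layer from O(n^2) to O(n log n).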
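
As an illustration of the first technique, the 0/1 knapsack table can be collapsed to a single row. This is a sketch, and the name `knapsack_1d` is an assumption introduced here; the key detail is iterating capacities from high to low so that each item is used at most once.

```python
def knapsack_1d(weights, values, capacity):
    """0/1 knapsack using O(capacity) space instead of O(n * capacity)."""
    dp = [0] * (capacity + 1)
    for weight, value in zip(weights, values):
        # Go downward so dp[w - weight] still refers to the previous item's row.
        for w in range(capacity, weight - 1, -1):
            dp[w] = max(dp[w], value + dp[w - weight])
    return dp[capacity]


print(knapsack_1d([1, 3, 4, 5], [1, 4, 5, 7], 7))  # 9
```

Iterating upward instead would let the same item be counted several times, which solves the unbounded knapsack variant rather than the 0/1 one.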

Example: Coin Change Problem

The coin change problem asks: how many ways can you make change for a given amount using a set of coin denominations, with an unlimited supply of each coin?

    def coin_change(coins, amount):
        # dp[i] = number of ways to make amount i
        dp = [0] * (amount + 1)
        dp[0] = 1  # one way to make 0: use no coins
        for coin in coins:
            for i in range(coin, amount + 1):
                dp[i] += dp[i - coin]
        return dp[amount]

    print(coin_change([1, 2, 5], 5))  # Output: 4

This example illustrates how a bottom-up DP solution accumulates possible combinations for a target value.
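
The loop order in this solution matters. With coins in the outer loop, each combination is counted once regardless of order; swapping the loops counts ordered sequences instead. The sketch below contrasts the two; the function names are illustrative assumptions.

```python
def count_combinations(coins, amount):
    """Ways to make amount where order does not matter (1+2+2 == 2+1+2)."""
    dp = [0] * (amount + 1)
    dp[0] = 1
    for coin in coins:  # coins outer: each coin is introduced once
        for i in range(coin, amount + 1):
            dp[i] += dp[i - coin]
    return dp[amount]


def count_ordered_sequences(coins, amount):
    """Ways to make amount where order matters (1+2+2 != 2+1+2)."""
    dp = [0] * (amount + 1)
    dp[0] = 1
    for i in range(1, amount + 1):  # amounts outer: any coin can come last
        for coin in coins:
            if coin <= i:
                dp[i] += dp[i - coin]
    return dp[amount]


print(count_combinations([1, 2, 5], 5))       # 4
print(count_ordered_sequences([1, 2, 5], 5))  # 9
```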

When to Use Dynamic Programming

Apply DP when:

- The problem exhibits overlapping subproblems.

- It has an optimal substructure.

- Recursive solutions are inefficient (often exponential complexity).

Conclusion

Dynamic Programming can turn many brute-force recursions that take exponential time into polynomial (or, as with knapsack, pseudo-polynomial) time computations by leveraging overlapping subproblems and optimal substructure. From sequence processing to optimization tasks, DP underpins some of the most efficient algorithms in computer science. Mastering it helps developers and competitive programmers tackle problems that would otherwise be impractical to solve by exhaustive search.