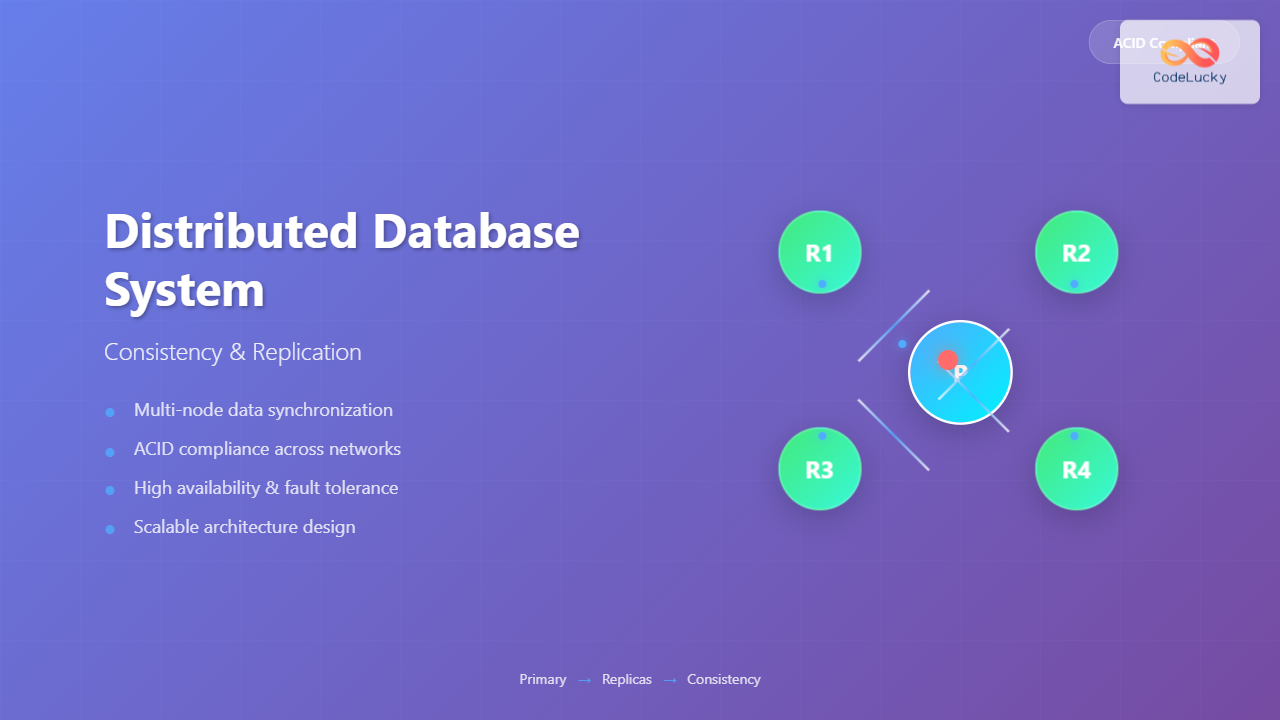

Distributed database systems have become the backbone of modern applications, enabling organizations to handle massive amounts of data across multiple nodes while ensuring high availability and performance. At the heart of these systems lie two critical concepts: consistency and replication. Understanding how these mechanisms work together is essential for building robust, scalable applications that can serve millions of users globally.

What is a Distributed Database System?

A distributed database system is a collection of multiple, logically interrelated databases distributed over a computer network. Unlike traditional centralized databases, distributed systems spread data across multiple physical locations, providing several advantages:

- Scalability: Handle increased load by adding more nodes

- Fault tolerance: Continue operating even when some nodes fail

- Geographic distribution: Reduce latency by placing data closer to users

- Performance: Parallel processing capabilities across multiple machines

Understanding Database Consistency

Consistency in distributed databases refers to the property that ensures all nodes see the same data at the same time. When a write operation occurs, consistency mechanisms determine how and when this change becomes visible across all replicas.

Types of Consistency Models

Strong Consistency

Strong consistency guarantees that once a write operation completes, all subsequent read operations will return the updated value. This is the most restrictive form of consistency but provides the strongest guarantees.

-- Example: Banking transaction with strong consistency
BEGIN TRANSACTION;
UPDATE accounts SET balance = balance - 100 WHERE id = 'user123';
UPDATE accounts SET balance = balance + 100 WHERE id = 'user456';
COMMIT;
-- All nodes must confirm the update before transaction completes

Eventual Consistency

Eventual consistency guarantees that if no new updates are made to a data item, eventually all replicas will converge to the same value. This model allows for temporary inconsistencies but ensures convergence over time.

// Example: Social media post with eventual consistency
const post = {
  id: 'post123',
  content: 'Hello World!',
  timestamp: Date.now(),
  author: 'user123'
};

// Write to primary node
await primaryDB.insert('posts', post);

// Asynchronously replicate to other nodes
replicateAsync(post);

// Users in different regions might see the post at different times

Weak Consistency

Weak consistency provides no guarantees about when all replicas will be consistent. Applications must handle potential inconsistencies at the application level.

ACID Properties in Distributed Systems

Traditional ACID properties become more complex in distributed environments:

- Atomicity: Ensuring all-or-nothing execution across multiple nodes

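- Consistency: Preserving integrity constraints when a transaction touches data on several nodes (a different notion from the replica consistency described above)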

- Isolation: Managing concurrent transactions across the network

- Durability: Guaranteeing persistence even with node failures
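Atomicity across nodes is commonly achieved with a two-phase commit. The following is a minimal in-memory sketch rather than a production protocol: the `Participant` class and its `can_commit` flag are hypothetical names introduced here for illustration, and coordinator crash recovery is left out.

```python
from dataclasses import dataclass, field


@dataclass
class Participant:
    """Hypothetical node that can stage a change, then commit or roll it back."""
    name: str
    can_commit: bool = True  # set to False to simulate a node that votes "no"
    committed: list = field(default_factory=list)
    staged: object = None

    def prepare(self, change) -> bool:
        # Phase 1: stage the change and vote yes or no.
        if not self.can_commit:
            return False
        self.staged = change
        return True

    def commit(self) -> None:
        # Phase 2 (all voted yes): make the staged change permanent.
        self.committed.append(self.staged)
        self.staged = None

    def rollback(self) -> None:
        # Phase 2 (any voted no): discard the staged change.
        self.staged = None


def two_phase_commit(participants, change) -> bool:
    """Return True if every participant committed, False if all rolled back."""
    votes = [p.prepare(change) for p in participants]
    if all(votes):
        for p in participants:
            p.commit()
        return True
    for p in participants:
        p.rollback()
    return False
```

A single "no" vote is enough to roll back every participant, which is what gives the all-or-nothing behavior.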

Database Replication Strategies

Replication involves maintaining multiple copies of data across different nodes to ensure availability and fault tolerance. Different replication strategies offer various trade-offs between consistency, availability, and performance.

Master-Slave Replication

In master-slave replication, one node (master) handles all write operations, while slave nodes handle read operations and receive updates from the master.

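import random


class MasterSlaveReplication:
    def __init__(self):
        # MasterNode and SlaveNode are placeholder classes for this sketch
        self.master = MasterNode()
        self.slaves = [SlaveNode() for _ in range(3)]

    def write(self, data):
        # All writes go to the master
        self.master.write(data)
        # Forward the change to each slave. This loop is synchronous for
        # simplicity; production systems usually ship changes asynchronously.
        for slave in self.slaves:
            slave.replicate_from_master(data)

    def get_available_slave(self):
        # Pick any slave at random; a real system would also check health
        return random.choice(self.slaves)

    def read(self):
        # Read from any available slave (it may lag behind the master)
        available_slave = self.get_available_slave()
        return available_slave.read()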

Master-Master Replication

Master-master replication allows multiple nodes to accept write operations, requiring conflict resolution mechanisms when the same data is modified simultaneously.

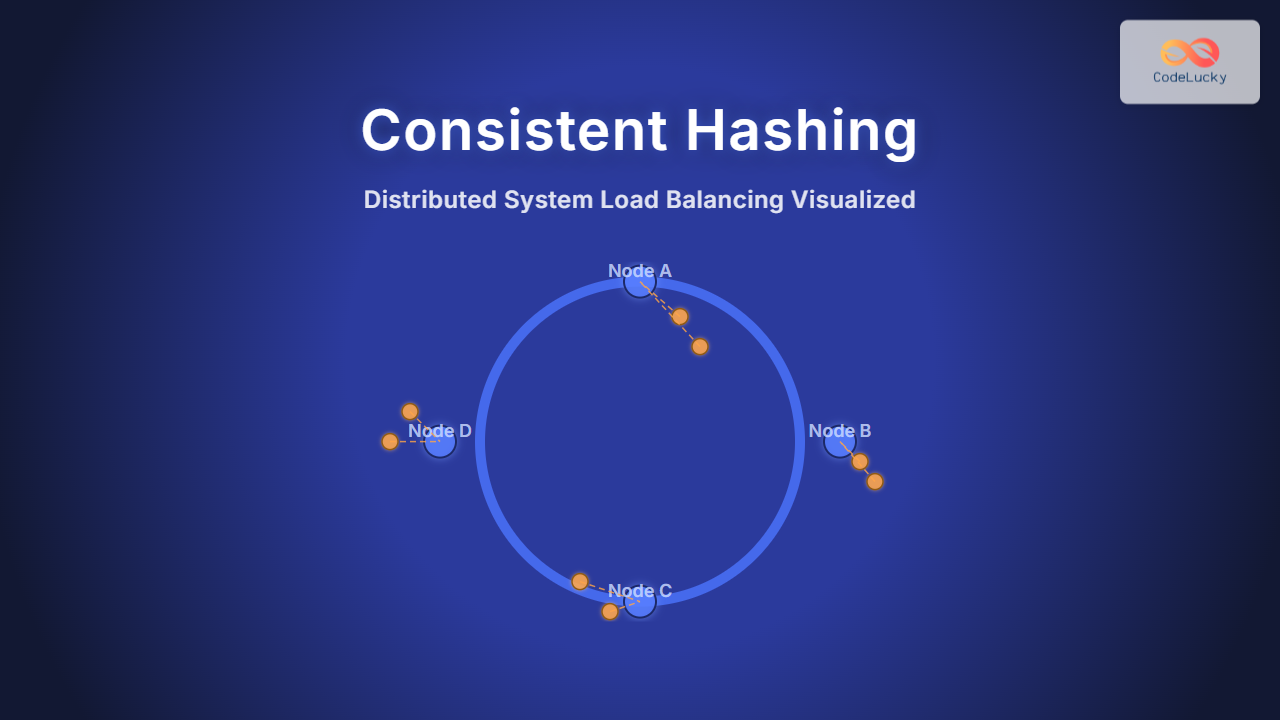

Quorum-Based Replication

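Quorum-based systems require a minimum number of replicas to acknowledge each read (R) and each write (W). When R + W > N for N replicas, every read quorum overlaps every write quorum, so a read always reaches at least one replica that holds the latest acknowledged write.

package main

import "fmt"

type QuorumConfig struct {
    ReadQuorum  int
    WriteQuorum int
    Replicas    int
}

// ReadsSeeLatestWrite reports whether every read quorum overlaps every
// write quorum (R + W > N).
func (q QuorumConfig) ReadsSeeLatestWrite() bool {
    return q.ReadQuorum+q.WriteQuorum > q.Replicas
}

// WritesOverlap reports whether any two write quorums share a replica
// (W > N/2), so two conflicting writes cannot both succeed unnoticed.
func (q QuorumConfig) WritesOverlap() bool {
    return 2*q.WriteQuorum > q.Replicas
}

func main() {
    // Example: 5 replicas, write quorum = 3, read quorum = 3
    config := QuorumConfig{ReadQuorum: 3, WriteQuorum: 3, Replicas: 5}
    fmt.Println(config.ReadsSeeLatestWrite(), config.WritesOverlap()) // true true
}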
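To see why quorum sizes matter, the sketch below brute-forces every possible read set for a given configuration. It is an illustrative model only: replicas are represented as `(version, value)` tuples, and the function names are hypothetical.

```python
from itertools import combinations


def quorums_overlap(n: int, r: int, w: int) -> bool:
    """True when every read quorum shares a replica with every write quorum."""
    return r + w > n


def read_latest(replicas, read_set):
    """Return the value with the highest version among the contacted replicas."""
    return max((replicas[i] for i in read_set), key=lambda pair: pair[0])[1]


def every_read_sees_write(n: int, r: int, w: int) -> bool:
    """Write to the first w replicas, then try every read set of size r."""
    replicas = [(1, "new") if i < w else (0, "old") for i in range(n)]
    return all(
        read_latest(replicas, read_set) == "new"
        for read_set in combinations(range(n), r)
    )
```

With 5 replicas, a write quorum of 3 and a read quorum of 3 always return the new value. A read quorum of 2 does not, because R + W then equals N and a read can land entirely on the two replicas the write skipped.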

The CAP Theorem

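The CAP theorem states that a distributed system cannot guarantee all three of the following properties at once. Because network partitions cannot be ruled out in practice, the real choice is between consistency and availability for as long as a partition lasts:

- Consistency (C): All nodes see the same data simultaneously

- Availability (A): Every request to a non-failed node receives a response

- Partition Tolerance (P): System continues operating when messages between nodes are lost or delayed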
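The trade-off shows up in how a replica behaves once it is cut off from its peers. The following toy model is a sketch under simplifying assumptions: the `Replica` class, its `mode` values, and the `partitioned` flag are hypothetical and do not correspond to any particular database.

```python
class Replica:
    """Hypothetical replica that favors consistency ("CP") or availability ("AP")."""

    def __init__(self, mode: str):
        self.mode = mode          # "CP" or "AP"
        self.value = "v1"         # last value this replica knows about
        self.partitioned = False  # True when cut off from the other replicas

    def read(self):
        if self.partitioned and self.mode == "CP":
            # CP: refuse to answer rather than risk returning stale data
            raise RuntimeError("unavailable: cannot confirm latest value")
        # AP (or no partition): answer from the local copy, which may be stale
        return self.value
```

During a partition the CP replica gives up availability by raising an error, while the AP replica stays available at the cost of possibly returning an outdated value.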

Practical CAP Theorem Applications

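# CP-leaning settings (MongoDB). Illustrative only: write concern and read
# preference are chosen by the client, per operation or in the connection string.
mongodb:
  replica_set:
    primary: true
  write_concern:
    w: "majority"   # Wait for majority acknowledgment
    j: true         # Journal writes
  read_preference: "primary"

# AP-leaning settings (Cassandra). Illustrative only: the consistency level is
# chosen per query by the client, and the replication factor is set per keyspace.
cassandra:
  consistency_level:
    read: "ONE"     # Read from any replica
    write: "ONE"    # Write to any replica
  replication_factor: 3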

Consensus Algorithms

Consensus algorithms ensure that distributed nodes agree on a single value or state, even in the presence of failures. These algorithms are crucial for maintaining consistency in distributed systems.

Raft Consensus Algorithm

Raft divides consensus into leader election, log replication, and safety mechanisms:

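class RaftNode {
  constructor(id) {
    this.id = id;
    this.state = 'follower'; // follower, candidate, leader
    this.currentTerm = 0;
    this.votedFor = null;
    this.log = [];
    this.commitIndex = 0;
  }

  startElection() {
    this.state = 'candidate';
    this.currentTerm++;
    this.votedFor = this.id; // vote for self
    // Request votes from other nodes
    this.requestVotes();
  }

  requestVotes() {
    // Placeholder: a real node sends RequestVote RPCs to its peers here
  }

  appendEntries(entries, leaderTerm) {
    // Reject requests from a leader whose term is stale
    if (leaderTerm < this.currentTerm) {
      return { success: false };
    }
    if (leaderTerm > this.currentTerm) {
      this.currentTerm = leaderTerm;
      this.votedFor = null; // new term, no vote cast yet
    }
    this.state = 'follower';
    // Simplification: full Raft first checks that the preceding log entry
    // matches (prevLogIndex/prevLogTerm) before appending
    this.log.push(...entries);
    return { success: true };
  }
}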
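Both leader election and log replication in Raft rest on majority rules: a candidate wins with votes from more than half the cluster, and a log entry counts as committed once more than half the servers store it. The sketch below shows only that arithmetic. The function names are hypothetical, and the additional Raft condition that the entry must belong to the leader's current term is omitted.

```python
def has_majority(count: int, cluster_size: int) -> bool:
    """True if `count` votes (or acknowledgments) exceed half the cluster."""
    return count > cluster_size // 2


def majority_match_index(match_index: list) -> int:
    """Highest log index stored on a majority of servers.

    `match_index` holds, for every server including the leader, the highest
    log index known to be replicated on that server.
    """
    ordered = sorted(match_index, reverse=True)
    # The element at position n // 2 is present on at least n // 2 + 1 servers.
    return ordered[len(ordered) // 2]
```

For a five-node cluster with match indexes `[7, 5, 5, 3, 2]`, index 5 is the highest one held by three servers, so the leader could advance its commit index to 5.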

PBFT (Practical Byzantine Fault Tolerance)

PBFT handles Byzantine faults, where nodes may behave maliciously or unpredictably rather than simply crashing.
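PBFT tolerates up to f Byzantine nodes in a cluster of 3f + 1 replicas, and each protocol phase waits for 2f + 1 matching messages. The helper names below are hypothetical and only illustrate this sizing arithmetic, assuming the standard 3f + 1 configuration.

```python
def replicas_needed(f: int) -> int:
    """Minimum cluster size that tolerates f Byzantine nodes."""
    return 3 * f + 1


def quorum_size(f: int) -> int:
    """Matching messages required per phase in a 3f + 1 cluster."""
    return 2 * f + 1


def max_faulty(n: int) -> int:
    """Largest number of Byzantine nodes a cluster of n replicas can tolerate."""
    return (n - 1) // 3
```

Tolerating a single faulty node therefore takes four replicas, and growing from four to six replicas does not raise the tolerance; the next step up is seven.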

Conflict Resolution Mechanisms

When multiple nodes can accept writes, conflicts are inevitable. Effective conflict resolution strategies are essential for maintaining data integrity.

Last-Writer-Wins (LWW)

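Last-writer-wins keeps the version with the latest timestamp and discards the others. It is simple, but it can silently drop a concurrent update, especially when node clocks are skewed.

from dataclasses import dataclass


@dataclass
class DataVersion:
    data: str
    timestamp: int
    node_id: str


class LWWResolution:
    def resolve_conflict(self, version1, version2):
        # The version with the later timestamp wins
        if version1.timestamp > version2.timestamp:
            return version1
        elif version2.timestamp > version1.timestamp:
            return version2
        else:
            # Tie-breaker using node ID
            return version1 if version1.node_id > version2.node_id else version2


# Example usage
conflict_resolver = LWWResolution()
winning_version = conflict_resolver.resolve_conflict(
    DataVersion(data="value1", timestamp=1629123456, node_id="node1"),
    DataVersion(data="value2", timestamp=1629123457, node_id="node2")
)
# winning_version.data == "value2" (later timestamp)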

Vector Clocks

Vector clocks track causality relationships between events across distributed nodes:

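import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

public class VectorClock {
    private final Map<String, Integer> clock;

    public VectorClock() {
        this.clock = new HashMap<>();
    }

    public void increment(String nodeId) {
        clock.put(nodeId, clock.getOrDefault(nodeId, 0) + 1);
    }

    // True if this clock is <= other on every node and strictly smaller on at least one
    public boolean happensBefore(VectorClock other) {
        Set<String> nodeIds = new HashSet<>(this.clock.keySet());
        nodeIds.addAll(other.clock.keySet());
        boolean strictlySmaller = false;
        for (String nodeId : nodeIds) {
            int mine = this.clock.getOrDefault(nodeId, 0);
            int theirs = other.clock.getOrDefault(nodeId, 0);
            if (mine > theirs) {
                return false;
            }
            if (mine < theirs) {
                strictlySmaller = true;
            }
        }
        return strictlySmaller;
    }
}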
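Beyond "happens before", vector clocks also reveal when two versions are concurrent, which is the case that needs conflict resolution. The sketch below represents a clock as a plain dictionary from node ID to counter; the `compare` and `merge` functions are hypothetical helpers written for illustration.

```python
def compare(a: dict, b: dict) -> str:
    """Classify clock `a` relative to `b`: before, after, equal, or concurrent."""
    nodes = set(a) | set(b)
    a_le_b = all(a.get(n, 0) <= b.get(n, 0) for n in nodes)
    b_le_a = all(b.get(n, 0) <= a.get(n, 0) for n in nodes)
    if a_le_b and b_le_a:
        return "equal"
    if a_le_b:
        return "before"
    if b_le_a:
        return "after"
    # Each clock is ahead on some node: neither event caused the other
    return "concurrent"


def merge(a: dict, b: dict) -> dict:
    """Element-wise maximum, applied when a node receives another node's clock."""
    return {n: max(a.get(n, 0), b.get(n, 0)) for n in set(a) | set(b)}
```

Two writes whose clocks compare as "concurrent" were made without knowledge of each other, so the system has to keep both or apply a resolution rule.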

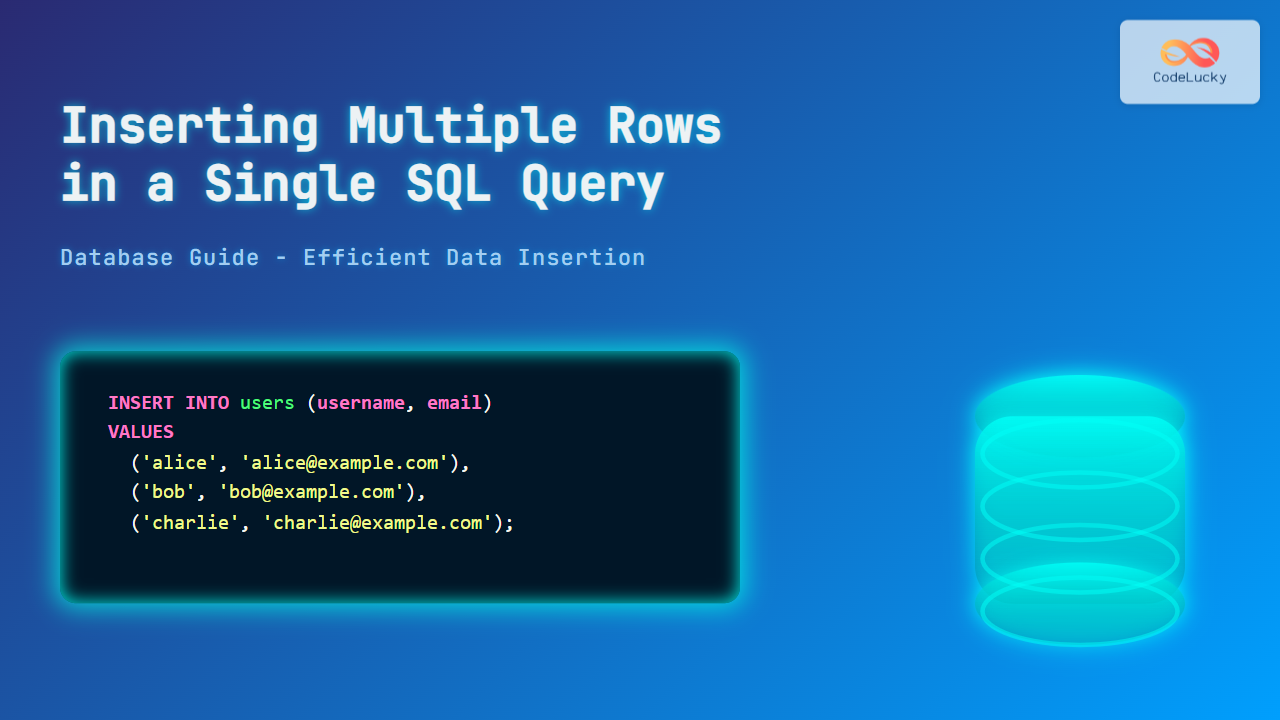

Real-World Implementation Examples

E-commerce Platform Architecture

Consider an e-commerce platform that needs to handle millions of transactions while maintaining inventory consistency:

-- Inventory management with distributed transactions
CREATE TABLE inventory (
    product_id VARCHAR(50) PRIMARY KEY,
    available_quantity INT NOT NULL,
    reserved_quantity INT DEFAULT 0,
    last_updated TIMESTAMP DEFAULT CURRENT_TIMESTAMP,
    version_vector TEXT -- For conflict resolution
);

-- Order processing inside a distributed transaction.
-- The syntax is illustrative and varies by database. The two-phase commit
-- itself is run by the transaction coordinator, not by these statements.
BEGIN DISTRIBUTED TRANSACTION;

-- Move one unit from available to reserved, only if stock remains
UPDATE inventory
SET available_quantity = available_quantity - 1,
    reserved_quantity = reserved_quantity + 1
WHERE product_id = 'PROD123' AND available_quantity > 0;

INSERT INTO orders (order_id, product_id, quantity, status)
VALUES ('ORD456', 'PROD123', 1, 'PENDING');

-- On commit, the coordinator runs prepare (phase 1) and then commit (phase 2) on all nodes
COMMIT DISTRIBUTED TRANSACTION;

Social Media Feed Consistency

// Eventual consistency for social media feeds
// Helper methods such as writeToNode, getPrimaryRegion, replicateAsync, and
// getNearestReadNode are placeholders for the application's own logic.
class FeedService {
  constructor() {
    this.writeNodes = ['us-east-1', 'us-west-1', 'eu-west-1'];
    this.readNodes = ['us-east-1-read', 'us-west-1-read', 'eu-west-1-read'];
  }

  async createPost(userId, content) {
    const post = {
      id: generateId(),
      userId: userId,
      content: content,
      timestamp: Date.now(),
      version: 1
    };

    // Write to primary region immediately
    await this.writeToNode(this.getPrimaryRegion(userId), post);

    // Asynchronously replicate to other regions; log failures instead of
    // leaving the promise rejection unhandled
    this.replicateAsync(post).catch((err) => console.error('replication failed', err));

    return post.id;
  }

  async getFeed(userId, region) {
    // Read from nearest available replica
    const readNode = this.getNearestReadNode(region);
    return await readNode.getFeed(userId);
  }
}

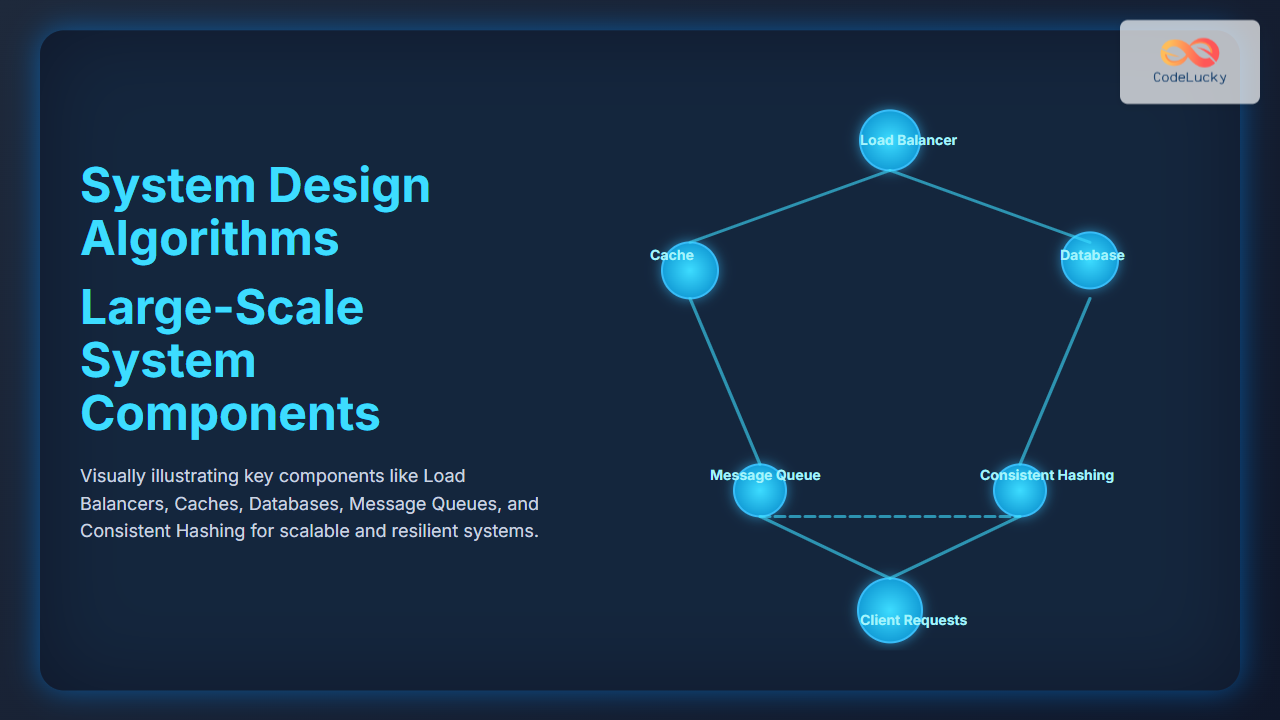

Performance Optimization Strategies

Read Replicas and Write Sharding

Optimize performance by separating read and write workloads:

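import hashlib
import random


class DatabaseCluster:
    def __init__(self):
        # MasterNode and ReplicaNode are placeholder classes for this sketch
        self.write_master = MasterNode()
        self.read_replicas = [ReplicaNode(i) for i in range(5)]
        self.shard_mapping = self.initialize_shards()

    def initialize_shards(self):
        # Placeholder: a single shard backed by the write master
        return [self.write_master]

    def write(self, key, value):
        shard = self.get_shard(key)
        return shard.write(key, value)

    def select_read_replica(self):
        return random.choice(self.read_replicas)

    def read(self, key):
        # Use read replica for better performance
        replica = self.select_read_replica()
        return replica.read(key)

    def get_shard(self, key):
        # Use a stable hash: Python's built-in hash() of a str changes between
        # processes, which would route the same key to different shards
        digest = hashlib.md5(str(key).encode("utf-8")).hexdigest()
        shard_id = int(digest, 16) % len(self.shard_mapping)
        return self.shard_mapping[shard_id]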

Caching Strategies

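# Redis commands for distributed caching (run in redis-cli)
# The last command stores a key that expires in 1 hour
CLUSTER NODES
CLUSTER REPLICATE node-id
SET key value EX 3600

# Cache-aside pattern (Python, using the redis-py client)
import json
import redis

# Connection details are assumptions; adjust for your deployment
cache = redis.Redis(host="localhost", port=6379)

def get_user_profile(user_id):
    # Try cache first
    cached = cache.get(f"user:{user_id}")
    if cached is not None:
        return json.loads(cached)
    # Fallback to database (`database` is a placeholder for the data-access layer)
    profile = database.get_user(user_id)
    # Update cache with a one-hour expiry
    cache.setex(f"user:{user_id}", 3600, json.dumps(profile))
    return profile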

Monitoring and Troubleshooting

Effective monitoring is crucial for maintaining distributed database health:

Key Metrics to Monitor

- Replication Lag: Time difference between master and replica updates

- Consistency Violations: Number of inconsistent reads detected

- Node Availability: Percentage of time nodes are operational

- Transaction Throughput: Operations per second across the cluster

- Network Partitions: Frequency and duration of network splits

# Prometheus alerting rules
groups:
  - name: database_replication
    rules:
      - alert: ReplicationLagHigh
        # Metric name as exposed by mysqld_exporter; verify against your exporter version
        expr: mysql_slave_status_seconds_behind_master > 30
        annotations:
          summary: "Replication lag exceeded 30 seconds"
      - alert: ConsistencyViolations
        # consistency_violations_total is an application-defined counter
        expr: rate(consistency_violations_total[5m]) > 0.1
        annotations:
          summary: "Consistency violations detected"
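Where no exporter metric is available, replication lag can be approximated by comparing the primary's last commit time with each replica's last applied time. This is a rough sketch under that assumption: the function names and the timestamp inputs are hypothetical, and the default threshold mirrors the 30-second alert above.

```python
def replication_lag_seconds(primary_commit_ts: float, replica_applied_ts: float) -> float:
    """Seconds by which a replica trails the primary (never negative)."""
    return max(0.0, primary_commit_ts - replica_applied_ts)


def lagging_replicas(primary_commit_ts: float, replica_ts: dict, threshold: float = 30.0) -> list:
    """Names of replicas whose lag exceeds `threshold` seconds, sorted by name."""
    return sorted(
        name
        for name, applied_ts in replica_ts.items()
        if replication_lag_seconds(primary_commit_ts, applied_ts) > threshold
    )
```

Timestamp-based lag depends on reasonably synchronized clocks, so it is best treated as an estimate.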

Best Practices and Recommendations

Design Principles

- Design for Failure: Assume nodes and networks will fail

- Embrace Eventual Consistency: When strong consistency isn’t required

- Implement Idempotency: Ensure operations can be safely retried

- Use Appropriate Consistency Levels: Match requirements to use cases

- Monitor Continuously: Implement comprehensive observability
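Idempotency is often implemented by attaching a client-generated key to each request and remembering the result of the first execution. The sketch below is one minimal way to do this in memory; the `PaymentService` class and its method names are hypothetical, and a real system would persist the keys durably.

```python
class PaymentService:
    """Hypothetical service whose deposits can be retried safely."""

    def __init__(self):
        self.balance = 0
        self._results = {}  # idempotency key -> result of the first execution

    def deposit(self, idempotency_key: str, amount: int) -> int:
        # A retry with a known key returns the stored result and changes nothing
        if idempotency_key in self._results:
            return self._results[idempotency_key]
        self.balance += amount
        self._results[idempotency_key] = self.balance
        return self.balance
```

If a client times out and resends the same request with the same key, the balance is credited only once.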

Common Pitfalls to Avoid

- Over-engineering Consistency: Using strong consistency when eventual consistency suffices

- Ignoring Network Partitions: Not handling split-brain scenarios

- Poor Shard Key Selection: Creating hotspots and uneven distribution

- Inadequate Testing: Not testing failure scenarios thoroughly

Future Trends and Technologies

The landscape of distributed databases continues to evolve with emerging technologies:

- Multi-Model Databases: Supporting multiple data models in one system

- Blockchain Integration: Immutable distributed ledgers for specific use cases

- Edge Computing: Bringing data closer to users at network edges

- AI-Powered Optimization: Machine learning for automatic performance tuning

- Serverless Databases: Auto-scaling without infrastructure management

Distributed database systems with proper consistency and replication strategies form the foundation of modern scalable applications. By understanding the trade-offs between different approaches and implementing appropriate patterns for your use case, you can build robust systems that handle massive scale while maintaining data integrity. The key is to choose the right consistency model and replication strategy based on your specific requirements, rather than applying a one-size-fits-all solution.

Remember that distributed systems are inherently complex, and the best approach often involves carefully balancing consistency, availability, and partition tolerance based on your application’s specific needs and constraints.