Cluster computing represents one of the most powerful approaches to achieving high-performance computing by connecting multiple independent computers to work together as a unified system. This distributed computing paradigm enables organizations to tackle computationally intensive tasks that would be impossible or impractical on single machines.

What is Cluster Computing?

Cluster computing involves linking multiple computers (nodes) through high-speed networks to create a single, more powerful computing resource. Each node in the cluster operates independently but collaborates with others to execute complex tasks, process large datasets, or provide fault-tolerant services.

Key characteristics of cluster computing include:

- Scalability: Easy addition or removal of nodes based on computational needs

- Fault tolerance: System continues operating even if individual nodes fail

- Cost-effectiveness: Using commodity hardware instead of expensive supercomputers

- Load distribution: Workload spread across multiple processing units

Types of Cluster Computing

1. High Availability (HA) Clusters

High availability clusters focus on eliminating single points of failure to ensure continuous service availability. These clusters automatically detect node failures and redistribute services to healthy nodes.

Example: E-commerce websites use HA clusters to stay online through hardware failures and peak shopping seasons, typically against availability targets such as 99.9% uptime.
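The detect-and-redistribute loop can be sketched in Python as a heartbeat-based failure detector. This is a toy, single-process model and not how any particular HA product works. The `HeartbeatMonitor` class, the 5-second timeout, and the "fewest services" placement rule are all hypothetical choices for illustration.

```python
import time


class HeartbeatMonitor:
    """Toy HA failure detector (hypothetical): a node counts as failed if it
    has not sent a heartbeat within `timeout` seconds."""

    def __init__(self, timeout=5.0):
        self.timeout = timeout
        self.last_seen = {}  # node name -> time of its most recent heartbeat
        self.services = {}   # service name -> node currently running it

    def heartbeat(self, node, now=None):
        self.last_seen[node] = time.monotonic() if now is None else now

    def healthy_nodes(self, now=None):
        now = time.monotonic() if now is None else now
        return [n for n, t in self.last_seen.items() if now - t <= self.timeout]

    def fail_over(self, now=None):
        """Move services off failed nodes; return {service: new_node}."""
        healthy = self.healthy_nodes(now)
        if not healthy:
            raise RuntimeError("no healthy nodes left to take over services")
        moved = {}
        for service, node in sorted(self.services.items()):
            if node in healthy:
                continue
            # Place the service on the healthy node running the fewest services
            load = {n: 0 for n in healthy}
            for host in self.services.values():
                if host in load:
                    load[host] += 1
            target = min(healthy, key=lambda n: load[n])
            self.services[service] = target
            moved[service] = target
        return moved


# Usage: three nodes report in at t=0, then node2 goes silent
monitor = HeartbeatMonitor(timeout=5.0)
for node in ("node1", "node2", "node3"):
    monitor.heartbeat(node, now=0.0)
monitor.services = {"web": "node1", "db": "node2"}

monitor.heartbeat("node1", now=8.0)
monitor.heartbeat("node3", now=8.0)
print(monitor.fail_over(now=8.0))  # {'db': 'node3'}
```

Passing `now` explicitly keeps the example deterministic. In a live system the monitor would run `fail_over()` periodically using the real clock.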

2. Load Balancing Clusters

Load balancing clusters distribute incoming requests across multiple nodes to optimize resource utilization and response times. This approach prevents any single node from becoming overwhelmed.

Example: Web servers handling thousands of simultaneous user requests distribute traffic across cluster nodes based on current load metrics.

3. High Performance Computing (HPC) Clusters

HPC clusters maximize computational power by parallelizing complex calculations across numerous processing cores. These clusters excel at CPU-intensive tasks requiring significant computational resources.

Example: Weather prediction models use HPC clusters to process atmospheric data and generate forecasts using parallel algorithms.

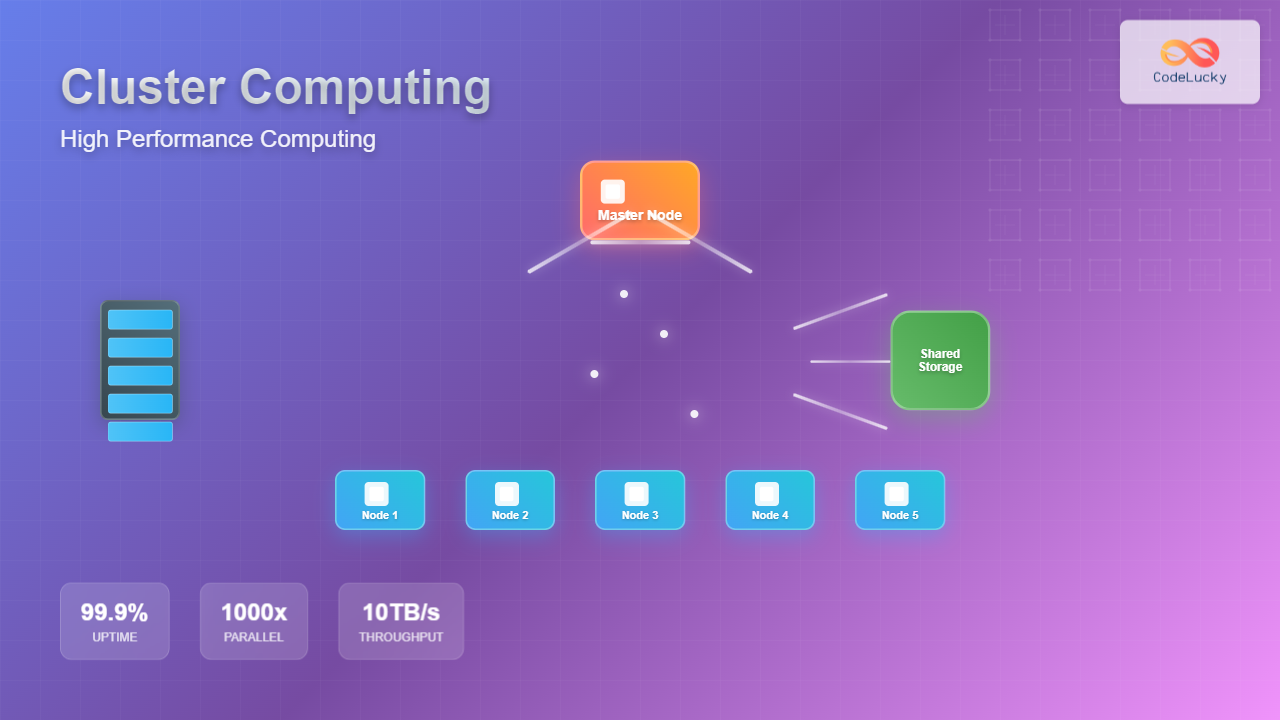

Cluster Architecture Components

Master Node (Head Node)

The master node serves as the central coordination point for the entire cluster, managing job scheduling, resource allocation, and cluster monitoring. It maintains cluster state information and handles client interactions.
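To make the master node's scheduling role concrete, here is a minimal first-fit placement sketch in Python. The `WorkerNode` class, the `schedule` function, and the job list are hypothetical. Production schedulers such as SLURM add priorities, fair-share, and backfill on top of basic placement, so treat this only as an illustration of the idea.

```python
from dataclasses import dataclass, field


@dataclass
class WorkerNode:
    name: str
    free_cores: int
    jobs: list = field(default_factory=list)


def schedule(jobs, nodes):
    """First-fit placement: `jobs` is a list of (job_name, cores) pairs in
    priority order; each job goes to the first node with enough free cores."""
    pending = []
    for job_name, cores in jobs:
        for node in nodes:
            if node.free_cores >= cores:
                node.free_cores -= cores
                node.jobs.append(job_name)
                break
        else:
            # No node can fit the job right now, so it stays queued
            pending.append(job_name)
    return pending


nodes = [WorkerNode("node1", 8), WorkerNode("node2", 4)]
jobs = [("sim-a", 6), ("sim-b", 4), ("sim-c", 4), ("sim-d", 2)]
pending = schedule(jobs, nodes)

for node in nodes:
    print(node.name, node.jobs)
print("pending:", pending)
```

Note that `sim-d` lands on `node1` even though `sim-c` ahead of it is still waiting. That is a crude form of the backfilling real schedulers do more carefully.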

Worker Nodes (Compute Nodes)

Worker nodes execute the actual computational tasks assigned by the master node. These nodes typically contain multiple CPU cores, adequate RAM, and local storage for processing data efficiently.

Interconnect Network

High-speed networking infrastructure connects cluster nodes, enabling rapid data exchange and communication. Common technologies include Ethernet, InfiniBand, and other specialized cluster interconnects.

Storage System

Shared storage systems provide centralized data access across all cluster nodes. Options include the Network File System (NFS), parallel file systems such as Lustre, and distributed storage solutions.

Cluster Computing Technologies

Message Passing Interface (MPI)

MPI is a standardized message-passing interface for parallel programs running across distributed systems, available through library implementations such as Open MPI and MPICH. It enables processes on different nodes to exchange data and coordinate execution.

```c
#include <mpi.h>
#include <stdio.h>

int main(int argc, char** argv) {
    // Initialize the MPI environment
    MPI_Init(&argc, &argv);

    int world_size, world_rank;
    // Total number of processes, and this process's rank within them
    MPI_Comm_size(MPI_COMM_WORLD, &world_size);
    MPI_Comm_rank(MPI_COMM_WORLD, &world_rank);

    printf("Hello from process %d of %d\n", world_rank, world_size);

    // Shut down MPI cleanly before exiting
    MPI_Finalize();
    return 0;
}
```

Apache Spark

Spark provides a unified analytics engine for large-scale data processing with built-in cluster computing capabilities. It offers APIs for distributed data processing across cluster nodes.

```python
from pyspark.sql import SparkSession

# Connect to a standalone Spark cluster whose master listens at spark://master:7077
spark = (
    SparkSession.builder
    .appName("ClusterComputing")
    .master("spark://master:7077")
    .getOrCreate()
)

# Create a one-column DataFrame (column "id") split into 10 partitions
data = spark.range(1000000).repartition(10)

# PySpark DataFrames have no map(); use the underlying RDD of Row objects
result = data.rdd.map(lambda row: row.id * 2).reduce(lambda a, b: a + b)

print(f"Cluster computation result: {result}")

spark.stop()
```

Kubernetes

Kubernetes orchestrates containerized applications across cluster environments, providing automated deployment, scaling, and management of distributed applications.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: compute-cluster
spec:
  replicas: 5
  selector:
    matchLabels:
      app: compute-worker
  template:
    metadata:
      labels:
        app: compute-worker
    spec:
      containers:
        - name: worker
          image: compute-app:latest
          resources:
            requests:
              cpu: 100m
              memory: 128Mi
            limits:
              cpu: 500m
              memory: 512Mi
```

Performance Optimization Techniques

Load Balancing Algorithms

Round Robin: Distributes tasks sequentially across available nodes, giving each node an equal share regardless of its capacity. This works best when nodes are similar.

Least Connections: Routes new tasks to nodes with the fewest active connections, optimizing resource utilization.

Weighted Least Connections: Considers both active connections and node capacity when making routing decisions.
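The three policies can be compared side by side in a short Python sketch. Node names, connection counts, and capacity weights are hypothetical. Weighted least connections is modeled as picking the lowest connections-to-weight ratio, which is one common formulation rather than the only one.

```python
import itertools


class RoundRobin:
    """Cycle through nodes in a fixed order, ignoring their load and capacity."""

    def __init__(self, nodes):
        self._cycle = itertools.cycle(nodes)

    def pick(self):
        return next(self._cycle)


def least_connections(nodes, active):
    """Pick the node with the fewest active connections."""
    return min(nodes, key=lambda n: active[n])


def weighted_least_connections(nodes, active, weights):
    """Pick the node with the lowest active-connections-to-capacity ratio."""
    return min(nodes, key=lambda n: active[n] / weights[n])


nodes = ["node1", "node2", "node3"]
active = {"node1": 4, "node2": 2, "node3": 3}   # current open connections
weights = {"node1": 4, "node2": 1, "node3": 2}  # relative capacity (hypothetical)

rr = RoundRobin(nodes)
print([rr.pick() for _ in range(4)])                       # ['node1', 'node2', 'node3', 'node1']
print(least_connections(nodes, active))                    # node2
print(weighted_least_connections(nodes, active, weights))  # node1
```

The last two calls disagree. `node2` has the fewest connections, but `node1` has four times its capacity, so its ratio (4/4 = 1.0) beats `node2`'s (2/1 = 2.0).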

Parallel Processing Strategies

Data Parallelism: Distributes large datasets across multiple nodes, with each node processing its portion independently.

Task Parallelism: Divides complex algorithms into independent tasks that execute simultaneously on different nodes.

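```python
# Data parallelism example. multiprocessing.Pool runs worker processes on a
# single machine; on a real cluster, a framework such as MPI or Spark would
# send each chunk to a different node, but the split/process/combine pattern
# is the same.
from multiprocessing import Pool

import numpy as np


def process_chunk(data_chunk):
    # Each worker computes the sum of squares of its own chunk independently
    return np.sum(data_chunk ** 2)


if __name__ == "__main__":
    # Guard required on platforms that start workers with "spawn" (Windows, macOS)
    data = np.random.rand(1000000)
    chunks = np.array_split(data, 10)  # One chunk per worker process

    with Pool(processes=10) as pool:
        results = pool.map(process_chunk, chunks)

    # Combine the partial results into the final answer
    total_result = sum(results)
    print(f"Parallel computation result: {total_result}")
```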
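Task parallelism can be sketched in the same style. Here three different, independent analyses run concurrently on one dataset. The function names and the choice of analyses are hypothetical. The sketch uses a thread pool so it stays self-contained and runs anywhere. For CPU-heavy pure-Python tasks, `ProcessPoolExecutor` sidesteps the GIL, and on a cluster a scheduler or framework would place each task on a different node.

```python
from concurrent.futures import ThreadPoolExecutor

import numpy as np


def compute_statistics(data):
    return {"mean": float(np.mean(data)), "std": float(np.std(data))}


def find_outliers(data, threshold=3.0):
    # Indices of values more than `threshold` standard deviations from the mean
    z = (data - data.mean()) / data.std()
    return np.flatnonzero(np.abs(z) > threshold)


def build_histogram(data, bins=20):
    counts, _ = np.histogram(data, bins=bins)
    return counts


data = np.random.default_rng(seed=0).normal(size=100_000)

# Task parallelism: three *different* tasks run concurrently on the same input
with ThreadPoolExecutor(max_workers=3) as executor:
    stats_future = executor.submit(compute_statistics, data)
    outliers_future = executor.submit(find_outliers, data)
    histogram_future = executor.submit(build_histogram, data)

    stats = stats_future.result()
    outliers = outliers_future.result()
    histogram = histogram_future.result()

print(stats, len(outliers), histogram.sum())
```

The contrast with the previous example is the point. There, every worker ran the *same* function on a different slice of data. Here, each worker runs a *different* function on the whole dataset.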

Real-World Applications

Scientific Computing

Research institutions use cluster computing for molecular dynamics simulations, climate modeling, and genomic analysis. These applications require massive computational resources that single machines cannot provide.

Financial Services

Banks and trading firms employ clusters for risk analysis, algorithmic trading, and fraud detection. Real-time processing of market data across distributed systems enables rapid decision-making.

Media and Entertainment

Animation studios and video production companies use render farms (specialized clusters) for 3D rendering and visual effects processing. Because frames can be rendered independently, different frames render on different nodes in parallel, dramatically reducing production time.
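A simple static way to split a shot across a render farm is to give each node a contiguous block of frames. The `assign_frames` helper and node names below are hypothetical. A dynamic queue that hands the next frame to whichever node frees up first handles uneven per-frame render times better.

```python
def assign_frames(first_frame, last_frame, nodes):
    """Split an inclusive frame range into contiguous blocks, one per node."""
    frames = list(range(first_frame, last_frame + 1))
    block = -(-len(frames) // len(nodes))  # ceiling division
    return {node: frames[i * block:(i + 1) * block] for i, node in enumerate(nodes)}


plan = assign_frames(1, 10, ["node1", "node2", "node3"])
print(plan)  # {'node1': [1, 2, 3, 4], 'node2': [5, 6, 7, 8], 'node3': [9, 10]}
```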

Machine Learning and AI

Training large neural networks requires distributed computing power. Cluster computing enables parallel model training across multiple GPUs and nodes, accelerating the development of AI applications.
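The core of synchronous data-parallel training is simple. Each worker computes gradients on its own shard of the data, and the gradients are averaged before every update. The sketch below simulates this in one process with NumPy on a hypothetical linear-regression problem. Real training frameworks perform the averaging as an all-reduce across GPUs and nodes. With equal-sized shards, the averaged gradient equals the full-batch gradient, so every worker applies the same update.

```python
import numpy as np


def shard_gradient(w, X, y):
    # Gradient of mean squared error for a linear model y ≈ X @ w
    residual = X @ w - y
    return 2.0 * X.T @ residual / len(y)


def data_parallel_step(w, shards, lr=0.1):
    # Each simulated worker computes a gradient on its own shard...
    grads = [shard_gradient(w, X_s, y_s) for X_s, y_s in shards]
    # ...then the gradients are averaged (the "all-reduce") for one shared update
    avg_grad = np.mean(grads, axis=0)
    return w - lr * avg_grad


rng = np.random.default_rng(seed=0)
true_w = np.array([2.0, -1.0, 0.5])
X = rng.normal(size=(1200, 3))
y = X @ true_w + 0.01 * rng.normal(size=1200)

# Split the dataset evenly across 4 simulated workers (300 rows each)
shards = list(zip(np.array_split(X, 4), np.array_split(y, 4)))

w = np.zeros(3)
for _ in range(200):
    w = data_parallel_step(w, shards)

print(np.round(w, 2))
```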

Implementation Considerations

Network Configuration

Proper network design ensures minimal latency and maximum bandwidth between cluster nodes. Consider using dedicated cluster networks separate from general-purpose infrastructure.

Resource Management

Deploy a job scheduler such as SLURM or PBS to allocate computational resources according to job priorities and requirements.

```bash
#!/bin/bash
#SBATCH --job-name=cluster_job
#SBATCH --nodes=4
#SBATCH --ntasks-per-node=8
#SBATCH --time=02:00:00
#SBATCH --partition=compute

# Load required modules (module names vary by site)
module load mpi/openmpi

# Execute parallel application: 4 nodes x 8 tasks per node = 32 MPI processes
mpirun -np 32 ./my_parallel_application
```
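For a Python workload that does not use MPI, each task can pick its own share of the work from environment variables that Slurm's `srun` sets for every task. `SLURM_PROCID` holds the task's rank and `SLURM_NTASKS` the total task count. The `my_share` helper and its strided split are hypothetical. The defaults let the script also run outside Slurm as a single task. Inside the batch script above, it would be launched with something like `srun python my_script.py` (a hypothetical file name) instead of `mpirun`.

```python
import os


def my_share(items):
    """Return the slice of `items` this task should handle, based on Slurm's
    per-task environment variables (hypothetical helper)."""
    rank = int(os.environ.get("SLURM_PROCID", "0"))    # this task's rank
    ntasks = int(os.environ.get("SLURM_NTASKS", "1"))  # total number of tasks
    # Strided split: task r handles items r, r + ntasks, r + 2 * ntasks, ...
    return items[rank::ntasks]


work_items = list(range(10))
print(my_share(work_items))
```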

Monitoring and Maintenance

Deploy comprehensive monitoring solutions to track cluster health, resource utilization, and job performance. Tools like Ganglia, Nagios, or Prometheus provide essential cluster insights.

Future Trends in Cluster Computing

Edge Computing Integration

Hybrid architectures combining traditional clusters with edge computing nodes bring processing closer to data sources, reducing latency and bandwidth requirements.

Quantum-Classical Hybrid Clusters

Emerging quantum computing technologies are being explored as accelerators alongside classical cluster systems, with the goal of enabling new approaches to optimization and simulation problems.

Green Computing Initiatives

Energy-efficient cluster designs focus on power optimization and renewable energy integration, addressing environmental concerns in high-performance computing.

Cluster computing continues evolving as a fundamental technology for high-performance computing, enabling organizations to tackle increasingly complex computational challenges through distributed processing power. Understanding cluster architectures, implementation strategies, and optimization techniques provides the foundation for leveraging this powerful computing paradigm effectively.