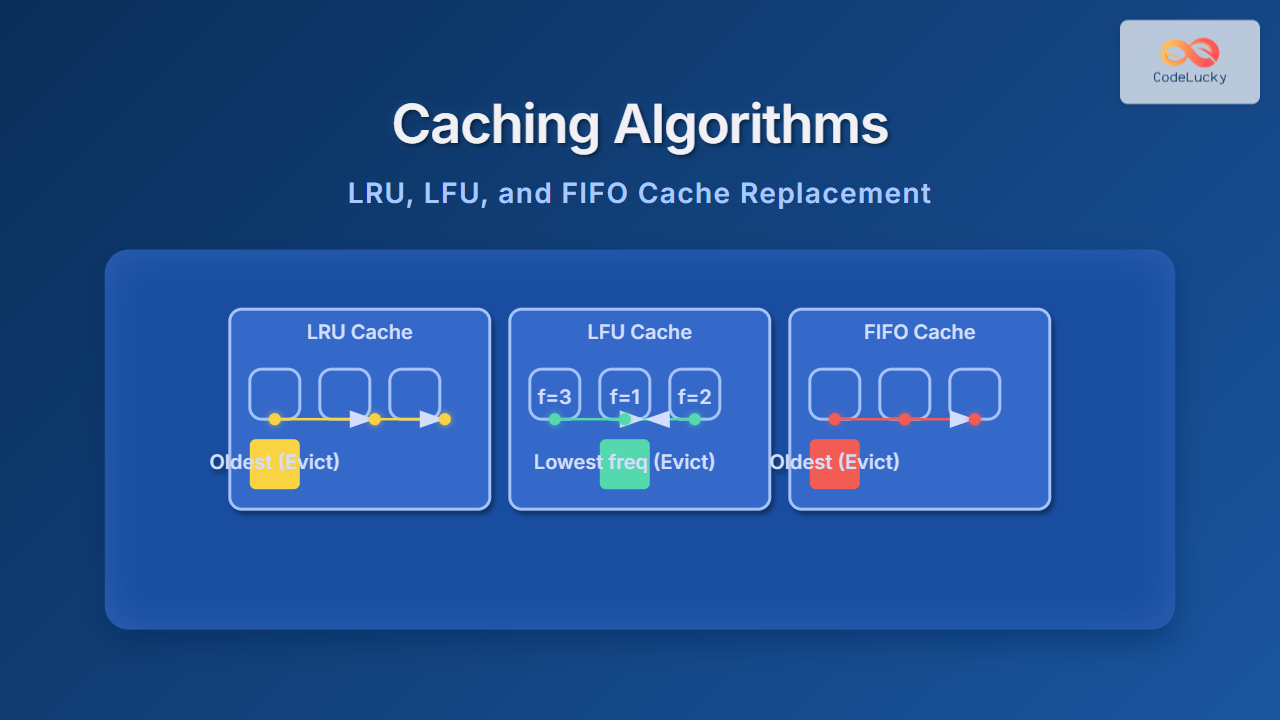

Caching algorithms play a vital role in optimizing computing performance by determining which items to keep and which to replace in the cache during memory management. This article dives deeply into three prominent cache replacement policies—LRU (Least Recently Used), LFU (Least Frequently Used), and FIFO (First In First Out). Understanding these algorithms is essential for developers, computer scientists, and system architects seeking to improve application speed and resource efficiency.

What Is Cache and Why Cache Replacement Matters?

A cache is a smaller, faster memory component that stores copies of data from frequently used main memory locations. It enables quicker access to data, minimizing latency and improving overall system responsiveness. However, because cache size is limited, cache replacement algorithms decide which data to evict when adding new data.

Key Requirements for Cache Replacement Algorithms

- Efficiently maintain relevant data with minimum latency.

- Reduce costly cache misses that force fetching from slower memory layers.

- Adapt dynamically to changing data usage patterns.

1. LRU Cache: Least Recently Used

The LRU (Least Recently Used) algorithm prioritizes evicting the cache entry that has not been accessed for the longest time. It operates under the intuition that data used recently is more likely to be used again soon.

How LRU Works: Maintain the order of data by access time. On a cache hit, move the accessed item to the front of the usage list. On a cache miss requiring replacement, remove the item at the tail.

LRU Example with Cache Size 3

// Cache sequence: Insert 1, 2, 3

Cache: [1, 2, 3]

// Access 1 again (moves 1 to most recent)

Cache: [2, 3, 1]

// Insert 4 (cache full, evict LRU: 2)

Cache: [3, 1, 4]

2. LFU Cache: Least Frequently Used

LFU (Least Frequently Used) keeps track of access frequency for each cache item. It evicts the item that has been used the least number of times, assuming those with fewer accesses are less valuable.

LFU Example with Cache Size 3

// Insert 1, 2, 3

Cache: {1: freq=1, 2: freq=1, 3: freq=1}

// Access 1 (freq = 2)

Cache: {1: freq=2, 2: freq=1, 3: freq=1}

// Insert 4 (cache full, evict LFU: either 2 or 3)

// Evict 2 (freq 1) arbitrarily if tie

Cache: {1: freq=2, 3: freq=1, 4: freq=1}

LFU requires maintaining a frequency count and often uses efficient data structures such as min-heaps or balanced trees to track frequencies for quick access and eviction decisions.

3. FIFO Cache: First In First Out

FIFO (First In First Out) is the simplest replacement strategy where the oldest inserted cache item (the one inserted first) is evicted without considering usage patterns.

FIFO Example with Cache Size 3

// Inserted sequence: 1, 2, 3

Cache: [1, 2, 3]

// Insert 4 (evict first inserted: 1)

Cache: [2, 3, 4]

FIFO is easy to implement using a queue data structure, but it often performs poorly compared to LRU or LFU since it ignores actual usage.

Comparison of LRU, LFU, and FIFO

| Algorithm | Eviction Policy | Pros | Cons | Use Cases |

|---|---|---|---|---|

| LRU | Least recently used item | Good temporal locality support, dynamically adapts to usage | Requires extra storage for tracking order, overhead in moving items | Web caching, memory management, database buffers |

| LFU | Least frequently used item | Captures long-term frequency patterns, efficient for stable usage | More complex to implement, may evict recently used items with low frequency | Recommendation systems, streaming data caches |

| FIFO | Oldest inserted item | Simple implementation, low overhead | Poor cache hit rates, ignores usage | Simple, low-criticality caches |

Interactive Example: Simulating LRU Cache

This code snippet simulates an LRU Cache and can be tested with different input sequences. (The HTML here is paste-ready for embedding on CodeLucky.com, for quick experimentation by readers.)

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<title>LRU Cache Simulator</title>

<style>

body { font-family: Arial, sans-serif; padding: 20px; }

input, button { padding: 8px; margin: 5px; font-size: 16px; }

.cache { margin-top: 10px; }

.cache div { display: inline-block; padding: 8px 12px; background: #007acc; color: white; margin-right: 5px; border-radius: 4px; }

</style>

</head>

<body>

<h3>LRU Cache Simulator (Capacity: 3)</h3>

<input type="number" id="keyInput" placeholder="Enter key to access" />

<button onclick="accessCache()">Access</button>

<div class="cache" id="cacheDisplay"></div>

<script>

const capacity = 3;

let cache = new Map();

function accessCache() {

const key = document.getElementById('keyInput').value;

if (!key) return;

if (cache.has(key)) {

// Move accessed key to the end (most recent)

cache.delete(key);

} else if (cache.size >= capacity) {

// Remove least recently used (first inserted)

const firstKey = cache.keys().next().value;

cache.delete(firstKey);

}

cache.set(key, true);

renderCache();

}

function renderCache() {

const container = document.getElementById('cacheDisplay');

container.innerHTML = '';

for (const key of cache.keys()) {

const div = document.createElement('div');

div.textContent = key;

container.appendChild(div);

}

}

</script>

</body>

</html>

Summary

Understanding caching algorithms such as LRU, LFU, and FIFO is foundational for optimizing memory management and system performance. LRU balances recency and complexity, making it a popular choice in real-world applications. LFU enhances frequency awareness, useful in scenarios with repetitive access patterns. FIFO offers simple implementation but lacks adaptive intelligence. Choosing the right algorithm depends on usage context, performance goals, and system constraints.

For software developers and system designers, mastering these concepts leads to improved cache hit rates, reduced latency, and more efficient resource usage across diverse computing environments.