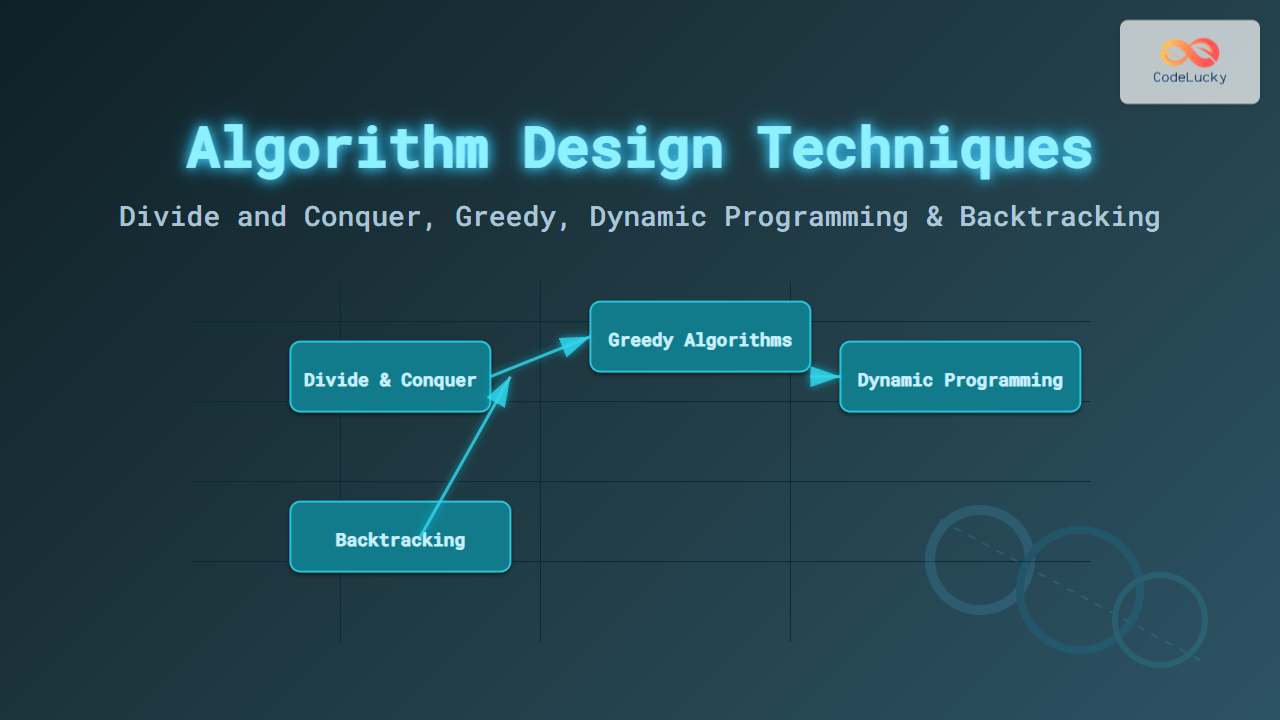

Effective algorithm design is a cornerstone of computer science and software development. Understanding different algorithm design techniques enables programmers to solve complex problems efficiently. This article dives deeply into popular algorithm strategies like Divide and Conquer, Greedy Algorithms, Dynamic Programming, and Backtracking. Through clear examples, visual diagrams, and insightful explanations, readers will learn to identify and apply these methods effectively.

What Are Algorithm Design Techniques?

Algorithm design techniques are systematic approaches used for creating algorithms that solve problems efficiently. Each technique provides a strategic framework tailored to specific problem types, optimizing time and space complexity while ensuring correctness.

Divide and Conquer

The Divide and Conquer technique breaks a problem into smaller subproblems of the same type, solves them recursively, and then combines their solutions. It is often used for problems where recursion naturally fits, such as sorting and searching.

Key Idea:

- Divide: Break the problem into smaller subproblems.

- Conquer: Solve subproblems recursively.

- Combine: Merge solutions of subproblems for the final result.

Example: Merge Sort Algorithm

Merge Sort sorts an array by recursively splitting the array in half until arrays contain one element, then merges sorted halves back into a sorted array.

Visual Output (Example):

Unsorted: [38, 27, 43, 3, 9, 82, 10] Split: [38, 27, 43, 3] and [9, 82, 10] Further splits and sorts... Final Sorted: [3, 9, 10, 27, 38, 43, 82]

Interactive Insight

Imagine the array as a tree being split down to leaves (single elements), then merged back up in sorted order. This ensures a time complexity of O(n log n), efficient for large data sets.

Greedy Algorithms

Greedy algorithms build up a solution piece by piece, always choosing the next piece that offers the most immediate benefit. They do not reconsider choices once made, making them efficient but not always optimal for all problems.

Key Characteristics:

- Local optimum choice at each step

- Faster execution due to less backtracking

- Works best where greedy choice leads to global optimum

Example: Coin Change Problem (Minimal Coins)

Find the minimum number of coins needed to make a given amount using greedy selection of the largest denomination first.

Visual Output:

Amount = 63 cents Available coins = [25, 10, 5, 1] Steps: Choose 25 -> Remaining 38 Choose 25 -> Remaining 13 Choose 10 -> Remaining 3 Choose 1 -> Remaining 2 Choose 1 -> Remaining 1 Choose 1 -> Remaining 0 Coins used = 6

Dynamic Programming

Dynamic Programming (DP) solves complex problems by breaking them into overlapping subproblems and storing the results to avoid redundant computations, significantly improving efficiency.

Core Concepts:

- Divide problem into subproblems

- Store results (memoization or tabulation)

- Construct solution from stored results

Example: Fibonacci Numbers with Memoization

Calculates Fibonacci numbers efficiently by storing intermediate results instead of re-computing them.

Backtracking

Backtracking is a brute-force algorithm design technique that builds candidates to the solution incrementally and abandons a candidate as soon as it determines this candidate cannot possibly lead to a valid solution.

Use Cases:

- Constraint satisfaction problems

- Permutation and combination problems

- Finding all possible solutions

Example: N-Queens Problem

Place N queens on an N×N chessboard so that no two queens threaten each other, using backtracking to test possible placements.

Other Algorithm Design Techniques

Beyond these primary strategies, other techniques include:

- Branch and Bound: Used for optimization problems to prune the search space.

- Randomized Algorithms: Incorporate randomness to improve average performance or simplify solutions.

- Heuristics: Approximate methods for problems where exact solutions are costly.

Choosing the Right Technique

The decision on which design technique to use depends on problem characteristics such as:

- Problem size and complexity

- Optimality requirements

- Time and memory constraints

- Whether subproblems overlap or are independent

Summary

Algorithm design techniques form the foundation for creating efficient solutions. Divide and Conquer excels in problems that naturally divide into independent subproblems. Greedy Algorithms provide fast, locally optimal solutions, suitable when this leads to a global optimum. Dynamic Programming is great where subproblems overlap, and Backtracking helps explore all possibilities with early pruning. Mastery of these techniques empowers developers to solve a broad spectrum of computational problems efficiently and elegantly.